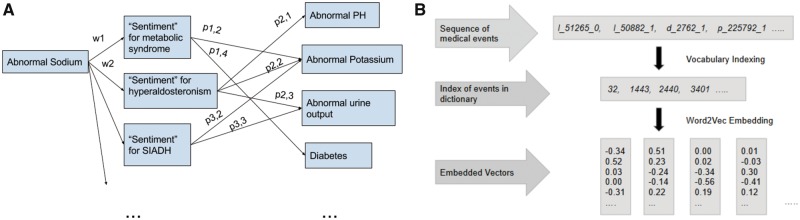

Figure 3.

word2vec embedding of medical events. A, The general architecture of the skip-gram model used to map sparse one-hot representations of medical codes into dense word vector embeddings. Given a series of discrete medical events, center and neighboring events are generated in a sliding-window fashion, and the neural network learns the relationships between nearby words to build a contextual representation. The weights that map input events onto the hidden layer are used as a filtering layer for future inputs in prediction tasks. B, An overview of the word2vec pipeline for transforming input features from the EHR into word vector representations. Sentence-level representation is shown here, but word2vec can also be applied exclusively to diagnostic codes in visit-level representations.
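
The sliding-window step and the one-hot-to-dense mapping described in panel A can be sketched as follows. This is a minimal illustration, not the pipeline used in the figure: the function names (`skipgram_pairs`, `one_hot`, `project`), the example codes in `visit`, the window size, and the embedding dimension are all assumptions made for the example, and the weight matrix `W` is random rather than trained.

```python
import random
from typing import Dict, List, Tuple


def skipgram_pairs(events: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Generate (center, neighbor) pairs by sliding a window over the events."""
    pairs = []
    for i, center in enumerate(events):
        lo = max(0, i - window)
        hi = min(len(events), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # skip the center event itself
                pairs.append((center, events[j]))
    return pairs


# Hypothetical sequence of medical codes for one patient (illustrative only).
visit = ["E11.9", "I10", "N18.3", "Z79.4"]
pairs = skipgram_pairs(visit, window=1)

# Vocabulary: each distinct code gets one position in the one-hot vector.
vocab: Dict[str, int] = {code: idx for idx, code in enumerate(sorted(set(visit)))}

# Input-to-hidden weights (assumed 3-dimensional, untrained, random values).
dim = 3
rng = random.Random(0)
W = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]


def one_hot(code: str) -> List[float]:
    """Sparse one-hot representation of a single code."""
    vec = [0.0] * len(vocab)
    vec[vocab[code]] = 1.0
    return vec


def project(x: List[float], weights: List[List[float]]) -> List[float]:
    """Multiply a one-hot row vector by the input-to-hidden weight matrix."""
    return [
        sum(x[r] * weights[r][c] for r in range(len(weights)))
        for c in range(len(weights[0]))
    ]


# The dense embedding of a code is the matching row of W.
dense = project(one_hot("I10"), W)
```

Because the input is one-hot, the projection simply selects one row of `W`, which is why the learned input weights can be reused as an embedding lookup for downstream prediction tasks.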