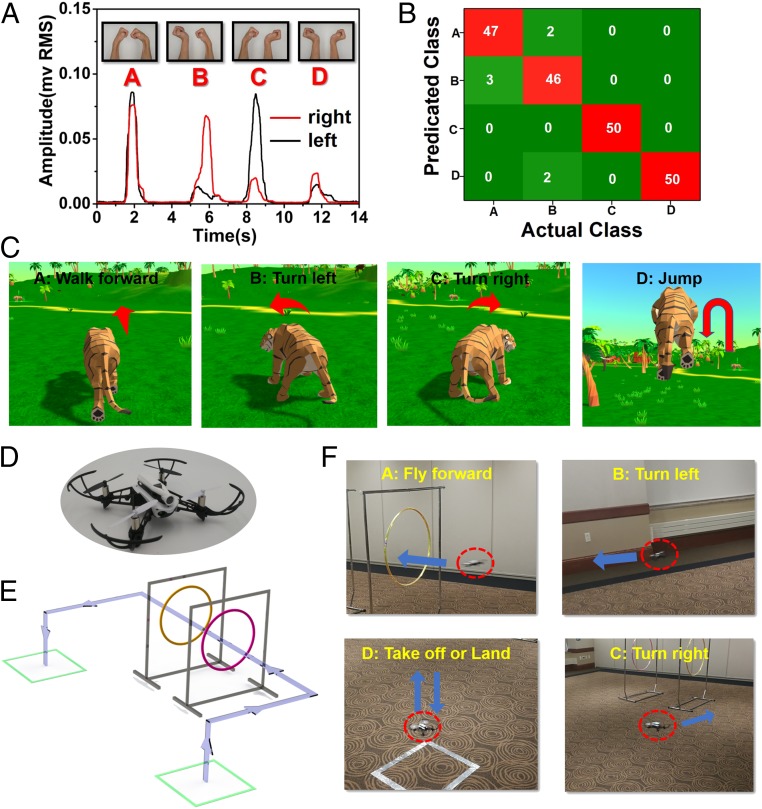

Fig. 5.

Control of a virtual character and a quadcopter via real-time EMG recording. (A) EMG signals recorded from the forearms during 4 different bimanual gestures (Inset), which can be classified into 4 distinct control signals, termed A, B, C, and D. (B) Confusion matrix showing the classification accuracy. Each bimanual gesture was repeated 50 times. The columns and rows represent actual gestures (actual class) and predicted signals (predicted class), respectively. The numbers in the boxes show the distribution of predicted signals for each actual gesture. (C) Control of a virtual tiger in a 3D game engine using bimanual gestures, where A, B, C, and D correspond to “walk forward,” “turn left,” “turn right,” and “jump,” respectively. (D) A photograph of the quadcopter used in the research. (E) Schematic illustrations of the quadcopter trajectory controlled by bimanual gestures, where arrows indicate the flying direction. (F) Images of the quadcopter controlled by bimanual gestures, where A, B, C, and D correspond to “fly forward,” “turn left,” “turn right,” and “take off or land,” respectively.