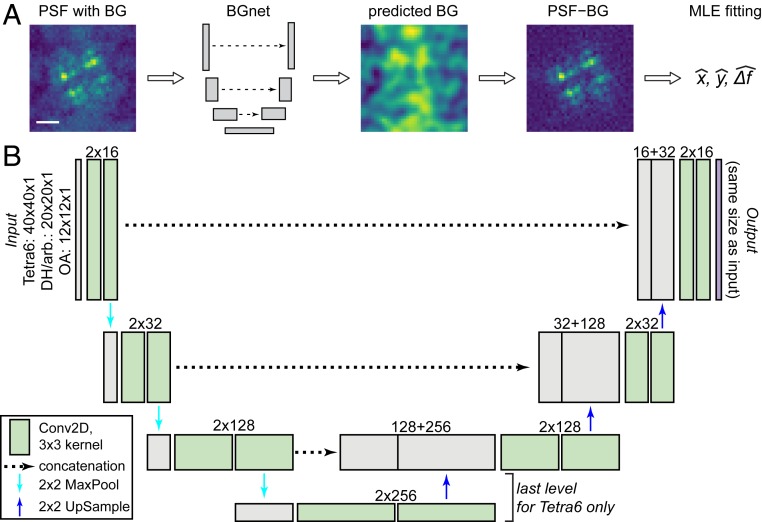

Fig. 1.

General approach and BGnet architecture. (A) BGnet receives an image of a PSF (here the Tetra6 PSF) with BG and outputs the predicted BG contribution at each pixel, so the predicted BG can be subtracted directly from the input PSF image. The BG-corrected PSF can subsequently be analyzed, for example via MLE fitting for position estimation in x and y with defocus Δf. (B) The PSF images are supplied to BGnet as single-channel 12 × 12 (OA PSF), 20 × 20 (DH, arbitrary PSF), or 40 × 40 pixel images. After two 2D convolutions with 16 filters, batch normalization, and rectified linear unit activation, 2 × 2 MaxPooling is performed. Two 2D convolutions with 32 filters follow, and their output is again subjected to 2 × 2 MaxPooling, followed by two 2D convolutions with 128 filters. For the Tetra6 PSF only, an additional 2 × 2 MaxPooling, followed by two more 2D convolutional layers with 256 filters, is performed. The output of the 2D convolutional layers with the smallest spatial size is up-sampled (2 × 2) and concatenated with the output of the 2D convolutional layer that was supplied to the final 2 × 2 MaxPooling. Up-sampling, concatenation, and 2D convolution are repeated until the spatial size of the image is again 12 × 12, 20 × 20, or 40 × 40, respectively. The last layer is a 12 × 12 × 1, 20 × 20 × 1, or 40 × 40 × 1 2D convolutional layer, returning the predicted BG. (Scale bar in A, 1 μm.)
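The layer sequence in (B) can be checked with simple shape bookkeeping. The following is a minimal sketch, not the authors' implementation: the function name `trace_bgnet_shapes` is hypothetical, "same" padding is assumed so that convolutions preserve spatial size, and the decoder filter counts are assumed to mirror the encoder, since the caption does not state them.

```python
from typing import List, Tuple

Stage = Tuple[str, int, int]  # (stage name, spatial size, channels)


def trace_bgnet_shapes(size: int, filters: Tuple[int, ...]) -> List[Stage]:
    """Trace spatial size and channel count through a U-Net-style stack.

    filters[i] is the filter count of the two convolutions at depth i.
    Assumptions (not stated in the caption): 'same' padding, and decoder
    filter counts that mirror the encoder.
    """
    stages: List[Stage] = []
    skips: List[Tuple[int, int]] = []  # (size, channels) saved before each pooling
    channels = 1  # single-channel input image

    # Contracting path: two convolutions per depth, 2 x 2 max pooling in between.
    for depth, n_filters in enumerate(filters):
        channels = n_filters
        stages.append((f"conv_down{depth}", size, channels))
        if depth < len(filters) - 1:
            if size % 2:
                raise ValueError(f"size {size} cannot be halved by 2 x 2 pooling")
            skips.append((size, channels))
            size //= 2
            stages.append((f"pool{depth}", size, channels))

    # Expanding path: up-sample (2 x 2), concatenate with the skip, convolve.
    while skips:
        skip_size, skip_channels = skips.pop()
        size *= 2
        if size != skip_size:
            raise ValueError("up-sampled size does not match the skip connection")
        depth = len(skips)
        stages.append((f"concat{depth}", size, channels + skip_channels))
        channels = skip_channels  # assumed mirror of the encoder
        stages.append((f"conv_up{depth}", size, channels))

    # Final convolution returns one value per pixel: the predicted BG.
    stages.append(("output", size, 1))
    return stages


if __name__ == "__main__":
    configs = {
        "OA, 12 x 12": (12, (16, 32, 128)),
        "DH/arbitrary, 20 x 20": (20, (16, 32, 128)),
        "Tetra6, 40 x 40": (40, (16, 32, 128, 256)),
    }
    for label, (size, filters) in configs.items():
        print(label)
        for name, s, c in trace_bgnet_shapes(size, filters):
            print(f"  {name:<12} {s:>2} x {s:<2} x {c}")
```

Under these assumptions, the smallest spatial size is 3 × 3 for the 12 × 12 input and 5 × 5 for both the 20 × 20 and 40 × 40 inputs, which is consistent with the extra pooling stage being used for the Tetra6 PSF only.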