Abstract

Background Preventable adverse events continue to be a threat to hospitalized patients. Clinical decision support in the form of dashboards may improve compliance with evidence-based safety practices. However, limited research describes providers' experiences with dashboards integrated into vendor electronic health record (EHR) systems.

Objective This study aimed to describe providers' use and perceived usability of the Patient Safety Dashboard and to discuss barriers and facilitators to implementation.

Methods The Patient Safety Dashboard was implemented in a cluster-randomized stepped wedge trial on 12 units in neurology, oncology, and general medicine services over an 18-month period. Use of the Dashboard was tracked during the implementation period and analyzed in-depth for two 1-week periods to gather a detailed representation of use. Providers' perceptions of tool usability were measured using the Health Information Technology Usability Evaluation Scale (rated 1–5). Research assistants conducted field observations throughout the duration of the study to describe use and provide insight into tool adoption.

Results The Dashboard was used 70% of days the tool was available, with use varying by role, service, and time of day. On general medicine units, nurses logged in throughout the day, with many logins occurring during morning rounds, when not rounding with the care team. Prescribers logged in typically before and after morning rounds. On neurology units, physician assistants accounted for most logins, accessing the Dashboard during daily brief interdisciplinary rounding sessions. Use on oncology units was rare. Satisfaction with the tool was highest for perceived ease of use, with attendings giving the highest rating (4.23). The overall lowest rating was for quality of work life, with nurses rating the tool lowest (2.88).

Conclusion This mixed methods analysis provides insight into the use and usability of a dashboard tool integrated within a vendor EHR and can guide future improvements and more successful implementation of these types of tools.

Keywords: clinical decision support, health information technology, electronic health record, patient safety, information/data visualization, usability, dashboard

Background and Significance

Inpatient adverse events (AEs) are patient injuries due to medical management that prolong hospitalization, produce a disability at time of discharge, or both. 1 Since the 2000 Institute of Medicine Report “To Err is Human” highlighted the prevalence and importance of medical errors, 2 health care systems have been increasingly focused on improving patient safety and reducing AEs. Patient safety experts have increasingly leveraged health information technology (HIT) as one way to address this problem. The potential of HIT to provide generalizable solutions has been aided by the recent proliferation of electronic health records (EHRs) in the inpatient setting, with 96% of all U.S. hospitals having implemented a certified EHR technology as of 2015, with 92% using one of the top six EHR vendors. 3 4

One benefit of EHRs is that they can collect data on routine clinical care processes for innovation and improvement purposes. 5 However, current EHR systems have also created cognitive overload for clinicians as the amount of information they hold has drastically increased. 6 7

To further complicate matters, data within a single EHR may be “siloed” by data type (e.g., test results, medication information, and vital signs) and provider type (e.g., nursing vs. physician's view of the same patient). These structural limitations inhibit interdisciplinary communication, reduce user performance, and potentially negatively impact patient safety.

Data automatically abstracted from an EHR can form the basis of clinical decision support systems (CDSS). 8 Such tools built “around” an EHR (i.e., using live EHR data, accessible from the EHR environment, and yet still proprietary software) can be iteratively refined more quickly than the vendor EHR itself, may provide added value over an EHR's current offerings, and can be spread to other institutions that use the same EHR platform. CDSS tools in the form of a dashboard, which query multiple datasets onto one visual display, 9 may improve patient safety by addressing concerns related to siloed data. Studies implementing dashboards have reported improved compliance with evidence-based care and reduced patient safety events. 10 11 Users of visual data may also be able to complete tasks faster and with greater accuracy than the traditional EHR system, 12 13 and surveys have suggested that nurse providers see benefit in quickly accessing vital patient information. 14

As these tools continue to develop and increase in prevalence, it is critical to consider how providers utilize these tools to maximize impact. Studies investigating clinicians' perceived obstacles to adopting CDSS tools have identified common themes, including speed, capability to deliver information in real time, ability to fit into users' workflow, and usability. 15 The extent to which these issues apply to dashboards integrated within a vendor EHR is less well studied.

In this study, we evaluated the implementation of a CDSS tool, a Patient Safety Dashboard, integrated within a vendor EHR. 16 The Dashboard accesses and consolidates real-time EHR data to assist clinicians in quickly assessing high-priority safety domains for patients admitted to the hospital. In this mixed-method evaluation, we report on actual use by providers, perceptions of its usability and effectiveness, and field observations on clinicians' use of the tool to provide lessons learned for the design and implementation of future tools of this type.

Methods

Setting

This study was performed at an academic, acute-care hospital. The tool was implemented in a cluster-randomized stepped-wedge trial on 12 units in neurology, oncology, and general medicine services beginning December 1, 2016 and ending May 31, 2018. The study was approved by the Partners HealthCare Institutional Review Board.

Intervention

The Patient Safety Learning Laboratory (PSLL) focused on engaging patients, families, and professional care team members in identifying, assessing, and reducing threats to patient safety. With support from the Agency for Healthcare Research and Quality, the PSLL team of researchers, clinicians, health system engineers, technical staff, and stakeholders developed a suite of patient- and provider-facing tools to promote patient safety and patient-centered care. The Patient Safety Dashboard was one of the provider-facing tools developed.

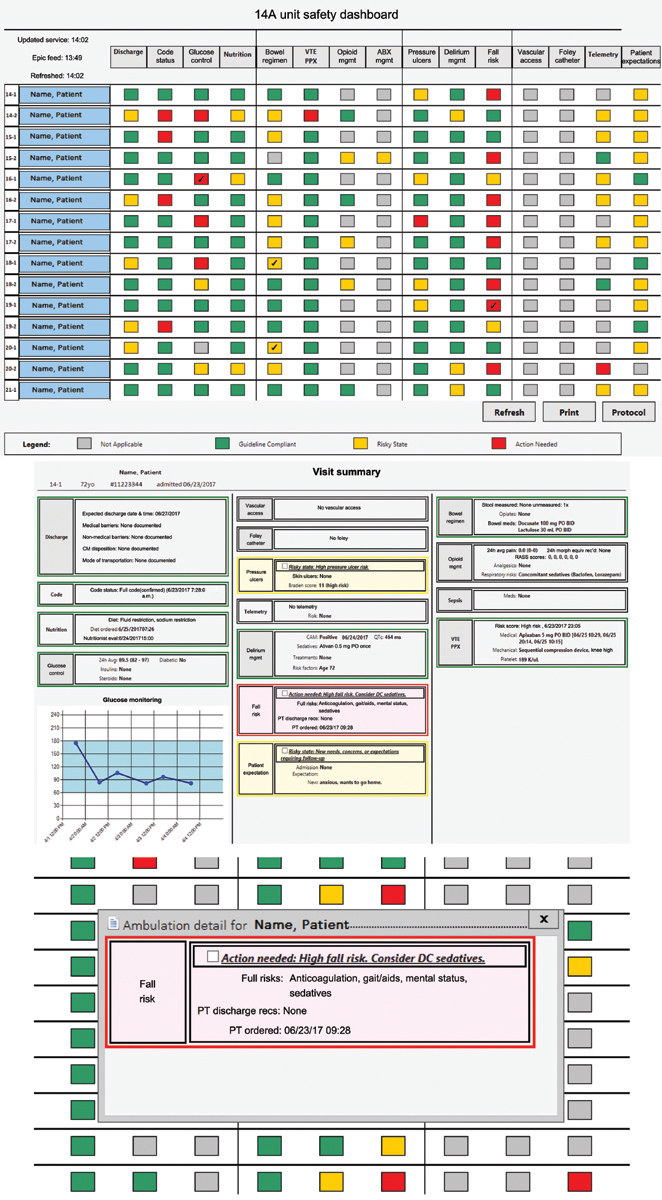

The development, features, and functions of the Patient Safety Dashboard have been previously described. 16 Through iterative, user-centered design, the Dashboard sought to mitigate preventable harms by visualizing real-time EHR data to assist clinicians in assessing 13 safety domains: code status, glucose control, nutrition, bowel regimen, venous thromboembolism (VTE) prophylaxis, opioid management, antibiotic management, pressure ulcer prevention and management, delirium management, fall risk, vascular access, urinary catheter management, and telemetry. Two additional domains, discharge planning and patient expectations, were added to the Dashboard later in the study. The Dashboard used a color grading system to indicate when inpatients were at risk for potential harm (yellow) or were at high risk for harm that warranted a specific action to be taken (red). The text of the color-coded alert boxes (“flags”) consolidated information of several different data types around each domain to support providers in making clinical decisions. The Dashboard also flagged when the patient was not at risk or in need of an action to be taken (green) or when the safety domain was not applicable, for example, urinary catheter management for a patient without a catheter (gray). The decision support was available by clicking directly on flags from the unit-level view or by accessing the patient-level view, which displayed patient-specific decision support for all flags. Users could also acknowledge an alert by clicking on a check-box next to the text ( Fig. 1 ).

Fig. 1.

The figure displays (1) a unit-level view of the safety dashboard, updated to include the Discharge Planning and Patient Expectations columns; (2) a patient-level view accessed by clicking the name of a patient in the unit-level view; (3) a flag view, generated when clicking on a flag from the unit-level view. Clicking the white box to the left of the alert message allows a user to acknowledge the alert and would mark the corresponding box on the unit-view with a check mark. All patient information has been de-identified. (This figure has been adapted from: Mlaver E, Schnipper JL, Boxer R, et al. User-centered collaborative design and development of an inpatient safety dashboard. J Comm J Qual Pat Saf 2017;43(12):676–685, 16 with permission from Elsevier).

Implementation

As units moved from usual care to the intervention as part of the stepped wedge study design, researchers systematically trained most of the unit-based nurses (>80%) and engaged with physicians during weekly meetings. Study staff continued to provide “at-the-elbow” support and visited the units weekly to answer questions and promote user buy-in. Organizational leadership encouraged but did not mandate use of the tool, which was designed to be implemented into care during morning rounds. Feedback from providers was collected early in the study to determine barriers to tool adoption and to develop approaches to increase use. Suggestions from users informed iterative refinements to the Dashboard, including changes to the user interface and to the logic that prompted alerts. Eight months into implementation of the tools, research assistants (RAs) began generating weekly basic user reports incorporating visual displays to allow for quick and effective communication 17 of study goals, 18 expectations, and accomplishments. These reports showed logins by day and total number of logins, recognized top users, and summarized highlights from other technologies in the intervention. Reports were emailed to providers on study units (starting with general medicine, then neurology). Competitive weekly user reports later replaced basic reports on general medicine to encourage dashboard use, tapping into users' internal motivation for mastery. These reports included features from the basic reports but also awarded virtual trophies and ribbons to top individual users and recognized units for highest number of total logins, as well as number of days with at least one login per week.

Measurement of Dashboard Use

Usage by unit and provider type was automatically tracked throughout the implementation period to determine the number of times the tool was accessed by providers, including prescribers (physicians or physician assistants [PAs]), nurses, and unit-level clinical leadership. Two representative 1-week periods of the study were analyzed for insight on typical use by unit and provider role. These 1-week “deep dives” measured the ways providers interacted with the tool, including whether they opened the unit view, selected a patient detail view, or opened or acknowledged a red or yellow flag. We also analyzed what time the Dashboard was accessed and categorized this into before (12:00–8:30 a.m.), during (8:30–11:30 a.m.), and after (11:30 a.m.–12:00 a.m.) morning rounds for general medicine and oncology. A brief interdisciplinary rounding session on neurosurgery occurred from 10:00 to 10:30 a.m. and on neurology from 10:30 to 11:00 a.m.
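As an illustration only, the time-of-day categorization described above could be sketched as follows. This is not the study's analysis code: the function name `rounds_period`, the sample login times, and the boundary handling (start of rounds inclusive, end exclusive) are assumptions made for the example.

```python
from datetime import time

# Rounding window for general medicine and oncology, as stated in the text.
ROUNDS_START = time(8, 30)
ROUNDS_END = time(11, 30)


def rounds_period(login_time, start=ROUNDS_START, end=ROUNDS_END):
    """Classify a login as 'before', 'during', or 'after' morning rounds.

    Assumption: the start boundary is inclusive and the end boundary is
    exclusive, so 8:30 counts as 'during' and 11:30 counts as 'after'.
    """
    if login_time < start:
        return "before"
    if login_time < end:
        return "during"
    return "after"


# Hypothetical login times, for illustration only (not study data).
logins = [time(6, 15), time(8, 30), time(10, 45), time(11, 30), time(19, 5)]

counts = {"before": 0, "during": 0, "after": 0}
for login in logins:
    counts[rounds_period(login)] += 1

print(counts)
```

The same function can be reused for the shorter neurology session by passing a different window, for example `rounds_period(t, time(10, 30), time(11, 0))`.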

Measurement of Perceived Usability

Provider perceptions of usability of the tool were measured using the Health Information Technology Usability Evaluation Scale (Health-ITUES). 19 The customizable questionnaire is composed of four subcomponents: quality of work life (QWL), perceived usefulness (PU), perceived ease of use (PEU), and user control (UC), each rated on a 5-point Likert scale ranging from 1 (“strongly disagree”) to 5 (“strongly agree”). Higher scores indicate greater perceived usability. The Health-ITUES survey was administered to providers on neurology, oncology, and medicine units through the REDCap (Research Electronic Data Capture, Vanderbilt University, Nashville, Tennessee, United States) platform 4 to 14 weeks after the intervention period ended.

Research Assistant Observations

RAs observed provider use of the Dashboard throughout the duration of the study, during training sessions, formal observation periods, user meetings, and while on the units for data collection. Following completion of the intervention, a question guide was sent to former and current RAs involved in the study, and responses were provided verbally or on paper. Field observations were collected on provider use of the Dashboard, provider use of additional electronic tools in conjunction with the Dashboard, and overall RA impressions of implementation.

Analysis

Data on use of the Dashboard are presented descriptively. Health-ITUES responses were analyzed for mean scores and standard deviation for each survey item. Qualitative data gathered from PSLL RAs were descriptively coded into major themes and subthemes around tool usage by three research team members (P.G., T.F., and K.B.). Two team members independently coded the RAs' feedback and identified common themes and subthemes, with final consensus made by the team's human factors expert (P.G.). Finally, representative quotes for each subtheme were identified.
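A minimal sketch of the descriptive summary of survey responses is shown below. The response values are hypothetical and are not study data, the helper name `summarize` is illustrative, and the use of the sample (n − 1) standard deviation is an assumption, because the text does not state which form was used.

```python
from statistics import mean, stdev

# Hypothetical 1-5 Likert responses keyed by subscale (not study data).
responses = {
    "QWL": [3, 4, 2, 5],
    "PEU": [4, 4, 5, 3],
}


def summarize(scores):
    """Return (mean, sample standard deviation), each rounded to 2 decimals."""
    return round(mean(scores), 2), round(stdev(scores), 2)


summary = {name: summarize(scores) for name, scores in responses.items()}

for name, (avg, sd) in summary.items():
    print(f"{name}: {avg} ± {sd}")
```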

Results

Dashboard Use

The Patient Safety Dashboard was accessed by at least one provider on 70% of intervention days (382 days out of 547), for a total of 8,302 logins by 413 individual providers. Providers included 184 nursing staff (nurses, patient care assistants, and nursing students), 179 prescribers (attending physicians, PAs, nurse practitioners, fellows, residents, and medical students), and 19 unit leadership staff (administrators and nurse/medical directors). Additionally, eight users were listed under a different role (e.g., pharmacist), and the role of 23 users could not be identified. All users who open the Dashboard default to the unit-level view. The total number of individual flags and patient detail views opened were measured and analyzed by service, with patient detail views (as opposed to flag views) accounting for 24% of views on medicine, 87% of views on neurology, and 68% of views on oncology.

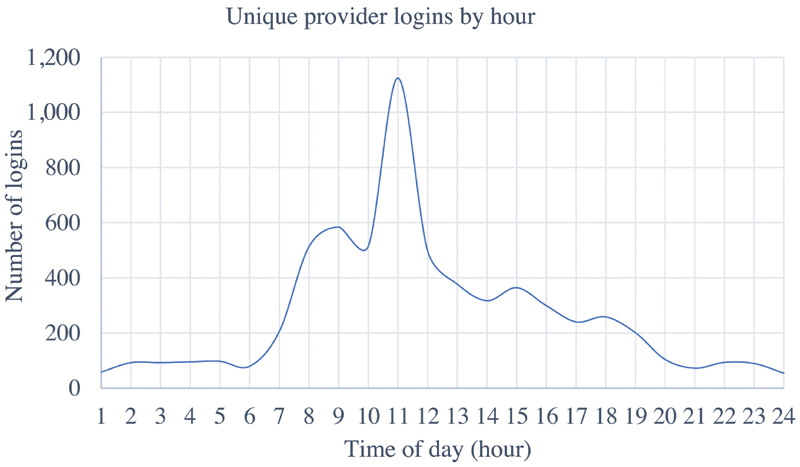

The number of providers that logged in each hour was captured throughout the 18-month implementation period. There was a high concentration of logins between 5 and 8 a.m., a large increase in logins during morning rounds (8:30–11:30 a.m.), and a decrease in logins each subsequent hour ( Fig. 2 ).

Fig. 2.

Total number of provider logins per hour throughout the duration of the study.

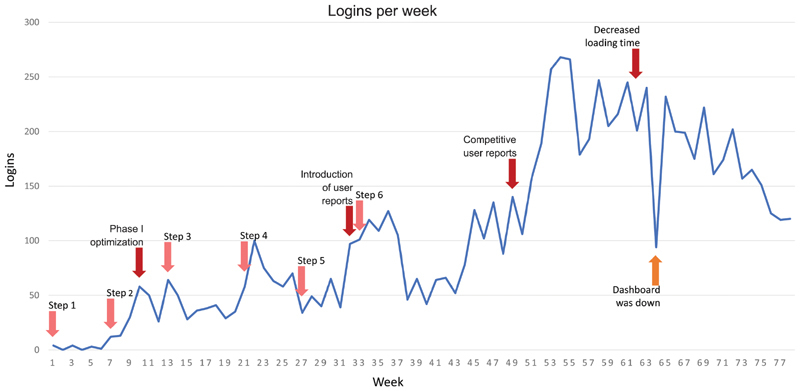

Use of the Dashboard was also measured by the number of logins per week ( Fig. 3 ), with a fairly steady increase throughout the implementation period. Several notable points of uptake in use were associated with implementation on additional study units (“steps”), enhancements made to the tool, or the distribution of basic and competitive user reports. There were several days where the Dashboard was down, and users were unable to log in, indicated by a low number of logins between weeks of high use.

Fig. 3.

Number of logins per week throughout the duration of the study. “Basic user reports” beginning in week 32 reported logins by day, recognized top users, and summarized highlights from other components of the intervention. “Competitive weekly user reports” awarded virtual ribbons and trophies to units for the highest number of total logins, as well as number of days per week with at least one login.

Further analyses (“deep dives”) were conducted on two separate weeks of the study ( Table 1 ). During the week of January 14 to 20, 2018, the Dashboard was opened 169 times: 127 logins by nurses, 32 by prescribers, and 10 by unit leadership staff members. Most logins were by nurses on medicine units, who opened red and yellow flags more often than patient detail views. It is notable that medicine prescribers opened the Dashboard during morning rounds (i.e., while rounding with nurses) only three times that week, whereas medicine nurses opened it 31 times during those same hours, most likely when not rounding with the team. In contrast, on neurology, the Dashboard was rarely opened by nurses but was opened by a single prescriber (PA) on 3 separate days during the 30-minute interdisciplinary rounding sessions. The Dashboard was never opened on oncology units during this week. For medicine units, the Dashboard was frequently opened by nurses across all six units; however, prescribers only opened the tool on four of these units, with unit leadership viewing it on the other two medicine units (data not shown).

Table 1. “Deep dive” of dashboard use.

| Use metric (counts) | Neurology (3 units): Nursing staff | Neurology (3 units): Prescribers | Neurology (3 units): Unit leadership | Medicine (6 units): Nursing staff | Medicine (6 units): Prescribers | Medicine (6 units): Unit leadership |
|---|---|---|---|---|---|---|
| Week 1 | | | | | | |
| Logins | 1 | 5 | 0 | 126 | 27 | 10 |
| Red/yellow flags opened | 15 (11/4) | 0 | 0 | 371 (168/203) | 48 (26/22) | 30 (20/10) |
| “Patient detail” view opened | 0 | 3 | 0 | 62 | 24 | 5 |
| Red/yellow flags acknowledged | 15 (11/4) | 0 | 0 | 425 (170/255) | 79 (34/45) | 22 (15/7) |
| Before rounds a | 1 | 0 | 0 | 42 | 12 | 1 |
| During rounds | 0 | 3 | 0 | 31 | 3 | 5 |
| After rounds | 0 | 2 | 0 | 53 | 12 | 4 |
| Week 2 b | | | | | | |
| Logins | 0 | 7 | 0 | 82 | 23 | 6 |
| Red/yellow flags opened | 0 | 1 (0/1) | 0 | 112 (58/54) | 73 (41/32) | 15 (4/11) |
| “Patient detail” view opened | 0 | 8 | 0 | 68 | 3 | 4 |
| Red/yellow flags acknowledged | 0 | 0 | 0 | 163 (66/97) | 23 (20/3) | 17 (4/13) |
| Before rounds a | 0 | 1 | 0 | 31 | 4 | 2 |
| During rounds | 0 | 5 | 0 | 17 | 3 | 2 |
| After rounds | 0 | 1 | 0 | 34 | 16 | 2 |

a Rounds defined as 8:30–11:30 a.m. on medicine and between 10:30–11:00 a.m. on neurology.

b Tool was used once on oncology units during this period.

During the week of April 1 to 7, 2018, providers logged in 120 times: 82 logins by nurses, 32 by prescribers, and 6 by unit leadership staff. Most logins were by nurses from general medicine, but there were fewer logins than in the January week. There was a high concentration of nurse logins in the morning before rounds began at 8:30 a.m. General medicine nurses continued to log in throughout the day. Prescribers on medicine logged in before and during rounds; however, most of their logins took place after rounds ended at 11:30 a.m. Medicine units had similar patterns of use as in the previous deep dive, with unit leadership opening the tool on the same 2 units and nurses opening it on all 6 units; however, this time prescribers accessed the tool on all 6 units (data not shown). Again, most neurology logins occurred during interdisciplinary rounding sessions and were by prescribers, in this case two PAs. The tool was again used rarely on oncology services.

Assessment of Usability

Of the 180 providers who were emailed the Health-ITUES, 53 responded (response rate 29%). Responses were categorized by provider role for further analysis: nurses ( n = 18), PAs ( n = 13), residents ( n = 16), and attendings ( n = 6), as well as by service (oncology, neurology, and medicine). The overall ratings for the four measures were quality of work life (3.19 ± 1.09), perceived usefulness (3.27 ± 0.85), perceived ease of use (3.61 ± 0.95), and user control (3.40 ± 0.72), with variability by provider role and service ( Table 2 ). Attendings gave the highest rating on every measure (tied with residents for user control), and nurses gave the lowest rating on every measure except perceived ease of use, for which residents gave the lowest rating.

Table 2. Providers' perceptions of dashboard usability.

| Measure, mean (SD) | All | Nurses | Residents | PAs | Attendings |
|---|---|---|---|---|---|
| Quality of work life | 3.19 (1.09) | 2.88 (1.00) | 3.31 (1.19) | 3.26 (1.07) | 3.72 (0.96) |
| Perceived usefulness | 3.27 (0.85) | 3.03 (0.74) | 3.30 (0.96) | 3.52 (0.79) | 3.62 (0.58) |
| Perceived ease of use | 3.61 (0.95) | 3.64 (0.82) | 3.26 (0.95) | 3.91 (0.70) | 4.23 (0.82) |
| User control | 3.40 (0.72) | 3.41 (0.62) | 3.50 (0.63) | 3.46 (0.66) | 3.50 (0.84) |

Abbreviations: PAs, physician assistants; SD, standard deviation.

Research Assistant Observations

Seven RAs who worked on the PSLL project responded to the question guide (response rate of 100%). Themes that emerged from qualitative responses centered around variation and inconsistency in how the Dashboard was accessed and used, social and cultural barriers influencing user retention and adoption, usability and technical issues, and overall impressions and suggestions ( Table 3 ). Most RAs reported on variations in use across different roles and services. Overall impressions were that nurses on general medicine typically pulled up the Dashboard periodically throughout their shift to check on their patients. PAs on both general medicine and neurology were strong proponents of the tool. Providers who frequently used the Dashboard had identified specific domains in which they found value and fit it into their workflow where they found it appropriate.

Table 3. Themes regarding dashboard use from research assistants.

| Theme | Subtheme | Representative quotes |
|---|---|---|
| Variation in the Dashboard access and use | Method of accessing the Dashboard evolved with training | “All users of the Dashboard have been initially taught to pin it to their epic start menu as a ‘favorite’ of sorts in order to simplify the process of accessing it” |
| | Dashboard use frequency and timing | “Neuro providers only used it during a 30-minute interdisciplinary round, but GMS providers conducted bedside rounds and used it then (which lasted all morning). Nurses checked the dashboard periodically throughout the entire day” “On neuro: the chief PA was really behind the dashboard, and wanted it up at all [interdisciplinary] rounds so that as the residents were actively entering orders in Epic they could also address any documentation issues shown on the dashboard” “During piloting, we saw residents using the dash prerounds/we saw the nurse director looking at the dashboard to check on quality metrics” |
| | Provider’s preference for unit level display versus patient view | “Most users clicked on the patient's name after opening up the unit level view to see all flags that were relevant for that patient” “Nurses tend to log into the unit view, especially as charge nurse, because they are regionalized of course. I think the patient view was used more by MDs and PAs because they were less regionalized/maybe only ‘owned’ a few patients on each pod” |
| Social and cultural barriers influencing user retention and adoption | Division of responsibility | “Most successful usage initially on neuro where chief PA would have a resident pull it up during interdisciplinary rounds” “Felt very much like every role did not want to take on the responsibility for checking it so would ‘share’ it, leading to things getting missed” “I don't know how much of it was “dividing responsibility” versus “that's not my problem.” For instance, MDs had no idea what to do with the ulcer and fall columns. So they just said “that side is for nursing.” Nursing would get frustrated when they would bring it up and then get shot down (e.g., ‘code status is red’ ‘yup’) with no further action” |
| | Threats to workflow | “Might not have seen full value and didn't have incentive or expectation to use tools so didn't. Not wanting to complicate or change workflow” |
| Usability and technical issues | Clinical relevance of dashboard alerts | “Many issues over the course of the study, usually because our logic was faulty (not pulling all required fields in epic, etc.) … Other times there were disagreements about what patient status necessitated a red/yellow/green/gray and these issues warranted discussions around our logic” “Most people found a few easy ‘wins’ from the dashboard (e.g. ‘red’ showed who didn't have code status, or morphine equivalent dose), and that was how they usually found value in it” “… Providers did get frustrated by items that would just always be red or yellow that they felt they couldn't change” |
| | Technical bugs | “For the dashboard, main concerns that I heard were persisting bugs that probably discouraged widespread use. Other concerns I heard were that it could have been designed to look more streamlined/not create too much cognitive overload” |
| | Performance and speed | “Dashboard loading times were an issue that caused usability problems” |
| Overall impressions and suggestions | Dashboard success | “Large-scale implementation of novel health IT, which led to some successes in terms of streamlining inpatient care. This success was only possible because both sides (research and clinical users) put the time and effort to make sure that it was fully disseminated across the study units” |
| | Suggestions for improvement | “Advanced planning about how to engage stakeholders in order to have consistent and effective engagement—ensuring follow through on engagement – regular meetings or rounding to discuss issues that come up from clinicians” “Bring in clinical users early. Clinical users who can pilot constantly. Clinical users who work on the floors all the time. Better communication with leadership early so they have agency over the intervention and therefore have a little bit more excitement about it because they were part of the conception” |

Abbreviations: GMS, general medicine service; IT, information technology; PA, physician assistant.

RAs also provided opinions on what impeded use by providers, citing barriers such as uncertainty about who should take responsibility for flagged items. Provider buy-in was also difficult to achieve at the beginning of the study because of issues with accessing the tool within the EHR, bugs, and slow loading times. Although many of these issues were addressed throughout the study, RAs observed that many providers were discouraged by their initial impressions. There was also agreement among RAs that future efforts should focus on stronger implementation planning and increased engagement of stakeholders.

Discussion

We evaluated the Patient Safety Dashboard using a mixed-method analysis and found considerable variation in how it was used during implementation. For example, on medicine the tool was used globally more often than on other services, specifically by nurses more than prescribers. On neurology, the opposite was true, while use on oncology services was virtually nonexistent. Ratings of usability were fair, with highest ratings for ease of use and lowest for impact on work life and on perceived usefulness. Thematic evaluation of RA observations suggested pockets of successful use of the tool but also identified barriers related to the technology, impact on workflow, culture, and teamwork.

The Dashboard was originally designed for interdisciplinary use during rounds, when prescribers, nurses, patients, and other care team members, including caregivers, could efficiently review flags, discuss issues as needed, and act to mitigate safety risks. Both quantitative and qualitative results reveal partial success with this goal. For example, one clinical champion, the neurology chief PA, often viewed the Dashboard once a day, for 30 minutes, during interdisciplinary neurology rounding sessions, thus having an impact on the entire service. On the other hand, on medicine, where morning bedside rounds with nurses can last 3 hours, multidisciplinary use was uncommon. Use on medicine rounds requires buy-in of both the responding clinician (resident or PA) and attending physician, and since both change frequently, different levels of provider buy-in can have large effects on workflow. Instead, nurses often used the Dashboard by themselves, that is, when the physician team was rounding with a different nurse. Several factors may have contributed to the high use of the Dashboard among nurses despite their low ratings of the tool on quality of work life, including their remaining on the study units throughout the duration of the study and encouragement by nursing director clinical champions.

The Dashboard was also intended to improve interdisciplinary communication regarding patient safety issues by displaying the same information to all users, regardless of role, breaking down silos in how data are displayed within an EHR. However, persistent cultural issues about ownership of certain patient safety issues (e.g., physicians considering prevention of pressure ulcers as a nursing concern) deterred use. Nurses felt frustrated when raising matters viewed as being for “physicians,” as physicians did not always act in a manner that would address the flag. If either group of providers felt that the other was not committed to using the Dashboard, this created a disincentive to use it themselves. Furthermore, given the technical design of the Dashboard, some flag alerts would not change (e.g., the inability of a yellow flag regarding pressure ulcer risk to turn green because of the way the logic was designed), adding to dissatisfaction with the tool.

Other barriers to use concerned previously well-described interactions between technical issues, the work environment, the organization, the people involved, and the tasks required. 20 For example, based on RA observation, nonuse on oncology could be attributed to several interacting factors, including the lack of regionalized teams on oncology, the inability of the Dashboard to create a team-level view (i.e., lists of patients by prescriber as opposed to patients by unit, which becomes even more important when teams are not regionalized), and the lack of a clinical champion. Nurses on oncology also provided feedback regarding their inability to change flag status due to the Dashboard being orders-based (i.e., requiring prescriber input), leading to decreased use.

We also saw wide variation in the uptake of the Dashboard by different users within each role, which we suspect could be explained by their willingness to adopt new technology and make changes to their workflow. When users saw the value of the tool, for example, when it led to actions that they might not otherwise have taken, they generally continued to use it. Indeed, the tool's designers intended for providers to see value in this tool and conclude that it saved time by consolidating information, or that the benefits of its use outweighed the cost of time taken to use it. However, it was instructive to see that perceived usefulness was one of the lower ranked domains in the Health-ITUES survey.

It should be noted that these results were found despite stakeholder engagement in the development of the tool and continuous refinement to its user interface and logic. There were also implementation campaigns involving unit leadership, clinical champions, and frontline clinicians. The results reveal the challenges in accomplishing these basic principles of implementation science. For example, despite several approaches to teaching users how best to access the tool from within the EHR environment, our results revealed that this messaging did not reach all users. The same could be said regarding best practices of pulling up the patient-level view, with details of all the flags of a given patient, as opposed to just pulling up details of one flag at a time from the unit-level view. Even though tool use was inconsistent, there were several successes in promoting end-user adoption. For example, large reductions in loading time were achieved, mostly by periodically archiving the Dashboard “meta-data.” Another success was the creation of weekly competitive user reports, which increased use on medicine teams. We also worked with stakeholders on the best ways to use the Dashboard outside of rounds for those less willing to change their rounding workflow.

Most studies of electronic dashboards integrated within a vendor EHR focus on their benefits, such as completing tasks faster and positive perceptions among users. It is important to keep in mind that these tools will only benefit providers and improve patient care if they can be designed and implemented optimally. Few studies have addressed instances of low CDSS user adoption and the approaches implemented to sustain adoption, such as monitoring use and attending to maintenance, 21 or have reported feedback on provider experience. 22 This mixed methods study adds to the existing literature by highlighting the challenges in building this next generation of CDSS tools and suggesting ways to overcome these challenges, specifically in the context of a provider-facing patient safety dashboard.

Limitations

This analysis has several limitations. First, the initial application tracker was not able to identify users who logged directly into the EHR without first logging into the hospital's workstation. A second database was created several months into the study to track Dashboard application logins. Users were matched between the two databases by linking masked IDs whose login times fell within 10 seconds of each other; however, some users could not be identified and were not included in our analyses.
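The time-window matching described above can be illustrated with a minimal sketch. It is not the study's actual procedure: the record layout, the names `match_logins`, `tracker_logins`, and `app_logins`, and the rule of taking the nearest tracker login are all assumptions made for illustration. Each application login is linked to the closest tracker login in time, and the link is kept only if the two timestamps differ by 10 seconds or less.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def match_logins(tracker_logins, app_logins, window_seconds=10):
    """Link application logins to tracker logins by login time.

    Both inputs are lists of (masked_id, datetime) tuples (hypothetical layout).
    Returns (matched, unmatched): `matched` maps an application masked ID to
    the tracker masked ID whose login time is nearest and within the window;
    `unmatched` lists application IDs with no tracker login in the window.
    """
    tracker_sorted = sorted(tracker_logins, key=lambda rec: rec[1])
    times = [ts for _, ts in tracker_sorted]
    window = timedelta(seconds=window_seconds)

    matched, unmatched = {}, []
    for app_id, ts in app_logins:
        # Only the tracker logins just before and just after `ts`
        # can be the nearest one in a time-sorted list.
        i = bisect_left(times, ts)
        candidates = [tracker_sorted[j] for j in (i - 1, i) if 0 <= j < len(times)]
        best = min(candidates, key=lambda rec: abs(rec[1] - ts), default=None)
        if best is not None and abs(best[1] - ts) <= window:
            matched[app_id] = best[0]
        else:
            # Assumption: unmatched users are excluded from analysis.
            unmatched.append(app_id)
    return matched, unmatched
```

Under these assumptions, a login with no tracker record within the window ends up in `unmatched`, which corresponds to the users who could not be identified and were excluded from the analyses.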

Second, the deep dives only captured 2 weeks and may not be representative of the complete study. However, given the similarities in findings between the 2 weeks and the consistency with the qualitative findings, we believe these findings were representative. Third, the response rate to the Health-ITUES survey was low (29%) and likely subject to response bias. On the other hand, it probably reflects the views of those providers who were familiar enough with the tool to respond. Fourth, the RA observations were gathered at the end of the intervention, rather than formally collected and analyzed throughout the duration of the study, and some additional observations may not have been captured. Lastly, this study was conducted at one hospital and may not be generalizable to other institutions. However, it should be noted that the three services had different cultures; for example, rounds were conducted differently on neurology than on general medicine and oncology units. Even within general medicine, rounding structure and team size varied, and physician personnel varied month to month on all three services, leading to variation in use.

Moreover, several of the lessons learned are likely generalizable across institutions, regardless of the exact build of the application. For example, services that have dedicated time for interdisciplinary rounds might have an easier time adopting these tools than those that do not. Also, services with a stable group of providers, such as PAs, might be more successful than those run by rotating personnel, such as residents and teaching attendings. In addition, applications should be pilot tested and iteratively refined to be as fast and bug free as possible before going live, to avoid losing potential users who may not come back later, and any decrements in performance (such as loading time) need to be addressed immediately as they arise. Lastly, workflow and cultural issues need to be addressed upfront and continually during implementation; in the case of a patient safety dashboard, the most prominent issues will likely include the time pressure of rounds and the perception of different safety issues “belonging” to different provider types. Future studies should revisit these issues as these types of tools are implemented more widely.

Next Steps

Study investigators are currently working with a “productization” team to further enhance the Dashboard and implement it throughout the hospital and an affiliated community hospital. During this process, we are taking into consideration all the findings from this study regarding both the design and implementation of the Dashboard. For example, several technical improvements are being made to the Dashboard regarding usability and its real and perceived usefulness, including the ability to customize safety domains by service, a team-level view, direct access to the patient-level view from each patient's chart, links within flags that take users directly to orders or to update the chart, and steps to ensure that the tool is as fast and reliable as possible. We have continued to engage stakeholders, including front-line providers and the hospital's Department of Quality and Safety (DQS), in the design of the user interface and in the logic of decision support. Allowing providers to have more say in the project and take greater ownership should strengthen their own use and assist them with promoting it to other providers. Additionally, our team is reviewing process and outcome measure data to determine if the Dashboard was effective in improving patient care. Positive results may encourage providers to adopt it.

We are also considering lessons learned when planning the implementation effort. For example, rather than training different types of users separately, we plan to combine prescribers, nurses, and leadership in training sessions so that they can understand each other's roles in using the Dashboard. We also plan to leverage DQS in the messaging campaign so that users understand the hospital's commitment to specific patient safety domains (such as prevention of catheter-associated urinary tract infections) and how the Dashboard is a means to achieving these goals. We will increase “at the elbow” support to help users access the Dashboard, to demonstrate its various features, and to solicit feedback. We will better engage leadership in setting the expectation that the tool should be used on rounds whenever possible. We will track use from the beginning and provide feedback on use to different stakeholders.

Conclusion

In conclusion, we performed a mixed methods analysis of use and perceived usability and found many specific opportunities for improvement that should lead to a better designed tool and more successful implementation of the next version. We believe many of the lessons learned and this evaluation process may be useful for any electronic dashboard built “around” a vendor EHR to improve the quality and safety of health care delivery. Performing more of these kinds of evaluations could result in software that meets clinician needs and performs better in terms of improving clinical care.

The current study analyzed the use and perceived usability of a clinical decision support tool implemented as part of a suite of health information technology tools. Review of use by providers throughout the study showed that use varied by provider type, provider service, and time of day. Perceived usability of the tool also had mixed results depending on provider role. Use of the tool increased after we employed methods such as friendly competition between providers and inpatient units and enhancements to the technology (e.g., faster loading times).

Clinical Relevance Statement

Increased adoption of vendor EHRs across health care systems provides opportunities to develop clinical decision support tools that are integrated with these EHRs. These tools hold the potential to identify and mitigate patient harm risks and improve patient care. Results from this study provide valuable insights regarding barriers to use and some approaches to improve the design of these tools and increase user adoption.

Multiple Choice Questions

1. Which of the following are known problems with current EHR systems?

a. Cognitive overload.
b. Data can be siloed by data and provider type.
c. They do not contain enough information.
d. Both a and b.

Correct Answer: The correct answer is option d. EHR systems offer great potential to improve patient care; however, current systems do not operate optimally. Two concerns that have been identified by providers include a high amount of information causing cognitive overload and data being segregated by data type (e.g., test results, medication information, vital signs) and by provider type (e.g., nurses seeing different information than doctors).

2. Serious adverse events (e.g., prolonged hospital stays, permanent harm requiring life-sustaining intervention, patient death) are estimated to occur in what percentage of hospitalized patients?

a. 7–10%
b. 11–18%
c. 14–21%
d. 26–38%

Correct Answer: The correct answer is option c. Serious adverse events continue to be a problem in the inpatient setting, affecting between 14 and 21% of patients.

3. As of 2015, what percentage of U.S. hospitals have implemented a certified EHR system?

a. 96%
b. 84%
c. 67%
d. 56%

Correct Answer: The correct answer is option a. Hospitals have experienced a large increase in adoption of EHR systems. Data collected in 2015 revealed that 96% of hospitals in the US had implemented an EHR, with a majority of these using one of the top six EHR vendors.

4. Research assistant observations during implementation of the Patient Safety Dashboard found barriers to tool adoption included which of the following?

a. Slow loading time.
b. Unable to communicate with other clinicians through the tool.
c. Uncertainty regarding responsibility for addressing flagged items.
d. Unable to add notes to flags.
e. All of the above.
f. Both a and c.

Correct Answer: The correct answer is option f. Research assistants observed clinicians and identified several barriers, including slow loading times, uncertainty regarding responsibility for flagged items, difficulty accessing the tool within the EHR, and persistent bugs. The other options may be barriers new technologies face in general but were not noted during the present study.

Funding Statement

Funding This study was funded by the Agency for Healthcare Research and Quality (AHRQ), grant 1P30HS023535, “Making acute care more patient centered.”

Conflict of Interest One of the authors (J.L.S.) is the recipient of funding from Mallinckrodt Pharmaceuticals for an investigator-initiated study of opioid-related adverse events after surgery and from Portola Pharmaceuticals for an investigator-initiated study of patients who decline doses of pharmacological prophylaxis for venous thromboembolism. Another author (R.R.) consults for EarlySense, which makes patient safety monitoring systems. He receives cash compensation from CDI (Negev), Ltd., which is a not-for-profit incubator for health IT startups. He receives equity from ValeraHealth which makes software to help patients with chronic diseases. He receives equity from Clew which makes software to support clinical decision-making in intensive care. He receives equity from MDClone which takes clinical data and produces deidentified versions of it. Financial interests have been reviewed by Brigham and Women's Hospital and Partners HealthCare in accordance with their institutional policies.

Protection of Human and Animal Subjects

This study was approved by the Partners HealthCare Institutional Review Board.

References

- 1. Agency for Healthcare Research and Quality. Adverse events, near misses and errors. Available at: https://psnet.ahrq.gov/primer/adverse-events-near-misses-and-errors. Accessed October 19, 2019

- 2. Kohn L T, Corrigan J M, Donaldson M S; Institute of Medicine (U.S.) Committee on Quality of Health Care in America, eds. To Err is Human: Building a Safer Health System. Washington (DC): National Academies Press (US); 2000

- 3. The Office of the National Coordinator for Health Information Technology. Nonfederal acute care hospital electronic record adoption. Available at: https://dashboard.healthit.gov/quickstats/pages/FIG-Hospital-EHR-Adoption.php. Accessed April 9, 2019

- 4. The Office of the National Coordinator for Health Information Technology. Hospital health IT developers: certified health IT developers and editions reported by hospitals participating in the Medicare EHR incentive program. Available at: dashboard.healthit.gov/quickstats/pages/FIG-Vendors-of-EHRs-to-Participating-Hospitals.php. Accessed July 2017

- 5. Abernethy A P, Etheredge L M, Ganz P A et al. Rapid-learning system for cancer care. J Clin Oncol. 2010;28(27):4268–4274. doi: 10.1200/JCO.2010.28.5478.

- 6. Singh H, Spitzmueller C, Petersen N, Sawhney M, Sittig D. Information overload and missed test results in EHR-based settings. JAMA Intern Med. 2013;173(08):702–704. doi: 10.1001/2013.jamainternmed.61.

- 7. Murphy D R, Meyer A N, Russo E, Sittig D F, Wei L, Singh H. The burden of inbox notifications in commercial electronic health records. JAMA Intern Med. 2016;176(04):559–560. doi: 10.1001/jamainternmed.2016.0209.

- 8. Miriovsky B J, Shulman L N, Abernethy A P. Importance of health information technology, electronic health records, and continuously aggregating data to comparative effectiveness research and learning health care. J Clin Oncol. 2012;30(34):4243–4248. doi: 10.1200/JCO.2012.42.8011.

- 9. Egan M. Clinical dashboards: impact on workflow, care quality, and patient safety. Crit Care Nurs Q. 2006;29(04):354–361. doi: 10.1097/00002727-200610000-00008.

- 10. Pageler N M, Longhurst C A, Wood M et al. Use of electronic medical record-enhanced checklist and electronic dashboard to decrease CLABSIs. Pediatrics. 2014;133(03):e738–e746. doi: 10.1542/peds.2013-2249.

- 11. Shaw S J, Jacobs B, Stockwell D C, Futterman C, Spaeder M C. Effect of a real-time pediatric ICU safety bundle dashboard on quality improvement measures. Jt Comm J Qual Patient Saf. 2015;41(09):414–420. doi: 10.1016/s1553-7250(15)41053-0.

- 12. Schall M C, Jr., Cullen L, Pennathur P, Chen H, Burrell K, Matthews G. Usability evaluation and implementation of a health information technology dashboard of evidence-based quality indicators. Comput Inform Nurs. 2017;35(06):281–288. doi: 10.1097/CIN.0000000000000325.

- 13. Brown N, Eghdam A, Koch S. Usability evaluation of visual representation formats for emergency department records. Appl Clin Inform. 2019;10(03):454–470. doi: 10.1055/s-0039-1692400.

- 14. Tan Y-M, Hii J, Chan K, Sardual R, Mah B. An electronic dashboard to improve nursing care. Stud Health Technol Inform. 2013;192:190–194.

- 15. Bates D W, Kuperman G J, Wang S et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(06):523–530. doi: 10.1197/jamia.M1370.

- 16. Mlaver E, Schnipper J L, Boxer R B et al. User-centered collaborative design and development of an inpatient safety dashboard. Jt Comm J Qual Patient Saf. 2017;43(12):676–685. doi: 10.1016/j.jcjq.2017.05.010.

- 17. Few S. Data Visualization for human perception. Available at: https://www.interaction-design.org/literature/book/the-encyclopedia-of-human-computer-interaction-2nd-ed/data-visualization-for-human-perception. Accessed December 17, 2019

- 18. Khasnabish S, Burns Z, Couch M, Mullin M, Newmark R, Dykes P C. Best practices for data visualization: creating and evaluating a report for an evidence-based fall prevention program. J Am Med Inform Assoc. 2019. doi: 10.1093/jamia/ocz190.

- 19. Yen P Y, Sousa K H, Bakken S. Examining construct and predictive validity of the Health-IT Usability Evaluation Scale: confirmatory factor analysis and structural equation modeling results. J Am Med Inform Assoc. 2014;21(e2):e241–e248.

- 20. Carayon P, Wetterneck T B, Rivera-Rodriguez A J et al. Human factors systems approach to healthcare quality and patient safety. Appl Ergon. 2014;45(01):14–25. doi: 10.1016/j.apergo.2013.04.023.

- 21. Khan S, Richardson S, Liu A et al. Improving provider adoption with adaptive clinical decision support surveillance: an observational study. JMIR Human Factors. 2019;6(01):e10245. doi: 10.2196/10245.

- 22. Kurtzman G, Dine J, Epstein A et al. Internal medicine resident engagement with a laboratory utilization dashboard: mixed methods study. J Hosp Med. 2017;12(09):743–746. doi: 10.12788/jhm.2811.