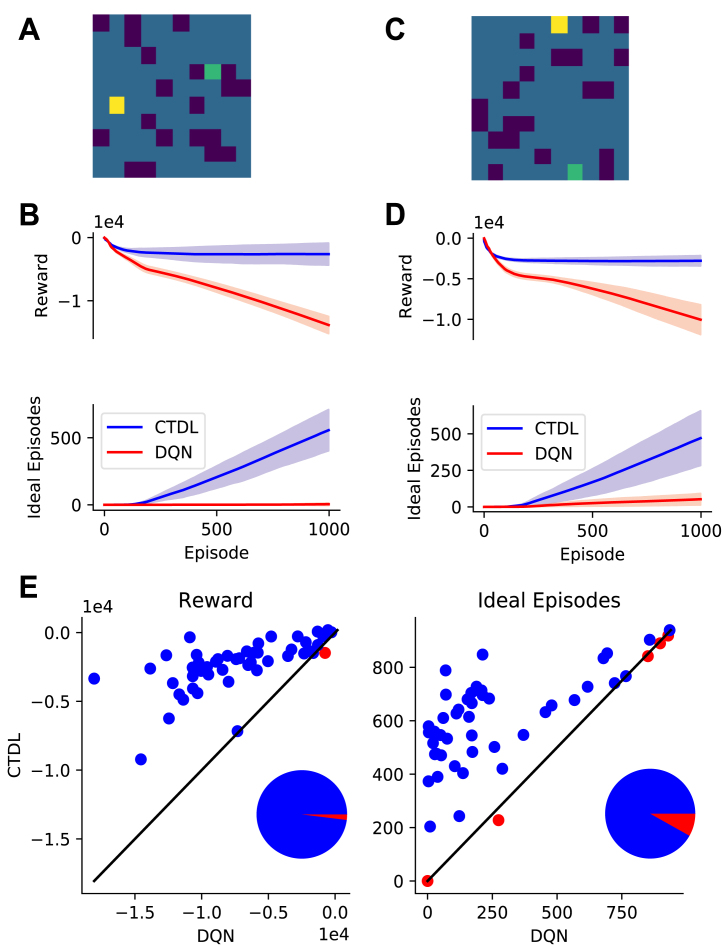

Fig. 1.

A: First example grid world. Dark blue cells represent negative rewards (−1), the green cell represents the goal (1) and the yellow cell represents the agent's starting position. B: Performance of CTDL and DQN on the first example grid world in terms of cumulative reward and ‘ideal’ episodes over the course of learning. An ‘ideal’ episode is one in which the agent reaches the goal location without encountering a negative reward. Both CTDL and DQN were run 30 times on each maze. C: Second example grid world. D: Performance of CTDL and DQN on the second example grid world. E: Scatter plots comparing the performance of CTDL and DQN on 50 different randomly generated grid worlds. Both CTDL and DQN were run 30 times on each maze, and the mean value at the end of learning was calculated. Blue points indicate grid worlds where CTDL outperformed DQN and red points indicate grid worlds where DQN outperformed CTDL. The pie charts at the lower right show the proportions of blue and red points. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)
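The two evaluation rules in the caption, the ‘ideal’ episode test and the per-maze blue/red comparison behind panel E, can be sketched as below. This is a minimal illustration, not the authors' code: the episode representation (a list of hypothetical cell labels `"empty"`, `"negative"`, `"goal"`), the function names `is_ideal_episode` and `compare_agents`, and the handling of ties (which the caption does not describe) are all assumptions.

```python
from statistics import mean


def is_ideal_episode(visited_cells):
    """Hypothetical check: the episode ends at the goal and never enters a
    negative-reward cell. `visited_cells` is an assumed representation: the
    list of cell labels ("empty", "negative", "goal") in visiting order."""
    return (
        bool(visited_cells)
        and visited_cells[-1] == "goal"
        and "negative" not in visited_cells
    )


def compare_agents(ctdl_runs_per_maze, dqn_runs_per_maze):
    """For each maze, average the end-of-learning metric over all runs and
    record which agent has the higher mean (blue = CTDL better, red = DQN
    better). Ties are counted separately, an assumption since the caption
    does not say how they were treated. Returns the proportion of mazes in
    each category, as summarised by the pie charts."""
    if len(ctdl_runs_per_maze) != len(dqn_runs_per_maze):
        raise ValueError("both agents must be evaluated on the same mazes")
    if not ctdl_runs_per_maze:
        raise ValueError("at least one maze is required")

    counts = {"blue": 0, "red": 0, "tie": 0}
    for ctdl_runs, dqn_runs in zip(ctdl_runs_per_maze, dqn_runs_per_maze):
        ctdl_mean, dqn_mean = mean(ctdl_runs), mean(dqn_runs)
        if ctdl_mean > dqn_mean:
            counts["blue"] += 1
        elif dqn_mean > ctdl_mean:
            counts["red"] += 1
        else:
            counts["tie"] += 1

    n_mazes = len(ctdl_runs_per_maze)
    return {category: n / n_mazes for category, n in counts.items()}


if __name__ == "__main__":
    # Toy data: 3 mazes, 2 runs each (the paper uses 50 mazes and 30 runs).
    ctdl = [[1.0, 0.8], [0.2, 0.4], [0.5, 0.5]]
    dqn = [[0.6, 0.6], [0.9, 0.7], [0.5, 0.5]]
    print(compare_agents(ctdl, dqn))
```

Averaging over runs before comparing mirrors the caption's description of panel E, where each point is the mean end-of-learning value for one maze rather than a single run.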