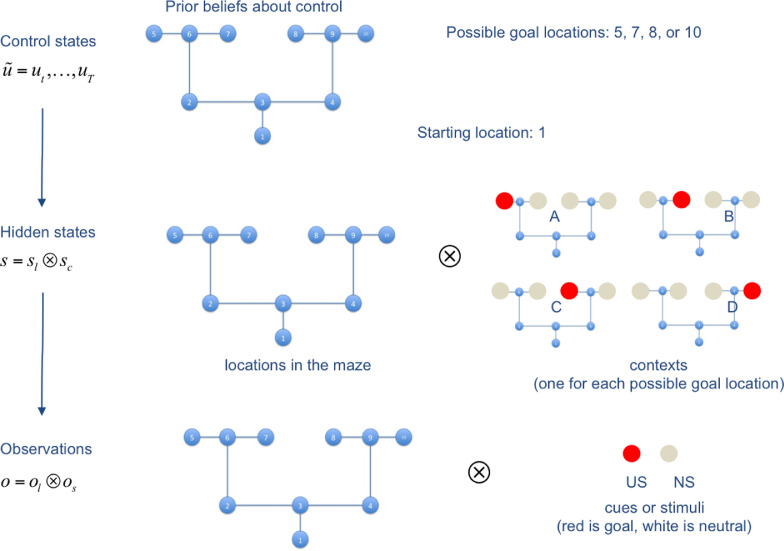

Fig. 3.

Schematic illustration of the generative model. The generative model comprises 10 control states, 40 hidden states (10 locations × 4 contexts) and 20 observations. Red denotes a reward observation, while white denotes a neutral, non-rewarding stimulus. The starting location is always location 1, but it can correspond to 4 different hidden states depending on the context (e.g., state 1 if the context is A, state 4 if the context is D). There are 4 potential goal locations: location 5 if the context is A (which corresponds to state 17), location 7 if the context is B, location 8 if the context is C, and location 10 if the context is D (which corresponds to state 40). Note that in our simulations, we use only the two contexts A and D. We constructed 17 policies (not shown here) that cover the possible moves of the agent. The two most important policies are policy 1 (up, left, up, left), which is the best policy under context A, and policy 2 (up, right, up, right), which is the best policy under context D.
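The mapping between (location, context) pairs and hidden-state indices can be illustrated with a minimal sketch. It assumes that the hidden states are ordered with context varying fastest within each location, an ordering inferred from the indices quoted in the caption (states 1 and 4 for the start location, state 17 for location 5 under context A) rather than stated by the authors. The names `state_index`, `decode_state` and `GOAL_LOCATION` are hypothetical and introduced only for illustration.

```python
# Assumed layout: 10 locations x 4 contexts = 40 hidden states,
# with context varying fastest within each location (1-based indices).
CONTEXTS = ["A", "B", "C", "D"]
N_LOCATIONS = 10

# Goal location for each context, as listed in the caption.
GOAL_LOCATION = {"A": 5, "B": 7, "C": 8, "D": 10}


def state_index(location, context):
    """Map a 1-based location and a context label to a 1-based hidden state."""
    if not 1 <= location <= N_LOCATIONS:
        raise ValueError("location must lie between 1 and 10")
    return (location - 1) * len(CONTEXTS) + CONTEXTS.index(context) + 1


def decode_state(state):
    """Inverse mapping: 1-based hidden state to (location, context)."""
    if not 1 <= state <= N_LOCATIONS * len(CONTEXTS):
        raise ValueError("state must lie between 1 and 40")
    location, context = divmod(state - 1, len(CONTEXTS))
    return location + 1, CONTEXTS[context]


if __name__ == "__main__":
    for ctx in ("A", "D"):  # the two contexts used in the simulations
        start = state_index(1, ctx)
        goal = state_index(GOAL_LOCATION[ctx], ctx)
        print(f"context {ctx}: start state {start}, goal state {goal}")
```

Under this assumed ordering, the start location maps to states 1 and 4 for contexts A and D, and goal location 5 under context A maps to state 17, matching the caption; location 10 under context D maps to state 40.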