Abstract

Background

Pediatric residents must demonstrate competence in several clinical procedures prior to graduation, including simple laceration repair. However, residents may lack opportunities to perform laceration repairs during training, affecting their ability and confidence to perform this procedure.

Objective

We implemented a quality improvement initiative to increase the number of laceration repairs logged by pediatric residents from a baseline mean of 6.75 per month to more than 30 repairs logged monthly.

Methods

We followed the Institute for Healthcare Improvement's Model for Improvement with rapid plan-do-study-act cycles. From July 2016 to February 2018, we increased the number of procedure shifts and added an education module on performing laceration repairs for residents in a pediatric emergency department at a large tertiary hospital. We used statistical process control charts to document improvement. Our outcome measure was the number of laceration repairs documented in resident procedure logs. We tracked the percentage of laceration repairs completed by residents as a process measure and length of stay as a balancing measure.

Results

Following the interventions, logged laceration repairs initially increased from 6.75 to 22.75 per month for the residency program. After the number of procedure shifts decreased, logged repairs fell to 13.40 per month, and the percentage of lacerations repaired by residents also decreased. Length of stay was longer for patients whose lacerations were repaired by residents than for those repaired by procedure technicians.

Conclusions

While our objective was not met, our quality improvement initiative resulted in more logged laceration repairs. The most effective intervention was dedicated procedure shifts.

What was known and gap

The use of procedure technicians has decreased the procedural burden on physicians but has also decreased pediatric residents' exposure to laceration repairs.

What is new

A quality improvement (QI) initiative to increase the number of laceration repairs logged by pediatric residents using plan-do-study-act cycles.

Limitations

The institution's large QI program and use of procedure technicians might be unique and limit generalizability. Determining causation was difficult because 3 interventions were implemented concurrently.

Bottom line

The specific goal was not met, but the QI project did increase the number of laceration repairs logged by pediatric residents.

Introduction

Pediatric residents must achieve competence in 13 procedures, including simple laceration repair, according to the Accreditation Council for Graduate Medical Education (ACGME) Program Requirements for Pediatrics.1 More than 75% of pediatric residency program directors rated resident ability to repair lacerations as highly important.2 Nationally, nearly 90% of graduating pediatric residents feel comfortable performing this procedure independently.3 Moreover, practicing general pediatricians may perform this procedure at least once per year.4

The ACGME annual survey of graduating residents from 2015 to 2017 showed that only 63% of our pediatric residents agreed or strongly agreed they were well prepared to repair a simple laceration, well below the national average of 90%.3 Thus, many of our pediatric residents felt they graduated without adequate training to independently perform this procedure. Prior to this quality improvement (QI) project, our graduating residents logged an average of 2.6 laceration repairs total during training, fewer than our institution's recommended 5 repairs. A review of electronic health records (EHRs) and discussion with the chief residents suggested that the low numbers of logged repairs stemmed from limited clinical opportunities to perform repairs rather than from residents failing to log completed repairs.

Our pediatric emergency department (PED) uses a procedure technician program to decrease the procedural burden on physicians.5 Technicians complete a variety of procedures, including the majority of laceration repairs. Although the program was initially intended to balance residents' education with clinical demands, its success may have decreased pediatric residents' exposure to laceration repairs at our institution. Trainee procedural competence is a common concern for other programs as well, which have approached this issue with boot camps, simulation, and education modules.6–9 Video-based learning and live workshop training produced similar suturing scores.6 Simulation opportunities may improve self-reported confidence and competence, with variable retention.8,9 We chose to address this concern with a QI project because of its flexibility and adaptability.

Our specific aim was to increase the number of laceration repairs logged by all pediatric residents from a baseline mean of 6.75 laceration repairs logged per month to greater than 30 by the end of 2018 using established QI principles and processes.

Methods

Context

This study took place at a large tertiary PED with an annual volume of 90 000 patients. The institution trains 110 categorical pediatric residents. The intervention period ran from July 2016 to February 2018.

Interventions

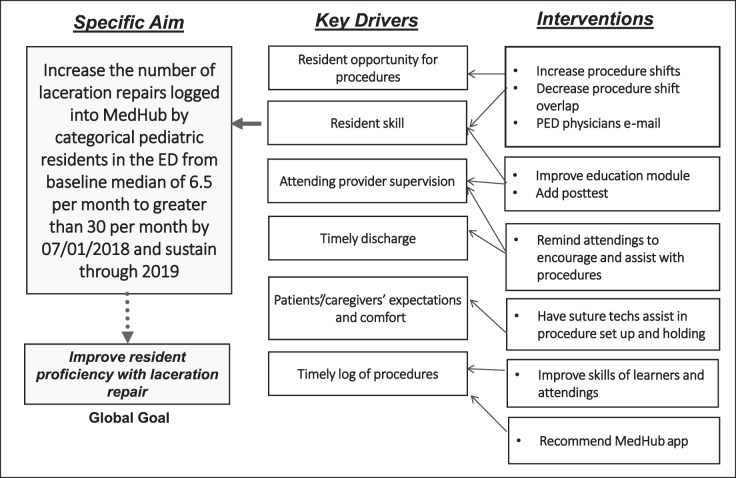

A multidisciplinary team determined key drivers and planned interventions according to the Institute for Healthcare Improvement (IHI) method (Figure 1). The team selected a goal of 30 laceration repairs logged per month for the residency, reasoning that, if reached, this goal would likely allow each graduating resident to average double the program's recommended minimum of 5 repairs. We defined a “logged laceration repair” as one entered in the residency's procedure tracking system, which reports residents' laceration repair numbers to the ACGME. Three interventions using the plan-do-study-act cycle model were implemented (Figure 2) and are outlined below.10
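
The arithmetic behind the 30-per-month goal can be sketched as a back-of-envelope check. This is a minimal sketch, not the team's documented calculation: it assumes a 3-year (36-month) categorical program and that logged repairs are spread evenly across all 110 residents, neither of which is stated in the text. The helper name is hypothetical.

```python
# Back-of-envelope check of the residency-wide goal (hypothetical reconstruction).
# ASSUMPTIONS (not stated in the study): 36 months of training and repairs
# shared evenly across all categorical residents.
MONTHLY_GOAL = 30         # logged repairs per month, whole residency
RESIDENTS = 110           # categorical pediatric residents
TRAINING_MONTHS = 36      # assumed 3-year program
RECOMMENDED_MINIMUM = 5   # program's recommended repairs per resident


def repairs_per_graduate(monthly_total: float, residents: int, months: int) -> float:
    """Hypothetical helper: average repairs one resident would log over
    training if the residency-wide monthly total were shared evenly."""
    return monthly_total * months / residents


per_graduate = repairs_per_graduate(MONTHLY_GOAL, RESIDENTS, TRAINING_MONTHS)
ratio = per_graduate / RECOMMENDED_MINIMUM
print(f"~{per_graduate:.1f} repairs per graduate, {ratio:.1f}x the minimum of 5")
```

Under these assumptions, about 9.8 repairs per graduate is roughly twice the recommended minimum, consistent with the team's stated rationale.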

Figure 1.

Key Driver Diagram

Note: This figure depicts the global goal of improving resident competence with simple laceration repair. The global goal is a long-term aim that was not measured or studied. As a first step toward it, our quality improvement initiative set the specific aim of increasing the number of laceration repairs logged into MedHub by pediatric residents; this aim was the focus of our study. The key drivers are the main leverage points that influence the specific aim, and the interventions are specific changes that may lead to improvement in one or more of the key drivers.

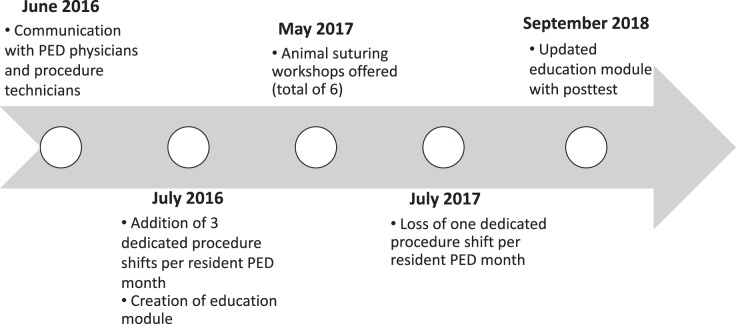

Figure 2.

Timeline of Interventions

Note: This figure shows which interventions took place and when. Interventions are specific tests of change likely to improve key drivers, which are the main leverage points that influence the specific aim.

The first intervention in June 2016 addressed institutional culture. PED physicians were notified of trainees' insufficient laceration repair numbers and ACGME concerns. The importance of balancing resident education with patient care was stressed to the procedure technicians, who then supported trainees by assisting and setting up equipment. Finally, the procedural goal of 5 laceration repairs logged by each resident throughout residency was emphasized to residents and attendings.

The second intervention in July 2016 increased the number of dedicated procedure shifts from 1 to 3 per resident in the PED each month. In July 2017, due to conflicting educational and clinical demands placed on the residents, the number of procedure shifts assigned to each resident decreased to 2 per month.

The third and final intervention used a 2-pronged educational approach. The first component comprised a video-based module (introduced in July 2016 and updated in September 2017), assigned textbook chapters, and clearly stated procedure shift goals.11 The second component consisted of voluntary suturing labs available to all residents (Figure 2). Because of scheduling conflicts, participation was not mandatory and attendance was not tracked.

Measures

We used statistical process control charts to monitor outcome, process, and balancing measures.12,13 Control charts use time series graphs to display data in a way that identifies special causes of variation.12,13 Special cause variation is noted when data points fall outside the upper or lower control limits (±3 standard deviations from the centerline) or follow a nonrandom pattern within these limits.

Our outcome measure, which reflects the result of the improvement process, was the monthly number of laceration repairs logged by residents. We used a c-chart, a type of control chart for attribute data that plots counts of events per period (in this case, laceration repairs logged by residents per month).13 Because the residency changed procedure logging programs during data collection, we collected data from the ACGME, E*Value, and MedHub.
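
For readers unfamiliar with c-charts, the centerline and limits can be sketched as follows. This assumes the conventional Poisson-based c-chart, in which the standard deviation of a monthly count is the square root of its mean. The stage means are those reported in the text, but the limits computed from them are illustrative and are not read from Figure 3; the helper names and the sample months are hypothetical.

```python
import math
from typing import List, Tuple


def c_chart_limits(c_bar: float) -> Tuple[float, float, float]:
    """Hypothetical helper: (LCL, centerline, UCL) for a c-chart with mean count c_bar.

    Counts are assumed Poisson, so sigma = sqrt(c_bar). The lower limit is
    clipped at 0 because a month cannot have a negative number of repairs.
    """
    sigma = math.sqrt(c_bar)
    return max(0.0, c_bar - 3 * sigma), c_bar, c_bar + 3 * sigma


def beyond_limits(counts: List[float], lcl: float, ucl: float) -> List[int]:
    """Hypothetical helper: indices of months outside the limits (special cause)."""
    return [i for i, c in enumerate(counts) if c < lcl or c > ucl]


# Stage means reported in the text (laceration repairs logged per month)
stages = {"baseline": 6.75, "3 shifts/month": 22.75, "2 shifts/month": 13.40}
for name, c_bar in stages.items():
    lcl, cl, ucl = c_chart_limits(c_bar)
    print(f"{name:>15}: LCL={lcl:5.2f}  CL={cl:5.2f}  UCL={ucl:5.2f}")

base_lcl, _, base_ucl = c_chart_limits(6.75)
print(beyond_limits([5, 8, 30], base_lcl, base_ucl))  # hypothetical months; prints [2]
```

Under these assumptions the baseline upper limit is about 14.5, so a single month at the goal of 30 would fall outside the baseline limits and signal special cause variation.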

Our process measure, which checks whether the interventions are performing as intended, was the percentage of PED laceration repairs completed by pediatric residents. We used a P-chart, a type of control chart for attribute data that plots the proportion of cases meeting a criterion, in this case laceration repairs completed by pediatric residents as opposed to procedure technicians, other trainees, or attendings.13 Laceration procedure notes in the EHR were used to determine the proportion completed by pediatric residents.
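
A P-chart's limits depend on each month's denominator, so they widen in months with fewer total repairs. The sketch below uses the conventional binomial-based formula. The monthly counts and the helper name are hypothetical, since the study's month-by-month figures are not reported in the text.

```python
import math
from typing import List, Tuple


def p_chart(successes: List[int], totals: List[int]) -> Tuple[float, List[Tuple[float, float]]]:
    """Hypothetical helper: centerline p_bar and a (LCL, UCL) pair per month.

    p_bar pools all months; each month's limits use its own denominator n:
    p_bar +/- 3 * sqrt(p_bar * (1 - p_bar) / n), clipped to [0, 1].
    """
    p_bar = sum(successes) / sum(totals)
    limits = []
    for n in totals:
        half_width = 3 * math.sqrt(p_bar * (1 - p_bar) / n)
        limits.append((max(0.0, p_bar - half_width), min(1.0, p_bar + half_width)))
    return p_bar, limits


# Hypothetical months: repairs by pediatric residents / all PED laceration repairs
resident_repairs = [12, 15, 9, 14]
all_repairs = [120, 150, 100, 130]
p_bar, limits = p_chart(resident_repairs, all_repairs)
for r, n, (lcl, ucl) in zip(resident_repairs, all_repairs, limits):
    flag = "special cause" if not (lcl <= r / n <= ucl) else "common cause"
    print(f"{r / n:6.1%}  limits {lcl:5.1%}-{ucl:5.1%}  {flag}")
```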

We monitored length of stay (LOS) for patients requiring laceration repair as our balancing measure. Balancing measures evaluate unintended consequences of a QI project. We used an I-chart (individuals chart), a type of control chart for continuous (variable) data, which in this case was time.13 Because of an EHR upgrade, the baseline period for the process and balancing measures did not coincide with that of the outcome measure.
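
An I-chart is commonly built from the average moving range between consecutive observations. A minimal sketch using the conventional constant 2.66 (3/d2, with d2 = 1.128 for moving ranges of 2 points); the LOS values and the helper name are hypothetical, not study data.

```python
from statistics import mean
from typing import List, Tuple


def i_chart_limits(values: List[float]) -> Tuple[float, float, float]:
    """Hypothetical helper: (LCL, centerline, UCL) for an individuals (I) chart.

    Sigma is estimated from the mean moving range between consecutive
    points: limits = x_bar +/- 2.66 * MR_bar, where 2.66 = 3 / 1.128.
    """
    if len(values) < 2:
        raise ValueError("need at least 2 observations for a moving range")
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    x_bar, mr_bar = mean(values), mean(moving_ranges)
    return x_bar - 2.66 * mr_bar, x_bar, x_bar + 2.66 * mr_bar


# Hypothetical LOS values (minutes) for patients needing laceration repair
los_minutes = [130, 140, 125, 135, 128, 142]
lcl, center, ucl = i_chart_limits(los_minutes)
print(f"LCL={lcl:.1f}  CL={center:.1f}  UCL={ucl:.1f}")
```

Using the moving range rather than the overall standard deviation keeps the limits from being inflated by a sustained shift in the data.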

This study was deemed QI by the Institutional Review Board and was exempt from human subjects review.

Analysis

We applied Nelson rules to our control charts to determine whether variation in our data was significant.14 The rules most relevant to our study concern trends (6 or more consecutive points increasing or decreasing) and shifts (at least 8 consecutive points on 1 side of the mean). The 2-sample t test allowing unequal variances (Welch's t test) was used to test for significant differences in LOS.15
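
The trend and shift rules described above can be checked programmatically. In this minimal sketch the thresholds follow the text (6 points for a trend, 8 for a shift) and are parameters, so other rule sets can be substituted. Tie handling is an assumption: points exactly on the centerline are skipped when counting a shift, and equal consecutive values end a trend. The helper names and the example series are hypothetical.

```python
from typing import List, Sequence, Tuple


def find_shifts(values: Sequence[float], center: float, min_run: int = 8) -> List[Tuple[int, int]]:
    """Hypothetical helper: (start, end) indices of runs of >= min_run points
    on one side of the centerline. Points exactly on the centerline are
    skipped: they neither extend nor break a run."""
    runs: List[Tuple[int, int]] = []
    side, start, end, length = 0, 0, 0, 0
    for i, v in enumerate(values):
        s = (v > center) - (v < center)  # +1 above, -1 below, 0 on the line
        if s == 0:
            continue
        if s == side:
            length, end = length + 1, i
        else:
            if length >= min_run:
                runs.append((start, end))
            side, start, end, length = s, i, i, 1
    if length >= min_run:
        runs.append((start, end))
    return runs


def find_trends(values: Sequence[float], min_points: int = 6) -> List[Tuple[int, int]]:
    """Hypothetical helper: (start, end) indices of >= min_points consecutive
    points that strictly increase or strictly decrease. Equal neighbors end a run."""
    runs: List[Tuple[int, int]] = []
    start, direction = 0, 0
    for i in range(1, len(values)):
        d = (values[i] > values[i - 1]) - (values[i] < values[i - 1])
        if d != 0 and d == direction:
            continue  # the current trend continues
        if direction != 0 and i - start >= min_points:
            runs.append((start, i - 1))  # trend ended at the previous point
        start, direction = (i, 0) if d == 0 else (i - 1, d)
    if direction != 0 and len(values) - start >= min_points:
        runs.append((start, len(values) - 1))
    return runs


# Hypothetical monthly counts checked against a baseline centerline of 6.75
months = [5, 8, 7, 6, 20, 22, 25, 19, 24, 21, 23, 26]
print(find_shifts(months, center=6.75))  # prints [(4, 11)]
print(find_trends(months))               # prints []
```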

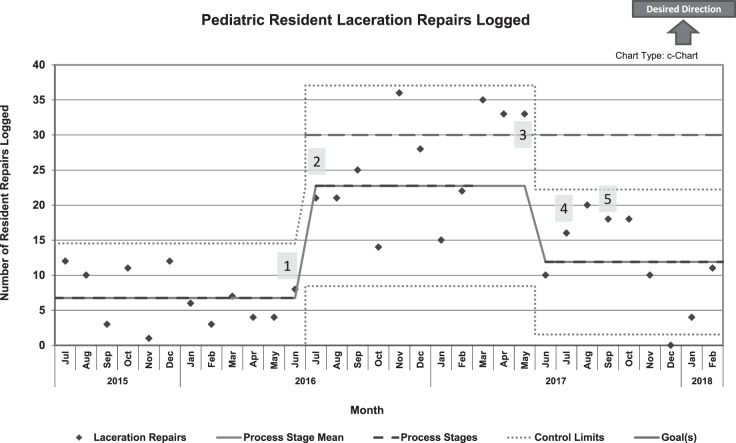

Results

Baseline data demonstrated a monthly mean of 6.75 laceration repairs logged by residents, which increased immediately following the culture changes, procedure shift increases, and education module deployment (Figure 3). After 8 months, a clear shift to a new mean of 22.75 repairs logged per month emerged. The goal of 30 repairs logged was reached in 4 of these 8 months. In July 2017, after the number of procedure shifts decreased, the monthly mean of laceration repairs logged fell to 13.40, which still represented roughly double the baseline mean. There was no trend or shift in the data following the education module update in September 2017.

Figure 3.

Outcome Measure

Note: This figure shows pediatric resident laceration repair procedure logs over the duration of this quality improvement initiative, as well as the process stage mean, process stages, control limits, and goals over time. Labeled numerically within the figure are 5 interventions that took place, which include: (1) E-mails Sent to Faculty; (2) Increase to 3 Resident Procedure Shifts; (3) Animal Suture Labs Begin to Be Offered; (4) Loss of a Resident Procedure Shift; and (5) Update of Education Module and Posttest.

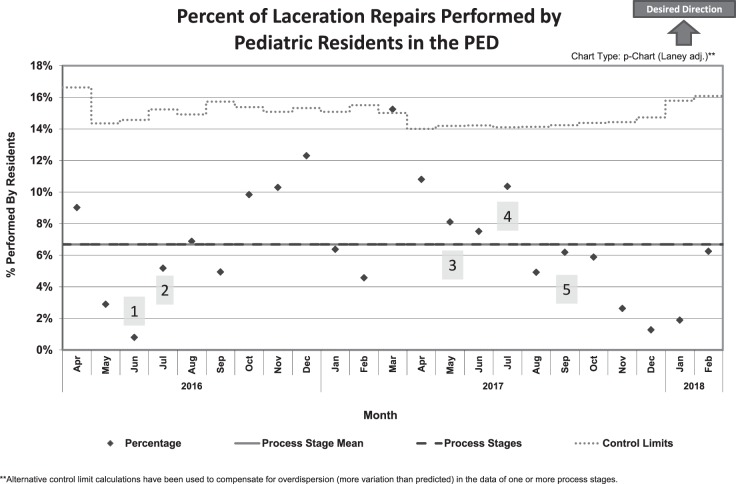

Our process measure was the proportion of all laceration repairs performed by pediatric residents. Our P-chart showed no centerline shift, indicating no significant change in the percentage of laceration repairs performed by pediatric residents (Figure 4). However, after the decrease in the number of procedure shifts, 7 data points below the mean suggested a potential downward shift.

Figure 4.

Process Measure

Note: This figure shows the percent of laceration repairs performed by pediatric residents in the pediatric emergency department (PED) over time. Also shown in this figure are the process stage mean, process stages, control limits, and 5 interventions labeled numerically within the figure. These interventions include: (1) E-mails Sent to Faculty; (2) Increase to 3 Resident Procedure Shifts; (3) Animal Suture Labs Begin to Be Offered; (4) Loss of a Resident Procedure Shift; and (5) Update of Education Module and Posttest.

Although the average LOS for all patients undergoing laceration repair in the PED did not change significantly, LOS differed significantly by the type of provider performing the repair during both the baseline and intervention periods. During the baseline period, mean LOS was 132 minutes for patients whose lacerations were repaired by a procedure technician compared with 151 minutes for those repaired by a pediatric resident (95% CI for the difference 10.15–33.04 minutes; P = .001). Similarly, during the intervention period, patients repaired by procedure technicians had a shorter mean LOS (126 minutes) than patients repaired by pediatric residents (156 minutes; 95% CI for the difference 4.65–55.95 minutes; P = .013).
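
Welch's t test compares 2 means without assuming equal variances, estimating degrees of freedom with the Welch–Satterthwaite approximation. The sketch below computes only the t statistic and degrees of freedom from hypothetical LOS values, using a hypothetical helper name; a P value and confidence interval additionally require the t distribution, available for example through `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Hypothetical helper: Welch's t statistic for mean(a) - mean(b) and its
    Welch-Satterthwaite degrees of freedom (sample variances with n - 1)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical LOS values in minutes, not study data
resident_los = [150, 160, 145, 155, 170]
technician_los = [120, 130, 125, 140, 135]
t, df = welch_t(resident_los, technician_los)
print(f"t = {t:.2f}, df = {df:.1f}")
```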

Discussion

Our study demonstrates QI methodology can successfully increase the number of laceration repairs logged by residents. We confirmed that increased opportunity to complete laceration repairs, specifically with dedicated shifts for procedures, correlates with increased laceration repairs logged by residents.

The statistical process control charts showed that the number of procedure shifts correlated directly with the number of repairs logged. With more procedure shifts, the goal of 30 logged resident laceration repairs per month was met in 4 months, suggesting the project aim was achievable. The current outcome measure mean remains double the baseline average, likely because each pediatric resident still has more dedicated procedure shifts than before our initiative. The other interventions (culture shifts and education modules), although not easily measurable, may help create lasting influence.

Perceived resident inefficiency may contribute to fewer residents performing laceration repairs. The procedure technicians are extremely efficient, which raised concerns that our initiative would slow patient flow in the PED. Our data supported this concern, as LOS was longer for patients repaired by a pediatric resident. Nevertheless, overall average LOS was stable before and after our intervention, likely because pediatric residents performed only a low percentage of laceration repairs.

Although the study shows the success of a QI initiative, it has limitations that may hinder generalizability. The results may not be reproducible because of specific characteristics of our institution. Our institution has a large QI program offering significant expertise and resources, which may be difficult for other institutions to reproduce. Also, our use of procedure technicians may be unique, though physician extenders in other centers may create similar competition for procedures. Determining causation is difficult because 3 interventions were implemented concurrently, and we were unable to track measures for some interventions. Repair counts may have been falsely low if residents delayed logging repairs; however, repeated queries identified no significant changes in the numbers. Finally, our initiative did not measure resident competence with laceration repair and instead used the number of repairs as a proxy for skill.

Although the study did not demonstrate an effect of interventions such as workshops and education modules on the number of laceration repairs logged, it highlights opportunities for further investigation of these interventions. Methods of increasing resident performance of laceration repairs during standard PED shifts should be identified. Future ACGME surveys could be reviewed to evaluate the effect of our initiative on resident confidence in laceration repair.

Conclusions

Despite not reaching our goal, this study showed QI methodology can increase pediatric resident laceration repairs logged. Dedicated procedure shifts likely drove the greatest improvement.

Footnotes

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

This work was previously presented as a poster at the Association of Pediatric Program Directors Annual Spring Meeting, Atlanta, GA, March 20–23, 2018.

The authors would like to thank Nathanial Gallup, BSBA, for his work with data analysis and the QI team members.

References

- 1. Accreditation Council for Graduate Medical Education. Pediatrics. 2019. http://www.acgme.org/Specialties/Program-Requirements-and-FAQs-and-Applications/pfcatid/16/Pediatrics Accessed December 18.

- 2. Gaies MG, Landrigan CP, Hafler JP, Sandora TJ. Assessing procedural skills training in pediatric residency programs. Pediatrics. 2007;120(4):715–722. doi: 10.1542/peds.2007-0325.

- 3. Schumacher DJ, Frintner MP, Cull W. Graduating pediatric resident reports on procedural training and preparation. Acad Pediatr. 2018;18(1):73–78. doi: 10.1016/j.acap.2017.08.001.

- 4. Ben-Isaac E, Keefer M, Thompson M, Wang VJ. Assessing the utility of procedural training for pediatrics residents in general pediatric practice. J Grad Med Educ. 2013;5(1):88–92. doi: 10.4300/JGME-D-11-00255.1.

- 5. Apolo JO, Dicocco D. Suture technicians in a children's hospital emergency department. Pediatr Emerg Care. 1988;4(1):12–14. doi: 10.1097/00006565-198803000-00004.

- 6. Chien N, Trott T, Doty C, Adkins B. Assessing the impact of accessible video-based training on laceration repair: a comparison to the traditional workshop method. West J Emerg Med. 2015;16(6):856–858. doi: 10.5811/westjem.2015.9.27369.

- 7. Starr M, Sawyer T, Jones M, Batra M, McPhillips H. A simulation-based quality improvement approach to improve pediatric resident competency with required procedures. Cureus. 2017;9(6):e1307. doi: 10.7759/cureus.1307.

- 8. Augustine EM, Kahana M. Effect of procedure simulation workshops on resident procedural confidence and competence. J Grad Med Educ. 2012;4(4):479–485. doi: 10.4300/JGME-D-12-00019.1.

- 9. Gaies MG, Morris SA, Hafler JP, Graham DA, Capraro AJ, Zhou J, et al. Reforming procedural skills training for pediatric residents: a randomized, interventional trial. Pediatrics. 2009;124(2):610–619. doi: 10.1542/peds.2008-2658.

- 10. Institute for Healthcare Improvement. Science of improvement: testing changes. 2019. http://www.ihi.org/resources/Pages/HowtoImprove/ScienceofImprovementTestingChanges.aspx Accessed December 18.

- 11. Trott A. Wounds and Lacerations: Emergency Care and Closure. 4th ed. Philadelphia, PA: Elsevier Saunders; 2012.

- 12. Hart MK, Hart RF. Statistical Process Control for Health Care. Boston, MA: Cengage; 2001.

- 13. Provost LP, Murray SK. The Health Care Data Guide: Learning from Data for Improvement. San Francisco, CA: Jossey-Bass; 2011.

- 14. Nelson L. Technical aids. J Qual Tech. 1984;16(4):238–239.

- 15. Welch BL. The significance of the difference between two means when the population variances are unequal. Biometrika. 1938;29(3/4):350.

- 16. Hedges JR, Trout A, Magnusson AR. Satisfied patients exiting the emergency department (SPEED) study. Acad Emerg Med. 2002;9(1):15–21. doi: 10.1111/j.1553-2712.2002.tb01161.x.

- 17. Lowe DA, Monuteaux MC, Ziniel S, Stack AM. Predictors of parent satisfaction in pediatric laceration repair. Acad Emerg Med. 2012;19(10):1166–1172. doi: 10.1111/j.1553-2712.2012.01454.x.

- 18. Lee SJ, Cho YD, Park SJ, Kim JY, Yoon YH, Choi SH. Satisfaction with facial laceration repair by provider specialty in the emergency department. Clin Exp Emerg Med. 2015;2(3):179–183. doi: 10.15441/ceem.15.050.

- 19. Poots AJ, Reed JE, Woodcock T, Bell D, Goldmann D. How to attribute causality in quality improvement: lessons from epidemiology. BMJ Qual Saf. 2017;26(11):933–937. doi: 10.1136/bmjqs-2017-006756.

- 20. Ogrinc G, Davies L, Goodman D, Batalden PB, Davidoff F, Stevens D. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Quality and Safety. 2015. Online first, September 15.