Highlights

- Three-month-old infants demonstrate a looking preference for fearful over happy faces.
- Infants also show differential neural responses to fearful vs. happy facial expressions.
- Neural responses differ over occipitotemporal regions implicated in face perception.
- Neural responses did not differ over frontocentral regions implicated in attention.
- Early perceptual sensitivity may presage the attentional bias for fearful faces.

Keywords: Infancy, Emotion, Facial expressions of emotion, Fear, Attentional bias, Event-related potentials

Abstract

An important feature of the development of emotion recognition in infants is the emergence of a robust attentional bias for fearful faces. There is some debate about when this enhanced sensitivity to fearful expressions develops. The current study explored whether 3-month-olds demonstrate differential behavioral and neural responding to happy and fearful faces. Three-month-old infants (n = 69) participated in a behavioral task that assessed whether they show a visual preference for fearful faces and an event-related potential (ERP) task that assessed their neural responses to fearful and happy faces. Infants showed a looking preference for fearful over happy faces. They also showed differential neural responding over occipitotemporal regions that have been implicated in face perception (i.e., N290, P400), but not over frontocentral regions that have been implicated in attentional processes (i.e., Nc). These findings suggest that 3-month-olds display an early perceptual sensitivity to fearful faces, which may presage the emergence of the attentional bias for fearful faces in older infants. Tracking the ontogeny of this phenomenon is necessary to understand its relationship with later developmental outcomes.

1. Introduction

Infants’ ability to perceive, discriminate, and interpret facial expressions of emotion is critical for infant-caregiver interaction and the development of attachment, emotion regulation, and later socio-emotional skills (Bornstein and Arterberry, 2003; Peltola et al., 2015; Steele et al., 2008; Thompson, 1991; Tronick, 1989; Walker-Andrews and Dickson, 1997). Shortly after birth, newborns are able to discriminate among a limited number of facial expressions (e.g., happy, sad; Barrera and Maurer, 1981; Farroni et al., 2007; Field et al., 1982; Young-Browne et al., 1977). By 3 months of age, infants begin to show more sophisticated abilities, including categorization of facial expressions (Walker-Andrews et al., 2011) and bimodal matching across facial and vocal expressions of emotion (Kahana-Kalman and Walker-Andrews, 2001; Montague and Walker-Andrews, 2001, 2002), although these abilities are initially limited to caregiver faces. By 4–7 months, infants generalize these abilities to unfamiliar faces (Bornstein and Arterberry, 2003; Kaneshige and Haryu, 2015; Kotsoni et al., 2001; Ludemann, 1991; Ludemann and Nelson, 1988; Nelson et al., 1979; Nelson and Dolgin, 1985; Safar and Moulson, 2017).

Infants also display consistent attentional preferences for specific facial expressions of emotion across the first year of life. In particular, the emergence of a robust attentional bias for fearful facial expressions by 7 months of age is a well-established feature of the development of emotion recognition in infancy. This bias is demonstrated across different paradigms and multiple measures. Seven-month-olds look longer at fearful compared to happy faces in a visual paired-comparison (VPC) task (Ludemann and Nelson, 1988; Nelson and Dolgin, 1985; Peltola et al., 2009a), which is a reversal of younger infants’ bias to attend to positive compared to negative faces (Bayet et al., 2015; Farroni et al., 2007; LaBarbera et al., 1976). They also demonstrate longer latency to disengage from a centrally-presented fearful face compared to non-fearful expressions when presented with a peripheral target (Leppänen et al., 2010; Peltola et al., 2008, 2009b, 2013). The attentional bias for fearful expressions is also evident in physiological responses. Seven-month-olds demonstrate greater heart rate deceleration, an indication of heightened attention, in response to fearful than happy expressions (Leppänen et al., 2010; Peltola et al., 2011). At the neural level, event-related potential (ERP) studies have consistently revealed a larger Nc amplitude – a frontal-centrally located component presumed to reflect allocation of attention to salient stimuli (Courchesne et al., 1981) – in response to fearful than happy or neutral expressions, and to angry than fearful expressions (Kobiella et al., 2008; Leppänen et al., 2007; Peltola et al., 2009a).

Understanding the emergence of the attentional bias to fearful facial expressions has been an important focus of research for several reasons. First, this bias may reflect a broader phenomenon whereby threat-relevant stimuli robustly capture visual attention (LoBue et al., 2010; LoBue and Rakison, 2013; Öhman et al., 2001). Visual search tasks are frequently used to demonstrate this phenomenon: Both adults and children more rapidly identify pictures of threat-relevant stimuli (e.g., snakes, spiders) amongst a background of threat-irrelevant distractor stimuli (e.g., flowers, frogs, mushrooms) than the converse (Flykt, 2005, 2006; LoBue and DeLoache, 2008; LoBue and Rakison, 2013; Öhman et al., 2001). Like adults and young children, 8- to 14-month-old infants orient faster to snakes compared to flowers and frogs (DeLoache and LoBue, 2009). This enhanced detection of threat-relevant stimuli may be evolutionarily essential to escape harm and increase the probability of survival – thus, understanding the emergence of the attentional bias for fearful facial expressions gives us insight into a broader phenomenon. Second, recent research has demonstrated that the attentional bias to fearful facial expressions serves as something of a ‘bellwether’ of socioemotional development. Both maternal anxiety (Morales et al., 2017) and infant negative affect (Pérez-Edgar et al., 2017) predict individual differences in the attentional bias to threat; in turn, these individual differences predict later socioemotional outcomes (e.g., attachment security, Peltola et al., 2015; anxiety disorders, Field and Lester, 2010). Therefore, examining the emergence of this bias is important given its association with both normative and non-normative later developmental outcomes.

The attentional bias for fearful faces is robust in 7-month-olds, and studies with static faces have generally supported its emergence between 5 and 7 months of age (Leppänen et al., 2018). Leppänen and Nelson (2009) theorized that its emergence during this period of development is due to the confluence of 1) the functional maturation of neural regions critical for emotion processing and attention, including the amygdala, occipitotemporal, and orbitofrontal cortices; and 2) infants’ increased experience with a wider range of facial expressions due to the onset of locomotion around the middle of the first year. A growing number of studies, however, suggest that differential processing of fearful faces may appear earlier in infancy, although evidence is mixed. Using dynamic stimuli, Heck et al. (2016) demonstrated that 5-month-olds, but not 3.5-month-olds, disengaged their attention less frequently from a centrally presented fearful face than non-fearful faces when presented with a peripheral target. This contrasts with previous findings suggesting an emergence between 5 and 7 months of age using static faces (Peltola et al., 2009a). The authors suggested that using dynamic faces, which contain more emotion-relevant information than static faces, provided infants with a better opportunity to display their emotion-processing capabilities (Heck et al., 2016). There are also mixed findings from ERP studies. Several studies have found increased Nc amplitudes in response to fearful expressions compared to happy expressions (Leppänen et al., 2007; Peltola et al., 2009a) or neutral expressions (Hoehl and Striano, 2010) in infants older than 6 months, but not in infants younger than 6 months. In contrast, Yrttiaho et al. (2014) found a smaller N290 and faster P400 response to fearful compared to happy and neutral faces at both 5 and 7 months of age. 
The N290 and P400 are often considered developmental precursors to the adult N170 face-sensitive component (de Haan et al., 2003). Finally, in a recent study by Bayet et al. (2017), 3.5-month-old infants demonstrated enhanced detection of fearful compared to happy faces. Specifically, they used a visual preference procedure where faces mixed with varying levels of visual noise were compared with pure visual noise. Detection thresholds (i.e., the lowest level of “face signal” at which infants preferred the face over visual noise) were lower for fearful faces than happy faces, suggesting a perceptual advantage for detecting fearful compared to happy faces.

How can we reconcile these mixed findings in infants younger than 7 months? Bayet et al. (2017) suggest an important distinction that may underlie these discrepancies: It is possible that there are early differences in perceptual processing of fearful and happy facial expressions that are a precursor to the attentional bias for fear that emerges later. If this is the case, we might expect that differential processing of fearful and happy faces would appear in younger infants in tasks that tap perceptual processing (e.g., face detection) but not in tasks that tap attentional processing (e.g., visual preference). The goal of our study, therefore, was to investigate infants’ perception of fear using a measure that could both capture and distinguish between perceptual and attentional processes. Event-related potentials (ERPs) are uniquely suited to this goal. In addition to the excellent temporal resolution and non-invasive nature of this technique (de Haan et al., 2003; Woodman, 2010) the infant ERP in response to visual stimuli has been well characterized. Researchers generally focus on three components in studies of visual perception with infants: the N290, P400, and Nc. The N290 and P400 components are maximal over occipitotemporal regions and are often presumed to reflect early perceptual processes. In contrast, the Nc, maximal over frontal-central regions, is presumed to reflect attentional processes (Reynolds and Richards, 2005). Cortical source localization confirms the distinction: In a recent study with a large sample of infants using realistic head models, the N290 was localized to occipitotemporal cortex, particularly the middle fusiform gyrus, while the Nc seemed to be the result of generators in both anterior regions (e.g., orbitofrontal gyrus, anterior cingulate) and posterior regions (e.g., middle fusiform gyrus, medial inferior occipital gyrus) (Guy et al., 2016). 
Interestingly, the P400 seemed to have neural generators similar to those of the Nc, suggesting that it may also reflect both attentional and perceptual processes.

In the current study, we examined whether 3-month-olds demonstrate differential perceptual and attentional processing of fearful and happy faces using both a VPC and an ERP task. In the VPC task, infants viewed fearful paired with happy expressions and their spontaneous looking behavior was measured. In the ERP task, infants viewed fearful and happy expressions presented one at a time while EEG was recorded. Based on Heck and colleagues (Heck et al., 2016), we did not expect 3-month-olds to show an overt behavioral attentional preference for fearful compared to happy facial expressions. Consistent with a distinction between perceptual and attentional processing of fearful faces, we predicted differential ERP responses for the N290, and potentially the P400, indicating differential perceptual sensitivity to fearful compared to happy faces, but no difference in Nc response to fearful and happy faces.

2. Method

2.1. Participants

Three-month-old infants (n = 69) participated in this study. The sample comprised Caucasian (n = 36), Black (n = 3), East Asian (n = 5), South Asian (n = 6), Hispanic (n = 1), and Mixed (n = 18) participants. Sixty infants (Mage = 89.56 days) successfully completed the VPC task. Data from the other nine infants were excluded due to side-bias during testing (>95 % looking time to one side across all trials; n = 7), experimenter error (n = 1), or twin with another infant in the study (n = 1). Thirty-six infants (Mage = 89.22 days) successfully completed the ERP task. Data from the other 33 infants were excluded due to refusal to wear the ERP cap (n = 1), fussiness (n = 3), excessive artefact resulting in fewer than 9 good trials per condition (n = 27), experimenter error (n = 1), or twin with another infant in the study (n = 1). This attrition rate is typical of ERP studies with infants in this age range (Stets et al., 2012). All infants were born ±4 weeks of their due date and none had been diagnosed with visual impairment per parent report. Participants were recruited through a database that contains contact information for parents from a large metropolitan area who expressed an interest in participating in developmental research.

2.2. Stimuli and procedure

Stimuli consisted of color photographs of 2 (VPC) and 12 (ERP) unfamiliar female Caucasian faces each displaying happiness and fear drawn from the Karolinska Directed Emotional Face Set (Lundqvist et al., 1998). In a validation study of these stimuli (Goeleven et al., 2008), adults’ identification of both happy and fearful faces was above chance. Happy faces were rated as greater in intensity than fearful faces, although arousal for both expressions was rated similarly. Although previous studies have demonstrated that differential responding to emotional expressions is more likely to be observed with dynamic faces (Heck et al., 2016), we were limited to static presentation of the emotions due to the use of ERPs.

All appointments were scheduled when infants were most active and alert, as reported by the primary caregiver. The Research Ethics Board of Ryerson University approved the current study. Parents of participants provided written informed consent. The VPC and ERP tasks took place in the same testing room. The infant sat on their parent’s lap facing a computer screen. A video camera situated directly above the computer screen captured infant looking behavior. The video signal was projected onto a second computer screen in an adjacent room, which allowed the experimenter to monitor infant looking behavior online throughout the experiment. All infants completed the VPC task first, followed by the ERP task.

Visual paired-comparison task. Parents wore a sleep mask throughout the task so that they did not influence the infant’s looking behavior. The VPC task was programmed in E-Prime 3.0 (Psychology Software Tools, 2016) and consisted of four trials that each lasted for a fixed length of 10 s. An attention-grabbing stimulus (a bouncing ball) appeared in the center of the screen between trials to redirect the infant’s attention to the screen. The experimenter initiated each trial when the infant was looking at the screen. Each trial consisted of one female face expressing happiness on one side of the computer screen and fear on the other. From a viewing distance of 60 cm, each face image subtended approximately 19 × 24 degrees of visual angle. The first pair of trials showed the same identity, with the left/right position of the happy and fearful expressions reversed from trial 1 to trial 2. The second pair of trials showed a second identity, with the left/right position of the happy and fearful expressions reversed from trial 3 to trial 4. Which identity was shown in the first versus second pair of trials, and the left versus right appearance of the fearful face were counterbalanced across infants (Fig. 1). The experimenter and a research assistant blind to the left-right position of the fearful and happy expressions coded infant looking time offline. Trials were coded frame-by-frame at 30 frames/second using DataVyu (v1.2). Inter-observer reliability was r = .88 based on 20 % of total infant looking time data.
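As a rough illustration of how a visual angle relates to on-screen stimulus size, the sketch below applies the standard relation size = 2 · d · tan(θ/2). Only the 60 cm viewing distance and the approximate 19 × 24 degree extent come from the text; the function names are hypothetical, and the resulting physical dimensions are derived here for illustration and are not reported in the study.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_from_size(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by `size_cm` at `distance_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


VIEWING_DISTANCE_CM = 60.0  # from the text

# Approximate VPC stimulus extent from the text: 19 x 24 degrees.
width_cm = size_from_visual_angle(19.0, VIEWING_DISTANCE_CM)
height_cm = size_from_visual_angle(24.0, VIEWING_DISTANCE_CM)
print(f"Implied image size: {width_cm:.1f} x {height_cm:.1f} cm")
```

Under these assumptions, each VPC face image would measure roughly 20 × 25.5 cm on screen.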

Fig. 1.

Visual paired-comparison task. The task consisted of four fixed-length 10 s trials. A happy and a fearful face appeared side-by-side on each trial; the first identity was presented on the first pair of trials and the second identity on the second pair of trials. The order of identity presentation was counterbalanced, as well as the left versus right appearance of the fearful face. A bouncing ball (attention-grabbing stimulus) appeared in the center of the screen between trials to redirect infants’ attention to the screen.

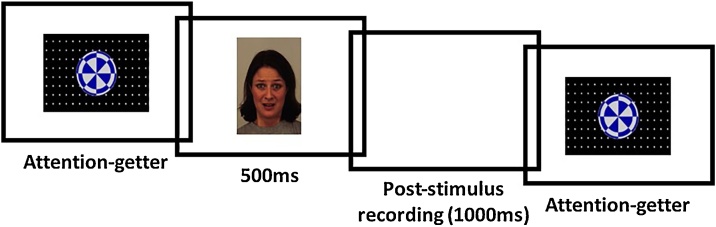

Event-related potential task. The ERP task was programmed in E-Prime (Psychology Software Tools, 2016). During EEG recording, infants saw 12 unique identities each expressing happy and fearful facial expressions. Stimuli were presented one at a time in random order at a viewing distance of 60 cm, such that each face image subtended approximately 15 × 20 degrees of visual angle. Each trial consisted of a 200 ms baseline, 500 ms stimulus presentation, and 1000 ms inter-trial interval during which a blank white screen was presented. An attention-grabbing stimulus appeared in the center of the screen between trials to redirect the infant’s attention to the screen (Fig. 2). The experimenter only initiated a trial when the infant was looking at the screen. Trials continued until infants became too inattentive or fussy, or until they had viewed a maximum of 240 trials.

Fig. 2.

ERP Task. Infants saw happy and fearful faces presented one at a time in random order. Each trial consisted of a 200 ms baseline, a 500 ms stimulus presentation, and a 1000 ms inter-trial interval during which a blank white screen was presented. A bouncing ball (attention-grabbing stimulus) appeared in the center of the screen between trials to redirect infants’ attention to the screen.

Electrophysiological recording and processing. Continuous EEG was recorded using a 128-channel Geodesic Sensor Net (Electrical Geodesics Inc.), connected to a high-input-impedance amplifier (Net Amps 300). The analog EEG signal was referenced online to vertex (Cz), digitized at a sampling rate of 500 Hz, band-pass filtered from 0.1−100 Hz, and stored on the hard drive of a Macintosh computer to be processed offline. Impedances for each electrode were at or less than 40 kΩ at the beginning of each recording.

Net Station 4.2 (Electrical Geodesics Inc.) was used to process the data offline. Continuous EEG was band-pass filtered from 0.1−30 Hz and segmented into 1200 ms epochs (200 ms baseline, 500 ms stimulus presentation, 500 ms post-stimulus recording). Epochs were baseline corrected to the mean of the 200 ms baseline period. Each trial was visually inspected for eye blinks, eye movements, and bad channels. Trials were excluded from analysis if more than 13 of 128 channels (∼10 %) were marked bad (see Table 1 for the average number and range of good trials per stimulus category). Bad channels were replaced using spherical spline interpolation in trials containing 10 % or fewer bad channels. Individual waveform averages for each participant were generated for each of the stimulus categories and re-referenced to the average reference. Grand means were generated by averaging together the individual waveform averages and inspected to identify time windows and regions that captured the components of interest. The Nc was examined from 380−650 ms post-stimulus onset at frontocentral regions over the left hemisphere (electrodes 13, 19, 20, 28, 29, 30), midline (electrodes 5, 6, 7, 11, 12, 106, 129), and right hemisphere (electrodes 4, 105, 111, 112, 117, 118). The N290 was examined from 280−450 ms post-stimulus onset and the P400 was examined from 380−600 ms post-stimulus onset at lateral occipital-temporal scalp regions over the left (electrodes 58, 59, 64, 65) and right (electrodes 90, 91, 95, 96) hemispheres.
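The epoch-level steps described above (baseline correction, the bad-channel exclusion rule, and mean amplitude within a component window) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the Net Station pipeline the authors used: the function names are hypothetical, the epoch is a single-channel list of samples, the analysis window is treated as half-open, and the toy data are invented.

```python
from statistics import fmean

SAMPLING_RATE_HZ = 500   # from the text
BASELINE_MS = 200        # from the text: epochs start 200 ms before stimulus onset
MAX_BAD_CHANNELS = 13    # from the text: more than 13 bad channels excludes a trial


def ms_to_sample(t_ms: float) -> int:
    """Sample index for a latency given relative to stimulus onset."""
    return round((t_ms + BASELINE_MS) * SAMPLING_RATE_HZ / 1000)


def baseline_correct(epoch: list[float]) -> list[float]:
    """Subtract the mean of the pre-stimulus baseline from every sample."""
    baseline = fmean(epoch[:ms_to_sample(0)])
    return [value - baseline for value in epoch]


def mean_amplitude(epoch: list[float], start_ms: float, end_ms: float) -> float:
    """Mean amplitude over the half-open window [start_ms, end_ms)."""
    return fmean(epoch[ms_to_sample(start_ms):ms_to_sample(end_ms)])


def keep_trial(n_bad_channels: int) -> bool:
    """Apply the exclusion rule: keep trials with at most 13 bad channels."""
    return n_bad_channels <= MAX_BAD_CHANNELS


# Hypothetical 1200 ms epoch (600 samples): flat baseline, then a 2 uV step.
toy_epoch = [1.0] * 100 + [3.0] * 500
corrected = baseline_correct(toy_epoch)
n290_window_mean = mean_amplitude(corrected, 280, 450)  # N290 window from the text
print(n290_window_mean)
```

In a real analysis these steps would be applied per channel and per trial before averaging within each electrode cluster; the sketch only makes the arithmetic of the time windows concrete.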

Table 1.

Average numbers of good trials by emotion.

| | M (SD) | Range |
|---|---|---|
| Fearful | 24.94 (10.47) | 9-51 |
| Happy | 26.27 (11.65) | 10-58 |

3. Results

3.1. Behavior

Proportion looking time to the fearful facial expression was calculated for each trial (looking time to fearful/[looking time to fearful + looking time to happy]) and averaged across the four trials. A one-sample t-test comparing proportion looking time to the fearful expression against chance (50 %) was conducted. Three-month-olds demonstrated a significant looking preference for fearful facial expressions (M = 0.56, SD = 0.14, t(59) = 3.234, p = 0.002, two-tailed, d = 0.42). To control for the possibility that a few infants with large fear preferences might drive 3-month-olds’ preference for the fearful expression, we examined whether the observed proportion of infants showing an attentional preference for fearful expressions significantly differed from chance. Of 60 infants, 39 showed a preference for the fearful expression, which was significantly greater than expected by chance (Binomial probability, p = 0.027, two-tailed).
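The behavioral measures described above can be sketched as follows: a proportion-looking score, a one-sample t statistic with Cohen's d against chance (0.5), and an exact two-tailed binomial test. This is an illustrative sketch rather than the authors' analysis code; the function names and the toy scores are hypothetical, and only the 39-of-60 count comes from the text.

```python
from math import comb, sqrt
from statistics import fmean, stdev


def proportion_fearful(look_fearful_s: float, look_happy_s: float) -> float:
    """Looking time to fearful / (looking time to fearful + happy)."""
    return look_fearful_s / (look_fearful_s + look_happy_s)


def one_sample_t(values: list[float], mu: float = 0.5) -> tuple[float, float]:
    """Return (t statistic, Cohen's d) for a one-sample test against mu."""
    n = len(values)
    mean, sd = fmean(values), stdev(values)
    return (mean - mu) / (sd / sqrt(n)), (mean - mu) / sd


def binomial_two_tailed(successes: int, n: int, p: float = 0.5) -> float:
    """Exact two-tailed p: sum of outcomes no more likely than the observed one."""
    pmf = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
    cutoff = pmf[successes] * (1 + 1e-9)  # tolerance for floating-point ties
    return sum(q for q in pmf if q <= cutoff)


# Hypothetical per-infant scores (each already averaged over four trials).
toy_scores = [0.6, 0.5, 0.7, 0.6]
t_stat, cohens_d = one_sample_t(toy_scores)

# Count reported in the text: 39 of 60 infants preferred the fearful face.
p_binomial = binomial_two_tailed(39, 60)
print(f"t = {t_stat:.3f}, d = {cohens_d:.3f}, binomial p = {p_binomial:.3f}")
```

With 39 of 60 infants, the exact test gives a two-tailed p of about 0.027, in line with the reported value. The t statistic and d printed here describe the toy scores only, not the study data.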

3.2. ERP

For each component of interest (Nc, N290, P400), mean amplitude was extracted and analyzed using repeated-measures ANOVAs with emotion (happiness, fear) and hemisphere (left, midline, right for Nc; left, right for N290 and P400) as within-subject factors. Paired sample t-tests, Bonferroni corrected for multiple comparisons, were conducted for significant main effects revealed by the ANOVA.
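The partial eta squared (ηp2) values reported for these ANOVAs can be recovered from each F statistic and its degrees of freedom using the standard identity ηp2 = (F · df_effect) / (F · df_effect + df_error). The sketch below is a convenience check, not part of the authors' analysis; the function name is hypothetical, and the inputs are F values reported in this section.

```python
def partial_eta_squared(f_value: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values for the main effect of emotion as reported in the text, each F(1, 35).
reported_f = {"Nc": 0.568, "N290": 5.879, "P400": 3.2}
for component, f_value in reported_f.items():
    print(component, round(partial_eta_squared(f_value, 1, 35), 3))
```

Rounded to three decimals, these reproduce the reported effect sizes for the main effect of emotion (0.016, 0.144, and 0.084).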

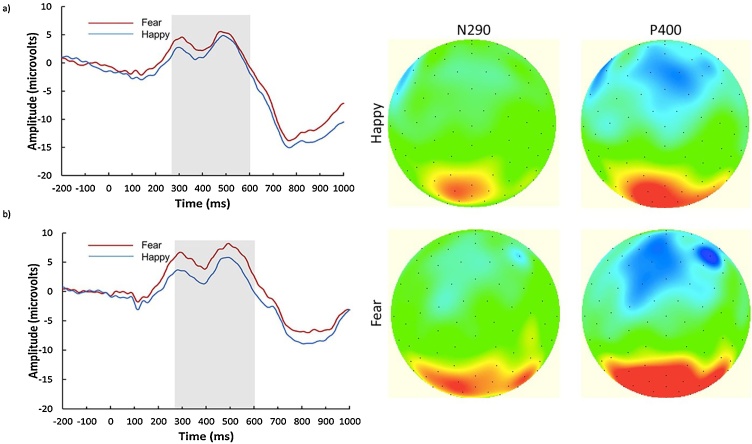

Nc. For mean amplitude, there were no main effects of emotion (F(1, 35) = 0.568, p = 0.456, ηp2 = 0.016) or hemisphere (F(1.311, 45.893) = 1.257, p = 0.281, ηp2 = 0.035; Greenhouse-Geisser corrected degrees of freedom), and no hemisphere x emotion interaction (F(2, 70) = 0.686, p = 0.507, ηp2 = 0.019). Fig. 3a, b, and c show the grand averaged ERP waveforms and topographies for the Nc.

Fig. 3.

Grand averaged ERP waveforms over frontocentral electrode sites and topographies. The grand average waveforms are displayed for happy and fearful faces over the left hemisphere (a), midline (b), and right hemisphere (c). The x-axis represents latency in milliseconds and the y-axis represents amplitude in microvolts. Scalp topographies are plotted for the middle of the time window for the Nc (515 ms).

N290. For mean amplitude, there was a significant main effect of emotion (F(1, 35) = 5.879, p = 0.021, ηp2 = 0.144). Fearful faces generated a significantly smaller (less negative) N290 (M = 4.33 μV, SD = 5.43) than happy faces (M = 2.22 μV, SD = 6.04; pcorr = 0.021). No main effect of hemisphere (F(1, 35) = 2.648, p = 0.113, ηp2 = 0.07), and no emotion x hemisphere interaction (F(1, 35) = 0.484, p = 0.491, ηp2 = 0.014) were found.

P400. For mean amplitude, the main effect of emotion was not significant (F(1, 35) = 3.2, p = 0.082, ηp2 = 0.084), although there was a marginal trend for fearful faces to generate a larger P400 amplitude (M = 4.71 μV, SD = 6.04) than happy faces (M = 3.05 μV, SD = 6.49; pcorr = 0.082). No main effect of hemisphere (F(1, 35) = 2.834, p = 0.101, ηp2 = 0.075), and no emotion x hemisphere interaction (F(1, 35) = 0.62, p = 0.436, ηp2 = 0.017) were found. Fig. 4a and b show the grand averaged ERP waveforms and topographies for the N290 and P400.

Fig. 4.

Grand averaged ERP waveforms over occipitotemporal electrode sites. The grand averaged waveforms are displayed for happy and fearful faces over the left hemisphere (a), and right hemisphere (b). The x-axis represents latency in milliseconds and the y-axis represents amplitude in microvolts. Scalp topographies are plotted for the middle of the time windows for the N290 (365 ms) and the P400 (490 ms).

Exploratory Analysis. Upon visual inspection of the waveforms and topographical maps over occipitotemporal regions, we noted that the response to happy and fearful faces diverged even earlier than the time window of the N290 and continued through the time window of the P400. To capture this persistent difference in response that extended over the entire time window of the N290 and P400 components, we conducted an exploratory analysis on the mean amplitude from 250 to 600 ms. Here, we found a significant main effect of emotion (F(1, 35) = 4.687, p = 0.037, ηp2 = 0.118). The mean amplitude across this broad time window was significantly greater for fearful faces (M = 4.65 μV, SD = 5.35) than happy faces (M = 2.76 μV, SD = 5.9; pcorr = 0.037). No main effect of hemisphere (F(1, 35) = 3.614, p = 0.066, ηp2 = 0.094), and no emotion x hemisphere interaction (F(1, 35) = 0.562, p = 0.458, ηp2 = 0.016) were found.

4. Discussion

In the present study, 3-month-old infants demonstrated differential responses to fearful and happy faces at both the behavioral and neural level. Consistent with our hypotheses, infants displayed differential neural processing of happy and fearful faces over occipitotemporal regions that have been implicated in face perception, but not over fronto-central regions that have been implicated in attentional processing. Infants also showed significantly longer looking to fearful than happy faces in the VPC task, suggesting increased visual attention to fearful faces, a finding that was inconsistent with our predictions. These results are discussed in turn.

Infants displayed a smaller N290 and marginally larger, although not significant, P400 response to fearful compared to happy facial expressions. Although the majority of studies examining ERP responses to emotional expressions focus on the Nc, our findings are consistent with the small number of studies that have examined the ERP response over occipitotemporal regions as well (Leppänen et al., 2007; Yrttiaho et al., 2014). In particular, our exploratory analysis examining a broad latency window is consistent with a study showing an increase in positivity to fearful faces over a widespread latency corresponding to the N290 and early P400 in 5-month-old infants (Yrttiaho et al., 2014). In contrast to our findings over occipitotemporal regions, we found no difference in Nc response to fearful and happy faces. In older infants (e.g., 7 months) the Nc is reliably larger for fearful than happy faces (Leppänen et al., 2007; Peltola et al., 2009a), but the few previous studies that have examined the Nc response to emotional expressions in infants younger than 7 months also find no differentiation at the Nc (e.g., Hoehl and Striano, 2010; Peltola et al., 2009a). As a whole, then, our results are consistent with previous ERP studies of emotional face processing. They also extend this literature by demonstrating differential responding to fearful and happy expressions over occipitotemporal regions as early as 3 months of age.

Infants in the current study also demonstrated an attentional bias for fearful faces in the VPC task. This finding was unexpected and, in fact, inconsistent with studies using similar techniques with young infants. Peltola and colleagues (Peltola et al., 2009a) found that while 7-month-olds displayed both a visual attentional bias and a differential Nc response for fearful compared to happy expressions, 5-month-olds did not. More recently, Heck et al. (2016) found an attentional bias for dynamic fearful faces in 5-month-olds, but not 3.5-month-olds, and Leppänen and colleagues (Leppänen et al., 2018) replicated a looking preference for fearful over happy static faces in 7- and 12-month-olds, but not 5-month-olds. It is unclear what underlies the discrepancy between these reports and the current findings, although it is possible that task design is a factor (e.g., visual preference task in current study vs. attention overlap task in Heck et al., 2016). Additionally, it should be noted that the attentional preference observed for fearful faces at 3 months of age is not yet completely robust, given that not all infants displayed a fear preference. Thirty-nine of 60 infants displayed the preference; although this proportion was significantly greater than expected by chance, it is by no means an overwhelming majority. Thus, the attentional preference for fearful faces may begin to emerge by 3 months of age and become increasingly robust into the second half of the first year.

There is also the intriguing possibility that the development of increased visual attention to fearful faces does not show a linear trajectory across the first year. Non-linear development is common across multiple domains of infant development (Thelen, 2005), including face perception (Cashon et al., 2013; Cashon and Cohen, 2004; Cohen and Cashon, 2001). For example, 4- and 7-month-old infants display holistic face processing, but 6-month-old infants do not, suggesting a non-linear trajectory of specialized face processing in infancy (Cashon and Cohen, 2004). This U-shaped pattern of development for holistic face processing is also shown to be associated with sitting ability at this same age (Cashon et al., 2013). Although several studies have demonstrated no visual preference for static fearful faces in 5-month-old infants, no one has yet investigated whether 3-month-olds show a visual preference for static fearful faces in a traditional VPC task. Perhaps the emergence of this attentional bias follows a U-shaped pattern, whereby it initially emerges around 3 months, disappears by 5 months, then re-emerges around 7 months of age. Future longitudinal studies investigating the development of the attentional bias for fearful faces from 3 to 7 months would be necessary to evaluate this possibility.

Considering our ERP and behavioral findings in concert reveals an apparent contradiction: Infants displayed no difference in Nc response but a visual attentional preference for fearful faces. Previous research seems to implicitly assume that the larger Nc amplitude and attentional bias for fear reflect the same underlying mechanism; indeed, source localization of the Nc finds generators in the anterior cingulate cortex (ACC) and prefrontal cortex, areas implicated in attentional processes (Reynolds and Richards, 2005). One possible explanation for the lack of Nc response to fearful faces observed in the current study may be that functional connections among frontal regions and subcortical limbic structures (i.e., amygdala) are not yet mature by 3 months (Leppänen and Nelson, 2009; Peltola et al., 2009a). In support of this explanation, it has been proposed that early visual preferences may be mediated by an early developing attention system consisting of a spatial orienting network (involving the parietal cortex and parts of the brainstem) and object recognition network (involving connections among primary visual and inferior temporal cortices), whereas aspects of attentional control are mediated by a later developing attention system involving primarily frontal regions (i.e., frontal eye fields, ACC, and prefrontal cortex) that emerges in the latter half of the first year (Colombo, 2001; Ruff and Rothbart, 1996). Given our finding of a visual preference early in the first year, yet no difference in Nc responsivity to fearful relative to happy faces, it is possible that sensitivity to these expressions at 3 months may reflect a more preliminary form of visual attention that does not yet fully recruit immature frontal cortical regions, but does reflect activity in occipitotemporal regions involved in object recognition.

Taken as a whole, the current findings seem consistent with the distinction between perceptual differentiation of fearful and happy faces in younger infants, and the attentional bias for fear that emerges in older infants. Consistent with Bayet et al. (2017), who found better detection of fearful than happy faces presented in noise, we demonstrate differential processing of happy and fearful faces over ERP components that have been implicated in perceptual processing (i.e., face perception), but not ERP components that have been implicated in attentional processes. Although the visual preference for fearful faces in the VPC task was surprising at first glance, as detailed above it is possible that different cortical networks mediate early-emerging versus later visual preferences. It has been proposed that early perceptual sensitivity to fearful faces may serve as a mechanism to promote robust detection of threat-relevant stimuli later in the first year, and serves to facilitate fear learning even with minimal exposure to such stimuli (Bayet et al., 2017).

The current study has a number of limitations that should be considered when interpreting the results. Infants completed the VPC and ERP tasks in a fixed order: All infants completed the VPC task followed by the ERP task. We chose to order the tasks in this way because the VPC task is significantly shorter and less demanding than the ERP task, making it more likely that infants would complete at least one task successfully. This raises the possibility, however, that responses in the ERP task could have been influenced by infants’ increased familiarity with happy and fearful faces following the VPC task. If anything, though, this increased familiarity with both emotions should have reduced any differential responding to happy and fearful faces; thus, we can remain confident in the effects we observed. A larger concern, one that plagues infant ERP research as a whole (Stets et al., 2012), is the number of infants who were excluded from the ERP analysis because they had too few good trials in each condition, primarily as a result of artifact caused by muscle or eye movements. Approximately 50% of infants were excluded from our ERP analysis in the current study. Given the possibility that this high exclusion rate unintentionally biased our sample, it is important to consider methods for retaining as much infant data as possible. Unfortunately, several techniques that are commonly used to eliminate artifact successfully in adult data, such as independent component analysis (ICA), do not work well with infant data (see Fujioka et al., 2011, for a number of reasons why this is the case). However, we acknowledge that alternative methods such as recording eye movements (DeBoer et al., 2013) and applying newer algorithms for specifically identifying artifact in infant ERP data (i.e., artifact blocking, independent channel rejection; see Fujioka et al., 2011) could be applied in addition to visual inspection to rescue more infant data.

The most important limitation of the current study is the use of only two emotional expressions (i.e., happiness and fear). This leaves us unable to determine whether the effects we observed are specific to fearful faces, or may have been observed in response to any negative or unfamiliar emotional expression (e.g., angry faces, neutral faces) contrasted with happy expressions. In previous studies with older infants, a “control” condition has usually been included (e.g., neutral faces, faces with a novel expression; Peltola et al., 2009b, 2008), allowing the researchers to conclude that the attentional bias is specific to fearful faces. Here, we cannot draw the same conclusion. We argue, however, that the present findings are still an important addition to the literature; in particular, they extend recent behavioral (Bayet et al., 2017) and neural (Yrttiaho et al., 2014) findings that suggest a potential perceptual precursor in young infants to the attentional bias for fearful faces that emerges in the second half of the first year.

Relatedly, it is also difficult to determine what exactly underlies the differential response to fearful faces: that is, what about fearful faces drives increased visual attention or differential neural responding? In older infants, it is traditionally interpreted as a developing appreciation of the signal value of fear (and the signal value of threatening stimuli more broadly; Leppänen and Nelson, 2012); in younger infants, however, it is less clear what may drive differential responding. Johnson et al. (2015) suggest that fearful faces are a “super-stimulus” in that salient features (e.g., eyes) are exaggerated. Thus, differential responding to fearful faces may reflect more general face perception processes, rather than emotion recognition processes per se. Regardless of the underlying reason, however, the differential perceptual processing of fearful and happy faces may lay the foundation for the different attentional responding to these emotional signals later in the first year.

In conclusion, our study is the first to report both behavioral and electrophysiological evidence demonstrating that differential responding to fearful (versus happy) facial expressions is present by 3 months of age. This differential responding, likely driven by perceptual mechanisms, may presage the emergence of the attentional bias for fearful faces that develops in older infants. Recent research has demonstrated that individual differences in the attentional bias to threat are both an outcome of individual differences in infant temperament (Pérez-Edgar et al., 2017) and emotional environment (Morales et al., 2017), and a predictor of individual differences in later socioemotional outcomes (e.g., attachment security; Peltola et al., 2015). Thus, understanding the ontogeny of this phenomenon is crucial to determining when these meaningful individual differences begin to emerge.

Declaration of Competing Interest

The authors have no conflicts of interest to declare.

Acknowledgements

We thank Nicole Soriano and Stephanie Wong for their help with data collection and data coding. In particular, we thank the infants and parents who gave generously of their time to participate in this study. This research was supported by grants from the Social Sciences and Humanities Research Council of Canada (Ref #: 435-2017-1438) and the Ontario Ministry of Research, Innovation, and Science (Ref #: ER15-11-162) awarded to M. C. Moulson.

References

- Barrera M.E., Maurer D. The perception of facial expressions by the three-month-old. Child Dev. 1981;52:203–206.

- Bayet L., Quinn P.C., Tanaka J.W., Lee K., Gentaz É., Pascalis O. Face gender influences the looking preference for smiling expressions in 3.5-month-old human infants. PLoS One. 2015;10 doi: 10.1371/journal.pone.0129812.

- Bayet L., Quinn P.C., Laboissière R., Caldara R., Lee K., Pascalis O. Fearful but not happy expressions boost face detection in human infants. Proc. Biol. Sci. 2017;284 doi: 10.1098/rspb.2017.1054.

- Bornstein M.H., Arterberry M.E. Recognition, discrimination and categorization of smiling by 5-month-old infants. Dev. Sci. 2003;6:585–599.

- Cashon C.H., Cohen L.B. Beyond U-shaped development in infants’ processing of faces: an information-processing account. J. Cogn. Dev. 2004

- Cashon C.H., Ha O.R., Allen C.L., Barna A.C. A U-shaped relation between sitting ability and upright face processing in infants. Child Dev. 2013 doi: 10.1111/cdev.12024.

- Cohen L.B., Cashon C.H. Do 7-month-old infants process independent features or facial configurations? Infant Child Dev. 2001

- Colombo J. The development of visual attention in infancy. Annu. Rev. Psychol. 2001;52:337–367. doi: 10.1146/annurev.psych.52.1.337.

- Courchesne E., Ganz L., Norcia A.M. Event-related brain potentials to human faces in infants. Child Dev. 1981;52:804.

- de Haan M., Johnson M.H., Halit H. Development of face-sensitive event-related potentials during infancy: a review. Int. J. Psychophysiol. 2003;51:45–58. doi: 10.1016/s0167-8760(03)00152-1.

- DeBoer T., Scott L.S., Nelson C.A. Infant EEG and Event-Related Potentials. Psychology Press; 2013. Methods for acquiring and analyzing infant event-related potentials.

- DeLoache J.S., LoBue V. The narrow fellow in the grass: human infants associate snakes and fear. Dev. Sci. 2009;12:201–207. doi: 10.1111/j.1467-7687.2008.00753.x.

- Farroni T., Menon E., Rigato S., Johnson M.H. The perception of facial expressions in newborns. Eur. J. Dev. Psychol. 2007;4:2–13. doi: 10.1080/17405620601046832.

- Field A.P., Lester K.J. Is there room for “Development” in developmental models of information processing biases to threat in children and adolescents? Clin. Child Fam. Psychol. Rev. 2010 doi: 10.1007/s10567-010-0078-8.

- Field T.M., Woodson R., Greenberg R., Cohen D. Discrimination and imitation of facial expressions by neonates. Science. 1982;218:179–181. doi: 10.1126/science.7123230.

- Flykt A. Visual search with biological threat stimuli: accuracy, reaction times, and heart rate changes. Emotion. 2005;5:349–353. doi: 10.1037/1528-3542.5.3.349.

- Flykt A. Preparedness for action: responding to the snake in the grass. Am. J. Psychol. 2006;119:29–43.

- Fujioka T., Mourad N., He C., Trainor L.J. Comparison of artifact correction methods for infant EEG applied to extraction of event-related potential signals. Clin. Neurophysiol. 2011 doi: 10.1016/j.clinph.2010.04.036.

- Goeleven E., De Raedt R., Leyman L., Verschuere B. The Karolinska Directed Emotional Faces: a validation study. Cogn. Emot. 2008;22:1094–1118.

- Guy M.W., Zieber N., Richards J.E. The cortical development of specialized face processing in infancy. Child Dev. 2016 doi: 10.1111/cdev.12543.

- Heck A., Hock A., White H., Jubran R., Bhatt R.S. The development of attention to dynamic facial emotions. J. Exp. Child Psychol. 2016;147:100–110. doi: 10.1016/j.jecp.2016.03.005.

- Hoehl S., Striano T. The development of emotional face and eye gaze processing. Dev. Sci. 2010;13:813–825. doi: 10.1111/j.1467-7687.2009.00944.x.

- Johnson M.H., Senju A., Tomalski P. The two-process theory of face processing: modifications based on two decades of data from infants and adults. Neurosci. Biobehav. Rev. 2015 doi: 10.1016/j.neubiorev.2014.10.009.

- Kahana-Kalman R., Walker-Andrews A.S. The role of person familiarity in young infants’ perception of emotional expressions. Child Dev. 2001 doi: 10.1111/1467-8624.00283.

- Kaneshige T., Haryu E. Categorization and understanding of facial expressions in 4-month-old infants. Psychol. Res. 2015

- Kobiella A., Grossmann T., Reid V.M., Striano T. The discrimination of angry and fearful facial expressions in 7-month-old infants: an event-related potential study. Cogn. Emot. 2008

- Kotsoni E., de Haan M., Johnson M.H. Categorical perception of facial expressions by 7-month-old infants. Perception. 2001 doi: 10.1068/p3155.

- LaBarbera J.D., Izard C.E., Vietze P., Parisi S.A. Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Dev. 1976;47:535–538.

- Leppänen J.M., Nelson C.A. Tuning the developing brain to social signals of emotions. Nat. Rev. Neurosci. 2009;10:37–47. doi: 10.1038/nrn2554.

- Leppänen J.M., Moulson M.C., Vogel-Farley V.K., Nelson C.A. An ERP study of emotional face processing in the adult and infant brain. Child Dev. 2007;78:232–245. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen J.M., Nelson C.A. Early development of fear processing. Curr. Dir. Psychol. Sci. 2012;21:200–204.

- Leppänen J.M., Peltola M.J., Mäntymaa M., Koivuluoma M., Salminen A., Puura K. Cardiac and behavioral evidence for emotional influences on attention in 7-month-old infants. Int. J. Behav. Dev. 2010;34:547–553.

- Leppänen J.M., Cataldo J.K., Bosquet Enlow M., Nelson C.A. Early development of attention to threat-related facial expressions. PLoS One. 2018;13 doi: 10.1371/journal.pone.0197424.

- LoBue V., DeLoache J.S. Detecting the snake in the grass: attention to fear-relevant stimuli by adults and young children. Psychol. Sci. 2008;19:284. doi: 10.1111/j.1467-9280.2008.02081.x.

- LoBue V., Rakison D.H. What we fear most: a developmental advantage for threat-relevant stimuli. Dev. Rev. 2013;33:285–303.

- LoBue V., Rakison D.H., DeLoache J.S. Threat perception across the life span: evidence for multiple converging pathways. Curr. Dir. Psychol. Sci. 2010;19:375–379.

- Ludemann P.M. Generalized discrimination of positive facial expressions by seven- and ten-month-old infants. Child Dev. 1991

- Ludemann P.M., Nelson C.A. Categorical representation of facial expressions by 7-month-old infants. Dev. Psychol. 1988;24:492–501.

- Lundqvist D., Flykt A., Öhman A. 1998. The Karolinska Directed Emotional Faces (KDEF)

- Montague D.P., Walker-Andrews A.S. Peekaboo: a new look at infants’ perception of emotion expressions. Dev. Psychol. 2001

- Montague D.P.F., Walker-Andrews A.S. Mothers, fathers, and infants: the role of person familiarity and parental involvement in infants’ perception of emotion expressions. Child Dev. 2002 doi: 10.1111/1467-8624.00475.

- Morales S., Brown K.M., Taber-Thomas B.C., LoBue V., Buss K.A., Pérez-Edgar K.E. Maternal anxiety predicts attentional bias towards threat in infancy. Emotion. 2017;17:874–883. doi: 10.1037/emo0000275.

- Nelson C.A., Dolgin K.G. The generalized discrimination of facial expressions by seven-month-old infants. Child Dev. 1985;56:58–61.

- Nelson C.A., Morse P.A., Leavitt L.A. Recognition of facial expressions by seven-month-old infants. Child Dev. 1979

- Öhman A., Flykt A., Esteves F. Emotion drives attention: detecting the snake in the grass. J. Exp. Psychol. Gen. 2001;130:466–478. doi: 10.1037/0096-3445.130.3.466.

- Peltola M.J., Leppänen J.M., Palokangas T., Hietanen J.K. Fearful faces modulate looking duration and attention disengagement in 7-month-old infants. Dev. Sci. 2008;11:60–68. doi: 10.1111/j.1467-7687.2007.00659.x.

- Peltola M.J., Leppänen J.M., Mäki S., Hietanen J.K. Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Soc. Cogn. Affect. Neurosci. 2009;4:134–142. doi: 10.1093/scan/nsn046.

- Peltola M.J., Leppänen J.M., Vogel-Farley V.K., Hietanen J.K., Nelson C.A. Fearful faces but not fearful eyes alone delay attention disengagement in 7-month-old infants. Emotion. 2009;9:560–565. doi: 10.1037/a0015806.

- Peltola M.J., Leppänen J.M., Hietanen J.K. Enhanced cardiac and attentional responding to fearful faces in 7-month-old infants. Psychophysiology. 2011;48:1291–1298. doi: 10.1111/j.1469-8986.2011.01188.x.

- Peltola M.J., Hietanen J.K., Forssman L., Leppänen J.M. The emergence and stability of the attentional bias to fearful faces in infancy. Infancy. 2013;18:905–926.

- Peltola M.J., Forssman L., Puura K., van Ijzendoorn M.H., Leppänen J.M. Attention to faces expressing negative emotion at 7 months predicts attachment security at 14 months. Child Dev. 2015;86:1321–1332. doi: 10.1111/cdev.12380.

- Pérez-Edgar K., Morales S., LoBue V., Taber-Thomas B.C., Allen E.K., Brown K.M., Buss K.A. The impact of negative affect on attention patterns to threat across the first 2 years of life. Dev. Psychol. 2017;53:2219–2232. doi: 10.1037/dev0000408.

- Psychology Software Tools. 2016. E-Prime.

- Reynolds G.D., Richards J.E. Familiarization, attention, and recognition memory in infancy: an event-related potential and cortical source localization study. Dev. Psychol. 2005;41:598–615. doi: 10.1037/0012-1649.41.4.598.

- Ruff H.A., Rothbart M.K. Oxford University Press; New York: 1996. Attention in Early Development: Themes and Variations.

- Safar K., Moulson M.C. Recognizing facial expressions of emotion in infancy: a replication and extension. Dev. Psychobiol. 2017;59 doi: 10.1002/dev.21515.

- Steele H., Steele M., Croft C. Early attachment predicts emotion recognition at 6 and 11 years old. Attach. Hum. Dev. 2008 doi: 10.1080/14616730802461409.

- Stets M., Stahl D., Reid V.M. A meta-analysis investigating factors underlying attrition rates in infant ERP studies. Dev. Neuropsychol. 2012;37:226–252. doi: 10.1080/87565641.2012.654867.

- Thelen E. Dynamic systems theory and the complexity of change. Psychoanal. Dialogues. 2005;15:255–283.

- Thompson R.A. Emotional regulation and emotional development. Educ. Psychol. Rev. 1991

- Tronick E.Z. Emotions and emotional communication in infants. Am. Psychol. 1989 doi: 10.1037//0003-066x.44.2.112.

- Walker-Andrews A.S., Dickson L.R. The Development of Social Cognition. Psychology Press; 1997. Infants’ understanding of affect.

- Walker-Andrews A.S., Krogh-Jespersen S., Mayhew E.M.Y., Coffield C.N. Young infants’ generalization of emotional expressions: effects of familiarity. Emotion. 2011 doi: 10.1037/a0024435.

- Woodman G.F. A brief introduction to the use of event-related potentials in studies of perception and attention. Atten. Percept. Psychophys. 2010 doi: 10.3758/APP.72.8.2031.

- Young-Browne G., Rosenfeld H.M., Horowitz F.D. Infant discrimination of facial expressions. Child Dev. 1977

- Yrttiaho S., Forssman L., Kaatiala J., Leppänen J.M. Developmental precursors of social brain networks: the emergence of attentional and cortical sensitivity to facial expressions. PLoS One. 2014;9:1–10. doi: 10.1371/journal.pone.0100811.