Abstract

The reliable detection of environmental molecules in the presence of noise is an important cellular function, yet the underlying computational mechanisms are not well understood. We introduce a model of two interacting sensors which allows for the principled exploration of signal statistics, cooperation strategies and the role of energy consumption in optimal sensing, quantified through the mutual information between the signal and the sensors. Here we report that in general the optimal sensing strategy depends both on the noise level and the statistics of the signals. For joint, correlated signals, energy consuming (nonequilibrium), asymmetric couplings result in maximum information gain in the low-noise, high-signal-correlation limit. Surprisingly we also find that energy consumption is not always required for optimal sensing. We generalise our model to incorporate time integration of the sensor state by a population of readout molecules, and demonstrate that sensor interaction and energy consumption remain important for optimal sensing.

Subject terms: Biophysics; Biological physics; Statistical physics, thermodynamics and nonlinear dynamics

Cells exhibit exceptional chemical sensitivity, yet how they achieve it is not fully understood. Here the authors consider the mutual information between signals and two coupled sensors as a proxy for sensing performance and show how its optimisation depends on noise level and signal statistics.

Introduction

Cells are surrounded by a cocktail of chemicals, which carry important information, such as the number of nearby cells, the presence of foreign material, and the location of food sources and toxins. The ability to reliably measure chemical concentrations is thus essential to cellular function. In fact, cells can exhibit extremely high sensitivity in chemical sensing: for example, our immune response can be triggered by only one foreign ligand1 and Escherichia coli chemotaxis responds to nanomolar changes in chemical concentration2. But how does cellular machinery achieve such sensitivity?

One strategy is to consume energy: molecular motors metabolise ATP to drive cell movement and cell division, and kinetic proofreading employs nonequilibrium biochemical networks to increase enzyme–substrate specificity3. Indeed, the role of energy consumption in enhancing the sensitivity of chemosensing is the subject of several studies4–8. However, whether nonequilibrium sensing can supersede equilibrium limits to performance is unknown9,10.

Interactions also directly influence sensitivity, and receptor cooperativity is a biologically plausible strategy for suppressing noise11–13. These results, however, apply in steady state11, and it is independent receptors that maximise the signal-to-noise ratio under a finite integration time14,15, even when receptor interactions are coupled to energy consumption16. More generally, a trade-off exists between noise reduction and available resources, such as integration time and the number of readout molecules6,7. It is therefore important to examine how sensor circuit sensitivity depends on the level of noise and the structure of the signals without a priori fixing the interactions or the energy consumption.

We introduce a general model for nonequilibrium coupled binary sensors. Specialising to the case of two sensors, we obtain the steady state distribution of the two-sensor states for a specified signal. We then determine the sensing strategy that maximises the mutual information for a given noise level and signal prior. We find that the optimal sensing strategy depends on both the noise level and signal statistics. In particular, energy consumption can improve sensing performance in the low-noise, high-signal-correlation limit but is not always required for optimal sensing. Finally, we generalise our model to include time averaging of the sensor state by a population of readout molecules, and show that optimal sensing remains reliant on sensor interaction and energy consumption.

Results

Model overview

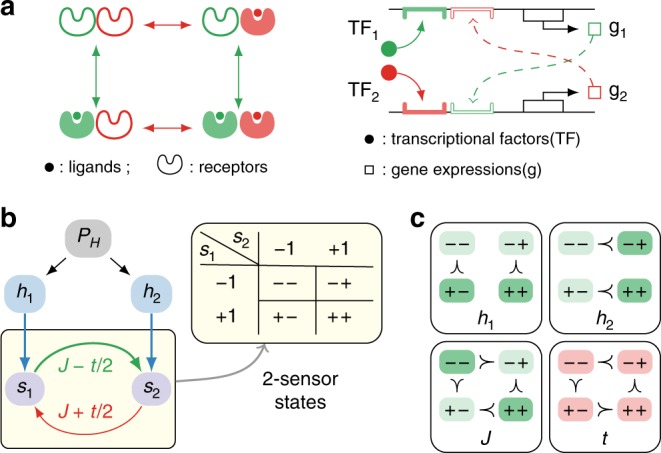

We consider a simple system of two information processing units (sensors), an abstraction of a pair of coupled chemoreceptors or two transcriptional regulations with cross-feedback (Fig. 1a). The sensor states depend on noise, signals (e.g., chemical changes) and sensor interactions, which can couple to energy consumption. Instead of the signal-to-noise ratio, we use the mutual information between the signals and the states of the system as the measure of sensing performance. Physically, the mutual information corresponds to the reduction in the uncertainty (entropy) of the signal (input) once the system state (output) is known. In the absence of signal integration, the mutual information between the signals and sensors is also the maximum information the system can learn about the signals, as noisy downstream networks can only further degrade the signals. However, computing mutual information requires knowledge of the prior distribution of the signals. Importantly, the prior encodes some of the information about the signal, e.g., signals could be more likely to take certain values or be drawn from a set of discrete values. Although the signal prior in cellular sensing is generally unknown, one simple, physically plausible choice is the Gaussian distribution, which is the least informative distribution for a given mean and variance.

Fig. 1. Model overview.

We consider a minimal model of a sensory complex, which includes both external signals and interactions between the sensors. a Physical examples of a sensor system with two interacting sensors: a pair of chemoreceptors with receptor coupling (left) and a pair of transcriptional regulations of gene expressions with cross-feedback (right). b Model schematic. Each sensor is endowed with binary states s1 = ±1 and s2 = ±1, so that the sensor complex admits four states: −−, −+, +− and ++. A signal H is drawn from the prior distribution PH and couples to each sensor via the local fields h1 and h2. The coupling between the sensors is described by J12 = J + t∕2 and J21 = J − t∕2, and can be asymmetric so in general J12 ≠ J21. c The field hi favours the states with si = +1 (top row); the coupling J favours the correlated states −− and ++ (bottom left); and the nonequilibrium drive t generates a cyclic bias (bottom right).

Nonequilibrium coupled sensors

We provide an overview of our model in Fig. 1b. Here, a sensor complex is a network of interacting sensors, each endowed with binary states s = ±1, e.g., whether a receptor or gene regulation is active. The state of each sensor depends on that of the others through interactions, and on the local bias fields generated by a signal; for example, an increase in ligand concentration favours the occupied state of a chemoreceptor. Owing to noise, the sensor states are not deterministic so that the probability of every state is finite. We encode the effects of signals, interactions and intrinsic noise in the inversion rate—the rate at which a sensor switches its state. We define the inversion rate for the ith sensor

$$\Gamma^{i}_{S|H} = \mathcal{N}_H \exp\Big[-\beta\Big(h_i s_i + \sum_{j\neq i} J_{ij}\, s_i s_j\Big)\Big] \tag{1}$$

where S = {si} denotes the present state of the sensor system, H = {hi} the signal, Jij the interactions, and β the sensor reliability (i.e., the inverse intrinsic noise level). The transition rate determines the lifetime, and thus the likelihood, of each state S. In the above form, the coupling to the signal, hisi, favours alignment between the sensor si and the signal hi, whereas the interaction Jij > 0 (Jij < 0) encourages correlation (anticorrelation) between the sensors si and sj. The constant $\mathcal{N}_H$ sets the overall timescale but drops out in steady state, which is characterised by the ratios of the transition rates. In the context of chemosensing, the signal {hi} parametrises the concentration change of one type of ligand when all sensors in the sensing complex respond to the same chemical, and of multiple ligands when the sensors exhibit different ligand specificity.

Given an input signal H, the conditional probability of the states of the sensor complex in steady state PS∣H is obtained by balancing the probability flows into and out of each state while conserving the total probability ∑SPS∣H = 1,

$$\sum_i \Big[\Gamma^{i}_{S^{i}|H}\, P_{S^{i}|H} - \Gamma^{i}_{S|H}\, P_{S|H}\Big] = 0 \tag{2}$$

Here and in the following, the state vector Si is related to S by the inversion of the sensor i, si → −si, whereas all other sensors remain in the same configuration.

In equilibrium, detailed balance imposes an additional constraint forbidding net probability flow between any two states,

$$\Gamma^{i}_{S^{i}|H}\, P_{S^{i}|H} = \Gamma^{i}_{S|H}\, P_{S|H} \tag{3}$$

and this condition can only be satisfied by symmetric interactions Jij = Jji (see, Coupling symmetry and detailed balance in Methods). We define the equilibrium free energy

$$F_{S|H} = -\sum_i h_i s_i - \sum_{i<j} J_{ij}\, s_i s_j \tag{4}$$

such that the inversion rate depends on the initial and final states of the system only through the change in free energy

$$\Gamma^{i}_{S|H} \propto \exp\Big[-\frac{\beta}{2}\big(F_{S^{i}|H} - F_{S|H}\big)\Big] \tag{5}$$

Together with the detailed balance condition (Eq. (3)), this equation leads directly to the Boltzmann distribution $P_{S|H} = e^{-\beta F_{S|H}}/Z_H$ with the partition function $Z_H = \sum_S e^{-\beta F_{S|H}}$. When constrained to equilibrium couplings, this model has been previously investigated in the context of optimal coding by a network of spiking neurons17. Asymmetric interactions Jij ≠ Jji break detailed balance, resulting in a nonequilibrium steady state (see, Coupling symmetry and detailed balance in Methods).
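As an illustrative check of Eqs. (1)–(5), the sketch below builds the master equation for two sensors and tests numerically whether symmetric couplings reproduce the Boltzmann distribution. It assumes an inversion rate of the form exp[−β(hi si + Jij si sj)] with the timescale prefactor set to one, and a free energy F = −h1s1 − h2s2 − Js1s2; these forms, the parameter values and all function names are assumptions made for illustration, not the authors' code.

```python
import itertools
import numpy as np

# Four two-sensor states (s1, s2)
STATES = list(itertools.product([-1, 1], repeat=2))

def flip_rate(i, s, h, J, beta):
    """Assumed inversion rate of sensor i in state s (prefactor set to 1)."""
    j = 1 - i
    return np.exp(-beta * (h[i] * s[i] + J[i][j] * s[i] * s[j]))

def rate_matrix(h, J, beta):
    """W[a, b] is the rate from state b to state a; each column sums to zero."""
    W = np.zeros((4, 4))
    for b, s in enumerate(STATES):
        for i in range(2):
            flipped = list(s)
            flipped[i] = -flipped[i]
            a = STATES.index(tuple(flipped))
            r = flip_rate(i, s, h, J, beta)
            W[a, b] += r
            W[b, b] -= r
    return W

def steady_state(W):
    """Solve W p = 0 together with sum(p) = 1 (normalisation row appended)."""
    A = np.vstack([W, np.ones(W.shape[1])])
    rhs = np.zeros(W.shape[0] + 1)
    rhs[-1] = 1.0
    p, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return p

def boltzmann(h, J_sym, beta):
    """Boltzmann weights for the assumed equilibrium free energy."""
    F = np.array([-(h[0] * s[0] + h[1] * s[1]) - J_sym * s[0] * s[1] for s in STATES])
    w = np.exp(-beta * F)
    return w / w.sum()

h, beta, J_sym = (0.3, -0.5), 1.2, 0.7           # arbitrary illustrative values
J = [[0.0, J_sym], [J_sym, 0.0]]                  # symmetric couplings J12 = J21
p = steady_state(rate_matrix(h, J, beta))
print(np.allclose(p, boltzmann(h, J_sym, beta)))  # do the two distributions agree?
```

Making the two off-diagonal entries of `J` unequal in this sketch is a quick way to see the steady state depart from the Boltzmann form.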

We specialise to the case of two coupled sensors S = (s1, s2), belonging to one of the four states: −−, −+, ++ and +− (Fig. 1b). For convenience, we introduce two new variables, the coupling J and nonequilibrium drive t, and parametrise J12 and J21 such that J21 = J − t ∕ 2 and J12 = J + t ∕ 2 (Fig. 1b). The effects of the bias fields (h1, h2), coupling J and nonequilibrium drive t are summarised in Fig. 1c. Compared with the equilibrium inversion rate [Eq. (5)], a finite nonequilibrium drive leads to a modification of the form

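$$\Gamma^{i}_{S|H} = \Gamma^{i}_{S|H}\Big|_{t=0} \times \begin{cases} e^{+\beta t/2} & \text{if } S \to S^{i} \text{ is cyclic,} \\ e^{-\beta t/2} & \text{if } S \to S^{i} \text{ is anticyclic,} \end{cases} \tag{6}$$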

where S → Si is cyclic if it corresponds to one of the transitions in the cycle −− → −+ → ++ → +− → −−, and anticyclic otherwise. Recalling that this probability flow vanishes in equilibrium, it is easy to see that, depending on whether t is positive or negative, the nonequilibrium inversion rates result in either cyclic or anticyclic steady state probability flow.

A net probability flow in steady state leads to power dissipation. By analogy with Eq. (5), we write down the effective change in free energy of a transition S → Si,

$$\Delta F^{\mathrm{eff}}_{S\to S^{i}} = F_{S^{i}|H} - F_{S|H} \mp t,$$

with the upper (lower) sign for cyclic (anticyclic) transitions. That is, the system loses energy of 4t per complete cycle. To conserve total energy, the sensor complex must consume the same amount of energy it dissipates to the environment. The nonequilibrium drive also modifies the steady state probability distribution. Solving Eq. (2), we have (see also, Steady state master equation in Methods)

| 7 |

where FS∣H denotes the free energy in equilibrium [Eq. (4)]. The nonequilibrium effects are encoded in the noise-dependent term

| 8 |

and note that δFS∣H → 0 as t → 0.
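The link between the drive t, the direction of the steady state probability flow and the energy of 4t lost per cycle can be checked numerically. The sketch below is a minimal illustration that assumes inversion rates of the form exp[−β(hi si + Jij s1 s2)] with J12 = J + t∕2, J21 = J − t∕2 and unit prefactor; the function names and parameter values are hypothetical. It computes the net current along the cycle −− → −+ → ++ → +− → −− and the cycle affinity (the log ratio of cyclic to anticyclic rate products), which under these assumed rates equals 4βt.

```python
import numpy as np

# States in the cyclic order used in the text: --, -+, ++, +-
CYCLE = [(-1, -1), (-1, 1), (1, 1), (1, -1)]

def flip_rate(i, s, h, J, t, beta):
    """Assumed inversion rate of sensor i in state s (prefactor set to 1)."""
    Jij = J + t / 2 if i == 0 else J - t / 2   # J12 for sensor 1, J21 for sensor 2
    return np.exp(-beta * (h[i] * s[i] + Jij * s[0] * s[1]))

def edge_rates(h, J, t, beta):
    """Cyclic (fwd) and anticyclic (bwd) rates on each of the four edges."""
    fwd, bwd = [], []
    for k in range(4):
        a, b = CYCLE[k], CYCLE[(k + 1) % 4]
        i = 0 if a[0] != b[0] else 1           # index of the sensor that flips
        fwd.append(flip_rate(i, a, h, J, t, beta))
        bwd.append(flip_rate(i, b, h, J, t, beta))
    return np.array(fwd), np.array(bwd)

def steady_state(fwd, bwd):
    """Steady state of the four-state ring from the master equation."""
    W = np.zeros((4, 4))
    for k in range(4):
        n = (k + 1) % 4
        W[n, k] += fwd[k]; W[k, k] -= fwd[k]   # transition k -> n
        W[k, n] += bwd[k]; W[n, n] -= bwd[k]   # transition n -> k
    A = np.vstack([W, np.ones(4)])
    p, *_ = np.linalg.lstsq(A, np.array([0.0, 0.0, 0.0, 0.0, 1.0]), rcond=None)
    return p

def cycle_current(h, J, t, beta):
    """Net steady state probability current in the cyclic direction."""
    fwd, bwd = edge_rates(h, J, t, beta)
    p = steady_state(fwd, bwd)
    return fwd[0] * p[0] - bwd[0] * p[1]

def cycle_affinity(h, J, t, beta):
    """Log ratio of the products of cyclic and anticyclic rates."""
    fwd, bwd = edge_rates(h, J, t, beta)
    return np.log(fwd).sum() - np.log(bwd).sum()

h, J, beta = (0.4, -0.2), 0.5, 1.5             # arbitrary illustrative values
for t in (-1.0, 0.0, 1.0):
    print(t, cycle_current(h, J, t, beta), cycle_affinity(h, J, t, beta))
```

In this sketch the current changes sign with t and vanishes at t = 0, in line with the cyclic and anticyclic flows described above.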

Mutual information

We quantify sensing performance through the mutual information between the signal and sensor complex I(S; H), which measures the reduction in the uncertainty (entropy) in the signal H once the system state S is known and vice versa. For convenience, we introduce the “output” and “noise” entropies: the output entropy is the entropy of the two-sensor state distribution, $\mathcal{S}[P_S]$ with $P_S = \int dH\, P_H P_{S|H}$, whereas the noise entropy is defined as the average entropy of the conditional probability of sensor states, $\langle \mathcal{S}[P_{S|H}] \rangle_H = \int dH\, P_H\, \mathcal{S}[P_{S|H}]$. Here, PH is the prior distribution from which a signal is drawn and the entropy of a distribution is defined by $\mathcal{S}[P_X] = -\sum_X P_X \log_2 P_X$. In terms of the output and noise entropies, the mutual information is given by

$$I(S;H) = \mathcal{S}[P_S] - \langle \mathcal{S}[P_{S|H}] \rangle_H \tag{9}$$

and we seek the sensing strategy (the coupling J and nonequilibrium drive t) that maximises the mutual information for given reliability β and signal priors PH. In practice, we solve this optimisation problem by a numerical search in the J–t parameter space using standard numerical-analysis software (see, Code availability for an example code for numerical optimisation of mutual information).
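The optimisation described above can be sketched as follows. This is not the authors' published code: it assumes inversion rates of the form exp[−β(hi si + Jij s1 s2)] with unit prefactor, a unit-variance bivariate Gaussian prior with correlation α, and a coarse Gauss–Hermite quadrature in place of a careful integration. The steady state of the four-state ring is obtained from the Markov chain tree theorem, a technique swapped in here for numerical stability at large β. All function names are hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

CYCLE = [(-1, -1), (-1, 1), (1, 1), (1, -1)]  # states --, -+, ++, +- in cyclic order

def edge_rates(h, J, t, beta):
    """Cyclic (fwd) and anticyclic (bwd) rates on the four edges; prefactor set to 1."""
    Jij = (J + t / 2, J - t / 2)              # (J12, J21)
    fwd, bwd = np.empty(4), np.empty(4)
    for k in range(4):
        a, b = CYCLE[k], CYCLE[(k + 1) % 4]
        i = 0 if a[0] != b[0] else 1          # sensor that flips along this edge
        fwd[k] = np.exp(-beta * (h[i] * a[i] + Jij[i] * a[0] * a[1]))
        bwd[k] = np.exp(-beta * (h[i] * b[i] + Jij[i] * b[0] * b[1]))
    return fwd, bwd

def ring_steady_state(fwd, bwd):
    """Steady state of a ring: sum over spanning trees directed towards each root."""
    n = len(fwd)
    p = np.zeros(n)
    for root in range(n):
        for cut in range(n):                  # tree = ring without edge cut -> cut+1
            w, j = 1.0, (cut + 1) % n
            while j != root:                  # these nodes step forward to the root
                w *= fwd[j]
                j = (j + 1) % n
            j = cut
            while j != root:                  # these nodes step backward to the root
                w *= bwd[(j - 1) % n]
                j = (j - 1) % n
            p[root] += w
    return p / p.sum()

def entropy_bits(p):
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())

def prior_nodes(alpha, n=12):
    """Quadrature nodes and weights for the assumed unit-variance Gaussian prior."""
    z, w = hermegauss(n)
    w = w / w.sum()
    a, b = np.sqrt((1 + alpha) / 2), np.sqrt((1 - alpha) / 2)
    return [((a * z1 + b * z2, a * z1 - b * z2), w1 * w2)
            for z1, w1 in zip(z, w) for z2, w2 in zip(z, w)]

def mutual_information(J, t, beta, alpha, n=12):
    """I(S;H) in bits: output entropy minus prior-averaged noise entropy."""
    p_out, noise = np.zeros(4), 0.0
    for h, w in prior_nodes(alpha, n):
        p = ring_steady_state(*edge_rates(h, J, t, beta))
        p_out += w * p
        noise += w * entropy_bits(p)
    return entropy_bits(p_out) - noise

def grid_search(beta, alpha, Js, ts):
    """Coarse search for the (J, t) pair with the largest mutual information."""
    return max((mutual_information(J, t, beta, alpha), J, t) for J in Js for t in ts)

if __name__ == "__main__":
    info, J_best, t_best = grid_search(2.0, 0.9, np.linspace(-2, 2, 9), np.linspace(0, 3, 4))
    print(f"I = {info:.3f} bits at J = {J_best:.2f}, t = {t_best:.2f}")
```

A grid search is used only for transparency; for quantitative work one would increase the number of quadrature nodes `n` and refine the optimum with a continuous optimiser.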

Correlated signals

The bias fields at two sensors are generally different, for example, chemoreceptors with distinct ligand specificity or exposure, and we consider signals H = (h1, h2), drawn from a correlated bivariate Gaussian distribution (Fig. 2a),

| 10 |

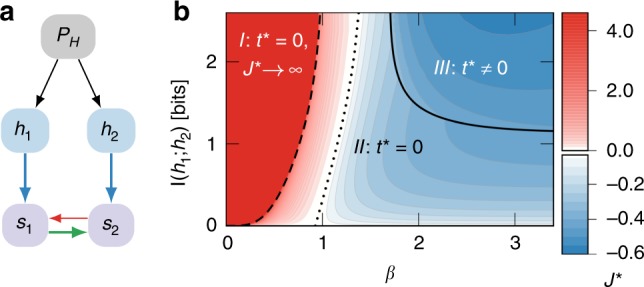

where α ∈ [−1, 1] is the correlation between h1 and h2. When we maximise the mutual information in the J–t parameter space, we find that the mutual information is maximised by an equilibrium system (t* = 0) for small β. In Fig. 2b, we show that the optimal strategy is cooperative (J > 0) at small β and switches to anticooperative (J < 0) around β ~ 1. Below a certain value of β, the optimal coupling diverges J* → ∞ (region I in Fig. 2b). In addition, sensor cooperativity is less effective for less-correlated signals because a cooperative strategy relies on output suppression (which reduces both noise and output entropies). This strategy works well for more correlated signals as they carry less information (low signal entropy), which can be efficiently encoded by fewer output states. Thus, a reduction in noise entropy increases mutual information despite the decrease in output entropy. This is not the case for less-correlated signals, which carry more information (higher entropy) and which require more output states to encode effectively. As sensors become less noisy, the optimal strategy is nonequilibrium (t* ≠ 0; region III in Fig. 2b) only when the signal redundancy, i.e., the mutual information between the input signals I(h1; h2), is relatively high. The sensing strategies t = ±∣t*∣ are time-reversed partners of one another both of which yield the same mutual information. This symmetry results from the fact that the signal prior PH (Eq. (10)) is invariant under h1 ↔ h2, hence the freedom in the choice of dominant sensor (i.e., we can either make J12 > J21 or J12 < J21).

Fig. 2. Optimal sensing for correlated signals.

Nonequilibrium sensing is optimal in the low-noise, high-correlation limit for correlated Gaussian signals. a We assume that the signal directly influences both sensors with varying correlation, Eq. (10). b The optimal coupling J* as a function of sensor reliability β and the signal redundancy, i.e., the mutual information between the input signals I(h1; h2). The optimal coupling diverges J* → ∞ at small β (region I, left of the dashed curve) and decreases with larger β. Between the dashed and solid curves (region II), the mutual information I(S; H) is maximised by equilibrium sensors with a finite J* that changes from cooperative (red) to anticooperative (blue) at the dotted line. Nonequilibrium sensing is the optimal strategy for signals with relatively high redundancy in the low-noise limit (region III, above the solid curve).

Although Fig. 2b shows the results for positively correlated signals (α > 0), the optimal sensing strategies for anticorrelated signals (α < 0) exhibit the same dependence on sensor reliability and signal redundancy, with the same nonequilibrium drive t = ±∣t*∣ but an optimal coupling J* of opposite sign to that for α > 0.
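For a bivariate Gaussian with correlation α, the signal redundancy has the standard closed form I(h1; h2) = −½ log2(1 − α²) bits, which depends only on ∣α∣ and is therefore the same for correlated and anticorrelated signals. The short sketch below converts between α and redundancy; the function names are hypothetical.

```python
import numpy as np

def redundancy_bits(alpha):
    """Mutual information I(h1; h2) in bits for a bivariate Gaussian with correlation alpha."""
    return -0.5 * np.log2(1.0 - alpha**2)

def alpha_from_redundancy(bits):
    """Magnitude of the correlation that yields a given redundancy in bits."""
    return np.sqrt(1.0 - 2.0 ** (-2.0 * bits))

for alpha in (0.0, 0.5, 0.9, -0.9):
    print(alpha, redundancy_bits(alpha))
```

The redundancy diverges as ∣α∣ → 1, which is why the perfectly correlated case is treated as a separate limit.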

Perfectly correlated signals

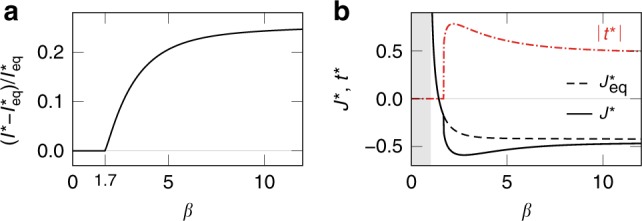

To understand the mechanisms behind the optimal sensing strategy for correlated signals, we consider the limiting case of completely redundant Gaussian signals (h1 = h2), which captures most of the phenomenology depicted in Fig. 2b. We find that nonequilibrium drive allows further improvement on equilibrium sensors only for β > 1.7 and that the nonequilibrium gain remains finite as β → ∞ (Fig. 3a). In Fig. 3b, we show the optimal parameters for both equilibrium and nonequilibrium sensing. The optimal coupling diverges for sensors with β < 1, decreases with increasing β and exhibits a sign change at β = 1.4. For β > 1.7, the nonequilibrium drive is finite and the couplings are distinct (J12 ≠ J21).

Fig. 3. Optimal sensing in the high-signal-correlation limit.

For perfectly correlated Gaussian signals, the nonequilibrium information gain is largest in the noiseless limit. We consider a sensor complex driven by a Gaussian signal H = (h, h). a The nonequilibrium gain as a function of sensor reliability β. The gain grows from zero at β = 1.7 and increases with β, suggesting that the enhancement results from the ability to distinguish additional signal features. b Optimal sensing strategy for varying noise levels. For β < 1 (shaded), the mutual information is maximised by an equilibrium system (t* = 0) with infinitely strong coupling. The equilibrium strategy remains optimal for β < 1.7 with a coupling J* (solid) that decreases with β and exhibits a sign change at β = 1.4. At larger β, the optimal coupling in the equilibrium case (dashed) continues to decrease but equilibrium sensing becomes suboptimal. For β > 1.7, the mutual information is maximised by a finite nonequilibrium drive (dot-dashed) and negative coupling.

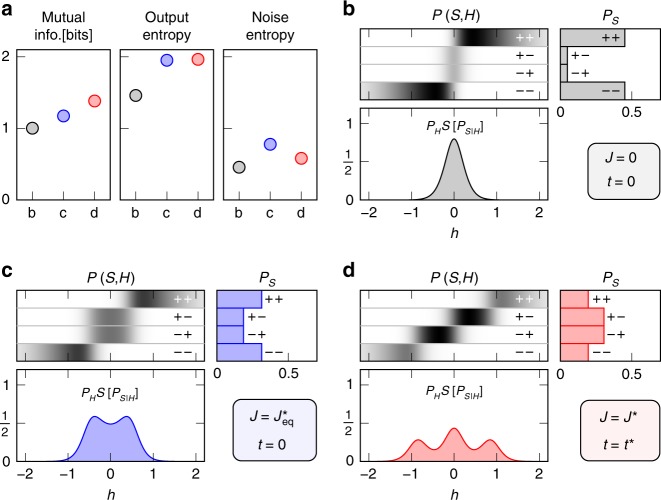

Figure 4a compares the output and noise entropies of equilibrium and nonequilibrium sensing at their respective optimal parameters with those of noninteracting sensors for a representative β = 4. Here, anticooperativity (J* < 0) enhances mutual information in equilibrium sensing by maximising the output entropy, whereas nonequilibrium drive produces further improvement by lowering noise entropy. Compared with the noninteracting case (Fig. 4b), optimal equilibrium sensing distributes the probability of the output states, PS, more evenly (Fig. 4c), resulting in higher output entropy. This is because a negative coupling J < 0 favours the states +− and −+, which are much less probable than ++ and −− in a noninteracting system subject to perfectly correlated signals (Fig. 4b). However, this also leads to higher noise entropy, as the states +− and −+ are equally likely for a given signal (Fig. 4c). By lifting the degeneracy between the states +− and −+, nonequilibrium sensing suppresses noise entropy while maintaining a relatively even distribution of output states (Fig. 4d). As a result, for signals with low redundancy (I(h1; h2) ≲ 1), a nonequilibrium strategy allows no further improvement (Fig. 2b) because the states of a sensor complex are less likely to be degenerate (since the probability that h1 ≈ h2 is smaller).

Fig. 4. Mechanisms behind nonequilibrium performance improvement for joint, correlated signals.

For perfectly correlated Gaussian signals, in the low-noise limit, sensor anticooperativity (J < 0) increases the mutual information by maximising the output entropy while nonequilibrium drive (t ≠ 0) provides further improvement by suppressing noise entropy. a We compare the mutual information, output, and noise entropies for three sensing strategies at β = 4: (b grey) noninteracting, (c blue) equilibrium and (d red) nonequilibrium cases. For each case, we find the configuration that maximises the mutual information under the respective constraints. b–d Signal-sensor joint probability distribution P(S, H), output distribution PS, and the “signal-resolved noise entropy” (the entropy of PS∣H weighted by the prior PH), corresponding to the cases shown in a where β = 4. Note that the noise entropy is the area under the signal-resolved curve. As the optimal coupling is negative (J < 0) at β = 4 (see Fig. 3b), the states −+ and +−, which are heavily suppressed by fully correlated signals in a noninteracting system (b), become more probable in the interacting cases, resulting in a more even output distribution (c, d) and thus a larger output entropy (a). However, anticooperativity also increases noise entropy in the equilibrium case (a) since the states −+ and +− are degenerate (c). By lifting this degeneracy (d), a nonequilibrium system can suppress the noise entropy and further increase mutual information (a).

Although anticooperativity increases output entropy more than noise entropy at β = 4, it is not the optimal strategy for β < 1.4. For noisy sensors, a positive coupling J > 0 yields higher mutual information (Fig. 3b). This is because when the noise level is high, the output entropy is nearly saturated and an increase in mutual information must result primarily from the reduction of noise entropy by suppressing some output states; in this case, the states +− and −+ are suppressed by J > 0.

We emphasise that the nonequilibrium gain is not merely a result of an additional sensor parameter. Instead of nonequilibrium drive we can introduce signal-independent biases on each sensor and keep the entire sensory complex in equilibrium. Such intrinsic biases can lower noise entropy in optimal sensing by breaking the degeneracy between the states +− and −+ (Fig. 4b, c). However, favouring one sensor state over the other results in a greater decrease in output entropy and hence lower mutual information (see, Supplementary Fig. 1).

Our analysis does not rely on the specific Gaussian form of the prior distribution. Indeed, for correlated signals the nonequilibrium improvement in the low-noise, high-correlation limit is generic for most continuous priors (see, Supplementary Fig. 2).

Time integration

Cells do not generally have direct access to the receptor state. Instead, chemosensing relies on downstream readout molecules whose activation and decay couple to sensor states. Repeated interactions between receptors and a readout population provide a potential noise-reduction strategy through time averaging, which can compete with sensor cooperativity and energy consumption14,16. We generalise our model to incorporate time integration of the sensor state by a population of readout molecules and demonstrate that sensor coupling and nonequilibrium drive remain essential to optimal sensing.

We consider a system of binary sensors S, coupled to signals H and a readout population r. We expand our original model (Nonequilibrium coupled sensors) to include readout activation (r → r + 1) and decay (r → r − 1), resulting in modified transition rates:

| 11 |

| 12 |

where S → Si denotes sensor inversion si → −si, r the readout population and bi the sensor-specific bias; the constant prefactor sets the overall timescale. We also introduce the sensor-dependent differential readout potential δμi; the sensor state si = +1 favours a larger readout population (by increasing the activation rate and suppressing decays), whereas si = −1 biases the readout towards a smaller population. This allows the readout population to store samplings of sensor states over time, thus providing a physical mechanism for time integration of sensor states. The readout population in turn affects sensor inversions: the larger the readout population, the more favourable si = +1 over si = −1. This two-way interplay between sensors and readouts is essential for a consistent equilibrium description, for one-way effects (e.g., sampling sensor states without altering the sensor complex) require Maxwell’s demon, a nonequilibrium process not described by the model.

We further assume a finite readout population r ≤ r0, and that the readout activation and decay are intrinsically stochastic. Consequently, a readout population has a limited memory for past sensor states. Indeed, readout stochasticity sets a timescale beyond which an increase in measurement time cannot improve sensing performance6. To investigate this fundamental limit, we let the measurement time be much longer than any stochastic timescale. In this case, the sensor-readout joint distribution Pr,S∣H satisfies the steady state master equation with the transition rates in Eqs. (11), (12) (see, Steady state master equation in Methods).

When Jij = Jji, the steady state distribution obeys the detailed balance condition (see, Coupling symmetry and detailed balance in Methods) and is given by

| 13 |

with the free energy

| 14 |

This distribution results in Pr∣S,H = Pr∣S, implying a Markov chain H → S → r (H affects r only via S), hence the data processing inequality I(S; H) ≥ I(r; H). That is, in equilibrium, time integration of sensor states cannot produce a readout population that contains more information about signals than the sensor states7 (see also refs. 18,19). This result applies to any equilibrium sensing complex (see, Equilibrium time integration in Methods).
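The data processing inequality invoked here holds for any Markov chain H → S → r, independent of the details of the sensor model. The sketch below illustrates it with randomly generated conditional distributions; the discrete signal alphabet, the array sizes and the function name are arbitrary choices made for illustration and are not taken from the model above.

```python
import numpy as np

def mutual_information_bits(joint):
    """Mutual information in bits between the row and column variables of a joint table."""
    joint = joint / joint.sum()
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float((joint[mask] * np.log2(joint[mask] / (px @ py)[mask])).sum())

rng = np.random.default_rng(0)
n_h, n_s, n_r = 6, 4, 11                               # signals, sensor states, readout levels

p_h = rng.dirichlet(np.ones(n_h))                      # prior over discrete signal values
p_s_given_h = rng.dirichlet(np.ones(n_s), size=n_h)    # rows: signal, columns: sensor state
p_r_given_s = rng.dirichlet(np.ones(n_r), size=n_s)    # rows: sensor state, columns: readout

joint_hs = p_h[:, None] * p_s_given_h                  # P(H, S)
joint_hr = joint_hs @ p_r_given_s                      # P(H, r), using P(r|S, H) = P(r|S)

info_sh = mutual_information_bits(joint_hs)
info_rh = mutual_information_bits(joint_hr)
print(info_sh, info_rh)                                # I(S; H) is never smaller than I(r; H)
```

The only structural ingredient is that the readout depends on the signal solely through the sensor state, which is what the matrix product in `joint_hr` encodes.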

We now specialise to the case of two coupled sensors S = (s1, s2) and introduce two new variables, Δ and δ, defined via

$$\delta\mu_1 = \frac{\Delta + \delta}{2}, \qquad \delta\mu_2 = \frac{\Delta - \delta}{2}. \tag{15}$$

For this parametrisation, the effective chemical potentials for readout molecules are given by

$$\mu_{++} = -\mu_{--} = \Delta, \qquad \mu_{+-} = -\mu_{-+} = \delta,$$

where μS = ∑iδμisi. We see that Δ (δ) parametrises how different the sensor states ++ and −− ( +− and −+ ) appear to the readout population. To maximise utilisation of readout states, we set the sensor biases to bi = δμir0 ∕ 2 where r0 is the maximum readout population. For optimal equilibrium sensing, we maximise mutual information I(r; H) by varying J and δ under the constraint t = 0, and the noninteracting case corresponds to J = t = δ = 0.
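As a small worked example of this parametrisation, the sketch below maps (Δ, δ) to the differential potentials δμi, the state-dependent chemical potentials μS = ∑iδμisi and the biases bi = δμir0 ∕ 2. It assumes δμ1 = (Δ + δ)∕2 and δμ2 = (Δ − δ)∕2, the choice implied by μ++ = Δ and μ+− = δ; the function name is hypothetical.

```python
def readout_potentials(Delta, delta, r0):
    """Differential potentials, chemical potentials per sensor state, and sensor biases."""
    dmu = ((Delta + delta) / 2, (Delta - delta) / 2)   # assumed (dmu_1, dmu_2)
    states = [(1, 1), (1, -1), (-1, 1), (-1, -1)]
    mu = {s: dmu[0] * s[0] + dmu[1] * s[1] for s in states}
    bias = tuple(d * r0 / 2 for d in dmu)              # b_i = dmu_i * r0 / 2
    return dmu, mu, bias

# Parameter values quoted for the nonequilibrium example in Fig. 5e
dmu, mu, bias = readout_potentials(Delta=1.0, delta=-0.6, r0=10)
print(dmu, mu, bias)
```

Setting `delta=0` makes the states +− and −+ indistinguishable to the readout, which is the situation in the optimal equilibrium case discussed next.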

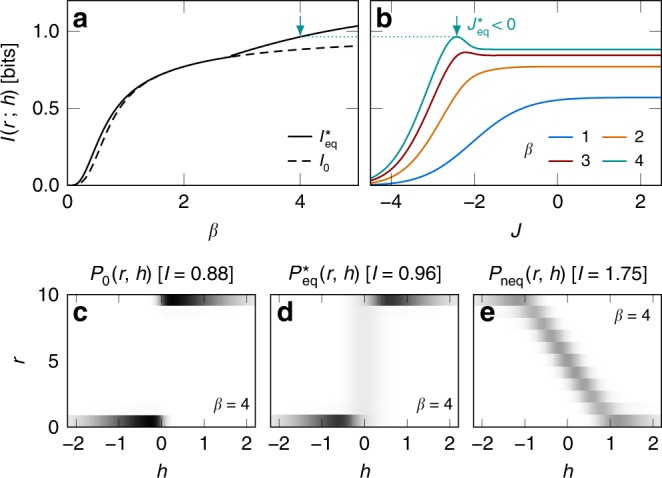

Figure 5a depicts the readout-signal mutual information for perfectly correlated Gaussian signals for the cases of optimal equilibrium sensing (solid) and noninteracting sensors (dashed). Independent sensors are suboptimal for all β, i.e., we can always increase mutual information by tuning δ and J away from zero. At maximum mutual information we find δ = 0 and J ≠ 0. In Fig. 5b, we show the mutual information as a function of J (at δ = 0). We see that J* > 0 in the noisy limit (low β) and J* < 0 in the low-noise limit (high β). This crossover from cooperativity to anticooperativity is consistent with our results in Correlated signals and Perfectly correlated signals (see also, Figs. 2 and 3b).

Fig. 5. Optimal sensing in the presence of time integration.

Sensor interaction and energy consumption remain important for optimal sensing in the presence of time integration of sensor states by a population of readout molecules. We consider a two-sensor complex, driven by a Gaussian signal H = (h, h) and coupled to a readout population r (Eqs. (11), (12)). a Mutual information between the signal and the readout population for noninteracting sensors (dashed) and optimal equilibrium sensors (solid). Independent sensors are always suboptimal. b Mutual information as a function of sensor coupling J at various sensor reliabilities β (see legend). The optimal equilibrium strategy changes from cooperativity (J > 0) in the noisy limit to anticooperativity (J < 0) in the low-noise limit. c–e Joint probability distribution of signal and readout population at β = 4 for three sensing systems: noninteracting (c), optimal equilibrium (d), and near-optimal nonequilibrium (e) (cf. Fig. 4). Optimal equilibrium sensors use anticooperativity to increase the probability of the states +− and −+, which map to intermediate readout populations 0 < r < r0, and as a result allow for a more efficient use of output states compared with noninteracting sensors. Nonequilibrium drive lifts the degeneracy of intermediate readout states, leading to an even more effective use of readout states. For the nonequilibrium example in e, we obtain Ineq = 1.75 bits, which exceeds the value for optimal equilibrium sensors at the same β. In a–e, we use Δ = 1 and r0 = 10, and in e, J = −2, t = 7, and δ = −0.6.

To reveal the mechanism behind optimal equilibrium sensing in the low-noise limit, we examine the joint probability distribution P(r, h) at β = 4 for noninteracting (Fig. 5c) and optimal equilibrium sensors (Fig. 5d). We see that anticooperativity increases mutual information by distributing the output (readout) states more efficiently (cf. Fig. 4b, c). Noninteracting sensors partition outputs into large and small readout populations, which corresponds to positive and negative signals, respectively. This is because correlated signals favour the chemical potentials μ++ = − μ−− = Δ, which bias the readout population towards r = 0 and r = r0, and suppress μ+− = −μ−+ = δ = 0, which encourage evenly distributed readout states. By adopting an anticooperative strategy (J < 0) to counter signal correlation, optimal equilibrium sensors can use more output states (on average) to encode the signal. The increase in accessible readout states also raises noise entropy, but the increase in output entropy dominates in the low-noise limit, resulting in higher mutual information.

Finally, we demonstrate that energy consumption can further enhance sensing performance. Figure 5e shows P(r, h) for a nonequilibrium sensor complex. We see that nonequilibrium drive lifts the degeneracy in intermediate readout states (0 < r < r0), leading to a much more effective use of output states. For the nonequilibrium sensor complex in Fig. 5e, we obtain Ineq(r; h) = 1.75 bits, which exceeds the value for optimal equilibrium sensors (Fig. 5a, b) at the same sensor reliability (β = 4). We note that this nonequilibrium gain relies also on δ ≠ 0 to distinguish the sensor states +− and −+. The staircase of readout states in Fig. 5e corresponds to the anticorrelated sensor states +− and −+, which do not always favour higher readouts at positive signals (see also, Supplementary Figs. 3, 4).

Discussion

We introduce a minimal model of a sensor complex that encapsulates both sensor interactions and energy consumption. For correlated signals, we find that sensor interactions can increase sensing performance of two binary sensors, as measured by the steady state mutual information between the signal and the states of the sensor complex.

This result highlights sensor cooperativity as a biologically plausible sensing strategy11–13. However, the nature of the optimal sensor coupling does not always reflect the correlation in the signal; for positively correlated signals, the optimal sensing strategy changes from cooperativity to anticooperativity as the noise level decreases, see also ref. 17. Anticooperativity emerges as the optimal strategy through countering the redundancy in correlated signals by suppressing correlated outputs, and thus redistributing the output states more evenly. The same principle also applies to population coding in neural networks17, positional information coding by the gap genes in the Drosophila embryo20–22 and time-telling from multiple readout genes23. Surprisingly, we find that energy consumption leads to further improvement only when the noise level is low and the signal redundancy high.

We find that sensor coupling and energy consumption remain important for optimal sensing under time integration of the sensor state—a result contrary to earlier findings that a cooperative strategy is suboptimal even when sensor interaction can couple to nonequilibrium drive14,16. This discrepancy results from an assumption of continuous, deterministic time integration that requires an infinite supply of readout molecules and external nonequilibrium processes, and which also leads to an underestimation of noise in the output; we make no such assumption in our model. In addition, we use the data processing inequality to show for any sensing system that time integration cannot improve sensing performance unless energy consumption is allowed either in sensor coupling or downstream networks (see also refs. 5,7).

Our work highlights the role of signal statistics in the context of optimal sensing. We show that a signal prior distribution is an important factor in determining the optimal sensing strategy as it sets the amount of information carried by a signal. With a signal prior, we quantify sensing performance by mutual information, which is a generalisation of linear approximations used in previous works6–8,11,14,16.

To focus on the effects of nonequilibrium sensor cooperation, we neglect the possibility of signal crosstalk and the presence of false signals. Limited sensor-signal specificity places an additional constraint on sensing performance24 (but see, ref. 25). Previous works have shown that kinetic proofreading schemes3 can mitigate this problem for isolated chemoreceptors that bind to correct and incorrect ligands26,27. Our model can be easily generalised to include crosstalk, and it would be interesting to investigate whether nonequilibrium sensor coupling can provide a way to alleviate the problem of limited specificity.

Although we considered a simple model, our approach provides a general framework for understanding collective sensing strategies across different biological systems, from chemoreceptors to transcriptional regulation to a group of animals in search of mates or food. In particular, possible future investigations include the mechanisms behind collective sensing strategies in more complex, realistic models, non-binary sensors, adaptation, and generalisation to a larger number of sensors. It would also be interesting to study the channel capacity in the parameter spaces of both the sensor couplings and the signal prior, an approach that has already led to major advances in the understanding of gene regulatory networks28. Finally, the existence of optimal collective sensing strategies necessitates a characterisation of the learning rules that give rise to such strategies.

Methods

Steady state master equation

The steady state probability distribution satisfies a linear matrix equation

$$\sum_j W_{ij}\, p_j = 0, \tag{16}$$

where $p_j$ denotes the probability of the state $j$. The matrix $W$ is defined such that

$$W_{ij} = \Gamma_{j\to i} - \delta_{ij} \sum_k \Gamma_{i\to k}, \tag{17}$$

where $\Gamma_{j\to i}$ denotes the transition rate from state $j$ to state $i$, and $\Gamma_{j\to j} = 0$. The solution of Eq. (16) corresponds to a direction in the null space of the linear operator $W$. For the two coupled sensors considered in ‘Nonequilibrium coupled sensors’, Eq. (16) (Eq. (2)) becomes a set of four simultaneous equations, which we solve analytically for a solution (Eq. (7)) that also satisfies the constraints of a probability distribution ($\sum_j p_j = 1$ and $p_i \ge 0$ for all $i$). In a larger system an analytical solution is not practical. For example, in ‘Time integration’ we consider a 44-state system of two sensors with at most 10 readout molecules. In this case we obtain the null space of the matrix $W$ from its singular value decomposition, which can be computed with standard numerical software.
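The numerical route described above can be sketched in a few lines. This is a minimal illustration using NumPy, not the authors' Mathematica implementation; the function name `steady_state` and the randomly drawn example rates are assumptions made purely for demonstration.

```python
import numpy as np

def steady_state(rates):
    """Steady state of a master equation.

    rates[i, j] is the transition rate from state j to state i
    (the diagonal is ignored, i.e. Gamma_{j->j} = 0).
    """
    gamma = np.array(rates, dtype=float)
    np.fill_diagonal(gamma, 0.0)
    # W_ij = Gamma_{j->i} - delta_ij * sum_k Gamma_{i->k}; each column of W sums to zero
    W = gamma - np.diag(gamma.sum(axis=0))
    # The right-singular vector with the smallest singular value spans the null space of W
    _, _, vt = np.linalg.svd(W)
    p = vt[-1]
    p = p / p.sum()  # fixes the overall sign and enforces sum_j p_j = 1
    return p, W

# Hypothetical example: four states with arbitrary positive rates
rng = np.random.default_rng(0)
example_rates = rng.uniform(0.5, 2.0, size=(4, 4))
p, W = steady_state(example_rates)
print("steady state:", p)
print("max |W p|   :", np.abs(W @ p).max())
```

For an irreducible set of rates the null space is one-dimensional, so normalising the last right-singular vector is enough to obtain a valid probability distribution.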

Coupling symmetry and detailed balance

Here we show that the transition rates in Eq. (1) do not satisfy Kolmogorov’s criterion, a necessary and sufficient condition for detailed balance, unless $J_{ij} = J_{ji}$. Consider the sensors $s_i$ and $s_j$ in a sensor complex $S = \{s_1, s_2, \ldots, s_N\}$ with $N > 1$. This sensor pair admits four states $(s_i, s_j) = --, -+, ++, +-$. The transitions between these states form two closed sequences in opposite directions

$$(--) \to (-+) \to (++) \to (+-) \to (--), \qquad (--) \to (+-) \to (++) \to (-+) \to (--). \tag{18}$$

Kolmogorov’s criterion requires that, for any closed loop, the product of all transition rates in one direction equals the product of all transition rates in the opposite direction. For the rates in Eq. (1), we have

| 19 |

therefore, only symmetric interactions satisfy Kolmogorov’s criterion, and any asymmetry in sensor coupling necessarily breaks detailed balance. This result also holds for the generalised transition rates in Eqs. (11) and (12).
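The loop argument can be checked numerically. The sketch below assumes a simple exponential flip rate, $\exp[-\beta s_i (h_i + J_{ij} s_j)]$, as a stand-in for Eq. (1); this form, the function names and the parameter values are illustrative assumptions and may differ from the rates used in the main text. Under this assumption the ratio of the two loop products is $\exp[4\beta(J_{ij} - J_{ji})]$, which equals one only for symmetric coupling.

```python
import math

def flip_rate(s_self, s_other, h_self, J_self_other, beta=1.0):
    # Assumed (hypothetical) rate for a sensor to flip out of state s_self
    return math.exp(-beta * s_self * (h_self + J_self_other * s_other))

def loop_products(h_i, h_j, J_ij, J_ji, beta=1.0):
    """Products of transition rates around the four-state loop, in both directions."""
    loop = [(-1, -1), (-1, 1), (1, 1), (1, -1)]  # states written as (s_i, s_j)

    def rate(src, dst):
        (si, sj), (ti, _) = src, dst
        if si != ti:  # sensor i flips, sensor j is a spectator
            return flip_rate(si, sj, h_i, J_ij, beta)
        return flip_rate(sj, si, h_j, J_ji, beta)  # sensor j flips

    n = len(loop)
    forward = math.prod(rate(loop[k], loop[(k + 1) % n]) for k in range(n))
    backward = math.prod(rate(loop[(k + 1) % n], loop[k]) for k in range(n))
    return forward, backward

sym_fwd, sym_bwd = loop_products(h_i=0.3, h_j=-0.7, J_ij=0.5, J_ji=0.5)
asym_fwd, asym_bwd = loop_products(h_i=0.3, h_j=-0.7, J_ij=0.9, J_ji=0.2)
print("symmetric coupling :", sym_fwd, sym_bwd)
print("asymmetric coupling:", asym_fwd, asym_bwd)
```

The signal terms $h_i$ and $h_j$ cancel around the loop, so only the coupling asymmetry controls whether the two products agree.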

Equilibrium time integration

Following the analysis in the Supplemental Material of ref. 7, we provide a general proof that equilibrium time integration of receptor states cannot generate readout populations that contain more information about the signals than the receptors. We consider a system of receptors $S = (s_1, s_2, \ldots, s_N)$, driven by signal $H = (h_1, h_2, \ldots, h_N)$ and coupled to readout populations $R = (r_1, r_2, \ldots, r_M)$. In equilibrium, this system is described by a free energy

$$F(S, R \mid H) = f(H, S) + g(S, R), \tag{20}$$

where f(H, S) and g(S, R) describe signal-sensor and sensor-readout couplings, respectively, and include interactions among sensors and readout molecules. The Boltzmann distribution for sensors and readouts reads

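$$P_{R,S\mid H} = \frac{1}{Z_H}\, e^{-\beta\,[f(H,S) + g(S,R)]}, \tag{21}$$

with the partition function $Z_H$ and inverse temperature $\beta$. Therefore, we have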

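$$P_{R\mid S,H} = \frac{P_{R,S\mid H}}{\sum_{R'} P_{R',S\mid H}} = \frac{e^{-\beta g(S,R)}}{\sum_{R'} e^{-\beta g(S,R')}} = P_{R\mid S}, \tag{22}$$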

where the summation is over all readout states. This allows the decomposition of the joint distribution, $P_{R,S\mid H} = P_{R\mid S,H}\,P_{S\mid H} = P_{R\mid S}\,P_{S\mid H}$, which implies a Markov chain $H \to S \to R$; that is, $H$ affects $R$ only through $S$. (This does not mean $R$ does not affect $S$, for $P_{S\mid R,H}$ still depends on $R$.) From the data processing inequality, it immediately follows that $I(H; S) \ge I(H; R)$. We emphasise that this constraint applies to any equilibrium sensor complex and downstream networks that can be described by the free energy in Eq. (20), regardless of the numbers of sensors and readout species, sensor characteristics (e.g., number of states), sensor-signal and sensor-readout couplings (including crosstalk), and interactions among sensors and readout molecules.
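The inequality can be illustrated on a toy equilibrium system. The sketch below builds the Boltzmann distribution for a free energy of the form $f(H, S) + g(S, R)$ and compares $I(H; S)$ with $I(H; R)$. The particular energy functions, the three-valued scalar signal, its prior and all parameter values are hypothetical choices made only for this example.

```python
import itertools
import numpy as np

def mutual_information(p_xy):
    """Mutual information in bits of a joint distribution given as a 2-D array."""
    p_x = p_xy.sum(axis=1, keepdims=True)
    p_y = p_xy.sum(axis=0, keepdims=True)
    mask = p_xy > 0
    return float(np.sum(p_xy[mask] * np.log2(p_xy[mask] / (p_x * p_y)[mask])))

beta = 1.0
signals = [-1.0, 0.0, 1.0]                            # hypothetical signal values
prior = np.array([0.25, 0.5, 0.25])                   # hypothetical signal prior P_H
sensors = list(itertools.product([-1, 1], repeat=2))  # two binary sensors
readouts = list(range(4))                             # readout copy number 0..3

def f(h, s, J=0.4):
    # Assumed signal-sensor energy with a symmetric sensor-sensor coupling J
    return -h * (s[0] + s[1]) - J * s[0] * s[1]

def g(s, r, eps=0.8, mu=0.3):
    # Assumed sensor-readout energy: active sensors favour more readout molecules
    return -eps * r * (s[0] + s[1]) / 2 + mu * r

# Boltzmann weights exp(-beta [f + g]), normalised separately for each signal value
weights = np.array([[[np.exp(-beta * (f(h, s) + g(s, r))) for r in readouts]
                     for s in sensors] for h in signals])
p_sr_given_h = weights / weights.sum(axis=(1, 2), keepdims=True)
p_hsr = prior[:, None, None] * p_sr_given_h           # joint P(H, S, R)

info_sensors = mutual_information(p_hsr.sum(axis=2))  # I(H; S)
info_readout = mutual_information(p_hsr.sum(axis=1))  # I(H; R)
print(f"I(H;S) = {info_sensors:.4f} bits, I(H;R) = {info_readout:.4f} bits")
```

Because the readout energy $g$ does not depend on $H$, the conditional distribution of $R$ given $S$ is the same for every signal value, which is what guarantees the ordering of the two information values.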

Acknowledgements

We are grateful to Gašper Tkačik and Pieter Rein ten Wolde for useful comments and a critical reading of the manuscript. V.N. acknowledges support from the National Science Foundation under Grants DMR-1508730 and PHY-1734332, and the Northwestern-Fermilab Center for Applied Physics and Superconducting Technologies. G.J.S. acknowledges research funds from Vrije Universiteit Amsterdam and OIST Graduate University. D.J.S. was supported by the National Science Foundation through the Center for the Physics of Biological Function (PHY-1734030) and by a Simons Foundation fellowship for the MMLS. This work was partially supported by the National Institutes of Health under award number R01EB026943 (V.N. and D.J.S.).

Author contributions

V.N., D.J.S., and G.J.S. conceived the study, interpreted the results and wrote the manuscript. V.N. performed numerical calculations.

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Code availability

A Mathematica code for computing the optimal sensor parameters (J*, t*) that maximise the mutual information between two coupled sensors and correlated Gaussian signals is available at https://github.com/vn232/NeqCoopSensing.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: David J. Schwab, Greg J. Stephens.

Supplementary information

Supplementary information is available for this paper at 10.1038/s41467-020-14806-y.

References

- 1. Huang J, et al. A single peptide-major histocompatibility complex ligand triggers digital cytokine secretion in CD4(+) T cells. Immunity. 2013;39:846–857. doi: 10.1016/j.immuni.2013.08.036.

- 2. Mao H, Cremer PS, Manson MD. A sensitive, versatile microfluidic assay for bacterial chemotaxis. Proc. Natl Acad. Sci. USA. 2003;100:5449–5454. doi: 10.1073/pnas.0931258100.

- 3. Hopfield JJ. Kinetic proofreading: a new mechanism for reducing errors in biosynthetic processes requiring high specificity. Proc. Natl Acad. Sci. USA. 1974;71:4135–4139. doi: 10.1073/pnas.71.10.4135.

- 4. Tu Y. The nonequilibrium mechanism for ultrasensitivity in a biological switch: sensing by Maxwell’s demons. Proc. Natl Acad. Sci. USA. 2008;105:11737–11741. doi: 10.1073/pnas.0804641105.

- 5. Mehta P, Schwab DJ. Energetic costs of cellular computation. Proc. Natl Acad. Sci. USA. 2012;109:17978–17982. doi: 10.1073/pnas.1207814109.

- 6. Govern CC, ten Wolde PR. Optimal resource allocation in cellular sensing systems. Proc. Natl Acad. Sci. USA. 2014;111:17486–17491. doi: 10.1073/pnas.1411524111.

- 7. Govern CC, ten Wolde PR. Energy dissipation and noise correlations in biochemical sensing. Phys. Rev. Lett. 2014;113:258102. doi: 10.1103/PhysRevLett.113.258102.

- 8. Okada T. Ligand-concentration sensitivity of a multi-state receptor. Preprint at https://arxiv.org/abs/1706.08346 (2017).

- 9. Aquino G, Wingreen NS, Endres RG. Know the single-receptor sensing limit? Think again. J. Stat. Phys. 2016;162:1353–1364. doi: 10.1007/s10955-015-1412-9.

- 10. ten Wolde PR, Becker NB, Ouldridge TE, Mugler A. Fundamental limits to cellular sensing. J. Stat. Phys. 2016;162:1395–1424. doi: 10.1007/s10955-015-1440-5.

- 11. Bialek W, Setayeshgar S. Cooperativity, sensitivity, and noise in biochemical signaling. Phys. Rev. Lett. 2008;100:258101. doi: 10.1103/PhysRevLett.100.258101.

- 12. Hansen CH, Sourjik V, Wingreen NS. A dynamic-signaling-team model for chemotaxis receptors in Escherichia coli. Proc. Natl Acad. Sci. USA. 2010;107:17170–17175. doi: 10.1073/pnas.1005017107.

- 13. Aquino G, Clausznitzer D, Tollis S, Endres RG. Optimal receptor-cluster size determined by intrinsic and extrinsic noise. Phys. Rev. E. 2011;83:021914. doi: 10.1103/PhysRevE.83.021914.

- 14. Skoge M, Meir Y, Wingreen NS. Dynamics of cooperativity in chemical sensing among cell-surface receptors. Phys. Rev. Lett. 2011;107:178101. doi: 10.1103/PhysRevLett.107.178101.

- 15. Singh V, Tchernookov M, Nemenman I. Effects of receptor correlations on molecular information transmission. Phys. Rev. E. 2016;94:022425. doi: 10.1103/PhysRevE.94.022425.

- 16. Skoge M, Naqvi S, Meir Y, Wingreen NS. Chemical sensing by nonequilibrium cooperative receptors. Phys. Rev. Lett. 2013;110:248102. doi: 10.1103/PhysRevLett.110.248102.

- 17. Tkačik G, Prentice JS, Balasubramanian V, Schneidman E. Optimal population coding by noisy spiking neurons. Proc. Natl Acad. Sci. USA. 2010;107:14419–14424. doi: 10.1073/pnas.1004906107.

- 18. Ouldridge TE, Govern CC, ten Wolde PR. Thermodynamics of computational copying in biochemical systems. Phys. Rev. X. 2017;7:021004.

- 19. Mehta P, Lang AH, Schwab DJ. Landauer in the age of synthetic biology: energy consumption and information processing in biochemical networks. J. Stat. Phys. 2016;162:1153–1166. doi: 10.1007/s10955-015-1431-6.

- 20. Tkačik G, Walczak AM, Bialek W. Optimizing information flow in small genetic networks. Phys. Rev. E. 2009;80:031920. doi: 10.1103/PhysRevE.80.031920.

- 21. Walczak AM, Tkačik G, Bialek W. Optimizing information flow in small genetic networks. II. Feed-forward interactions. Phys. Rev. E. 2010;81:041905. doi: 10.1103/PhysRevE.81.041905.

- 22. Dubuis JO, Tkačik G, Wieschaus EF, Gregor T, Bialek W. Positional information, in bits. Proc. Natl Acad. Sci. USA. 2013;110:16301–16308. doi: 10.1073/pnas.1315642110.

- 23. Monti M, ten Wolde PR. The accuracy of telling time via oscillatory signals. Phys. Biol. 2016;13:035005. doi: 10.1088/1478-3975/13/3/035005.

- 24. Friedlander T, Prizak R, Guet CC, Barton NH, Tkačik G. Intrinsic limits to gene regulation by global crosstalk. Nat. Commun. 2016;7:12307. doi: 10.1038/ncomms12307.

- 25. Carballo-Pacheco M, et al. Receptor crosstalk improves concentration sensing of multiple ligands. Phys. Rev. E. 2019;99:022423. doi: 10.1103/PhysRevE.99.022423.

- 26. Mora T. Physical limit to concentration sensing amid spurious ligands. Phys. Rev. Lett. 2015;115:038102. doi: 10.1103/PhysRevLett.115.038102.

- 27. Cepeda-Humerez SA, Rieckh G, Tkačik G. Stochastic proofreading mechanism alleviates crosstalk in transcriptional regulation. Phys. Rev. Lett. 2015;115:248101. doi: 10.1103/PhysRevLett.115.248101.

- 28. Tkačik G, Walczak AM. Information transmission in genetic regulatory networks: a review. J. Phys. Condens. Matter. 2011;23:153102. doi: 10.1088/0953-8984/23/15/153102.
