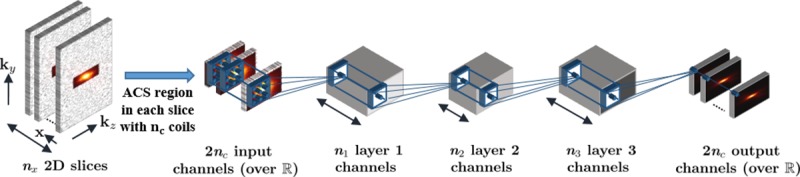

Fig 1. The CNN architecture to learn and enforce the coil self-consistency rule.

The depth of each block denotes the number of output channels of the corresponding layer. All layers except the last were followed by rectified linear unit (ReLU) activation functions. The kernel sizes of the four layers were 5×5, 3×3, 3×3 and 5×5, and the layers had 16, 8, 16 and $2n_c$ output channels, respectively. The 3D k-space data were first inverse Fourier transformed along the fully sampled kx dimension. Subsequently, 2D convolutional kernels were jointly trained on the ACS region of the resulting 2D slices to learn the self-consistency rule.
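The layer configuration described above can be written down as a small bookkeeping sketch. This is an illustration only, not the authors' implementation. It assumes that the network input has the same number of channels as its output (for example, the real and imaginary parts of each coil stacked as channels), that convolutions use stride 1 without dilation, and that each layer has a bias term; none of these details is stated in the text. The names `ConvLayer`, `build_layers`, `receptive_field` and `n_weights` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvLayer:
    kernel: int   # side length of the square 2D kernel
    in_ch: int    # number of input channels
    out_ch: int   # number of output channels
    relu: bool    # True if the layer is followed by a ReLU


def build_layers(n_out):
    """Return the four layers from the caption; n_out is the final channel count.

    Assumption: the input also has n_out channels (not stated in the text).
    """
    kernels = [5, 3, 3, 5]
    out_channels = [16, 8, 16, n_out]
    layers = []
    in_ch = n_out
    for i, (k, out_ch) in enumerate(zip(kernels, out_channels)):
        # Every layer except the last one is followed by a ReLU.
        layers.append(ConvLayer(k, in_ch, out_ch, relu=(i < len(kernels) - 1)))
        in_ch = out_ch
    return layers


def receptive_field(layers):
    """Side length of the input neighbourhood seen by one output sample.

    Assumes stride 1 and no dilation, so each k x k layer adds k - 1.
    """
    return 1 + sum(layer.kernel - 1 for layer in layers)


def n_weights(layers, bias=True):
    """Total number of trainable parameters (bias terms are an assumption)."""
    total = 0
    for layer in layers:
        total += layer.kernel ** 2 * layer.in_ch * layer.out_ch
        if bias:
            total += layer.out_ch
    return total


if __name__ == "__main__":
    layers = build_layers(n_out=16)  # e.g. 2 * 8 for a hypothetical 8-coil array
    for layer in layers:
        print(layer)
    print("receptive field:", receptive_field(layers))
    print("trainable parameters:", n_weights(layers))
```

Under these assumptions, the stacked 5×5, 3×3, 3×3 and 5×5 kernels give each output sample a 13×13 neighbourhood in the 2D slice, which is the effective extent over which the learned self-consistency rule relates neighbouring samples.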