Abstract

Accurate perception of emotional (facial) expressions is an essential social skill. It is currently debated whether emotion categorization in infancy emerges in a “broad-to-narrow” pattern and the degree to which language influences this process. We used a habituation paradigm to explore: (a) whether 14- and 18-month-old infants perceive different facial expressions (anger, sadness, disgust) as belonging to a superordinate category of negative valence, and (b) how verbal labels influence emotion category formation. Results indicated that infants did not spontaneously form a superordinate category of negative valence (Experiments 1 and 3). However, when a novel label (“toma”) was added to each event during habituation trials (Experiments 2 and 4), infants formed this superordinate valence category when habituated to disgust and sadness expressions (but not when habituated to anger and sadness expressions). These labeling effects were obtained with two stimulus sets (Radboud Faces Database and NimStim), even when controlling for the presence of teeth in the expressions. The results indicate that infants, at 14 and 18 months of age, show limited superordinate categorization based on the valence of different negative facial expressions. Specifically, infants formed this abstract emotion category only when labels were provided, and the labeling effect depended on which emotions were presented during habituation. These findings have important implications for developmental theories of emotion and affective science.

Keywords: emotion perception, infancy, categorization, language, facial expressions

Accurate perception of emotional (facial) expressions is an essential social skill. Children who can correctly infer and appropriately respond to others’ emotions have improved social-emotional, academic, and occupational outcomes (Grinspan, Hemphill, & Nowicki, 2003; Izard et al., 2001). Thus, it is important to facilitate and understand the development of emotion perception in early childhood, starting in infancy. Many developmental researchers have suggested that preverbal infants have an innate or early-emerging ability to perceive facial expressions in terms of narrow/basic-level categories, such as “happiness,” “anger,” and “fear” (Izard, 1994; Leppänen & Nelson, 2009; Walker-Andrews, 1997). It has been hypothesized that infants form these basic-level categories before grouping facial expressions into broad/superordinate categories, such as positive and negative valence (Quinn et al., 2011). In contrast, many studies have found that preschoolers have superordinate, valence-based emotion categories (e.g., negative valence) that slowly differentiate into basic-level categories (e.g., anger, disgust) over the first decade of life (for a review, see Widen, 2013). Researchers have extrapolated these findings to hypothesize that: (a) younger, preverbal infants also (exclusively) have superordinate categories for facial expressions, and/or (b) language is fundamental to the acquisition of these categories (Barrett, 2017; Lindquist & Gendron, 2013; Shablack & Lindquist, 2019; Widen, 2013).

To date, it is unknown whether emotion categorization in infancy emerges in a “narrow-to-broad” or “broad-to-narrow” pattern and whether language influences this process. The current studies are the first to test: (a) whether infants spontaneously form superordinate valence-based categories of negative facial expressions, and (b) how language influences emotion categorization in infancy.

Infant Emotion Categorization

Basic-level categorization.

These questions about the progression of infants’ emotion categorization intersect a larger debate on the nature of emotion itself. Here, it is important to emphasize that although this debate, in part, focuses on the experience/expression of emotion, our focus is on the perception of others’ emotions (for further discussion, see Hess, 2017; Hoehl & Striano, 2010). With regard to emotion perception, there is currently considerable disagreement as to whether certain “basic” emotions have universally “recognized,” evolutionarily based signals. Specifically, the “classical” or “common” view of emotions supposes that a specific set of emotions (happiness, sadness, anger, fear, surprise, and disgust) have corresponding facial expressions that are easily identified in others (Ekman, 1994; Izard, 1994). Many infancy researchers endorse the notion (explicitly or implicitly) that preverbal infants have a biological preparedness to “recognize” facial expressions in terms of these basic-level categories (Izard, 1994; Jessen & Grossmann, 2015; Leppänen & Nelson, 2009; Walker-Andrews, 1997).

Prior studies on infants’ emotion categorization lend some support to the idea that infants can form basic-level emotion categories (Ruba & Repacholi, in press). In looking-time categorization studies, infants are habituated/familiarized to pictures of multiple models (i.e., people) expressing one emotion (e.g., happiness). At test, infants are thought to form an emotion category if they (a) do not recover looking time to novel models expressing the habituation emotion (e.g., happiness), and (b) recover looking time to familiar models expressing a novel emotion (e.g., fear) (Quinn et al., 2011). The most consistent finding from these studies is that, by 7 months of age, infants can form a basic-level category of happiness (i.e., after habituation to happy expressions) and differentiate this category from sad, angry, and fearful expressions at test (Amso, Fitzgerald, Davidow, Gilhooly, & Tottenham, 2010; Bornstein & Arterberry, 2003; Cong et al., 2019; Kotsoni, de Haan, & Johnson, 2001; Safar & Moulson, 2017; Walker-Andrews, Krogh-Jespersen, Mayhew, & Coffield, 2011). Infants at this age can also form a basic-level category of anger during habituation and differentiate this category from happy, sad, fear, and disgust expressions at test (Caron, Caron, & Myers, 1985; Lee, Cheal, & Rutherford, 2015; Ruba, Johnson, Harris, & Wilbourn, 2017; Serrano, Iglesias, & Loeches, 1995; White et al., 2019). Thus, it appears that in the first year of life, infants are beginning to form basic-level categories for some facial expressions of emotion.

However, critics of this “classical” emotion view have challenged this interpretation of the infant categorization literature. In particular, “constructionist” views of emotion reject the idea of universal, “basic” emotions, arguing instead that both emotion expression and perception are highly variable processes (Barrett, 2017; Barrett, Adolphs, Marsella, Martinez, & Pollak, 2019) (see Footnote 1). With respect to the infancy literature, constructionists contend that infants form basic-level emotion categories on the basis of salient facial features, rather than affective meaning (Barrett, Lindquist, & Gendron, 2007). For example, infants may form categories of anger facial expressions because stereotypical anger expressions display “furrowed eyebrows,” but infants may not appreciate that these expressions may convey “danger” (Ruba et al., 2017). In other words, infants are thought to form “perceptual” emotion categories, rather than “conceptual” categories. To the degree that preverbal infants do attribute affective meaning to facial expressions, constructionists argue that this meaning is based on valence (positive vs. negative) and level of arousal or activation (high vs. low): two dimensions which characterize all “basic” emotions (Russell, 1980). These broad/superordinate emotion concepts are thought to gradually narrow into basic-level emotion concepts over the first decade of life (Widen, 2013).

Perceptual or conceptual categories?

Thus, there is disagreement as to whether infant responses in emotion categorization tasks reflect only “perceptual” abilities or a conceptual “understanding” of facial expressions. A handful of studies have attempted to address this question by manipulating salient facial features, such as the presence versus absence of teeth. If infants are able to form an emotion category (e.g., happiness) over perceptually variable facial expressions (e.g., small, closed-mouth smiles and big, toothy smiles), then infants may not simply be responding to specific facial features. In fact, several studies have found that 5- to 12-month-olds are able to form a category for happiness when the expressions vary in intensity across the habituation and test trials (Bornstein & Arterberry, 2003; Kotsoni et al., 2001; Lee et al., 2015; Ludemann & Nelson, 1988; but see Cong et al., 2019; Phillips, Wagner, Fells, & Lynch, 1990).

In contrast, one study (Caron et al., 1985) has been frequently cited as evidence that infants only categorize facial expressions on a perceptual basis. In this study, after habituation to “non-toothy” happy expressions, 4- to 7-month-olds showed heightened attention to a novel model expressing “toothy” happiness at test. This finding suggests that infants did not perceive the two happy expressions as belonging to the same category. However, this does not necessarily imply that infants did not “understand” the meaning of these two facial expressions. For example, although adults conceptually understand facial expressions, their emotion categorization is also influenced by salient facial features (Ruba, Wilbourn, Ulrich, & Harris, 2018). Further, this sensitivity to salient facial features in infancy seems to decrease over the first year of life (Caron et al., 1985; Ludemann, 1991). It is possible that older infants, who process faces in a more holistic manner (Cohen & Cashon, 2001), would not be influenced by these perceptual cues. A secondary aim of the current studies was to explore this possibility.

Superordinate categorization?

Although infants in the first year of life can form basic-level emotion categories (which may or may not be perceptual in nature), it is unclear whether infants also form superordinate categories of facial expressions. Superordinate categories are based on abstract features of emotion, such as valence or arousal. For instance, while infants categorize anger expressions as distinct from disgust expressions (i.e., a basic-level category), they may not recognize that both anger and disgust are “negative”, “high arousal” emotions (i.e., a superordinate category). Thus, superordinate categories are more likely to be “conceptual” categories, based on some affective meaning, rather than perceptual information alone.

To date, only one study has examined whether infants can form superordinate categories of facial expressions. Ludemann (1991) habituated 7- and 10-month-olds to four people expressing happy and pleasant-surprise expressions. At test, 10-month-olds (but not 7-month-olds) looked longer at novel people expressing anger and fear compared to novel people expressing the habituated emotions of happiness and pleasant-surprise. At first glance, these results suggest that the older infants may have formed a category of positive valence (happiness and pleasant-surprise) that was distinct from each of the two negative emotions. However, an alternative interpretation is that infants simply perceived that the happy and surprised expressions were perceptually distinct from the anger and fearful expressions. Quinn and colleagues (2011) suggest that in order to determine whether infants formed a superordinate emotion category (based on positive valence), a different positive emotion (e.g., interest) would need to be included in the test trials. In a similar vein, infants could be habituated to two negative emotions (e.g., disgust, sadness) and presented with a novel, negative emotion at test (e.g., anger) to determine whether they have formed a category of negative valence. The current studies explore this latter possibility.

There is reason to believe that infants can form a superordinate category of negative valence. Social referencing studies, for instance, have found that infants differentially respond to others’ emotional expressions based on valence. Specifically, by 12 months of age, infants will approach an ambiguous object that is the target of a happy expression, but will avoid an ambiguous object that is the target of a negative emotional expression (e.g., disgust) (Hertenstein & Campos, 2004; Moses, Baldwin, Rosicky, & Tidball, 2001; Repacholi, 1998). More importantly, infants respond with behavioral avoidance to a variety of negative emotional expressions, such as anger, disgust, and fear (Martin, Maza, McGrath, & Phelps, 2014; Sorce, Emde, Campos, & Klinnert, 1985; Walle, Reschke, Camras, & Campos, 2017; but see Ruba, Meltzoff, & Repacholi, 2019). This suggests that infants may view all of these expressions as members of the same negative emotion category. However, with the exception of Ludemann (1991), no prior research has tested whether infants form superordinate, valence-based emotion categories in a categorization task.

Does Language Influence Emotion Categorization in Infancy?

The current studies also explore whether and how language influences emotion categorization in infancy. The importance of language to emotion categorization has been extensively supported by research with verbal children and adults. For instance, the inclusion of emotion labels in sorting tasks improves emotion categorization accuracy (Nook, Lindquist, & Zaki, 2015; Russell & Widen, 2002; Widen & Russell, 2004), while reduced accessibility to emotion labels leads to slower and less accurate emotion categorization (Gendron, Lindquist, Barsalou, & Barrett, 2012; Lindquist, Barrett, Bliss-Moreau, & Russell, 2006; Lindquist, Gendron, Barrett, & Dickerson, 2014; Ruba et al., 2018). According to one constructionist theory—the “theory of constructed emotion” (Barrett, 2017)—children and adults use language as context for the categorization of emotional expressions. In particular, emotion labels (e.g., “happy”) facilitate categorization because they impose a categorical structure on otherwise variable emotional expressions. For example, the label “anger” can refer to toothy and non-toothy grimaces, expressed in a variety of contexts across different people. Without this label, “anger” expressions may not share enough similarities to group them together as members of the same category (Fugate, 2013; Fugate, Gouzoules, & Barrett, 2010). While the “theory of constructed emotion” has made explicit predictions about the role of language in subordinate emotion categorization (e.g., anger), there has been less discussion on the role of language in superordinate emotion categorization (e.g., negative valence). Recently, supporters of this theory have argued that subordinate emotion categorization, similar to superordinate emotion categorization, is a process of forming abstract categories (Hoemann et al., in press). Thus, language should have a similar role in forming various types of abstract emotion categories, subordinate or superordinate.

It has been further argued that language is particularly important for emotion categorization in infancy, when emotion labels are first learned (Barrett, 2017). However, there has been no published work examining whether labels facilitate emotion categorization in infancy. Nevertheless, studies have documented the impact of labels on infants’ categorization of objects and object properties (Balaban & Waxman, 1997; Ferry, Hespos, & Waxman, 2010). In these studies, infants are familiarized/habituated to different objects from a category (e.g., cars and airplanes), which are presented with a single label (e.g., “vehicle”) (Waxman & Markow, 1995). The addition of a label to these trials has a facilitative effect on infants’ categorization of objects (Booth & Waxman, 2002; LaTourrette & Waxman, 2019) and spatial relations, such as “containment” (Casasola, 2005) and “path” (Pruden, Roseberry, Göksun, Hirsh-Pasek, & Golinkoff, 2013). This facilitative effect appears to be unique to verbal labels, insofar as infants do not form these categories when presented with a pure tone sequence or backward human speech (Balaban & Waxman, 1997; Ferry, Hespos, & Waxman, 2013; Fulkerson & Waxman, 2007). One explanation for these effects is that verbal labels prompt infants to search for non-obvious commonalities between objects (Althaus & Mareschal, 2014; Waxman & Markow, 1995). It is possible that similar labeling effects may be found when facial expressions are used as stimuli.

Current Studies

The first primary aim of the current studies was to determine whether infants perceive that different negative facial expressions (anger, sadness, disgust) all belong to a superordinate category of negative valence. This information can inform debates about whether emotion categorization follows a “broad-to-narrow” or “narrow-to-broad” trajectory in infancy. Given the research on infant social referencing (Sorce et al., 1985) and preschool emotion categorization (Widen, 2013), we expected that infants would be able to form superordinate emotion categories. The second primary aim of the current studies was to explore whether and how verbal labels influence infants’ emotion categories. We expected that labels would alter infants’ emotion categorization, in line with hypotheses made by the “theory of constructed emotion” (Barrett, 2017) and research on object categorization (Waxman & Markow, 1995). A secondary aim of the current studies was to explore these questions using facial expressions from two different stimulus sets: one that controlled for the presence of teeth displayed in the facial expressions (Tottenham et al., 2009), and one that did not (Langner et al., 2010).

Across four studies, 14- and 18-month-olds were tested in a habituation-categorization paradigm. These ages were chosen since emotion labels begin to appear in infants’ productive vocabularies around 18 months (Ridgeway, Waters, & Kuczaj, 1985). Fourteen-month-olds were tested to capture any developmental differences in emotion categorization prior to the acquisition of emotion labels. At least one study has found that labels facilitate categorization of novel objects for 18-month-olds, but not for 14-month-olds (Booth & Waxman, 2002). Negative facial expressions were examined, given that four of the five emotions that are considered “universal” are negatively valenced (i.e., sadness, anger, fear, disgust) (Ekman, 1994). Negative emotions provide a wealth of social information for infants, and these expressions are thought to be important from an evolutionary perspective (see Repacholi, Meltzoff, Hennings, & Ruba, 2016; Repacholi, Meltzoff, Toub, & Ruba, 2016; Vaish, Grossmann, & Woodward, 2008). Consequently, it would be beneficial for infants to be able to form negative emotion categories.

Experiment 1

Experiment 1 tested whether infants could form a superordinate category of negative valence. Infants were habituated to two different negative facial expressions (e.g., disgust and sadness) expressed by three people. After habituation, infants were shown four test trials. Forming an abstract, superordinate category requires that infants treat a novel instance of the abstract category as familiar (Casasola, 2005; Quinn et al., 2011). Thus, if infants formed a superordinate category of negative valence, their looking time to a negative familiar event (a person and emotion seen during habituation; e.g., disgust) should not differ from their looking time to a negative novel face (a person not seen during habituation, expressing one of the habituation emotions; e.g., sadness) and a negative novel emotion (a person seen during habituation expressing a novel, negative emotion; e.g., anger). In other words, even though infants have not seen the two negative novel events before, their looking time should be equivalent to the negative familiar event (i.e., they should treat the novel events as familiar). Further, if infants formed a superordinate category, their looking time to each of these three negative emotions should also be significantly shorter than their looking time to a positive novel emotion (a person seen during habituation expressing a novel, positive emotion, i.e., happiness). We predicted that infants would exhibit this pattern of looking, that is, they would form a superordinate emotion category.

Methods

Participants.

The participants were 48 (24 female) 14-month-olds (M = 14.08 months, SD = .15 months, range = 13.81 – 14.40 months) and 48 (24 female) 18-month-olds (M = 18.10 months, SD = .19 months, range = 17.72 – 18.41 months). A power analysis indicated that a sample size of 48 infants (24 for each age/condition) would be sufficient to detect reliable differences in a 4 (Trial) x 2 (Condition) x 2 (Age) mixed-model design, assuming a medium effect size (f = .25) at the .05 alpha level with a power of .80. This was pre-selected as the stopping rule for the study. An additional 31 infants (24 18-month-olds) were tested but excluded from the final analyses for the following reasons: failure to meet the habituation criteria, described below (n = 7; 5 18-month-olds); failure to finish the study due to fussiness (n = 15; 14 18-month-olds); and fussiness/inattentiveness that led to difficulties with accurate coding (n = 9; 5 18-month-olds). To this latter point, the blind, online coder marked the tested infant as likely too fussy and/or inattentive for coding to be reliable. Another blind coder confirmed this decision during secondary offline coding. The high attrition rate for the 18-month-olds is similar to other emotion categorization studies with this age group (Ruba et al., 2017).

Infants were recruited from a university database of parents who expressed interest in having their infants participate in research studies. All infants were healthy, full-term, and had normal birth weight. Infants were identified as Caucasian (81%, n = 78), multiracial (15%, n = 14), Asian (3%, n = 3), or African American (1%, n = 1). About 7% (n = 7) of infants were identified as Hispanic or Latino. Infants came from middle and upper-middle class families in which English was the primary language (at least 75% exposure to English). The study was conducted following APA ethical standards and with approval of the Institutional Review Board (IRB) at the University of Washington (Approval Number: 50377, Protocol Title: “Emotion Categories Study”). Infants were randomly assigned to the Anger-Sad or the Disgust-Sad conditions.

Stimuli.

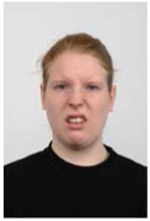

Semi-dynamic events were created in iMovie, using static images from the Radboud Faces Database (for validation information, see Langner et al., 2010). Pictures of neutral, sad, anger, disgust, and happy facial expressions were chosen as stimuli. Each event began with a picture of an adult female displaying a neutral expression. After 1.5s, a picture of the person’s facial expression (e.g., anger) appeared. This static expression was presented for 3.5s before a black screen appeared, which lasted for 1s. These 6s events were looped five times, without pause, to create a 30s video, which comprised a single trial in the study.
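The timing of each event and trial follows directly from the segment durations given above. The short Python sketch below only checks that arithmetic; the constant names are ours and are not part of the stimulus-construction procedure, which was carried out in iMovie.

```python
# Segment durations in seconds, taken from the stimulus description.
NEUTRAL_S = 1.5      # neutral expression shown first
EXPRESSION_S = 3.5   # emotional expression (e.g., anger)
BLANK_S = 1.0        # black screen closing each event
LOOPS = 5            # number of times the event is looped per trial

event_s = NEUTRAL_S + EXPRESSION_S + BLANK_S   # duration of one event
trial_s = event_s * LOOPS                      # duration of one trial video

print(f"event = {event_s:.1f} s, trial = {trial_s:.1f} s")
```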

Three of the four “universal” negative emotions—anger, disgust, and sadness—were used in the current studies. Fear was excluded because previous research (Grossmann & Jessen, 2017; Kotsoni et al., 2001), as well as our own pilot testing, suggested that infants have a spontaneous preference for fearful expressions and dishabituate to these faces at test. Thus, of the three negative emotions available for use, two emotions were used during the habituation trials, while the third emotion was used for the novel negative emotion test trial (see “Procedure”). During the habituation trials, infants viewed either anger and sad (Anger-Sad condition) or disgust and sad expressions (Disgust-Sad condition). An Anger-Disgust condition was not included given that (a) infants tend to form more inclusive categories when there is perceptual overlap among the exemplars (Oakes, Coppage, & Dingel, 1997), and (b) anger and disgust expressions have a high degree of perceptual overlap (Widen & Russell, 2013).

Apparatus.

Each infant was tested in a small room, divided into two sections by an opaque curtain. In one half of the room, infants sat on their parent’s lap approximately 60cm away from a 48cm color computer monitor and audio speakers. A camera was located approximately 10cm above the monitor and focused on the infant’s face to capture their looking behavior. In the other half of the room, behind the curtain, the experimenter sat at a table with a laptop computer (connected to the testing monitor). A secondary monitor displayed a live feed from the camera that was focused on the infant’s face. The experimenter used this live feed to record infants’ looking behavior during each trial. The Habit 2 software program (Oakes, Sperka, & Cantrell, 2015) was used to present the stimuli, record infants’ looking times, and calculate the habituation criteria (described below).

Procedure.

After obtaining parental consent, infants were seated on their parent’s lap in the testing room. During the session, parents looked down and were asked not to speak to their infant or point to the screen. Before each habituation and test trial, an “attention-getter” (i.e., a blue flashing, chiming circle) directed infants’ attention to the monitor. The experimenter began each trial when the infant was looking at the monitor and recorded the duration of the infant’s looking behavior during that trial. For a look to be counted, infants had to look continuously for at least 2s. Each habituation and test trial played until infants looked away for more than two continuous seconds or until the 30s trial ended.

Infants first saw a pre-test trial (i.e., plush cow toy rocking back and forth) designed to acclimate them to the task. The subsequent habituation trials varied based on condition. In the Anger-Sad condition, infants saw three different people expressing anger and sadness, and in the Disgust-Sad condition, infants saw three different people expressing disgust and sadness. These six habituation events (i.e., three people, each expressing the two negative emotions) were randomized and presented in blocks. Prior research suggests that 18-month-olds have difficulty forming abstract categories with a large number of exemplars during habituation (Casasola, 2005). Thus, the habituation set was limited to six events. The habituation trials continued until infants’ looking time across the last three trials decreased 50% or more from their looking time during the first three consecutive habituation trials or until 18 habituation trials were presented. Only infants who met the habituation criteria were included in the final analyses.
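The habituation criterion described above can be sketched in a few lines of Python. This is an illustration rather than the Habit 2 implementation: the function name and the example looking times are hypothetical, and we assume that the three-trial comparison window slides one trial at a time and never overlaps the three baseline trials, which the text does not specify.

```python
from typing import Optional, Sequence

MAX_TRIALS = 18    # maximum number of habituation trials (from the text)
WINDOW = 3         # trials in the baseline and in the comparison window
CRITERION = 0.50   # required proportional decrease in looking time


def habituation_trial(looking_times: Sequence[float]) -> Optional[int]:
    """Return the 1-based trial on which the criterion is first met, else None.

    Assumptions (not stated in the text): the comparison window slides one
    trial at a time and does not overlap the three baseline trials.
    """
    times = list(looking_times)[:MAX_TRIALS]
    if len(times) < 2 * WINDOW:
        return None
    baseline = sum(times[:WINDOW])
    for end in range(2 * WINDOW, len(times) + 1):
        recent = sum(times[end - WINDOW:end])
        if recent <= (1 - CRITERION) * baseline:
            return end
    return None


# Hypothetical looking times (seconds) for one infant, not data from the study.
example = [25, 22, 20, 18, 15, 12, 10, 8, 7]
print(habituation_trial(example))       # trial on which the criterion is met
print(habituation_trial([20.0] * 18))   # None: criterion never met in 18 trials
```

Under these assumptions, an infant whose looking never drops to half of the baseline within 18 trials returns `None`, which corresponds to the exclusion rule stated above.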

After the habituation trials, infants were presented with four test trials. The trials were selected based on previous research with habituation-categorization paradigms (Casasola & Cohen, 2002; Ruba et al., 2017). For purposes of “stimulus sampling” (Wells & Windschitl, 1999), and to confirm that infants’ emotion categories were generalizable across multiple people, the person displaying the emotion was varied during the test trials. The selected familiar/novel people were counterbalanced across participants, and the presentation order of the test stimuli was blocked and randomized (see Table 1 for example stimuli). Further, we assumed that infants would not simply form a superordinate category for familiar faces during the habituation trials.

Table 1.

Sample habituation and test events for Experiments 1 and 2. Habituation and test events were presented in a randomized order. Pictures reprinted with permission from the creators of the Radboud Faces Database. For more details, see Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D., Hawk, S., & van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition & Emotion, 24(8), 1377–1388. doi: 10.1080/0269993090348507

| Condition | Habituation Stimuli | Test: Negative Familiar Event | Test: Negative Novel Face | Test: Negative Novel Emotion | Test: Positive Novel Emotion |
|---|---|---|---|---|---|
| Disgust-Sad | Three people, each expressing disgust and sadness [images] | disgust | sadness | anger | happiness |
| Anger-Sad | Three people, each expressing anger and sadness [images] | anger | sadness | disgust | happiness |

The following test trials were used in the current study. First, the negative familiar event test trial was a person seen during habituation, expressing one of the habituation emotions (e.g., sad person 1). The negative novel face test trial depicted a new person, not seen during habituation, expressing one of the habituation emotions (e.g., anger person 4). We included this trial in order to test whether infants would generalize a superordinate emotion category to a novel person (not seen during habituation). If infants were to include a novel exemplar in their superordinate category, this would provide stronger evidence that this category was based on emotion rather than person identity. The negative novel emotion test trial depicted a person seen during habituation expressing a novel, negative emotion (e.g., disgust person 2), which was either disgust (Anger-Sad condition) or anger (Disgust-Sad condition). Lastly, the positive novel emotion test trial depicted a person seen during habituation expressing a novel, positive emotion, which was always happiness (e.g., happy person 3). The negative novel emotion and positive novel emotion test trials employed familiar people (seen during habituation), so that infants’ responses could be attributed to novelty of the emotion only.

Scoring.

Infants’ looking behavior was live-coded by a trained research assistant. The coder was blind to which stimuli the infant was currently viewing during the habituation and test trials. A second research assistant, who was also display-blind, rescored 25% of the tapes (n = 24) offline. Reliability was excellent for duration of looking on each trial, r = .93, p < .001.

Results

For each experiment, all of the statistical tests were two-tailed and alpha was set at .05. We used Fisher’s least significant difference (LSD) procedure, such that if the omnibus analysis was statistically significant, then follow-up comparisons were conducted without any correction. All analyses were conducted in R (R Core Team, 2014), and figures were produced using the package ggplot2 (Wickham, 2009). Analysis code can be found here: https://osf.io/dgv3k/?view_only=057b02400528409aaa83cae8903518d6.
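The gating logic of Fisher’s LSD procedure can be illustrated with a minimal Python sketch. It is not the authors’ R code: the function name and the p-values below are hypothetical, and the sketch only shows that uncorrected follow-up comparisons are interpreted when, and only when, the omnibus test is significant.

```python
from typing import Dict

ALPHA = 0.05  # alpha level used throughout the paper


def protected_comparisons(omnibus_p: float,
                          pairwise_p: Dict[str, float],
                          alpha: float = ALPHA) -> Dict[str, bool]:
    """Fisher's LSD gate: evaluate uncorrected follow-ups only after a
    significant omnibus test; otherwise report no follow-ups at all."""
    if omnibus_p >= alpha:
        return {}
    return {name: p < alpha for name, p in pairwise_p.items()}


# Hypothetical p-values for illustration only.
results = protected_comparisons(
    omnibus_p=0.001,
    pairwise_p={"A vs. B": 0.004, "A vs. C": 0.030, "B vs. C": 0.400},
)
print(results)
```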

Habituation phase.

To confirm that infants’ looking times sufficiently decreased from habituation to test, a 2 (Age) x 2 (Condition) x 2 (Trials: mean of first three habituation trials vs. negative familiar event test trial) mixed-model analysis of variance (ANOVA) was conducted. This analysis yielded a significant main effect of Age, F(1, 92) = 3.93, p = .050, ηp2 = .04, whereby 18-month-olds looked longer across the trials (M = 14.64s, SD = 8.83s) than the 14-month-olds (M = 13.04s, SD = 8.20s). There was also a significant main effect of Trials, F(1, 92) = 479.44, p < .001, ηp2 = .84, and a significant Trials x Condition interaction, F(1, 92) = 6.85, p = .010, ηp2 = .07. There were no other significant effects.

Follow-up analyses, comparing the habituation and the test trials, were conducted to identify the source of the Trials x Condition effect. Paired sample t-tests revealed that infants’ looking behavior significantly decreased from habituation to test in the Anger-Sad, t(47) = 12.42, p < .001, d = 1.79, and the Disgust-Sad conditions, t(47) = 18.71, p < .001, d = 2.70. Hence, infants had not reached the habituation criteria by chance (Oakes, 2010). However, infants in the Disgust-Sad condition had significantly shorter looking times to the negative familiar event (M = 6.05s, SD = 2.96s) compared to infants in the Anger-Sad condition (M = 7.95s, SD = 5.04s), t(94) = 2.26, p = .026, d = .46. Infants’ looking times during the first three habituation trials did not differ between the Disgust-Sad condition (M = 21.36s, SD = 5.59s) and the Anger-Sad condition (M = 19.99s, SD = 6.09s), t(94) = 1.14, p > .25, d = .23. Thus, the decrease in infants’ looking times from habituation to test was more marked in the Disgust-Sad condition relative to the Anger-Sad condition.
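As a rough check on the between-condition comparison of the negative familiar event, the effect size and test statistic can be approximately recovered from the reported means and standard deviations. The Python sketch below assumes a pooled-variance (Student’s) independent-samples t-test with n = 48 per condition, which is consistent with the reported 94 degrees of freedom but is our assumption; the helper names are ours, and small discrepancies from the reported values are expected because the inputs are rounded.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Cohen's d: mean difference in pooled-SD units."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def student_t(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Pooled-variance t statistic with n1 + n2 - 2 degrees of freedom."""
    se = pooled_sd(sd1, n1, sd2, n2) * math.sqrt(1 / n1 + 1 / n2)
    return (m1 - m2) / se


# Reported summary statistics for the negative familiar event (seconds).
anger_sad = (7.95, 5.04, 48)     # M, SD, n (n assumed equal across conditions)
disgust_sad = (6.05, 2.96, 48)

d = cohens_d(*anger_sad, *disgust_sad)
t = student_t(*anger_sad, *disgust_sad)
print(f"d = {d:.2f}, t(94) = {t:.2f}")
```

The recomputed values land close to the reported d = .46 and t(94) = 2.26.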

Test phase.

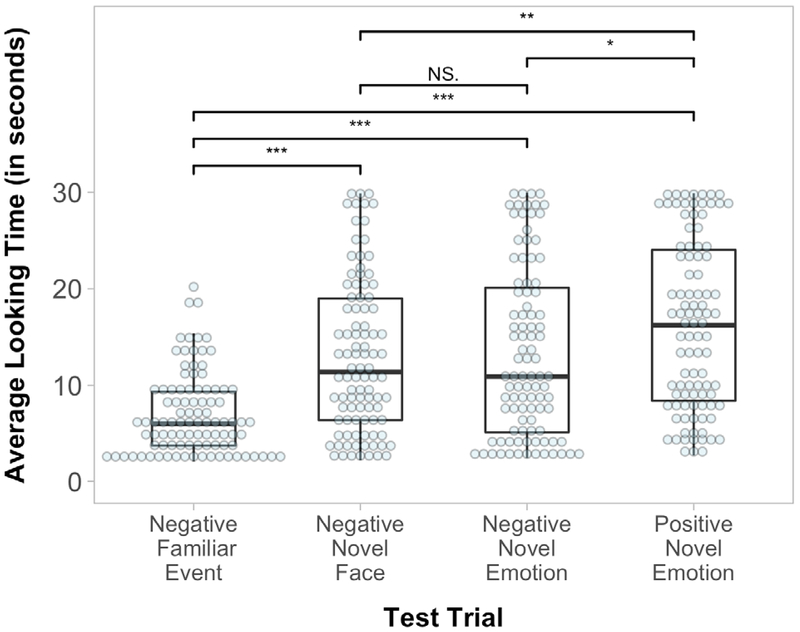

Infants’ looking times during the test trials were analyzed in a 4 (Test Trial) x 2 (Age) x 2 (Condition) mixed-model ANOVA. This analysis yielded a significant main effect of Test Trial, F(3, 276) = 31.70, p < .001, ηp2 = .26. Follow-up comparisons between the test trials were conducted using paired t-tests (see Figure 1). These analyses revealed that infants spent significantly less time looking at the negative familiar event compared to the negative novel face (p < .001, d = .83), the negative novel emotion (p < .001, d = .77), and the positive novel emotion (p < .001, d = 1.06). This pattern of looking suggests that infants did not form a superordinate category of negative valence. Infants also looked significantly longer at the positive novel emotion compared to the negative novel face (p = .008, d = .28) and the negative novel emotion (p = .015, d = .25). Infants’ looking behavior to the negative novel face and the negative novel emotion did not differ (p > .25, d = .03; see Table 2 for cell means). Thus, infants differentiated the three negative emotion test trials from the positive novel emotion. This pattern of results was evident for both the 14- and 18-month-olds when their data were separately analyzed (see Supplementary Materials).

Figure 1.

Experiment 1: Infants’ total looking time during the test trials, *p < .05, **p < .01, ***p < .001 for pairwise comparisons between events.

Table 2.

Mean looking times (and standard deviations) in seconds during the test trials.

| Exp. | Condition | Age (months) | n | Negative Familiar Event | Negative Novel Face | Negative Novel Emotion | Positive Novel Emotion |
|---|---|---|---|---|---|---|---|
| 1 | AS, DS | 14, 18 | 96 | 7.00 (4.22) | 13.07 (8.02) | 13.40 (8.87) | 16.21 (8.79) |
| 2 | AS | 14, 18 | 64 | 11.00 (7.32) | 17.17 (8.84) | 18.36 (9.30) | 18.70 (8.28) |
| 2 | DS | 14, 18 | 64 | 10.72 (6.14) | 12.47 (7.29) | 11.69 (7.05) | 18.10 (8.04) |
| 2 | AS, DS | 14 | 64 | 10.42 (6.58) | 13.26 (7.55) | 14.09 (8.65) | 19.37 (8.21) |
| 2 | AS, DS | 18 | 64 | 11.29 (6.90) | 16.37 (8.99) | 15.96 (9.06) | 17.44 (8.01) |
| 3 | DS | 14 | 24 | 11.35 (7.01) | 7.66 (4.90) | 10.99 (8.87) | 10.65 (8.50) |
| 4 | DS | 14 | 24 | 11.03 (7.07) | 10.95 (7.81) | 10.56 (6.76) | 17.65 (6.78) |

Note: Conditions are Anger-Sad (AS) and Disgust-Sad (DS). For Experiment 1, there were no interactions with Condition or Age; therefore, only the combined means are presented.
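The Experiment 2 rows of Table 2 report the same 128 infants split two ways (by condition and by age), with 64 infants per row. Because the groups are equal in size, the unweighted average of the two condition rows should match the unweighted average of the two age rows, up to rounding of the published means. The Python sketch below performs that cross-check; the helper name `grand_means` is an illustrative choice.

```python
# Experiment 2 cell means from Table 2 (seconds), in the order:
# negative familiar event, negative novel face,
# negative novel emotion, positive novel emotion.
by_condition = {
    "AS": [11.00, 17.17, 18.36, 18.70],
    "DS": [10.72, 12.47, 11.69, 18.10],
}
by_age = {
    14: [10.42, 13.26, 14.09, 19.37],
    18: [11.29, 16.37, 15.96, 17.44],
}


def grand_means(groups):
    """Average equal-sized groups column by column."""
    rows = list(groups.values())
    return [sum(col) / len(col) for col in zip(*rows)]


for a, b in zip(grand_means(by_condition), grand_means(by_age)):
    # The two breakdowns should agree up to rounding of the published means.
    print(f"by condition: {a:.3f}  by age: {b:.3f}")
```

The two breakdowns agree to within .01 s on every test trial.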

In addition, a significant main effect of Age, F(1, 92) = 4.92, p = .029, ηp2 = .05, revealed that 18-month-olds looked longer at the test trials (M = 13.49s, SD = 8.64s) compared to the 14-month-olds (M = 11.35s, SD = 8.01s). Finally, there was a significant main effect of Condition, F(1, 92) = 9.31, p = .003, ηp2 = .09, whereby infants in the Anger-Sad condition looked longer to the test trials (M = 13.90s, SD = 8.77s) compared to infants in the Disgust-Sad condition (M = 10.94s, SD = 7.73s). There were no other significant effects.

Discussion

At both ages and across conditions, infants’ looking time to the negative familiar event was significantly shorter than their looking time to the other two negative emotion test trials (i.e., negative novel face and negative novel emotion). Thus, infants registered which event they had seen during habituation, but they did not form a superordinate category of negative valence. This finding was unexpected, given that social referencing research suggests that infants show similar behavioral responses (i.e., avoidance) to different negative emotions (Martin et al., 2014; Sorce et al., 1985; Walle et al., 2017). The current findings are also inconsistent with predictions made by the “theory of constructed emotion” (Barrett, 2017), which argues that infants have superordinate representations of facial expressions. Instead, taken together with previous research on basic-level emotion categorization (Bornstein & Arterberry, 2003; Kotsoni et al., 2001; Ruba et al., 2017), these findings suggest that infant emotion categorization may progress in a “narrow-to-broad” fashion (Quinn et al., 2011).

Interestingly, infants’ looking times for each of the negative emotion test trials were significantly shorter than their looking time to the positive novel emotion test trial. Thus, infants showed valence-based discrimination of the facial expressions by differentiating each of the three negative emotions (anger, sadness, disgust) from the positive emotion (happiness). This replicates previous findings that infants can discriminate happy expressions from negative facial expressions (e.g., anger, sadness) in the first year of life (Bornstein & Arterberry, 2003; Flom & Bahrick, 2007; Montague & Walker-Andrews, 2001). In other words, while infants’ looking times did not indicate that they perceived the “novel” negative events as equivalent to the “familiar” negative event, infants did seem to make some differentiation between the positive novel emotion and the negative test events. Thus, infants did not form a superordinate category in the “traditional” sense (i.e., they did not treat the “novel” events as “familiar”), but some valence-based differentiation did occur.

Experiment 2

Given that infants did not spontaneously form a superordinate emotion category, Experiment 2 tested whether labeling each habituation event with the same novel word (i.e., “toma”) would facilitate categorization. We predicted that the addition of a label would help infants form a superordinate category for negative emotions, in the same way that labels facilitate categorization of objects and object properties (Casasola, 2005; Pruden et al., 2013; Waxman & Markow, 1995). Specifically, we predicted that: (a) infants’ looking time to the negative familiar event, negative novel face, and negative novel emotion would not differ from one another, and (b) infants’ looking times for each of these three negative emotion test trials would be significantly shorter than their looking times to the positive novel emotion. A few studies have reported that verbal labels are more likely to facilitate category formation for infants with larger vocabularies (Casasola & Bhagwat, 2007; Nazzi & Gopnik, 2001; Waxman & Markow, 1995; but see, Althaus & Plunkett, 2016). Thus, we also examined the relationship between infants’ vocabulary size and their emotion categorization in the presence of labels. Due to their exploratory nature, these analyses are presented in the Supplementary Materials.
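Predictions (a) and (b) amount to a decision rule over the six pairwise comparisons among the four test trials. The Python sketch below encodes that rule under the paper's own convention of reading a non-significant follow-up test as "did not differ". All names and all numeric values are hypothetical and serve only to exercise the rule; they are not results from the study.

```python
ALPHA = 0.05
NEGATIVE = ["familiar", "novel_face", "novel_emotion"]


def pair(x, y):
    """Order-independent key for a pairwise comparison."""
    return tuple(sorted((x, y)))


def formed_superordinate_category(means, p_values, alpha=ALPHA):
    """Apply predictions (a) and (b) to one set of test-trial results."""
    # (a) No pair of negative test trials differs significantly.
    equivalent = all(
        p_values[pair(x, y)] >= alpha
        for i, x in enumerate(NEGATIVE)
        for y in NEGATIVE[i + 1:]
    )
    # (b) Every negative trial is looked at significantly less than the positive one.
    shorter = all(
        means[x] < means["positive"] and p_values[pair(x, "positive")] < alpha
        for x in NEGATIVE
    )
    return equivalent and shorter


# Hypothetical means (s) and p values, used only to exercise the rule.
means = {"familiar": 10.0, "novel_face": 11.0, "novel_emotion": 10.5, "positive": 18.0}
p_values = {
    pair("familiar", "novel_face"): 0.30,
    pair("familiar", "novel_emotion"): 0.60,
    pair("novel_face", "novel_emotion"): 0.70,
    pair("familiar", "positive"): 0.001,
    pair("novel_face", "positive"): 0.002,
    pair("novel_emotion", "positive"): 0.001,
}
print(formed_superordinate_category(means, p_values))
```

If any negative trial differs reliably from another (for example, longer looking to the negative novel face than to the familiar event), the rule returns `False`, which corresponds to the pattern reported for Experiment 1.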

Methods

Participants.

The final sample consisted of 64 (32 female) 14-month-olds (M = 14.11 months, SD = .18 months, range = 13.78 – 14.47 months) and 64 (32 female) 18-month-olds (M = 18.07 months, SD = .23 months, range = 17.62 – 18.48 months). Infants were identified as Caucasian (78%, n = 100), multiracial (16%, n = 20), Asian (5%, n = 7), or Native American (1%, n = 1). About 9% (n = 11) of infants were identified as Hispanic or Latino. An additional 23 infants (13 18-month-olds) were tested but excluded from final analyses for the following reasons: failure to meet the habituation criteria (n = 6 18-month-olds), failure to finish the study due to fussiness (n = 14; 6 18-month-olds), and fussiness/inattentiveness during the study (n = 3; 1 18-month-old). The sample size was larger than Experiment 1 in order to conduct exploratory analyses related to infants’ language development (see Supplementary Materials). Infants were randomly assigned to the Anger-Sad condition or to the Disgust-Sad condition.

Parental report confirmed that very few of these infants were using emotion labels. Only twelve (18-month-old) infants had produced the word “happy,” six (18-month-old) infants had produced the word “sad,” and no infants had produced the words “angry” or “disgusted” (or synonyms). Thus, the majority of infants in this sample did not have emotion labels in their expressive vocabularies, particularly for negative emotions, which is consistent with previous research findings for this age group (Ridgeway et al., 1985).

Stimuli.

The visual stimuli were identical to Experiment 1. However, a label was added to each of the six habituation events. The label was a pre-recorded nonsense word (i.e., “toma”) spoken by a native English-speaking female using infant-directed speech. In each habituation event, the same novel word was spoken twice after the person’s target expression appeared. The novel word was never presented immediately before or during the appearance of the expression. This presentation format increased the likelihood that infants would associate the novel word with the expressions themselves, and decreased the likelihood that infants would: (a) associate the word with the expression change, or (b) make causal attributions (e.g., the word caused the person’s expression to change). This label was only presented with the habituation trials: the test trials were presented in silence, without labels.

Apparatus and Procedure.

The apparatus and habituation procedure were identical to Experiment 1. Infants’ receptive and expressive vocabularies were measured by parental report via the CDI, English Long Form (Fenson et al., 2007).

Scoring.

The scoring procedure was identical to Experiment 1. Reliability was excellent (25% of tapes rescored; n = 32) for duration of looking on each trial, r = .96, p < .001.

Results

Habituation phase.

A 2 (Age) x 2 (Condition) x 2 (Trials) mixed-model ANOVA was conducted. A significant main effect of Trials, F(1, 124) = 567.02, p < .001, ηp2 = .82, revealed that infants looked significantly longer to the first three habituation trials (M = 25.02s, SD = 4.70s) compared to the negative familiar event (M= 10.86s, SD = 6.73s). There were no other significant effects.

Test phase.

Infants’ looking times during the test trials were analyzed in a 4 (Test Trial) x 2 (Age) x 2 (Condition) mixed-model ANOVA. Significant main effects of Test Trial, F(3, 372) = 27.31, p < .001, ηp2 = .18, and Condition, F(1, 124) = 10.86, p = .001, ηp2 = .08, were qualified by a significant Test Trial x Condition interaction, F(3, 372) = 7.05, p < .001, ηp2 = .05. In addition, a significant Test Trial x Age interaction emerged, F(3, 372) = 3.31, p = .020, ηp2 = .03. Follow-up independent t-tests revealed that 18-month-olds looked significantly longer at the negative novel face test trial compared to the 14-month-olds (p = .036, d = .38). There were no significant age group differences among the other test trials (see Table 2 for cell means).

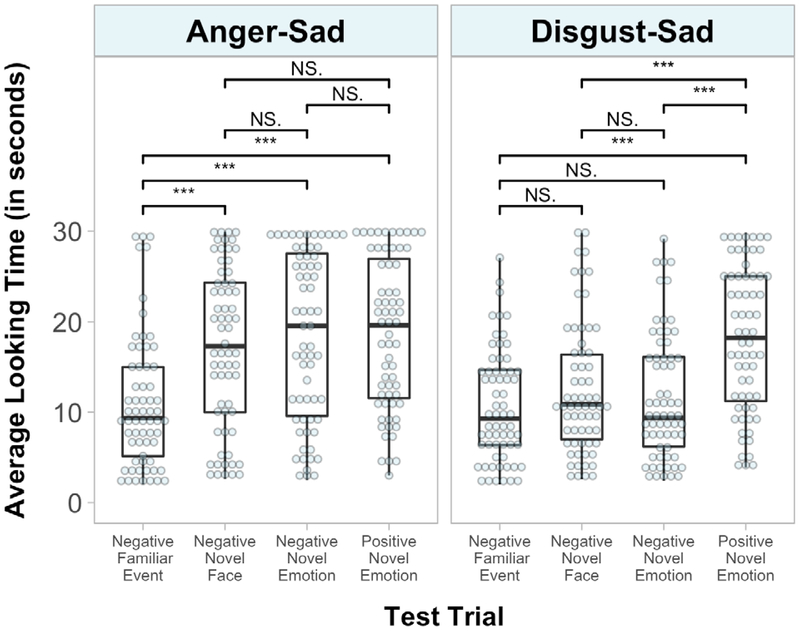

Follow-up analyses to explore the Test Trial x Condition interaction were conducted separately by Condition (see Figure 2). For the Anger-Sad condition, a significant main effect of Test Trial emerged, F(3, 186) = 17.12, p < .001, ηp2 = .22. Follow-up paired t-tests revealed that infants looked significantly less at the negative familiar event compared to the negative novel face (p < .001, d = .58), the negative novel emotion (p < .001, d = .75), and the positive novel emotion (p < .001, d = .80). There were no other significant differences. Thus, as with Experiment 1, infants did not form a superordinate category of negative valence in the Anger-Sad condition.

Figure 2.

Experiment 2: Infants’ total looking time during the test trials, separated by Condition, *** p < .001 for pairwise comparisons between events.

For the Disgust-Sad condition, a significant main effect of Test Trial also emerged, F(3, 186) = 17.25, p < .001, ηp2 = .22. Follow-up paired t-tests revealed that infants looked significantly longer at the positive novel emotion compared to the negative familiar event (p < .001, d = .81), the negative novel face (p < .001, d = .59), and the negative novel emotion (p < .001, d = .64). Critically, however, infants’ looking to the negative familiar event did not differ from the negative novel face (p = .12, d = .20) or the negative novel emotion (p > .25, d = .12), and looking to the negative novel face did not differ from the negative novel emotion (p > .25, d = .09). Thus, infants formed a superordinate category of negative valence in the Disgust-Sad condition (see Table 2 for cell means). For completeness, we also ran the analyses (a) separately for both age groups, and (b) equating the sample size with that of Experiment 1 (see the Supplementary Materials). The same results were obtained for both ages and when the data from only the first 24 infants (per age) tested in each experimental condition were analyzed.

Combined Experiment 1 and Experiment 2 analysis.

To determine whether the addition of a label in Experiment 2 significantly impacted infants’ categorization in comparison to Experiment 1, data for the Disgust-Sad condition were analyzed in a 4 (Test Trial) x 2 (Age) x 2 (Experiment) mixed-model ANOVA. To equate sample sizes across experiments, only the first 24 participants per age group in the Disgust-Sad condition of Experiment 2 were analyzed. This analysis revealed significant main effects of Test Trial, F(3, 276) = 25.98, p < .001, ηp2 = .22, and Experiment, F(1, 92) = 5.23, p = .025, ηp2 = .05, which were qualified by a marginally significant Test Trial x Experiment interaction, F(3, 276) = 2.26, p = .081, ηp2 = .02. Therefore, the pattern of infants’ looking across the test trials did not significantly differ between the two experiments.

Discussion

The results of Experiment 2 suggest that adding a novel verbal label to each habituation event facilitated the formation of a superordinate category of negative valence in the Disgust-Sad condition. In this condition, infants’ looking times to the negative familiar event, negative novel face, and negative novel emotion test trials did not differ from one another (i.e., infants perceived these events to be equivalent). Infants’ looking times to each of the three negative trials were also significantly shorter than their looking to the positive novel emotion test trial. On the other hand, in the Anger-Sad condition, infants did not form a superordinate category of negative valence. Instead, as with Experiment 1, infants attended longer to the negative novel face and the negative novel emotion relative to the negative familiar event. These condition differences are considered further in the General Discussion.

Although infants’ looking times in the Disgust-Sad condition suggest that they formed a superordinate category, this conclusion is tempered by the results of the cross-experiment analysis. When data from Experiments 1 and 2 were analyzed together, the Test Trial x Experiment interaction only approached significance. Thus, the labeling effect requires replication. The findings in the first two experiments are also complicated by the fact that there were clear perceptual differences between some of the facial expression stimuli (see Table 1). Specifically, while the disgust and happy facial expressions displayed teeth, the anger and sad expressions did not. As previously mentioned, it is currently unknown whether and how older infants’ emotion categories are influenced by these salient facial features.

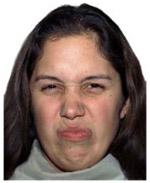

Thus, to replicate and extend the results of the first two experiments, Experiments 3 and 4 were conducted with expressions that did not display teeth (see Table 3, selected from the NimStim Set of Facial Expressions, Tottenham et al., 2009). A new expression database was used because the original database (Radboud Face Database, Langner et al., 2010) did not contain disgust or happy expressions that lacked teeth (see Footnote 2). Given that there were no age differences in Experiments 1 and 2, only 14-month-olds were tested in the subsequent experiments. Further, since infants in the Anger-Sad condition did not show any evidence of categorization, only the Disgust-Sad condition was tested further. Experiment 3 examined whether infants would form a superordinate category of negative valence when the facial expressions did not include teeth.

Table 3.

Sample stimuli for Experiments 3 and 4 (Disgust-Sad condition only). Habituation and test events were presented in a randomized order. Pictures of Model #03 reprinted with permission from the creators of the NimStim Set of Facial Expressions. For more details, see Tottenham, N., Tanaka, J. W., Leon, A. C., McCarry, T., Nurse, M., Hare, T. A., … & Nelson, C. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research, 168(3), 242-249.

| Disgust | Sadness | Anger | Happiness |
|---|---|---|---|
| | | | |

Experiment 3

Methods

Participants.

The final sample consisted of 24 (13 female) 14-month-olds (M = 14.15 months, SD = .18 months, range = 13.71 – 14.40 months). Participants were recruited in the same manner as Experiment 1. Infants were identified as Caucasian (79%, n = 19), multiracial (17%, n = 4), or Asian (4%, n = 1). About 8% (n = 2) of infants were identified as Hispanic or Latino. An additional 11 infants were tested but excluded from final analyses for the following reasons: failure to meet the habituation criteria (n = 3), failure to finish the study due to fussiness (n = 3), and fussiness or inattentiveness during the study (n = 5).

Stimuli.

The stimuli were similar to Experiment 1. The key difference was that pictures from the NimStim Set of Facial Expressions were used (for validation information, see Tottenham et al., 2009). In order to eliminate the potential influence of teeth on infants’ categorization, pictures were chosen that did not show teeth. Models #07 and #08 were used for the negative novel face test event. Models #03, #06, and #09 were used for habituation and all other test events (see Table 3 for pictures of Model #03).

Apparatus and Procedure.

All infants were tested in the Disgust-Sad condition. All other aspects of the apparatus and procedure were identical to Experiment 1.

Scoring.

The scoring procedure was identical to Experiment 1. Reliability was excellent (50% of tapes rescored; n = 12) for duration of looking on each trial, r = .95, p < .001.

Results

Habituation phase.

A paired samples t-test confirmed that infants’ looking behavior significantly decreased from habituation to test, t(23) = 4.96, p < .001, d = 1.01. Infants had significantly longer looking times during the first three habituation trials (M = 21.33s, SD = 5.39s) compared to the negative familiar event (M = 11.35s, SD = 7.01s).

Test phase.

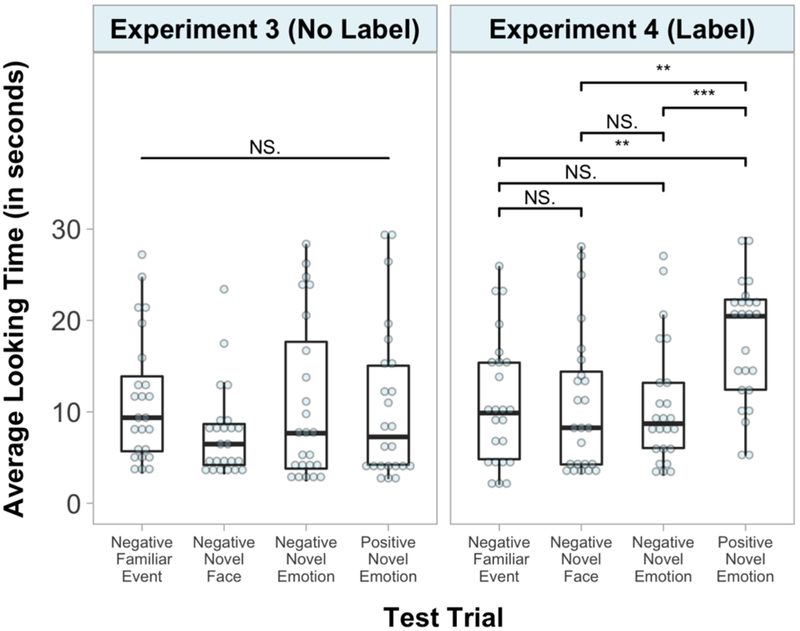

Infants’ looking times during the test trials were analyzed in a repeated-measures ANOVA. This analysis did not yield a significant main effect of Test Trial, F(3, 69) = 1.60, p = .20, ηp2 = .07 (see Table 2 for cell means). Thus, infants’ looking times did not differ between the four test trials (see Figure 3).

Figure 3.

Experiment 3 and Experiment 4: Infants’ total looking time during the test trials, separated by Experiment, ** p < .01, *** p < .001 for pairwise comparisons between events.

Discussion

Similar to Experiment 1, infants in Experiment 3 did not form a superordinate category of negative valence. However, in contrast to Experiment 1, infants in Experiment 3 did not differentiate (a) the positive novel emotion from the negative emotion test trials, or (b) the negative familiar event from the other test trials. In other words, infants did not discriminate between the happy expression and the negative expressions. Even more surprisingly, infants did not track which event they had seen in the habituation trials. One possibility is that Experiment 3 was underpowered (n = 24 14-month-olds) relative to the Disgust-Sad condition of Experiment 1 (n = 48 14- and 18-month-olds). However, this is unlikely, given that the 14-month-olds in the Disgust-Sad condition in Experiment 1 were able to track which event they had seen during habituation (see Supplementary Materials for analysis).

A more likely explanation for the findings in Experiment 3 is that infants were unable to extract the relevant emotional information in the absence of a salient perceptual cue (e.g., teeth). In Experiment 1, the presence versus absence of teeth in the habituation trials may have highlighted the differences between the emotions (i.e., disgust expressions displayed teeth, sad expressions did not), enabling infants to track and differentiate the expressions. Although previous research has suggested that 7- and 10-month-olds will use salient facial features, such as teeth, as the basis for emotion categorization (Caron et al., 1985; Kestenbaum & Nelson, 1990), this is the first study to suggest that infants in the second year of life are still sensitive to these salient perceptual cues. Our claim is not that infants have difficulty deciphering “non-toothy” facial expressions, specifically, but that the lack of salient perceptual cues in the habituation trials may have impaired infants’ categorization. Related to this, the facial expressions selected from the Radboud database (Langner et al., 2010) had generally higher validation scores than the facial expressions selected from the NimStim database (Tottenham et al., 2009). Thus, the NimStim faces employed in Experiment 3 may have been more difficult for infants to differentiate because the facial expressions were less “stereotypical”.

Experiment 4

Experiment 4 aimed to replicate and extend the findings of Experiment 2, that adding a verbal label to each habituation event would facilitate superordinate category formation. Specifically, we predicted that: (a) infants’ looking time to the negative familiar event, negative novel face, and negative novel emotion would not differ from one another, and (b) infants’ looking times for each of these three negative emotion test trials would be significantly shorter than their looking times to the positive novel emotion. In addition, we predicted a significant Test Trial by Experiment interaction when data from Experiments 3 and 4 were analyzed together.

Methods

Participants.

The final sample consisted of 24 (15 female) 14-month-olds (M = 14.09 months, SD = .17 months, range = 13.84 – 14.47 months). Participants were recruited in the same manner as Experiment 1. Infants were identified as Caucasian (75%, n = 18), multiracial (21%, n = 5), and Asian (4%, n = 1). About 12% (n = 3) of infants were identified as Hispanic or Latino. An additional four infants were tested but excluded from final analyses for the following reasons: failure to meet the habituation criteria (n = 2), failure to finish the study due to fussiness (n = 1), and fussiness or inattentiveness during the study (n = 1).

Parental report confirmed that virtually none of these infants were using emotion labels. One infant was reported to say the word “happy”. No infants were reported to have produced the words “sad,” “angry,” or “disgusted” (or synonyms). Thus, nearly all infants in this sample did not have emotion labels in their expressive vocabularies.

Stimuli.

The stimuli were identical to Experiment 3. However, a label (i.e., “toma”) was added to each of the six habituation events in the same manner as Experiment 2. This label was only presented with the habituation trials: the test trials were presented in silence, without labels.

Apparatus and Procedure.

All infants were tested in the Disgust-Sad condition. All other aspects of the apparatus and procedure were identical to Experiment 2.

Scoring.

The scoring procedure was identical to Experiment 2. Reliability was excellent (50% of tapes rescored; n = 12) for duration of looking on each trial, r = .96, p < .001.

Results

Habituation phase.

A paired samples t-test confirmed that infants’ looking behavior significantly decreased from habituation to test, t(23) = 7.15, p < .001, d = 1.46. Infants had significantly longer looking times during the first three habituation trials (M = 24.33s, SD = 5.43s) compared to the negative familiar event (M = 11.03s, SD = 7.07s).

Test phase.

Infants’ looking times during the test trials were analyzed in a repeated-measures ANOVA. This analysis yielded a significant main effect of Test Trial, F(3, 69) = 5.81, p = .001, ηp2 = .20. Follow-up paired t-tests revealed that infants looked significantly longer at the positive novel emotion compared to the negative familiar event (p = .001, d = .76), the negative novel face (p = .007, d = .60), and the negative novel emotion (p = .001, d = .76). Critically, however, infants’ looking to the negative familiar event did not differ from the negative novel face (p > .25, d < .01) or the negative novel emotion (p > .25, d = .05), and looking to the negative novel face did not differ from the negative novel emotion (p > .25, d = .04). Thus, infants formed a superordinate category of negative valence (see Table 2 for cell means).

Combined Experiment 3 and Experiment 4 analysis.

To confirm that the addition of a verbal label impacted infants’ categorization, data from Experiments 3 and 4 were analyzed in a 4 (Test Trial) x 2 (Experiment) mixed-model ANOVA. A significant main effect of Test Trial, F(3, 138) = 4.36, p = .006, ηp2 = .09, and a marginally significant main effect of Experiment, F(1, 46) = 3.55, p = .07, ηp2 = .07, were qualified by a significant Test Trial x Experiment interaction, F(3, 138) = 3.29, p = .023, ηp2 = .07. Therefore, infants’ looking time during the test trials significantly differed between the two experiments.
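The direction of this interaction can be read off the Table 2 cell means by comparing, for each experiment, how much looking increased from the negative familiar event to each of the other test trials. The Python sketch below computes only these descriptive differences; it does not reproduce the ANOVA, and the helper name `recovery` is an illustrative choice.

```python
trials = ["negative novel face", "negative novel emotion", "positive novel emotion"]
# Table 2 means (s): negative familiar event first, then the three trials above.
exp3 = [11.35, 7.66, 10.99, 10.65]   # no label during habituation
exp4 = [11.03, 10.95, 10.56, 17.65]  # label ("toma") during habituation


def recovery(means):
    """Looking time on each other test trial minus looking time on the familiar event."""
    familiar, *others = means
    return [m - familiar for m in others]


for name, r3, r4 in zip(trials, recovery(exp3), recovery(exp4)):
    # r4 - r3 is the descriptive difference between experiments for this trial.
    print(f"{name}: Exp. 3 {r3:+.2f}, Exp. 4 {r4:+.2f}, difference {r4 - r3:+.2f}")
```

Descriptively, the largest between-experiment difference is on the positive novel emotion trial, where looking rose well above the familiar event in Experiment 4 but not in Experiment 3.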

General Discussion

This series of experiments is the first to demonstrate that: (a) in the second year of life, infants do not spontaneously form superordinate categories of negatively valenced facial expressions and (b) verbal labels can help infants form these categories. In Experiments 1 and 3, infants did not form a superordinate category based on negative valence for facial expressions of disgust, anger, and sadness displayed by multiple people. However, when a verbal label (“toma”) was added to each habituation trial in Experiments 2 and 4, infants were able to form this category (in the Disgust-Sad condition). These verbal labeling effects were obtained using facial expressions from two different stimuli sets (Radboud Face Database: Langner et al., 2010; NimStim: Tottenham et al., 2009). The latter controlled for the presence of teeth displayed on the expressions, which therefore provided a more stringent test of infants’ emotion categorization abilities and the facilitative verbal labeling effects.

The addition of a verbal label may have prompted infants to search for (and find) the commonalities between the facial expressions (Waxman & Markow, 1995). The label may have shifted infants’ focus away from the variable features of the stimuli (Althaus & Plunkett, 2016), such as the scrunched noses on the disgust faces and downturned mouths on the sad faces. In turn, the label may have drawn infants’ attention towards the shared, abstract feature of the stimuli: their negative valence. Thus, the addition of a verbal label enabled infants to form a category that included: (a) a familiarized person expressing disgust or sadness (negative familiar event), (b) a new person expressing disgust or sadness (negative novel face), and (c) a familiarized person expressing anger (negative novel emotion). Infants also attended significantly less to these three negative test trials compared to the positive novel emotion. Thus, verbal labels prompted infants to form a superordinate category of negative valence that differed from a novel happy expression.

Habituation Condition Differences

Although the addition of verbal labels in the Disgust-Sad condition enabled infants to form a superordinate category that included anger, sad, and disgust expressions, infants did not form this category when habituated to anger and sad expressions (Anger-Sad condition). Habituation condition differences are common in emotion categorization studies, particularly when infants are habituated to anger (Ruba et al., 2017) or fear (Bornstein & Arterberry, 2003; Kotsoni et al., 2001).

Why did verbal labels help infants form a category of negative valence when habituated to disgust and sadness, but not when habituated to anger and sadness? Prior research suggests that verbal labels do not facilitate category formation if objects do not belong to an obvious category (Waxman & Markow, 1995). Thus, one possibility is that, during the habituation trials, infants viewed the anger and sad expressions as belonging to separate, unrelated categories. Given that both the anger and the sad expressions did not show teeth in Experiments 1 and 2, infants could not have based their categories on this perceptual feature. Instead, emotional arousal may have been the basis for forming these categories. Like emotional valence, emotional arousal is another dimension by which all emotions can be categorized (Russell, 1980). In the current study, anger is “high” arousal, while sadness is “low” arousal (Widen & Russell, 2008). On the other hand, it may have been easier for infants to form a superordinate category during habituation in the Disgust-Sad condition since the two habituation emotions (i.e., disgust and sadness) are closer in arousal level (“moderate” vs. “low” arousal) (Croucher, Calder, Ramponi, Barnard, & Murphy, 2011; Widen & Russell, 2008). It is also the case that anger, disgust, and sadness differ with respect to their behavioral “signal.” While anger expressions are thought to signal behavioral approach, disgust and sad expressions do not (Shariff & Tracy, 2011). This signal value could have been another dimension that infants used to differentiate the anger and sad expressions during habituation in the Anger-Sad condition.

The looking-time methods employed here cannot distinguish between these possibilities. Future research may consider complementary methods, such as eye tracking and EEG, to further explore why infants did not form a superordinate category in the Anger-Sad condition. Such methods have been successfully used to explore other habituation condition differences, specifically, infants’ failure to habituate to fearful facial expressions (Grossmann & Jessen, 2017; Leppänen & Nelson, 2012; Peltola, Hietanen, Forssman, & Leppänen, 2013). For example, eye tracking techniques could elucidate whether infants visually process disgust and sad expressions in a more similar manner than anger and sad expressions.

Narrow-to-Broad Development?

Irrespective of these condition differences, infants in the current studies did not spontaneously form superordinate emotion categories (without verbal labels). These findings are in line with predictions made by Quinn and colleagues (2011), who hypothesize that infants’ emotion categories develop in a “narrow-to-broad” pattern. In other words, infants may initially form basic-level categories of different negative facial expressions (e.g., anger vs. disgust) before appreciating that these facial expressions also belong to a superordinate category of “negative valence.” Interestingly, infant object categorization seems to follow a “broad-to-narrow” pattern, whereby infants form superordinate categories (e.g., mammals) earlier in development than basic-level categories (e.g., cats) (Mandler & McDonough, 1993; Quinn & Johnson, 2000). Therefore, while verbal labels facilitate superordinate categorization for both facial expressions and objects (Waxman & Markow, 1995), emotion category development may follow a different developmental trajectory than object category development. Future research could further investigate the degree to which emotion category development seems to be domain-general versus emotion-specific (Hoemann et al., in press; Quinn et al., 2011). For example, with respect to word learning, researchers could consider how learning labels for emotion categories (e.g., “happy”) functions in a similar or different way from learning labels for object categories (e.g., “ball”) by including both types of categorization tasks within the same experiment.

In contrast, this pattern of results seems to counter predictions made by constructionist theories of emotion, which argue that preverbal infants (exclusively) form superordinate categories of facial expressions (Lindquist & Gendron, 2013; Shablack & Lindquist, 2019; Widen, 2013). Further, these results do not align with the predictions made by the “theory of constructed emotion,” specifically, which argues that labels should facilitate the division of superordinate emotion categories into basic-level categories (Barrett, 2017). Instead, the current studies found that infants did not spontaneously form superordinate categories of negative valence and that verbal labels facilitated the formation of these categories. Based on these findings, an argument can be made to bypass the constructionist hypothesis, and conclude instead (in line with “classical” emotion theories) that preverbal infants perceive facial expressions in terms of narrow/basic-level categories (Izard, 1994; Leppänen & Nelson, 2009; Walker-Andrews, 1997). Overall, prior research seems to support the idea that infants can form these basic-level categories (Bayet et al., 2015; Cong et al., 2019; Ruba et al., 2017; Safar & Moulson, 2017; White et al., 2019), and the present studies found that infants did not form superordinate emotion categories based on valence.

However, it is also important to consider these infant emotion categorization studies in the context of what is known about older, verbal children. Categorization studies with verbal children largely support the constructionist “broad-to-narrow” hypothesis. Specifically, many studies have found that preschoolers have superordinate, valence-based emotion categories (e.g., negative valence) that slowly differentiate into basic-level categories (e.g., anger, disgust) over the first decade of life (for a review, see Widen, 2013). Preschool emotion categorization is often assessed through behavioral sorting tasks or verbal tasks that require a child to label a facial expression (Widen & Russell, 2004, 2008, 2010). Thus, infant categorization tasks and preschool categorization tasks may place different demands on children’s cognitive abilities (Mareschal & Quinn, 2001; Westermann & Mareschal, 2012). In other words, while preverbal infants and verbal children likely use both perceptual cues and conceptual knowledge in categorization tasks (Madole & Oakes, 1999), these sources of information may be recruited to different extents in different tasks.

When viewed through this lens, it is possible that infants in the current studies failed to form a superordinate category of negative emotions because of differences in “perceptual” facial features. In Experiment 1, anger, sad, and disgust expressions may not have been sufficiently perceptually similar to compel category formation. On the other hand, in Experiment 3, when the facial expressions were more perceptually similar (i.e., none of them displayed teeth), infants appeared to group all of the expressions into a single, undifferentiated category (i.e., there were no looking time differences across the four test events). In Experiments 2 and 4, the addition of verbal labels to the habituation trials may have facilitated such superordinate category formation by drawing infants’ attention away from isolated perceptual features of the expressions (Plunkett, Hu, & Cohen, 2008; Westermann & Mareschal, 2013). This is not to say that infants have no “conceptual” understanding of these facial expressions, but rather that this knowledge may not have been recruited in this specific habituation paradigm when verbal labels were not included. In contrast, labeling and sorting tasks, as used with preschoolers, may compel children to recruit higher-level conceptual and linguistic skills (Ruba et al., 2017).

Ultimately, this explanation could account for why infants show a “narrow-to-broad” pattern for emotion category development in habituation tasks, while preschoolers show a “broad-to-narrow” pattern in sorting and labeling tasks. It is important to note that perceptual similarities have some correspondence to superordinate category membership (i.e., a cow looks more similar to a dog than to a plane) (Westermann & Mareschal, 2012, 2013), and some researchers have argued that a “perceptual” versus “conceptual” category distinction is ultimately ambiguous and unhelpful (Madole & Oakes, 1999). Based on theory and data available to date, we favor the view that, regardless of age, children draw on both perceptual and conceptual information in emotion categorization tasks, albeit to different extents depending on the task. At a more methodological level, these issues are important to consider if researchers wish to conduct longitudinal studies comparing a child’s performance across emotion categorization tasks in infancy and early childhood.

Future Directions

The current studies are only a first step toward documenting the influence of language on infants’ emotion category formation. More work is needed to fully understand how emotion perception develops in infancy. Importantly, it is unclear whether infants in these studies formed a category of negative valence that was distinct from a category of “positive valence.” Since only one positive facial expression (i.e., happiness) was included in the test trials, it is possible that infants were responding to specific features of the happy expression, rather than to a category of positive valence per se. The current findings also cannot speak to the detailed mechanism by which verbal labels facilitated categorization. For example, future research is needed to determine whether and how verbal labels impact the visual processing of the facial expressions (Althaus & Plunkett, 2016). As previously mentioned, additional methods such as eye-tracking and EEG have the potential to help unpack how labels facilitate categorization (Westermann & Mareschal, 2013).

Finally, more research is needed to confirm whether emotion categorization follows a “narrow-to-broad” pattern of differentiation that is distinct from the “broad-to-narrow” pattern of object categorization. Studies with additional ages and facial expressions may determine: (a) whether there are any circumstances in which infants will form a superordinate category of negative valence without verbal labels, and (b) if so, whether labels can facilitate the division of this superordinate category. It is possible that factors such as age, language ability, parental emotional expressiveness, and/or culture could be related to whether or not an infant forms these categories. In addition, it is possible that infants form a superordinate category when habituated to facial expressions that are more perceptually similar (e.g., anger and disgust) (Widen & Russell, 2013). Ultimately, further research along these lines could provide some support for the “theory of constructed emotion” and may provide a level of reconciliation between “classical” and “constructionist” emotion theories. Such work could also shed light on how emotion perception emerges and transforms over the course of development, contributing to a more comprehensive model of developmental affective science.

Acknowledgments

Funding Statement: This research was supported by grants from the National Institute of Mental Health (T32MH018931 to ALR), the University of Washington Royalty Research Fund (65-4662 to BMR), and the University of Washington Institute for Learning & Brain Sciences Ready Mind Project (to ANM).

Footnotes

Footnote 1: It is important to note that other developmentally oriented constructionist theories also exist (e.g., dynamic systems theory). However, these theories tend to focus on infant emotion expression rather than perception (Camras, 2011; Fogel et al., 1992).

Footnote 2: In a pilot study with “toothy” facial expressions (selected from the NimStim Set of Facial Expressions), half of the sample did not meet the habituation criteria. Thus, “non-toothy” facial expressions were used for Experiments 3 and 4.

References

- Althaus N, & Mareschal D (2014). Labels direct infants’ attention to commonalities during novel category learning. PLoS ONE, 9(7). 10.1371/journal.pone.0099670

- Althaus N, & Plunkett K (2016). Categorization in infancy: labeling induces a persisting focus on commonalities. Developmental Science, 19(5), 770–780. 10.1111/desc.12358

- Amso D, Fitzgerald M, Davidow J, Gilhooly T, & Tottenham N (2010). Visual exploration strategies and the development of infants’ facial emotion discrimination. Frontiers in Psychology, 1(180), 1–7. 10.3389/fpsyg.2010.00180

- Balaban MT, & Waxman SR (1997). Do words facilitate object categorization in 9-month-old infants? Journal of Experimental Child Psychology, 64(1), 3–26. 10.1006/jecp.1996.2332

- Barrett LF (2017). How emotions are made: The secret life of the brain. Boston: Houghton Mifflin Harcourt.

- Barrett LF, Adolphs R, Marsella S, Martinez AM, & Pollak SD (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20(1), 1–68. 10.1177/1529100619832930

- Barrett LF, Lindquist KA, & Gendron M (2007). Language as context for the perception of emotion. Trends in Cognitive Sciences, 11(8), 327–332. 10.1016/j.tics.2007.06.003

- Bayet L, Quinn PC, Tanaka JW, Lee K, Gentaz É, & Pascalis O (2015). Face gender influences the looking preference for smiling expressions in 3.5-month-old human infants. PLoS ONE, 10(6), 6–13. 10.1371/journal.pone.0129812

- Booth AE, & Waxman SR (2002). Object names and object functions serve as cues to categories for infants. Developmental Psychology, 38(6), 948–957. 10.1037/0012-1649.38.6.948

- Bornstein MH, & Arterberry ME (2003). Recognition, discrimination and categorization of smiling by 5-month-old infants. Developmental Science, 6(5), 585–599. 10.1111/1467-7687.00314

- Camras LA (2011). Differentiation, dynamical integration and functional emotional development. Emotion Review, 3(2), 138–146. 10.1177/1754073910387944

- Caron RF, Caron AJ, & Myers RS (1985). Do infants see emotional expressions in static faces? Child Development, 56(6), 1552–1560. 10.1111/j.1467-8624.1985.tb00220.x

- Casasola M (2005). Can language do the driving? The effect of linguistic input on infants’ categorization of support spatial relations. Developmental Psychology, 41(1), 183–192. 10.1037/0012-1649.41.1.183

- Casasola M, & Bhagwat J (2007). Do novel words facilitate 18-month-olds’ spatial categorization? Child Development, 78(6), 1818–1829. 10.1111/j.1467-8624.2007.01100.x

- Casasola M, & Cohen LB (2002). Infant categorization of containment, support and tight-fit spatial relationships. Developmental Science, 5(2), 247–264. 10.1111/1467-7687.00226

- Cohen LB, & Cashon CH (2001). Do 7-month-old infants process independent features or facial configurations? Infant and Child Development, 10(1–2), 83–92. 10.1002/icd.250

- Cong Y-Q, Junge C, Aktar E, Raijmakers MEJ, Franklin A, & Sauter DA (2019). Pre-verbal infants perceive emotional facial expressions categorically. Cognition and Emotion, 33(3), 391–403. 10.1080/02699931.2018.1455640

- Croucher CJ, Calder AJ, Ramponi C, Barnard PJ, & Murphy FC (2011). Disgust enhances the recollection of negative emotional images. PLoS ONE, 6(11), e26571. 10.1371/journal.pone.0026571

- Ekman P (1994). Strong evidence for universals in facial expressions: A reply to Russell’s mistaken critique. Psychological Bulletin, 115(2), 268–287. 10.1037/0033-2909.115.2.268

- Fenson L, Marchman VA, Thal DJ, Dale PS, Reznick JS, & Bates E (2007). MacArthur-Bates communicative development inventories. Retrieved from https://www.uh.edu/class/psychology/dcbn/research/cognitive-development/_docs/mcdigestures.pdf

- Ferry AL, Hespos SJ, & Waxman SR (2010). Categorization in 3- and 4-month-old infants: An advantage of words over tones. Child Development, 81(2), 472–479. 10.1111/j.1467-8624.2009.01408.x

- Ferry AL, Hespos SJ, & Waxman SR (2013). Nonhuman primate vocalizations support categorization in very young human infants. Proceedings of the National Academy of Sciences, 110(38), 15231–15235. 10.1073/pnas.1221166110

- Flom R, & Bahrick LE (2007). The development of infant discrimination of affect in multimodal and unimodal stimulation: The role of intersensory redundancy. Developmental Psychology, 43(1), 238–252. 10.1037/0012-1649.43.1.238

- Fogel A, Nwokah E, Dedo JY, Messinger D, Dickson KL, Matusov E, & Holt SA (1992). Social process theory of emotion: A dynamic systems approach. Social Development, 1(2), 122–142. 10.1111/j.1467-9507.1992.tb00116.x

- Fugate JMB (2013). Categorical perception for emotional faces. Emotion Review, 5(1), 84–89. 10.1177/1754073912451350

- Fugate JMB, Gouzoules H, & Barrett LF (2010). Reading chimpanzee faces: Evidence for the role of verbal labels in categorical perception of emotion. Emotion, 10(4), 544–554. 10.1037/a0019017

- Fulkerson AL, & Waxman SR (2007). Words (but not tones) facilitate object categorization: Evidence from 6- and 12-month-olds. Cognition, 105(1), 218–228. 10.1016/j.cognition.2006.09.005

- Gendron M, Lindquist KA, Barsalou L, & Barrett LF (2012). Emotion words shape emotion percepts. Emotion, 12(2), 314–325. 10.1037/a0026007

- Grinspan D, Hemphill A, & Nowicki S (2003). Improving the ability of elementary school-age children to identify emotion in facial expression. The Journal of Genetic Psychology, 164(1), 88–100. 10.1080/00221320309597505