Abstract

Monitoring breathing is important for a plethora of applications including, but not limited to, baby monitoring, sleep monitoring, and elderly care. This paper presents a way to fuse vision-based and RF-based modalities for the task of estimating the breathing rate of a human. The modalities used are the Intel® RealSense™ F200 RGB and depth (RGBD) sensor and an ultra-wideband (UWB) radar. RGB image-based features and their corresponding image coordinates are detected on the human body and tracked using the well-known optical flow algorithm of Lucas and Kanade. The depth at these coordinates is also tracked. The received signal of the synchronized radar is processed to extract the breathing pattern. All of these signals are then passed to a harmonic signal detector based on a generalized likelihood ratio test. Finally, a spectral estimation algorithm based on the reformed Pisarenko algorithm tracks the fundamental breathing frequencies in real time, and these are fused into one optimal breathing rate in a maximum likelihood fashion. We tested this multimodal setup on 14 human subjects and report a maximum error of BPM compared to the true breathing rate.

Keywords: vital signs, IR-UWB radar, micro-Doppler, optical flow, spectral estimation

1. Introduction

Vital signs extraction has been a research topic in both the computer vision and the radar research communities. Computer vision-based algorithms tackle this problem from two different angles. The first is color-based algorithms [1,2,3,4], which capture the minute color variations of the human skin during a heartbeat [5]. Color-based algorithms are primarily used for heartbeat estimation, which is outside the scope of this paper. The second is known as motion magnification [6], which magnifies minute movements in a video. It is used mainly for heartbeat estimation but can also be used for breathing rate estimation.

Intel's RealSense camera was used in [7] to estimate the heart rate of a human subject: the infrared (IR) channel was used for heart rate estimation, and the depth channel was used to estimate the pose of the head. The use of optical flow for breathing estimation was presented in [8]; however, neither the detailed algorithm nor its performance was reported. Finally, in [9], we described in detail an algorithm that reliably extracts breathing from an RGB video alone in real time.

Recently, monitoring biological signals using micro-Doppler has become an active research topic. Respiration rate extraction with a pulse-Doppler architecture is presented in [10]. The wavelet transform was used in [11] to overcome the limited resolution of the discrete Fourier transform (DFT), and in [12], the chirp Z-transform was applied to IR-UWB radar echoes to extract the respiration rate. The same transform was used in [13], coupled with an analytical model, for the remote extraction of both respiration and heart rate. Moreover, the authors of [13] verified the validity of a model in which the thorax and the heart are considered vibrating scatterers, such that the total micro-Doppler return is a superposition of two sinusoids with different frequencies and amplitudes; the breathing frequency is lower than the heart rate, and its amplitude is much larger.

In this paper, we extend the results reported in [9] and show that breathing information also lies in the depth and radar signals. Combined with the available RGB information, these signals improve the accuracy reported in [9] more than twofold. We use optical flow tracking coupled with a sinusoidal detector to determine whether each optical flow track is sinusoid-like, exactly as presented in [9]. The change over time of each tracked point-of-interest coordinate is treated as a separate signal. In addition, the depth values at these coordinates of interest are extracted from the depth channel. All of these signals, together with the analyzed radar return, are then fed into a maximum likelihood estimator to produce one optimal breathing rate. Section 2 describes the radar setup, and Section 3 outlines the algorithm. The method is detailed in Section 4, followed by the experiments and results in Section 5. We conclude the paper in Section 6.

2. Radar Measurement Setup

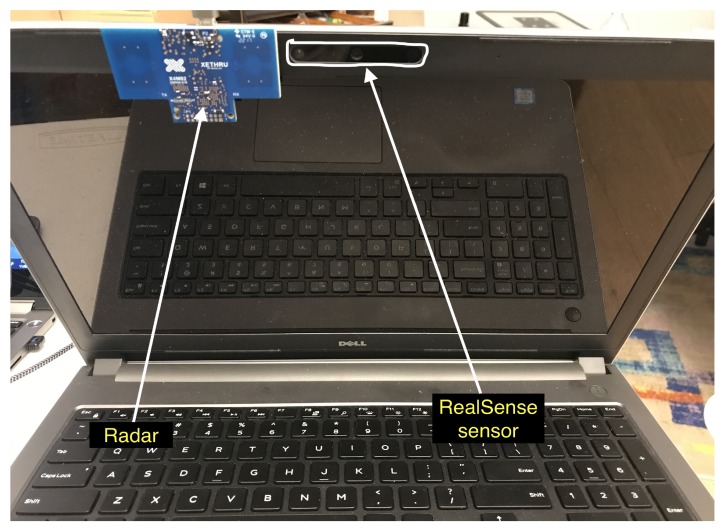

The radar measurement setup is shown in Figure 1 and Figure 2. We use an XeThru X4 IR-UWB impulse radar module that transmits toward a human subject (hereinafter "subject"). The raw data are collected on the PC through a USB interface and fed into the algorithm, which runs in real time. The radar is synchronized with the Intel® RealSense™ F200 RGBD sensor. The radar operating parameters are given in Table 1. The cost of this single setup is approximately USD 400; it can be reduced substantially through dedicated system design and volume discounts.

Figure 1.

XeThru X4 radar mounted on a laptop with the RealSense sensor on it.

Figure 2.

The subject under test sits on this chair in front of the radar and the RealSense sensor.

Table 1.

Radar parameters.

| Parameter | Value | Units | Comment |
|---|---|---|---|
| Pulse repetition frequency | 40.5 | MHz | |
| Center frequency | 7.29 | GHz | |
| Bandwidth | 1.5 | GHz | |
| Peak pulse power | −0.7 | dBm | |
| Frame rate | ∼10 | Hz | ∼70 dB processing gain |
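As a quick sanity check on Table 1, the textbook pulse-radar relations below turn the listed bandwidth, pulse repetition frequency, and frame rate into a nominal range resolution, unambiguous range, and slow-time Nyquist frequency. This is a minimal sketch using idealized formulas (c/2B, c/2PRF, and half the frame rate), not figures from the XeThru X4 datasheet; the function names are hypothetical. With the Table 1 values it gives roughly 10 cm, 3.7 m, and 5 Hz (300 BPM).

```python
# Back-of-the-envelope figures derived from the Table 1 parameters.
# Idealized textbook pulse-radar relations, not measured or datasheet values.
C = 299_792_458.0  # speed of light [m/s]


def range_resolution(bandwidth_hz: float) -> float:
    """Nominal range resolution of a pulse radar: c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def unambiguous_range(prf_hz: float) -> float:
    """Maximum unambiguous range for a pulse repetition frequency: c / (2 PRF)."""
    return C / (2.0 * prf_hz)


def slow_time_nyquist(frame_rate_hz: float) -> float:
    """Highest slow-time (Doppler) frequency representable at a given frame rate."""
    return frame_rate_hz / 2.0


if __name__ == "__main__":
    print(f"range resolution  : {range_resolution(1.5e9) * 100:.1f} cm")
    print(f"unambiguous range : {unambiguous_range(40.5e6):.2f} m")
    nyq = slow_time_nyquist(10.0)
    print(f"slow-time Nyquist : {nyq:.1f} Hz = {nyq * 60:.0f} BPM")
```

The slow-time Nyquist value is the one that matters most for breathing: at about 10 frames per second, any chest motion faster than 5 Hz would alias, which is far above any plausible breathing rate.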

3. Algorithm Outline

For brevity, we only repeat the main ideas of the algorithm here; the reader is referred to [9] for a more detailed description. The first component of the system is the Intel® RealSense™ F200 sensor, which captures image frames at a resolution of pixels and at a rate of 10 frames per second (FPS). Frame by frame, we extract N feature points using the Shi-Tomasi algorithm [14]. Next, optical flow tracking [15] is applied, and the last three x, y, and depth coordinates are saved for each feature point; the depth value at the tracked x, y coordinates is read from the sensor's depth channel. For each feature point, the last three x, y, and depth values are used to estimate a fundamental frequency, producing a vector of estimated fundamental frequencies. The estimation is computed iteratively, based only on the last three samples, using the reformed Pisarenko harmonic decomposition (RPHD) algorithm [16]. Next, a sine detector based on a generalized likelihood ratio test (GLRT) is executed on each signal to determine which signals follow a sinusoidal pattern, and the signals that pass this test are kept. In parallel, a separate breathing rate is estimated from the synchronized radar signal, as described in Section 4.6. Finally, all of the resulting breathing rate estimates are fused in a maximum likelihood (ML) fashion, producing one optimal breathing rate estimate.

4. Method

4.1. Model

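We assume that breathing induces a sinusoidal motion along each axis, namely, the x-axis, the y-axis, and the depth axis. Therefore, denoting by $M$ the number of signals acquired from the RGBD sensor, we can write

$$x_i[k] = A_i \cos(\omega_0 k + \phi_i) + w_i[k], \quad k = 0, \dots, K_i - 1, \quad i = 1, \dots, M \tag{1}$$

where $\omega_0$ is the breathing angular frequency in rad/sample, $A_i$ and $\phi_i$ are the unknown amplitude and phase, $x_i[k]$ is the k-th sample observed on a certain axis at the i-th feature of interest, and $K_i$ is the number of samples observed at the i-th feature of interest. The noise $w_i[k]$ is assumed to be i.i.d. in space and time.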

4.2. Acquiring Time Domain Signals from RGBD

The body moves because of breathing, and the camera projects this 3D movement onto the image plane. Our goal is to acquire and track enough moving points on the image plane, observe the change in their coordinates and depth over time, and estimate the motion's fundamental frequencies in real time. This yields a plurality of breathing rate estimates, which are fused in an optimal manner to obtain a single estimate of the breathing frequency.

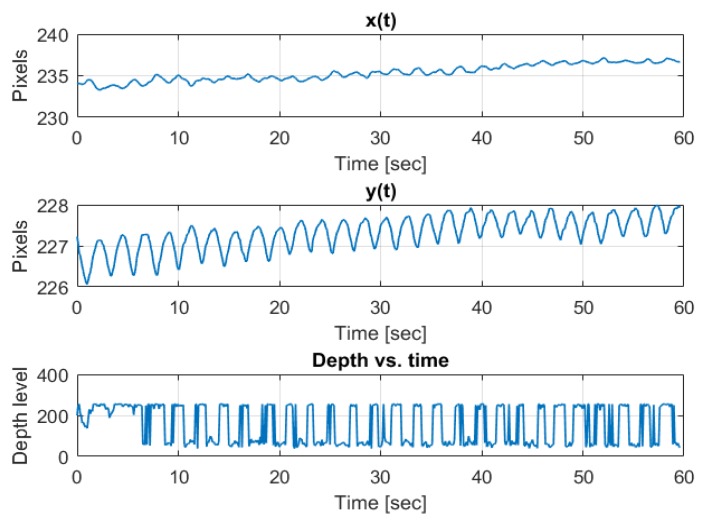

Signal acquisition and tracking are performed using the Shi-Tomasi and Lucas-Kanade algorithms [14,15], respectively.
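The two building blocks named above can be illustrated with a minimal NumPy sketch: the Shi-Tomasi score is the smaller eigenvalue of the 2×2 structure tensor in a window, and a single Lucas-Kanade step solves the linearized brightness-constancy equations for that window in the least-squares sense. This is a simplified single-scale, single-iteration illustration with hypothetical function names and window size, and it is not claimed to be the implementation used in [9]; in practice, OpenCV's `cv2.goodFeaturesToTrack` and `cv2.calcOpticalFlowPyrLK` provide production implementations of these two algorithms.

```python
import numpy as np


def _patch(arr, cx, cy, half):
    """Flattened (2*half+1)^2 window of arr centred on column cx, row cy."""
    return arr[cy - half:cy + half + 1, cx - half:cx + half + 1].ravel()


def shi_tomasi_score(img, cx, cy, half=7):
    """Smaller eigenvalue of the 2x2 structure tensor in the window (Shi-Tomasi score)."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))   # d/drow, d/dcol
    ix, iy = _patch(gx, cx, cy, half), _patch(gy, cx, cy, half)
    G = np.array([[ix @ ix, ix @ iy],
                  [ix @ iy, iy @ iy]])
    return float(np.linalg.eigvalsh(G)[0])                # eigvalsh sorts ascending


def lucas_kanade_step(prev, curr, cx, cy, half=7):
    """One least-squares Lucas-Kanade step: displacement (dx, dy) of the window at (cx, cy)."""
    prev = np.asarray(prev, dtype=float)
    curr = np.asarray(curr, dtype=float)
    gy, gx = np.gradient(prev)
    A = np.stack([_patch(gx, cx, cy, half), _patch(gy, cx, cy, half)], axis=1)
    it = _patch(curr - prev, cx, cy, half)                # temporal difference I_t
    # Linearized brightness constancy: Ix*dx + Iy*dy + It = 0 for every pixel in the window.
    d, *_ = np.linalg.lstsq(A, -it, rcond=None)
    return float(d[0]), float(d[1])
```

For example, shifting a smooth Gaussian blob by (0.3, −0.2) pixels yields an estimate close to that displacement, while a straight edge scores near zero because its structure tensor has only one nonzero eigenvalue (the aperture problem), which is why such points are poor candidates for tracking.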

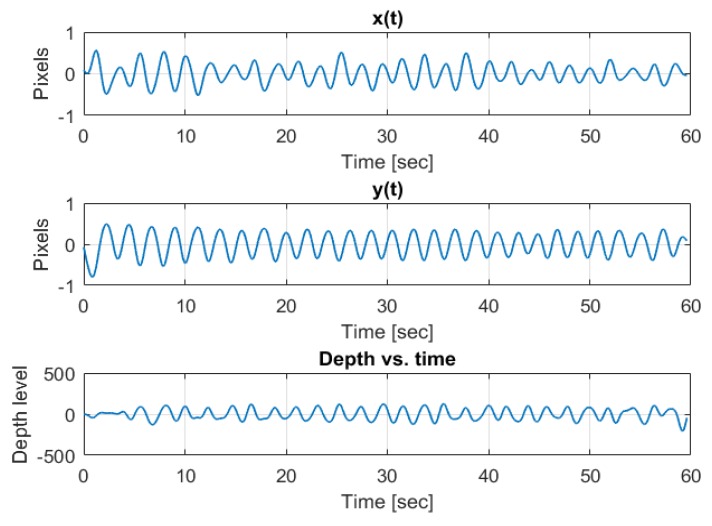

Figure 3 depicts an example of the coordinate change over time of an arbitrary tracked feature point while the author was breathing in front of the camera. The sinusoidal coordinate change rides on a trend line caused by small shifts in body position as well as small biases in the optical flow tracking algorithm. The depth signal rides on a DC term and is strongly square-wave-like owing to the coarse depth quantization. Figure 4 shows the same signals after band-pass filtering with cutoff frequencies at the edges of the respiration band.

Figure 3.

Example of a three-axis (x, y, depth) breathing pattern before band-pass filtering.

Figure 4.

Example of a three-axis (x, y, depth) breathing pattern after band-pass filtering.
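The band-pass filtering behind Figure 4 can be approximated with a zero-phase "brick-wall" FFT mask. This is a minimal sketch, not the filter used to produce the figure; the respiration band of 0.1 to 1.0 Hz (6 to 60 BPM) in the example is an assumption, since the text does not state the cutoff frequencies, and the function names are hypothetical.

```python
import numpy as np


def fft_bandpass(x, fs, f_lo, f_hi):
    """Zero-phase 'brick-wall' band-pass: zero every FFT bin outside [f_lo, f_hi] Hz."""
    x = np.asarray(x, dtype=float)
    X = np.fft.rfft(x)
    f = np.fft.rfftfreq(x.size, d=1.0 / fs)
    X[(f < f_lo) | (f > f_hi)] = 0.0
    return np.fft.irfft(X, n=x.size)


def dominant_frequency(x, fs):
    """Frequency in Hz of the largest non-DC FFT bin."""
    X = np.abs(np.fft.rfft(x))
    X[0] = 0.0
    return float(np.fft.rfftfreq(len(x), d=1.0 / fs)[np.argmax(X)])


# Example: 60 s at 10 FPS with a DC offset, a linear drift, and a 0.25 Hz breathing component.
fs = 10.0
t = np.arange(600) / fs
y = 100.0 + 0.1 * t + np.cos(2 * np.pi * 0.25 * t)
y_bp = fft_bandpass(y, fs, f_lo=0.1, f_hi=1.0)   # assumed respiration band (6-60 BPM)
```

The mask removes the DC term and most of the drift, leaving the 0.25 Hz (15 BPM) component as the dominant spectral peak. A brick-wall mask rings around sharp transients; a low-order Butterworth design applied forward and backward would be a common alternative.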

Therefore, as depicted in Figure 3, the observed signal can be written as

$$y_i[k] = \alpha_i + \beta_i k + x_i[k] \tag{2}$$

where $\alpha_i$ and $\beta_i$ are the trend parameters for the i-th signal and $x_i[k]$ follows the model in (1).

Thus, we estimate these two trend parameters for each of the signals and then subtract the trend from the observed signal, obtaining the desired model as in (1).

4.3. Estimating the Trend

We use the recursive least squares (RLS) algorithm [17] (pp. 566–571) to estimate the trend in real time, taking only the last three samples, namely, $k$, $k-1$, and $k-2$, into account. This algorithm is executed on all signals. For an in-depth breakdown of the algorithm, the reader is referred to [9].

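Next, we detrend the signals by subtracting the estimated trend from $y_i[k]$ to get

$$\tilde{x}_i[k] = y_i[k] - \hat{\alpha}_i - \hat{\beta}_i k = A_i \cos(\omega_0 k + \phi_i) + \tilde{w}_i[k] \tag{3}$$

where $\tilde{w}_i[k]$ is white noise with variance $\tilde{\sigma}_i^2$ representing the estimation error.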
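A minimal sketch of exponentially weighted RLS for a linear trend (offset plus slope) is shown below. It assumes a forgetting factor in place of a strict three-sample window; each update uses only the newest sample plus the running state. The class name, the forgetting factor of 0.995, and the initialization constant are hypothetical choices, and [9] and [17] remain the references for the actual algorithm.

```python
import numpy as np


class RLSTrend:
    """Exponentially weighted RLS fit of a linear trend y[k] ~ offset + slope * k.

    Hypothetical helper: each update uses only the newest sample plus the
    running state (theta, P), so memory does not grow with the record length.
    """

    def __init__(self, lam: float = 0.995, delta: float = 1e6):
        self.lam = lam                    # forgetting factor (assumed value)
        self.theta = np.zeros(2)          # [offset, slope]
        self.P = delta * np.eye(2)        # inverse correlation matrix, large initial value
        self.k = 0                        # sample index

    def update(self, y: float) -> float:
        """Ingest y[k]; return the detrended sample y[k] - (offset + slope * k)."""
        phi = np.array([1.0, float(self.k)])      # regressor [1, k]
        P_phi = self.P @ phi
        gain = P_phi / (self.lam + phi @ P_phi)   # RLS gain vector
        err = y - phi @ self.theta                # a priori prediction error
        self.theta = self.theta + gain * err
        self.P = (self.P - np.outer(gain, P_phi)) / self.lam
        self.k += 1
        return float(y - phi @ self.theta)        # a posteriori detrended value
```

Fed a noiseless ramp 2 + 0.5k, the estimates converge to an offset of 2 and a slope of 0.5 within a few samples, and the detrended output approaches zero. A forgetting factor close to 1 gives a long memory, so that the line fit does not chase the breathing oscillation itself.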

4.4. Estimating the Fundamental Frequency

We use the RPHD algorithm [16] to iteratively estimate the fundamental frequency.

Fed with only the last three samples, the algorithm iteratively estimates the fundamental frequency of a sinusoid and is asymptotically efficient, as shown in [16].

The algorithm starts by "waiting" for the first three frames to arrive and then refines the estimate iteratively with each new sample.
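The following minimal sketch illustrates how a single-tone frequency estimate can be refreshed using only the last three samples. Running sums of $x[k]x[k-1]$ and $x[k]x[k-2]$ give the lag-1 and lag-2 autocorrelations $r(1)$ and $r(2)$, and the closed-form single-tone Pisarenko solution $\cos\hat{\omega} = \left(r(2) + \sqrt{r(2)^2 + 8r(1)^2}\right)/(4r(1))$ follows from the minimum-eigenvector condition of the 3×3 autocorrelation matrix. This form is assumed here to coincide with the RPHD estimator of [16]; the exact estimator should be checked against that reference, and the class name is hypothetical.

```python
import math
from typing import Optional


class RecursivePHD:
    """Single-tone frequency tracker from lag-1/lag-2 autocorrelations (hypothetical helper).

    Each update touches only x[k], x[k-1], x[k-2] and two running sums.
    """

    def __init__(self) -> None:
        self.x1: Optional[float] = None   # x[k-1]
        self.x2: Optional[float] = None   # x[k-2]
        self.s1 = 0.0                     # running sum of x[k] * x[k-1]
        self.s2 = 0.0                     # running sum of x[k] * x[k-2]
        self.n1 = 0
        self.n2 = 0

    def update(self, x: float) -> Optional[float]:
        """Ingest one sample; return the angular frequency in rad/sample, or None before 3 samples."""
        if self.x1 is not None:
            self.s1 += x * self.x1
            self.n1 += 1
        if self.x2 is not None:
            self.s2 += x * self.x2
            self.n2 += 1
        self.x2, self.x1 = self.x1, x     # shift the three-sample delay line
        return self.estimate()

    def estimate(self) -> Optional[float]:
        if self.n2 == 0:
            return None                   # still "waiting" for the first three samples
        r1 = self.s1 / self.n1            # lag-1 autocorrelation estimate
        r2 = self.s2 / self.n2            # lag-2 autocorrelation estimate
        if r1 == 0.0:
            return math.pi / 2            # cos(omega) = 0
        c = (r2 + math.sqrt(r2 * r2 + 8.0 * r1 * r1)) / (4.0 * r1)
        return math.acos(max(-1.0, min(1.0, c)))  # clip against noise-induced |c| > 1
```

With a noiseless 0.25 Hz cosine sampled at 10 FPS, the estimate (converted to hertz with f = ω·fs/2π) settles near 0.25 Hz. The estimate is undefined until three samples have arrived, mirroring the "waiting" step described above.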

This estimator’s variance can be shown to be [9,16]

| (4) |

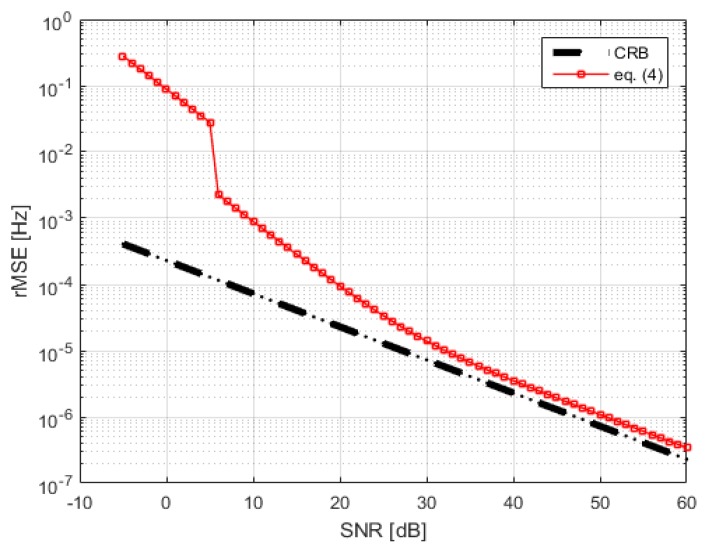

As seen in (4), the estimator's variance is a function of the number of samples and the SNR. Therefore, we need to estimate the SNR, which is done by computing the spectral power outside the respiration frequency band. Figure 5 compares the estimator's variance with the Cramér-Rao bound (CRB). This variance calculation is used in the ML fusion step.

Figure 5.

CRB and Equation (4) vs. SNR for K = 1000.
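For a real sinusoid of unknown amplitude, frequency, and phase in white Gaussian noise, the CRB on the angular frequency is $12/(\eta K(K^2-1))$ with $\eta = A^2/(2\sigma^2)$ [19]. The sketch below evaluates this bound and estimates the SNR by treating the mean periodogram level outside the respiration band as the noise variance, under a white-noise assumption. Equation (4) itself is not reproduced here; the band edges in the example and the function names are assumptions for illustration.

```python
import numpy as np


def crb_angular_frequency(snr, K):
    """CRB on var(omega_hat) in (rad/sample)^2 for a real sinusoid in white Gaussian noise.

    snr is the linear SNR eta = A^2 / (2 sigma^2); K is the number of samples.
    """
    return 12.0 / (snr * K * (K ** 2 - 1))


def estimate_snr(x, fs, f_lo, f_hi):
    """Rough linear SNR estimate (hypothetical helper).

    The mean periodogram level outside [f_lo, f_hi] Hz is taken as the noise
    variance (white-noise assumption); the remaining variance is signal power.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    K = x.size
    I = np.abs(np.fft.rfft(x)) ** 2 / K          # periodogram, ~sigma^2 per bin for white noise
    f = np.fft.rfftfreq(K, d=1.0 / fs)
    out_of_band = ((f < f_lo) | (f > f_hi)) & (f > 0)
    noise_var = float(I[out_of_band].mean())
    signal_power = max(float(x.var()) - noise_var, 0.0)
    return signal_power / noise_var
```

For a unit-amplitude 0.25 Hz sinusoid in noise with standard deviation 0.5 (true SNR of 2), sampled at 10 FPS for 200 s with a 0.1 to 1.0 Hz band, the estimate lands close to 2; the CRB then falls inversely with the SNR and roughly with the cube of the number of samples.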

4.5. Generalized Likelihood Ratio Test (GLRT)

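All estimated fundamental frequencies that fall outside the respiration band are immediately discarded. The surviving signals are tested for oscillation, in other words, for whether they follow the model given in (3). Thus, we consider the following two hypotheses:

$$\begin{aligned} \mathcal{H}_0 &: \tilde{x}_i[k] = \tilde{w}_i[k] \\ \mathcal{H}_1 &: \tilde{x}_i[k] = A_i \cos(\omega_0 k + \phi_i) + \tilde{w}_i[k] \end{aligned} \tag{5}$$

with unknown amplitude $A_i$ and phase $\phi_i$. This problem is solved by applying a quadrature matched filter to each signal [18] (pp. 262–268):

$$T_i = I_i(\hat{\omega}_i) \;\underset{\mathcal{H}_0}{\overset{\mathcal{H}_1}{\gtrless}}\; \gamma_i \tag{6}$$

where $I_i(\omega)$ is the well-known periodogram, $\hat{\omega}_i$ is the estimated fundamental frequency of the i-th signal, and $\gamma_i$ is the test threshold. $I_i(\omega)$ is given by

$$I_i(\omega) = \frac{1}{K_i} \left| \sum_{k=0}^{K_i-1} \tilde{x}_i[k]\, e^{-j\omega k} \right|^2 \tag{7}$$

The per-signal threshold $\gamma_i$ is derived by fixing a constant probability of false alarm $P_{FA}$ and applying the Neyman-Pearson theorem, and is given by [18] (Equation (7.26))

$$\gamma_i = \tilde{\sigma}_i^2 \ln\frac{1}{P_{FA}} \tag{8}$$

The periodogram and its threshold are updated iteratively with each incoming sample. Signals that do not exceed the threshold are discarded, and the estimated fundamental frequencies of the remaining signals are fused as presented in Section 4.7.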
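A minimal NumPy sketch of the per-signal test follows: the periodogram, normalized by the number of samples, is evaluated at the estimated frequency and compared with $\sigma^2\ln(1/P_{FA})$, which is the false-alarm threshold of the quadrature matched filter for white Gaussian noise of known variance $\sigma^2$. Here the noise variance is passed in as an argument (in practice it would come from the out-of-band estimate described in Section 4.4), and the function names and the default $P_{FA} = 10^{-6}$ are hypothetical.

```python
import numpy as np


def periodogram_at(x, omega):
    """I(omega) = (1/K) |sum_k x[k] exp(-j omega k)|^2, omega in rad/sample."""
    x = np.asarray(x, dtype=float)
    k = np.arange(x.size)
    return float(np.abs(np.sum(x * np.exp(-1j * omega * k))) ** 2 / x.size)


def sine_detected(x, omega_hat, noise_var, p_fa=1e-6):
    """Quadrature-matched-filter test at the estimated frequency (hypothetical helper).

    Declares 'sinusoid present' when I(omega_hat) exceeds noise_var * ln(1/p_fa),
    the threshold giving false-alarm probability p_fa for white Gaussian noise.
    """
    gamma = noise_var * np.log(1.0 / p_fa)
    return bool(periodogram_at(x, omega_hat) > gamma)
```

Under the noise-only hypothesis, the normalized periodogram at a fixed frequency away from 0 and π is approximately exponentially distributed with mean $\sigma^2$, so the probability of exceeding $\gamma$ is $e^{-\gamma/\sigma^2}$; solving for $\gamma$ gives the threshold used above.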

4.6. Radar Based Breathing Extraction

We denote by $\mathbf{R}$ the slow-time versus fast-time matrix of size $N_s \times N_g$, where $N_s$ is the number of frames. Each frame is a row of this matrix, and the last row, indexed by k, corresponds to the current frame. Each sample within a frame represents a different fast-time bin, called a range gate, and the number of range gates is denoted by $N_g$. Therefore, there are $N_g$ slow-time signals, corresponding to the columns of this matrix.

All of these slow-time signals are band-pass filtered from to 5 Hz. Next, a Hanning window is applied as a pre-FFT step, and each matrix column is transformed to the Fourier domain by an FFT. The resulting matrix is a range-Doppler map.
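The slow-time processing chain described above can be sketched in NumPy as a band-pass mask along each column, a Hann window along slow time, and an FFT along slow time, producing a Doppler-frequency by range-gate power map. The lower cutoff is not specified in the text, so the 0.1 Hz value in the example is an assumption; with a frame rate of about 10 Hz, the 5 Hz upper cutoff coincides with the slow-time Nyquist frequency, so in this sketch the band-pass effectively reduces to removing content below the lower cutoff. The function name and the FFT-mask filter are illustrative choices.

```python
import numpy as np


def range_doppler_map(R, fs, f_lo, f_hi):
    """Slow-time processing of a (frames x range-gates) matrix (hypothetical helper).

    Each column is band-passed with an FFT mask, Hann-windowed along slow time,
    and transformed with an FFT. Returns (power map of shape (n_freqs, n_gates),
    frequency axis in Hz).
    """
    R = np.asarray(R, dtype=float)
    n_frames = R.shape[0]
    f = np.fft.rfftfreq(n_frames, d=1.0 / fs)
    S = np.fft.rfft(R, axis=0)
    S[(f < f_lo) | (f > f_hi), :] = 0.0               # band-pass every slow-time column
    x = np.fft.irfft(S, n=n_frames, axis=0)           # back to slow time
    win = np.hanning(n_frames)[:, None]               # Hann window along slow time
    RD = np.abs(np.fft.rfft(x * win, axis=0)) ** 2    # range-Doppler power map
    return RD, f
```

In a synthetic 600-frame, 50-gate matrix with static clutter in every gate and a 0.3 Hz chest motion in gate 20, the strongest cell of the map falls at gate 20 and 0.3 Hz, because the static clutter sits at 0 Hz and is removed by the lower cutoff.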

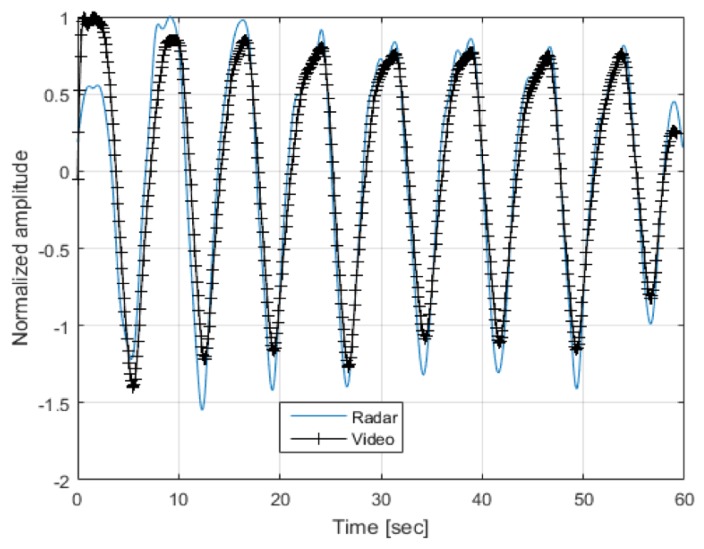

The range-Doppler map is searched for peaks using a constant false alarm rate (CFAR) detector. The largest peak inside the respiration frequency band is declared the breathing frequency and is further validated by finding at least one more harmonic (i.e., twice or three times the breathing frequency, etc.) in the spectrum. This frequency value is then introduced as another input to the fusion algorithm. An example of radar-extracted and vision-extracted breathing signals is depicted in Figure 6; note that the signals are highly correlated.

Figure 6.

Radar and vision extracted breathing signals.
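Cell-averaging CFAR (CA-CFAR) is one common CFAR variant; the text does not state which variant was used, so the sketch below is an assumption. It thresholds each spectral bin against the mean of the surrounding training cells (excluding guard cells), picks the strongest detection inside the respiration band, and accepts it only if another detection appears near twice or three times that frequency. The guard and training sizes, the scale factor, the 0.05 Hz harmonic tolerance, and all names are hypothetical.

```python
import numpy as np


def ca_cfar(power, guard=2, train=8, scale=5.0):
    """Cell-averaging CFAR on a 1-D power spectrum; returns a boolean detection mask."""
    p = np.asarray(power, dtype=float)
    n = p.size
    det = np.zeros(n, dtype=bool)
    for i in range(n):
        # Training cells on each side of the cell under test, skipping the guard cells.
        left = p[max(0, i - guard - train):max(0, i - guard)]
        right = p[min(n, i + guard + 1):min(n, i + guard + train + 1)]
        cells = np.concatenate([left, right])
        if cells.size and p[i] > scale * cells.mean():
            det[i] = True
    return det


def breathing_from_spectrum(power, f, f_lo, f_hi, tol_hz=0.05, **cfar_kw):
    """Strongest in-band CFAR detection, validated by a detection near 2x or 3x its frequency.

    Returns (breathing frequency in Hz or None, harmonic_found).
    """
    power = np.asarray(power, dtype=float)
    det = ca_cfar(power, **cfar_kw)
    band = det & (f >= f_lo) & (f <= f_hi)
    if not band.any():
        return None, False
    idx = np.flatnonzero(band)
    fb = float(f[idx[np.argmax(power[idx])]])
    harmonic_found = any(bool(np.any(det & (np.abs(f - h * fb) <= tol_hz))) for h in (2, 3))
    return fb, harmonic_found
```

For a 60 s slow-time signal sampled at 10 Hz with a 0.3 Hz fundamental and a weaker 0.6 Hz harmonic, the function returns 0.3 Hz with the harmonic check passed. Requiring a harmonic helps reject isolated spectral peaks that do not have the non-sinusoidal shape of real chest motion.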

4.7. Fusing the Estimated Breathing Frequencies from the RGBD Sensor and the Radar

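The vision- and radar-based frequency estimates that survived the tests described above are collectively introduced into the ML fusion algorithm. Each individual estimate $\hat{\omega}_i$, for each signal i, is associated with its own estimation error variance $\sigma_i^2$. These errors are assumed to be zero-mean Gaussian random variables, an assumption that was validated in our experiments. Thus, we can formulate the problem as follows:

$$\hat{\omega}_i = \omega_0 + \epsilon_i, \quad \epsilon_i \sim \mathcal{N}(0, \sigma_i^2), \quad i = 1, \dots, L \tag{9}$$

where $\epsilon_i$ is the estimation error and $\omega_0$ is the true parameter value.

Writing the same in vector-matrix form,

$$\hat{\boldsymbol{\omega}} = \mathbf{1}\,\omega_0 + \boldsymbol{\epsilon}, \quad \boldsymbol{\epsilon} \sim \mathcal{N}(\mathbf{0}, \mathbf{C}) \tag{10}$$

where $\mathbf{1}$ is an $L \times 1$ vector of ones, $\hat{\boldsymbol{\omega}} = [\hat{\omega}_1, \dots, \hat{\omega}_L]^T$, $\boldsymbol{\epsilon} = [\epsilon_1, \dots, \epsilon_L]^T$, and $\mathbf{C} = \mathrm{diag}(\sigma_1^2, \dots, \sigma_L^2)$ is the error covariance matrix (diagonal for independent errors).

The solution is given by [19] (pp. 225–226)

$$\hat{\omega}_0 = \left(\mathbf{1}^T \mathbf{C}^{-1} \mathbf{1}\right)^{-1} \mathbf{1}^T \mathbf{C}^{-1} \hat{\boldsymbol{\omega}} = \frac{\sum_{i=1}^{L} \hat{\omega}_i / \sigma_i^2}{\sum_{i=1}^{L} 1 / \sigma_i^2} \tag{11}$$

where $L$ is the number of estimators that have survived and participate in this final fusion step. This algorithm yields a refined estimate of the fundamental breathing frequency.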
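The ML solution of the linear model above is $(\mathbf{1}^T\mathbf{C}^{-1}\mathbf{1})^{-1}\mathbf{1}^T\mathbf{C}^{-1}\hat{\boldsymbol{\omega}}$, which reduces to inverse-variance weighting when the estimation errors are independent. The minimal sketch below implements both the diagonal special case and the general full-covariance form, and also returns the variance of the fused estimate, $(\mathbf{1}^T\mathbf{C}^{-1}\mathbf{1})^{-1}$. The function names are hypothetical.

```python
import numpy as np


def ml_fuse(estimates, variances):
    """Inverse-variance (ML) fusion of independent Gaussian estimates; returns (estimate, variance)."""
    est = np.asarray(estimates, dtype=float)
    w = 1.0 / np.asarray(variances, dtype=float)       # weights 1 / sigma_i^2
    return float(np.sum(w * est) / np.sum(w)), float(1.0 / np.sum(w))


def ml_fuse_cov(estimates, C):
    """General form (1^T C^-1 1)^-1 1^T C^-1 w_hat for a full (symmetric) error covariance C."""
    est = np.asarray(estimates, dtype=float)
    ones = np.ones_like(est)
    Ci1 = np.linalg.solve(np.asarray(C, dtype=float), ones)   # C^-1 1
    denom = float(ones @ Ci1)                                  # 1^T C^-1 1
    return float(Ci1 @ est) / denom, 1.0 / denom
```

For example, fusing estimates 0.2 and 0.3 with variances 1 and 3 gives (0.2/1 + 0.3/3)/(1 + 1/3) = 0.225, with a fused variance of 0.75, which is smaller than either input variance.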

5. Experiments

The following sections describe the experiments and their results. The first batch of experiments was conducted on three adults and two babies, the same subjects as in [9], for comparison purposes. The second batch of experiments was conducted on nine healthy adults between 20 and 45 years of age. All subjects gave their written consent, and none reported underlying respiratory issues.

5.1. Comparing Obtained Results to Our Previous Work

The algorithm was tested on three adults and two babies (all of whom gave their consent to participate). The true breathing rate was determined manually from the 10 s long video feed. The results are given in Table 2. Ten seconds after the start of an experiment, the maximal error is 0.5 BPM, which is twice as accurate as the framework and hardware we proposed in [9].

Table 2.

Experiments results.

| Subject Gender [m/f] | Subject Age [years] | Maximum Error, Proposed Algorithm [BPM] | Maximum Error, Optimal Algorithm [BPM] |
|---|---|---|---|
| m | 40 | 0.26 | 0.1 |
| m | 50 | 0.3 | 0.12 |
| m | 30 | 0.4 | 0.15 |
| m | 2 | 0.4 | 0.2 |
| f | 0.5 | 0.5 | 0.12 |

Furthermore, the true rate for each subject was compared against the high-complexity ML estimator that solves for all parameters in (2), with the addition of the radar-extracted breathing rate, using the whole 10 s. Because this approach is optimal, it serves as a lower bound on the estimation error. As seen in Table 2, the optimal ML estimator yields a maximal error of 0.2 BPM. Moreover, the largest deviation of the proposed algorithm from the optimal ML is only BPM.

5.2. More Experiments

Nine more subjects were recruited for additional experiments. The subjects were healthy, non-smoking females and males with no underlying respiratory medical conditions. The experiments were divided into two phases. The first phase, involving all nine subjects, was conducted in the setup depicted in Figure 2. Two minutes of breathing were recorded for each subject, and each recording was split into 12 non-overlapping 10 s sections covering the whole two minutes. The mean error over these 12 sections is reported in Table 3.

Table 3.

Experiments results.

| Subject Gender [f/m] | Subject Age [years] | Mean Breathing Rate [BPM] | Mean Error [BPM] | Mean Error [% of Breathing Rate] |
|---|---|---|---|---|
| f | 25 | 14 | 0.27 | 1.92 |
| f | 28 | 13 | 0.33 | 2.53 |
| f | 31 | 16 | 0.29 | 1.81 |
| m | 35 | 16 | 0.29 | 1.81 |
| f | 38 | 14 | 0.39 | 2.78 |
| f | 39 | 14 | 0.31 | 2.21 |
| m | 41 | 15 | 0.40 | 2.66 |
| m | 43 | 13 | 0.39 | 2.92 |
| m | 43 | 17 | 0.41 | 2.41 |

5.3. Extracted Signal Fidelity

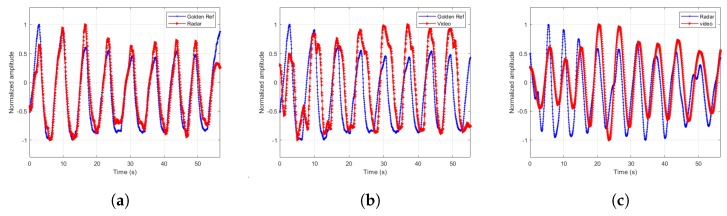

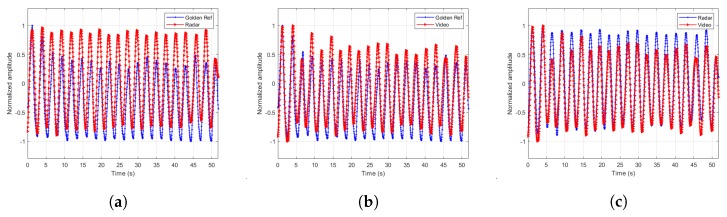

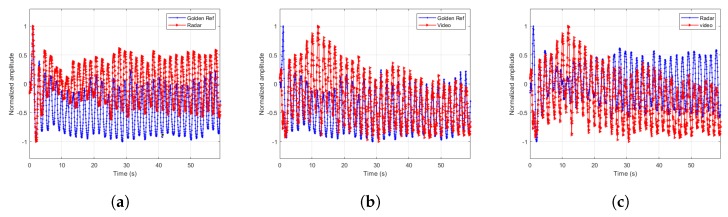

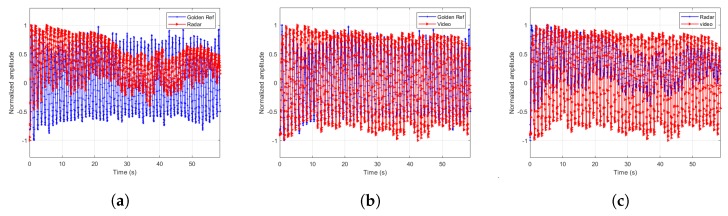

The extracted signals are paramount to the estimator's accuracy. Therefore, we designed a few more experiments to demonstrate the fidelity of the extracted signals. In these experiments, we chose one male subject, aged 43, and asked him to breathe at different rates according to the cadence of a metronome. We recorded the torso movement over time using Neulog's Respiration Monitor Belt logger NUL-236 [20] as the golden reference, or ground truth. In addition to visually confirming the high correlation between the video, radar, and ground truth signals, as depicted in Figure 7, Figure 8, Figure 9 and Figure 10, we slid a 10 s window sample by sample over approximately 60 s of recording. On each 10 s window, we performed a DFT and compared the peak frequency values of the radar, RGB, and depth signals. The accuracy results are reported in Table 4. As can be seen, the fidelity of the extracted signals is very high; the largest mean error is 0.1731 BPM (0.3%), obtained in the scenario in which the subject was breathing extremely fast.

Figure 7.

Extracted signals comparisons for breathing rate of 9.37 BPM. (a) Radar and ground truth signals. (b) Depth and ground truth signals. (c) Radar and depth signals.

Figure 8.

Extracted signals comparisons for breathing rate of 23.43 BPM. (a) Radar and ground truth signals. (b) RGB and ground truth signals. (c) Radar and RGB signals.

Figure 9.

Extracted signals comparisons for breathing rate of 33.98 BPM. (a) Radar and ground truth signals. (b) RGB and ground truth signals. (c) Radar and RGB signals.

Figure 10.

Extracted signals comparisons for breathing rate of 56.25 BPM. (a) Radar and ground truth signals. (b) RGB and ground truth signals. (c) Radar and RGB signals.

Table 4.

Signal fidelity experimental results.

| Breathing Rate (BPM) | Mean Radar Error (BPM) | Mean RGB Error (BPM) | Mean Depth Error (BPM) |
|---|---|---|---|
| 9.37 | 0 | 0 | 0.0020 |
| 23.43 | 0 | 0 | 0.0019 |
| 33.98 | 0.0191 | 0.0191 | 0.0193 |
| 56.25 | 0.1254 | 0.1688 | 0.1731 |
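The fidelity analysis described above can be sketched as a sliding-window spectral peak search: a window is moved one sample at a time, each window is mean-removed, zero-padded, and transformed with an FFT, and the in-band peak is converted to BPM. The zero-padding length of 4096, the 0.1 to 1.5 Hz search band, and the function name are assumptions, since the text does not specify them.

```python
import numpy as np


def sliding_peak_bpm(x, fs, win_s=10.0, nfft=4096, f_lo=0.1, f_hi=1.5):
    """Peak frequency (BPM) of each win_s-second window, sliding one sample at a time.

    Hypothetical helper: each window is mean-removed and zero-padded to nfft
    points before the FFT; the peak is searched only inside [f_lo, f_hi] Hz.
    """
    x = np.asarray(x, dtype=float)
    L = int(round(win_s * fs))                     # window length in samples
    f = np.fft.rfftfreq(nfft, d=1.0 / fs)
    band = (f >= f_lo) & (f <= f_hi)
    peaks = []
    for start in range(x.size - L + 1):
        seg = x[start:start + L]
        spec = np.abs(np.fft.rfft(seg - seg.mean(), n=nfft))   # zero-padded spectrum
        peaks.append(60.0 * f[band][np.argmax(spec[band])])
    return np.array(peaks)
```

Running the same function on the radar, RGB, depth, and belt signals and averaging the absolute differences of the per-window peaks yields mean errors of the kind listed in Table 4. For slow breathing and short windows, the negative-frequency image of a real sinusoid can bias the peak location, so the window length trades time resolution against this bias; a longer window (for example, 30 s) reduces it.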

6. Conclusions

This paper presents a novel, illumination-insensitive system and set of algorithms with which a human breathing rate is remotely extracted using a radar and an RGBD camera. The vision-based algorithm estimates the breathing rate by tracking points of interest in the frame in three dimensions (x, y, depth); these estimates, together with the radar-based estimate, are fused into a single, more accurate estimate using an ML approach. Experiments were conducted on 14 subjects and show over twofold improvement compared with the results we reported in [9], which were based on RGB vision only. Moreover, the fidelity of the extracted signals (from the RGB, depth, and radar modalities) was inspected qualitatively and quantitatively and was found to be high.

Author Contributions

Data gathering, experiments, formal analysis, method development, algorithm development—N.R.; Supervision—D.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kwon S., Kim H., Park K.S. Validation of heart rate extraction using video imaging on a built-in camera system of a smartphone; Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Diego, CA, USA. 28 August–1 September 2012; pp. 2174–2177.

2. Li X., Chen J., Zhao G., Pietikäinen M. Remote Heart Rate Measurement from Face Videos under Realistic Situations; Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition; Columbus, OH, USA. 23–28 June 2014; pp. 4264–4271.

3. Poh M.Z., McDuff D.J., Picard R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express. 2010;18:10762–10774. doi: 10.1364/OE.18.010762.

4. Poh M.Z., McDuff D.J., Picard R.W. Advancements in Noncontact, Multiparameter Physiological Measurements Using a Webcam. IEEE Trans. Biomed. Eng. 2011;58:7–11. doi: 10.1109/TBME.2010.2086456.

5. Balakrishnan G., Durand F., Guttag J. Detecting Pulse from Head Motions in Video; Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, CVPR '13; Portland, OR, USA. 23–28 June 2013; Washington, DC, USA: IEEE Computer Society; 2013. pp. 3430–3437.

6. Wu H.Y., Rubinstein M., Shih E., Guttag J., Durand F., Freeman W.T. Eulerian Video Magnification for Revealing Subtle Changes in the World. ACM Trans. Graph. 2012;31:1–8. doi: 10.1145/2185520.2185561.

7. Chen J., Chang Z., Qiu Q., Li X., Sapiro G., Bronstein A., Pietikäinen M. RealSense = Real Heart Rate: Illumination Invariant Heart Rate Estimation from Videos; Proceedings of the 2016 Sixth International Conference on Image Processing Theory, Tools and Applications (IPTA); Oulu, Finland. 12–15 December 2016.

8. Nakajima K., Matsumoto Y., Tamura T. Development of real-time image sequence analysis for evaluating posture change and respiratory rate of a subject in bed. Physiol. Meas. 2001;22:N21. doi: 10.1088/0967-3334/22/3/401.

9. Regev N., Wulich D. A simple, remote, video based breathing monitor; Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Seogwipo, Korea. 11–15 July 2017; pp. 1788–1791.

10. Koo Y.S., Ren L., Wang Y., Fathy A.E. UWB MicroDoppler Radar for human Gait analysis, tracking more than one person, and vital sign detection of moving persons; Proceedings of the 2013 IEEE MTT-S International Microwave Symposium Digest (IMS); Seattle, WA, USA. 2–7 June 2013; pp. 1–4.

11. Tariq A., Shiraz H.G. Doppler radar vital signs monitoring using wavelet transform; Proceedings of the 2010 Loughborough Antennas and Propagation Conference (LAPC); Loughborough, UK. 8–9 November 2010; pp. 293–296.

12. Hsieh C.H., Shen Y.H., Chiu Y.F., Chu T.S., Huang Y.H. Human respiratory feature extraction on an UWB radar signal processing platform; Proceedings of the 2013 IEEE International Symposium on Circuits and Systems (ISCAS); Beijing, China. 19–23 May 2013; pp. 1079–1082.

13. Lazaro A., Girbau D., Villarino R. Analysis of vital signs monitoring using an IR-UWB radar. Prog. Electromagn. Res. 2010;100:265–284. doi: 10.2528/PIER09120302.

14. Shi J., Tomasi C. Good Features to Track; Proceedings of the 1994 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); Seattle, WA, USA. 21–23 June 1994; pp. 593–600.

15. Lucas B.D., Kanade T. An Iterative Image Registration Technique with an Application to Stereo Vision; Proceedings of the 7th International Joint Conference on Artificial Intelligence (IJCAI'81), Volume 2; Morgan Kaufmann Publishers Inc.; San Francisco, CA, USA: 1981; pp. 674–679.

16. So H.C., Chan K.W. Reformulation of Pisarenko harmonic decomposition method for single-tone frequency estimation. IEEE Trans. Signal Process. 2004;52:1128–1135. doi: 10.1109/TSP.2004.823473.

17. Haykin S. Adaptive Filter Theory. 3rd ed. Prentice-Hall, Inc.; Upper Saddle River, NJ, USA: 1996.

18. Kay S.M. Fundamentals of Statistical Signal Processing, Volume 2: Detection Theory. Prentice-Hall, Inc.; Upper Saddle River, NJ, USA: 1993.

19. Kay S.M. Fundamentals of Statistical Signal Processing: Estimation Theory. Prentice-Hall, Inc.; Upper Saddle River, NJ, USA: 1993.

20. Neulog's Respiration Monitor Belt logger NUL-236. Available online: https://neulog.com/respiration-monitor-belt/ (accessed on 13 December 2019).