Abstract

Objective

The United States faces an opioid crisis. Integrating prescription drug monitoring programs into electronic health records offers promise to improve opioid prescribing practices. This study aimed to evaluate 2 different user interface designs for prescription drug monitoring program and electronic health record integration.

Materials and Methods

Twenty-four resident physicians participated in a randomized controlled experiment using 4 simulated patient cases. In the conventional condition, prescription opioid histories were presented in tabular format, and computerized clinical decision support (CDS) was provided via interruptive modal dialogs (ie, pop-ups). The alternative condition featured a graphical opioid history, a cue to visit that history, and noninterruptive CDS. Two attending pain specialists judged prescription appropriateness.

Results

Participants in the alternative condition wrote more appropriate prescriptions. When asked after the experiment, most participants stated that they preferred the alternative design to the conventional design.

Conclusions

How patient information and CDS are presented appears to have a significant influence on opioid prescribing behavior.

Keywords: user-computer interface, decision support systems, clinical, medical order entry systems, prescription drug monitoring programs, pain management

INTRODUCTION

Opioid overdose deaths quadrupled between 1999 and 2016 in the United States, accounting for more than half of drug overdose deaths.1 Prescribed opioids are believed to have contributed to the crisis: 1 in 10 patients prescribed opioids became dependent,2 4 in 5 heroin users started with an opioid prescription,3 and many opioid prescriptions have been diverted.4

This is not the first opioid crisis in the United States—lawmakers responded to the crisis of the 1960s by writing the Controlled Substances Act of 1970, which set the contemporary framework for controlling drugs with “abuse potential” via criminological and medical institutions.5 In response to the current crisis, all 50 U.S. states and Guam have developed prescription drug monitoring program (PDMP) databases to track prescriptions of most controlled substances, and to make patients’ prescription histories available to licensed prescribers.6,7 Further, the U.S. Centers for Disease Control and Prevention and other governing bodies have recommended or even required that prescribers verify each patient’s PDMP history before prescribing opioids.8–10

In most states, PDMPs are provided via standalone websites; they are not integrated with the electronic health records (EHRs) that most prescribers now use to place medication orders. Therefore, accessing PDMP information is often a tedious task: a prescriber must first locate the website, and then contend with strict password logistics, rigid search engines, and cluttered information displays.7,11–13

There have been some efforts to integrate PDMPs into EHRs,14–17 which may address the difficulties locating and logging into PDMPs. Whether this integration should be mandated has also been discussed.17,18 However, little attention has been paid to where and how PDMP information should be presented in the EHR, as well as how clinical decision support (CDS) should be designed to augment cognition while introducing minimal disruption to workflow.7,19,20

A conventional method of implementation would be to provide the PDMP in a dedicated tab in the user interface and to present CDS via interruptive modal dialogs (ie, pop-up alerts). Such a design is, however, susceptible to a number of issues known in the human factors and health informatics literature. First, prescribers have difficulty reading and interpreting PDMP reports (eg, owing to cluttered, disorganized displays).7,12 Second, without contextual cues to draw prescribers’ attention to the PDMP information, the tab is likely to be neglected.21 As for CDS, the problem of alert fatigue22 may arise: when a CDS system issues too many alerts, many of them irrelevant, users tend to stop paying attention to them.23 Further, the literature advises against restrictive designs such as modal dialogs24,25 because of their interruptive nature, and excessive use of modal dialogs may have contributed to clinician dissatisfaction and burnout.26–29

In this work, we applied human factors principles to improve the design of PDMP-EHR integration. Human factors research aims to develop technologies that fit users’ expectations, rather than requiring users to conform to any given design. It has been widely applied in health informatics to study a variety of applications such as medical devices,30,31 EHRs,32 and computerized prescriber order entry systems.33–37

In this study, we conducted a simulation experiment to compare 2 designs for PDMP-EHR integration. In the conventional design, the patient’s controlled substance prescription history is presented in tabular format, in a separate PDMP tab, and CDS advisories are presented in interruptive modal dialogs when an order is about to be placed. In the alternative design, multiple contextual cues are provided to draw prescribers’ attention to PDMP information, along with noninterruptive CDS presented as part of the ordering process. We subsequently provide details and illustrations of these 2 designs.

We hypothesized that the alternative design would increase the appropriateness of physician prescriptions, because it was intended to facilitate “information foraging,”21 convey information through cognitively efficient graphic representation,7,38 and deliver CDS early on in the prescribing process.39 We also hypothesized that physicians would prefer the alternative design, the alternative condition would require less time to use, and physicians would visit the PDMP tab more often under the alternative condition when information was available. Next, we describe the 2 designs and the protocol for the simulated experiment.

MATERIALS AND METHODS

Two competing designs for PDMP-EHR integration

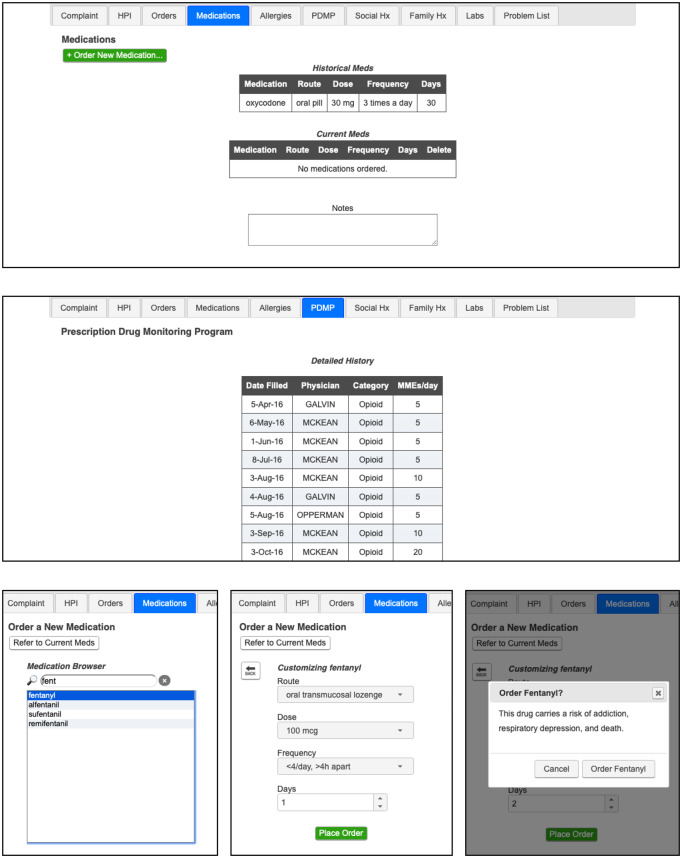

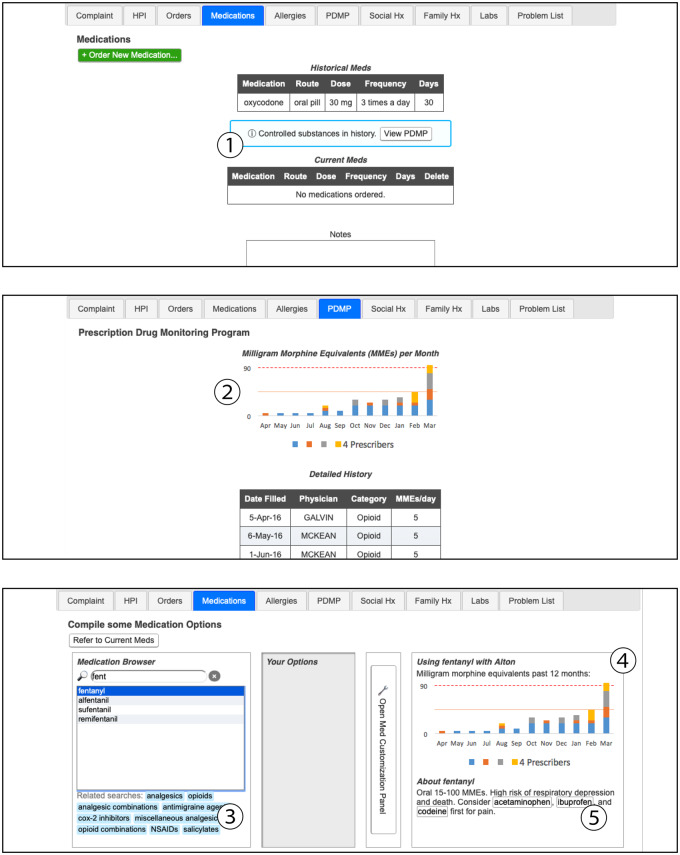

Demonstrations of the 2 designs are available online (https://www.ics.uci.edu/~mihussai/demos/2019-simulation-study/). In Table 1, we summarize the features present in each of the designs. Briefly, the conventional design (Figure 1) has a dedicated PDMP tab, which presents controlled substance prescription history in a tabular format, as is typical of PDMPs.7 It also features a typical CDS design, which presents text-only modal dialogs immediately before the order is placed.33,39 The alternative design also presents a noninterruptive cue to draw the prescriber’s attention to PDMP information, a graphical opioid prescription history along with tabular PDMP data, and noninterruptive CDS advisories presented as part of the ordering process (Figure 2).

Table 1.

Feature description and comparison

| Feature | Conventional | Alternative |
|---|---|---|
| Medication list | Displays medication history and current medications. | Cue for availability of PDMP information. When PDMP data are available for the patient, a noninterruptive cue appears, with a shortcut to the PDMP tab ①. |
| Prescribed controlled substances tab | Displays a table, showing date filled, prescribing physician, drug category, and MMEs. | Graphical presentation of opioid history. The tabular PDMP data are supplemented with a stacked bar chart showing MMEs and distinct prescribers in the past year ②. |
| Medication ordering entry | | |

CDC: Centers for Disease Control and Prevention; CDS: clinical decision support; MME: milligram morphine equivalent; PDMP: prescription drug monitoring program.

Figure 1.

Conventional design, which presents the patient’s medication history as a simple list (top), the prescription drug monitoring program information in a tabular format on a separate tab (middle), and interruptive modal dialogs for delivering decision support (bottom).

Figure 2.

Alternative design, featuring a contextual cue when prescription drug monitoring program information is available (①), a graphical presentation of opioid prescription history (②), and noninterruptive decision support delivered as contextual cues as part of the ordering process (③, ④, and ⑤).
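As a rough illustration of the kind of data that could sit behind the graphical opioid history (②), the following sketch groups PDMP fill records by month and reports total MMEs and the number of distinct prescribers. The record layout, the sample values, and the function name `monthly_summary` are hypothetical; this is not the implementation used in the simulated EHR.

```python
from collections import defaultdict
from datetime import date

# Hypothetical PDMP fill records: (date filled, prescriber, MME of the fill).
fills = [
    (date(2019, 1, 5), "Dr. A", 150.0),
    (date(2019, 1, 20), "Dr. B", 300.0),
    (date(2019, 2, 11), "Dr. A", 150.0),
]

def monthly_summary(records):
    """Return {(year, month): (total MME, number of distinct prescribers)}."""
    totals = defaultdict(float)
    prescribers = defaultdict(set)
    for filled, prescriber, mme in records:
        key = (filled.year, filled.month)
        totals[key] += mme
        prescribers[key].add(prescriber)
    return {key: (totals[key], len(prescribers[key])) for key in sorted(totals)}

summary = monthly_summary(fills)
```

Each month's entry could then feed one bar of a chart, with the prescriber count shown alongside it.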

In the experiment, participants completed 4 scenarios, developed by an attending pain specialist (AMN). These scenarios, and accompanying graphical prescription opioid histories, are provided in the Supplementary Appendix. We created mock patient interview videos to present the scenarios, each of which featured a white male actor between 28 and 56 years of age, to minimize potential discriminatory prescribing effects—prior research41,42 has found that opioids are prescribed less frequently for black and female patients.

Study setting and experiment protocol

All study participants were either anesthesiology or physical medicine and rehabilitation (PM&R) residents; practitioners in these disciplines commonly prescribe opioids. All participants had completed at least 1 year of residency training at a large academic medical center in Southern California. Researchers presented the study during monthly resident meetings and recruited in person. All eligible residents but 1 agreed to participate. Participants were randomly assigned to 1 of the 2 designs, with half in each condition. At the beginning of the experiment, participants viewed a tutorial video about how to use the simulated EHR. Then, participants proceeded to the first patient interview video, reviewed the patient’s medical records, and placed medication orders. The experiment concluded after the participant completed all 4 patient scenarios, which were presented in a random order. In this article, we refer to each instance of a participant completing a scenario as a trial, in accordance with how it is described in experimental psychology studies. This portion of the study took approximately half an hour, with no apparent differences in time between the 2 conditions.

After the experiment, participants who used the conventional design were shown a video tour of the alternative design, and vice versa. They were then asked which of the 2 designs they preferred and why. Participants did not receive compensation or an honorarium for their participation. The institutional review board of the University of California, Irvine reviewed the research protocol of the study and determined that it met the exemption criteria.

Data collection and appropriateness review

We implemented a tracking mechanism in both designs to record mouse clicks as well as timestamps of interaction events in order to measure the time spent between actions (eg, starting an order and placing an order).
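A minimal sketch of how time between two logged actions might be derived from such a record is given below. The event names, the log format, and the helper `seconds_between` are assumptions made for illustration; the actual tracking mechanism is not specified in this article.

```python
from datetime import datetime

# Hypothetical interaction log: (ISO timestamp, event name).
events = [
    ("2019-05-01T10:00:00", "order_started"),
    ("2019-05-01T10:00:42", "pdmp_tab_opened"),
    ("2019-05-01T10:02:15", "order_placed"),
]

def seconds_between(log, start_event, end_event):
    """Seconds from the first start_event to the first end_event after it."""
    start = None
    for stamp, name in log:
        when = datetime.fromisoformat(stamp)
        if name == start_event and start is None:
            start = when
        elif name == end_event and start is not None:
            return (when - start).total_seconds()
    return None  # the two events were not found in that order

elapsed = seconds_between(events, "order_started", "order_placed")
```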

In order to assess whether the pain medication orders placed for each scenario were appropriate, we developed an appropriateness panel review, based on the process described by McCoy et al.43 First, 2 pain specialists (AMN, BY) created a scoring rubric (included in the Supplementary Appendix) through consensus development. Then, they independently reviewed the prescriptions placed for each trial. During the entire process, reviewers were blinded to the experimental condition (alternative vs conventional) in which each prescription was written. Interrater reliability was assessed using Cohen’s kappa.44 If there were scoring differences, they were reconciled through discussion and consensus development.
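For readers unfamiliar with the statistic, the sketch below computes unweighted Cohen's kappa, defined as (observed agreement − chance agreement) / (1 − chance agreement), for two raters. The scores are invented for illustration, and whether the study used the unweighted or a weighted form is an assumption not confirmed by the text.

```python
from collections import Counter

def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Proportion of items on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's marginal frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical category throughout
    return (observed - expected) / (1.0 - expected)

# Hypothetical scores from two reviewers for six prescriptions.
reviewer_1 = [5, 3, 6, 2, 5, 4]
reviewer_2 = [5, 3, 6, 2, 4, 4]
kappa = cohens_kappa(reviewer_1, reviewer_2)
```

In this invented example the reviewers disagree on one of six items, which gives a kappa of 23/29, or roughly 0.79.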

Data analyses

We used JASP v. 0.10.2 (JASP Team, Amsterdam, the Netherlands) to conduct a 2-way mixed-effects analysis of variance (ANOVA) of appropriateness. We tested the sphericity and equality of variance assumptions using Mauchly’s and Levene’s tests, respectively.

We used a chi-square test to evaluate participants’ design preferences. We also conducted a mixed-effects ANOVA to assess time reduction from each trial to the next, between conditions, to examine the learning effect and time efficiency of each of the designs.

Further, we conducted a 1-way mixed-effects ANOVA to assess whether those in the conventional condition visited the PDMP tab less often than their peers in the alternative condition when the patient’s PDMP information was available. We also analyzed the usage of different features presented in the interfaces of the 2 conditions (eg, recommended alternative medications or pop-up alerts).

RESULTS

Participant demographics

Seventeen (71%) of the participants were anesthesiology residents and the other 7 (29%) were PM&R residents. We randomly assigned 9 (53%) of the anesthesiology residents and 3 (43%) of the PM&R residents to the conventional condition, and the rest to the alternative condition. Among the participants who reported demographic data, the mean age was 31 (range, 26–38) years of age; there were 8 (40%) women and 12 (60%) men. Fifty-five percent (n = 10) of them were White, 35% (n = 7) were Asian, and 8% (n = 2) were Black or African American. The Supplementary Appendix provides additional demographic details.

Appropriateness analysis

Participants completed 94 trials in total; 2 were incomplete due to loss of network connectivity. Interrater reliability was high between the 2 attending physicians’ appropriateness ratings (Cohen’s κ = 0.93).44

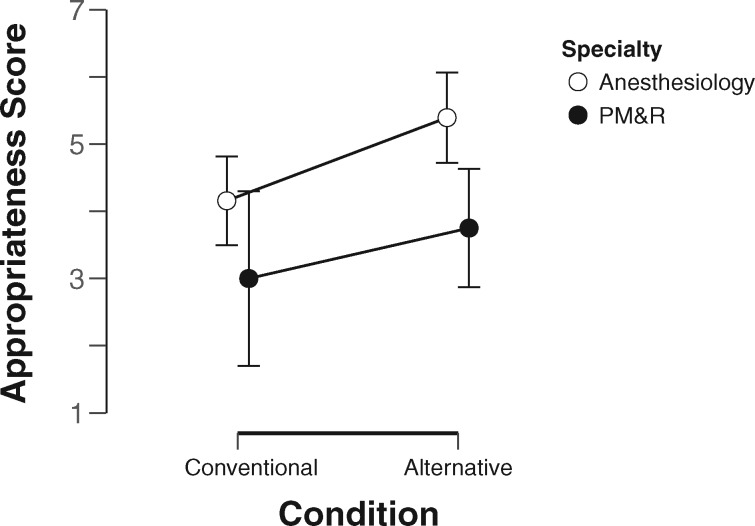

The results of our 2-way mixed-effects ANOVA are shown in Table 2. According to these results, there was a borderline significant effect of the experimental condition, which explained 14% of the variance (F(1, 18) = 4.40, P = .05, η² = .14); prescribers who used the conventional design achieved lower scores (3.94 ± 1.96) than those who used the alternative design (4.85 ± 1.84). Further, there was a significant main effect of specialty, which explained 28% of the variance (F(1, 18) = 8.73, P < .05, η² = .28). Overall, anesthesiology residents received higher appropriateness scores (4.80 ± 1.83) than PM&R residents (3.43 ± 1.86).

Table 2.

Two-way mixed-effects analysis of variance of prescription appropriateness

| | Sum of Squaresᵃ | df | Mean Square | F | P | η² |
|---|---|---|---|---|---|---|
| Between-participants effects | | | | | | |
| Condition | 18.546 | 1 | 18.546 | 4.398 | .050 | .140 |
| Specialty | 36.819 | 1 | 36.819 | 8.732 | .008 | .278 |
| Condition × Specialty | 1.113 | 1 | 1.113 | 0.264 | .614 | .008 |
| Residual | 75.897 | 18 | 4.217 | | | |
| Within-participants effects | | | | | | |
| Scenario | 76.292 | 3 | 25.431 | 13.955 | <.001 | .242 |
| Scenario × Condition | 5.732 | 3 | 1.911 | 1.049 | .379 | .018 |
| Scenario × Specialty | 0.411 | 3 | 0.137 | 0.075 | .973 | .001 |
| Scenario × Condition × Specialty | 2.061 | 3 | 0.687 | 0.377 | .770 | .007 |
| Residual | 98.406 | 54 | 1.822 | | | |

ᵃType III sum of squares.

There were no significant interaction effects. Neither Mauchly’s test nor Levene’s test indicated a violation of the corresponding assumption (both P > .05). As shown in Figure 3, those in the alternative condition tended to receive higher scores.
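The F ratios and η² values for the between-participants effects can be re-derived from the sums of squares in Table 2, as sketched below. The choice of denominator for η² (the between-participants total, including its residual) is an inference from the reported numbers, not a statement of how JASP computes it.

```python
# Between-participants sums of squares and degrees of freedom from Table 2.
between = {
    "Condition": (18.546, 1),
    "Specialty": (36.819, 1),
    "Condition x Specialty": (1.113, 1),
}
residual_ss, residual_df = 75.897, 18

residual_ms = residual_ss / residual_df
# Assumption: eta squared is taken over the between-participants total.
total_ss = sum(ss for ss, _ in between.values()) + residual_ss

def effect_stats(name):
    """Return (F ratio, eta squared) for one between-participants effect."""
    ss, df = between[name]
    f_ratio = (ss / df) / residual_ms
    eta_squared = ss / total_ss
    return f_ratio, eta_squared

f_condition, eta_condition = effect_stats("Condition")
f_specialty, eta_specialty = effect_stats("Specialty")
```

Under that assumption, the computed values round to the F and η² entries reported for Condition and Specialty.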

Figure 3.

Appropriateness scores by specialty and experimental condition. PM&R: physical medicine and rehabilitation.

Participants’ preferences

As described previously, researchers showed a video of the alternative design to participants randomly assigned to the conventional condition, and vice versa. Among those who provided a preference, 7 (70%) in the conventional condition stated that they preferred the alternative design and 9 (82%) in the alternative condition preferred it to the conventional design. Using a chi-square test, we found this result to be statistically significant (n = 21; χ²(1) = 5.74, P < .05). The top reason provided by the participants for preferring the alternative design was the visual representation of PDMP information, followed by its flexibility in interaction, and participants’ aversion to modal dialogs.
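One plausible reading of this test is a goodness-of-fit comparison of the pooled preferences (16 for the alternative design, 5 otherwise) against an even split. The sketch below works under that assumption, and further assumes that the 5 remaining respondents preferred the conventional design. It yields a statistic of about 5.76, close to but not identical with the reported 5.74, so the authors' exact procedure may have differed.

```python
import math

# Stated preferences reported above: 7 + 9 for the alternative design,
# and (by assumption) the remaining 5 of 21 for the conventional design.
observed = {"alternative": 16, "conventional": 5}

def chi_square_equal_preference(counts):
    """Goodness-of-fit statistic and P value against a 50/50 split (df = 1)."""
    if len(counts) != 2:
        raise ValueError("this sketch handles exactly two categories")
    expected = sum(counts.values()) / 2
    statistic = sum((n - expected) ** 2 / expected for n in counts.values())
    # For 1 degree of freedom, the chi-square survival function
    # equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value

statistic, p_value = chi_square_equal_preference(observed)
```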

Trial duration and feature usage

In our mixed-effects ANOVA of time, we detected a statistically significant overall reduction in trial completion time as participants progressed through the 4 trials (144 seconds vs 135 seconds vs 89 seconds vs 91 seconds; F(3, 60) = 6.24, P < .001), but we did not detect a statistically significant difference in trial completion time between the 2 conditions. We also did not detect an interaction between trial progression and experimental condition.

As mentioned in the Materials and Methods section, we conducted a 1-way mixed-effects ANOVA to measure the influence of the experimental condition on whether participants checked the PDMP tab when information was available. We found a significant interaction effect of scenario and experimental condition on whether the participant visited the PDMP tab, which explained 10% of the variance (F(3, 60) = 3.44, P < .05, η² = .10); that is, the design and the presence of a PDMP history produced the effect together. Scenarios 3 and 4 were the only scenarios in which PDMP information was available; both patients had been prescribed opioids in the past year. In these scenarios, participants in the conventional condition neglected to visit the PDMP tab 58% of the time, whereas their peers in the alternative condition neglected to visit the tab only 8% and 27% of the time in scenarios 3 and 4, respectively. There were no main effects, as expected. Levene’s test indicated unequal variances for scenario 3 (P < .05).

In the conventional condition, participants overrode 45 of 47 (96%) modal dialogs. In the alternative condition, the patient’s PDMP information was available in 23 trials. In 14 (61%) of these cases, participants clicked the PDMP shortcut button (Figure 2, ①). In another 6 (26%) trials, they clicked the PDMP tab directly, rather than using the shortcut. Among the other features provided in the alternative condition, alternative medication suggestions were rarely clicked, and search suggestions were never used. However, we do not know whether the information presented on screen had an influence on participants’ prescribing decisions.

DISCUSSION

To combat the opioid crisis, there is a broad consensus that it is imperative to integrate PDMPs into EHRs to make it easier for prescribers to access patients’ controlled substance prescription histories at the point of care.7,11–13 However, how PDMP information should be presented in the EHR, and how this information should be optimally incorporated into clinicians’ workflow and decision-making processes, have remained understudied.

As mentioned previously, the primary approach to presenting medication safety alerts is through modal dialogs. Modal dialogs are relatively easy to implement, and there seems to be a perception that, because of their interruptive nature, they are an effective means of obtaining clinicians’ attention and therefore make action more likely. However, an extensive body of literature suggests that alerts delivered through modal dialogs are frequently overridden,22,45 as in our study, in which participants overrode 96% of modal dialogs. Further, modal dialogs are a significant contributing factor to clinician frustration,46 burnout,47 and potentially unsafe prescribing practices.27

Alternative design improved prescription appropriateness

In our study, participants who used the alternative design for integrating PDMP information into the EHR, which features noninterruptive, contextual cues, wrote more appropriate pain medication prescriptions than did those who used an interruptive, modal dialog–based design, as we expected. This result suggests that attention to interactive design can improve the effectiveness of PDMP-EHR integration while minimizing disruption to workflow and clinicians’ decision-making processes.

Participants preferred the alternative design

Most participants preferred the contextual cue-based version, again as expected. The results of participant feedback suggest that participants found the graphical PDMP display to be valuable, and they also liked the fact that interaction with the system in the alternative design was more flexible. In related research, prescribers have stated that they are unlikely to check the database unless they see a legitimate reason to do so.7,11,12 We believe that the PDMP history indicator provided one such reason: the fact that the database actually had some information to offer.

Contextual information preferable to direct persuasion

While the alternative condition did not appear to save time, it also did not appear to increase the time burden. It appears, then, that the alternative condition allowed physicians to make better use of their time, as measured by appropriateness. For example, according to our usage statistics, those in the alternative design condition were far more likely to visit the PDMP tab when information was available, as we expected; we attribute this to the PDMP history indicator, which participants frequently clicked.

Further, direct persuasion features (eg, modal dialogs and alternative medication recommendations) were almost always ignored. We believe that alternative medication recommendations could be more acceptable if they were more tuned to the patient’s chief complaint, problem list, or diagnoses—developing such a recommender system would require careful research in its own right.

The relative apparent efficacy of those “guiding” features, such as the PDMP history cue and the visual representation of PDMP data, seems to lend credence to design principles such as “anticipate clinician needs and bring information to clinicians at the time they need it.”48 We also note that participants said they liked the alternative design’s flexibility. By this, we believe that they were referring to its support for flexible task wayfinding,49 the process by which a user explores the structure of a task, such as composing a medication order. The alternative design allowed users to move quite freely between the “high level” (eg, compiling medication options and regimens) and the “low level” (specifying order details, such as route, dose, and frequency). By contrast, the conventional condition was more regimented; it required the user to fully specify route, dose, and frequency as soon as a medication was selected. We believe that the alternative design’s support for flexible task wayfinding contributed to the overall improvement in appropriateness.

We conclude that alert fatigue continues to be a barrier to realizing the efficacy of CDS systems. Future research should seek alternative means of delivering decision-supporting information, such as through contextual cues.

Limitations

First, our simulation apparatus only displayed generic names of the medications, whereas most commercial EHRs display both generic and brand names. However, because generic names were presented in both conditions (alternative and conventional), we do not believe that it influenced the outcomes of the study. Second, both attending physicians who scored the results are anesthesiologists. This might explain why the anesthesiology residents received slightly higher overall scores than the PM&R residents did. Third, participants were all resident physicians. Therefore, the results may not be generalizable to more experienced participants, or physicians in specialties other than anesthesiology and PM&R. Fourth, our study was designed to evaluate multiple user interface design features; further research is needed to isolate which features contributed more to the overall effect. Further, this was an experimental study conducted in a simulated setting; further evaluation in realistic clinical environments is needed. Last, it should be acknowledged that certain U.S. states prohibit PDMP-EHR integration by law. Alternative methods for facilitating provider access to PDMP information may need to be developed for these states, or lawmakers may consider allowing some form of integration given the improved information utility.

CONCLUSION

With PDMP-EHR integration efforts projected to be underway across the United States, it would be prudent to consider using human factors principles to ensure such integration is not only useful but also usable, in order to achieve its maximum benefits. In this study, we found that an alternative design using graphical presentation of PDMP data and contextual cues resulted in improved pain prescribing compared with a conventional design that features tabular data display and modal dialogs for presenting CDS. Based on these results, we conclude that the effectiveness of PDMP-EHR integration is critically dependent on interactive design.

FUNDING

The project described was supported in part by the University of California, Irvine Council on Research, Computing, and Libraries and the U.S. Department of Education Graduate Assistance in Areas of National Need Fellowship. It was also supported by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through grant UL1 TR0001414, awarded to Kai Zheng. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health or other funding bodies.

AUTHOR CONTRIBUTIONS

MIH provided substantial contributions to the conception and design of the work, as well as the acquisition, analysis, and interpretation of data for the work, and drafted portions of the work and revised it critically for important intellectual content. AMN provided substantial contributions to the design of the work, as well as the acquisition and analysis of data for the work, and revised it critically for important intellectual content. BGY provided substantial contributions to the design of the work, as well as the analysis of data for the work, and revised it critically for important intellectual content. LS provided substantial contributions to the design of the work, as well as the acquisition of data for the work, and drafted portions of the work. KG provided substantial contributions to the conception and design of the work, as well as the interpretation of data for the work, and revised it critically for important intellectual content. All authors provided final approval of the version to be published, and agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

ACKNOWLEDGMENTS

We are grateful to the participants for their valuable time, to the University of California, Irvine Simulation Center for the use of their facility, and to Kathryn Campbell, the statistical consultant, for her outstanding professional generosity.

CONFLICT OF INTEREST STATEMENT

None declared.

REFERENCES

- 1. Rudd RA, Seth P, David F, et al. Increases in drug and opioid-involved overdose deaths—United States, 2010–2015. MMWR Morb Mortal Wkly Rep 2016; 65: 1445–52. [DOI] [PubMed] [Google Scholar]

- 2. Vowles KE, McEntee ML, Julnes PS, et al. Rates of opioid misuse, abuse, and addiction in chronic pain: a systematic review and data synthesis. Pain 2015; 156 (4): 569–76. [DOI] [PubMed] [Google Scholar]

- 3. Cicero TJ, Ellis MS, Surratt HL, et al. The changing face of heroin use in the United States: a retrospective analysis of the past 50 years. JAMA Psychiatry 2014; 71 (7): 821–6. [DOI] [PubMed] [Google Scholar]

- 4. Clarke JL, Skoufalos A, Scranton R.. The American opioid epidemic. Popul Health Manag 2016; 19 (Suppl 1): S1–10. [DOI] [PubMed] [Google Scholar]

- 5.Controlled Substances Act of 1970, P.L. No. 91-513, §84 Stat. 1236.

- 6.Prescription Drug Monitoring Program Training and Technical Assistance Center. Status of Prescription Drug Monitoring Programs (PDMPs). 2017. http://www.pdmpassist.org/pdf/PDMP_Program_Status_20170824.pdf. Accessed April 3, 2019. [Google Scholar]

- 7. Hussain MI, Nelson AM, Polston G, et al. Improving the design of California’s Prescription Drug Monitoring Program (PDMP). JAMIA Open 2019; 2 (1): 160–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Dowell D, Haegerich T, Chou R.. CDC Guideline for Prescribing Opioids for Chronic Pain—United States, 2016 Atlanta, GA: Centers for Disease Control and Prevention; 2016. MMWR Recomm Rep 2016; 65 (1): 1–49. [DOI] [PubMed]

- 9. Dowell D, Zhang K, Noonan RK, et al. Mandatory provider review and pain clinic laws reduce the amounts of opioids prescribed and overdose death rates. Health Aff (Millwood) 2016; 35 (10): 1876–83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Unick GJ, Rosenblum D, Mars S, et al. Intertwined epidemics: national demographic trends in hospitalizations for heroin- and opioid-related overdoses, 1993–2009. PLoS One 2013; 8 (2): e54496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Irvine JM, Hallvik SE, Hildebran C, et al. Who uses a prescription drug monitoring program and how? Insights from a statewide survey of Oregon clinicians. J Pain 2014; 15 (7): 747–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Leichtling GJ, Irvine JM, Hildebran C, et al. Clinicians’ use of prescription drug monitoring programs in clinical practice and decision-making. Pain Med 2016; 18: 1063–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Haffajee RL, Jena AB, Weiner SG.. Mandatory use of prescription drug monitoring programs. JAMA 2015; 313 (9): 891–2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Connecting for Impact: Integrating Health IT and PDMPs to Improve Patient Care. Washington, DC: Office of the National Coordinator for Health Information Technology; 2013. https://healthit.gov/sites/default/files/connecting_for_impact-final-508.pdf. Accessed January 28, 2018.

- 15. Baldwin G. Integrating and Expanding Prescription Drug Monitoring Program Data: Lessons from Nine States Atlanta, GA: Centers for Disease Control and Prevention; 2017. https://www.cdc.gov/drugoverdose/pdf/pehriie_report-a.pdf Accessed April 20, 2019.

- 16. State Health Information Exchange Cooperative Agreement Program Washington, DC: Office of the National Coordinator for Health Information Technology; 2011. https://healthit.gov/topic/onc-hitech-programs/state-health-information-exchange. Accessed February 12, 2018.

- 17. Tahir D. Fed mandate to use opioid data-sharing technology angers states. Politico 2019. https://www.politico.com/story/2019/04/12/opioid-data-sharing-angers-states-1320532. Accessed April 19, 2019. [Google Scholar]

- 18. Cohen JK. There’s a push to slow down required EHR-PDMP integration. Modern Healthcare2019. https://www.modernhealthcare.com/politics-policy/theres-push-slow-down-required-ehr-pdmp-integration. Accessed July 2, 2019.

- 19. Finley EP, Schneegans S, Tami C, et al. Implementing prescription drug monitoring and other clinical decision support for opioid risk mitigation in a military health care setting: a qualitative feasibility study. J Am Med Inform Assoc 2018; 25 (5): 515–22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Sinha S, Jensen M, Mullin S, et al. Safe opioid prescription: a SMART on FHIR approach to clinical decision support. Online J Public Health Inform 2017; 9 ( 2; ): 1–19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Pirolli P, Card S.. Information foraging. Psychol Rev 1999; 106 (4): 643–75. [Google Scholar]

- 22. van der Sijs H, Aarts J, Vulto A, et al. Overriding of drug safety alerts in computerized physician order entry. J Am Inform Assoc 2006; 13: 138–47. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Wickens CD, Hollands JG, Banbury S, et al. Alarms and alert systems In: Campanella C, Mosher J, eds. Engineering Psychology and Human Performance. Upper Saddle River, NJ: Pearson Education; 2013: 23–25. [Google Scholar]

- 24.Apple Computer, Incorporated. Dialog Boxes. Macintosh Human Interface Guidelines. Vol. 12–13 Menlo Park, CA: Addison-Wesley Publishing Company; 1995: 178–193. [Google Scholar]

- 25. Jameson A, Berendt B, Gabrielli S, et al. Choice architecture for human-computer interaction. In: Bederson B, ed. Found Trends Hum Comput Interact. Boston, MA, USA: Now Publishers, 2013; 7 (1–2): 1–235.

- 26. Gregory M, Russo E, Singh H. Electronic health record alert-related workload as a predictor of burnout in primary care providers. Appl Clin Inform 2017; 8: 686–97.

- 27. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc 2019; 26 (2): 106–14.

- 28. Talbot SG, Dean W. Physicians aren’t ‘burning out.’ They’re suffering from moral injury. STAT 2018. https://www.statnews.com/2018/07/26/physicians-not-burning-out-they-are-suffering-moral-injury/. Accessed April 1, 2019.

- 29. Litz BT, Stein N, Delaney E, et al. Moral injury and moral repair in war veterans: a preliminary model and intervention strategy. Clin Psychol Rev 2009; 29 (8): 695–706.

- 30. Sawyer D, Aziz K, Backinger C, et al. Do it by Design: An Introduction to Human Factors in Medical Devices. In: U.S. Department of Health and Human Services, Health and Human Services, Public Health Service, Food and Drug Administration, Center for Devices and Radiological Health. Washington, DC; 1996.

- 31. Malhotra S, Laxmisan A, Keselman A, et al. Designing the design phase of critical care devices: a cognitive approach. J Biomed Inform 2005; 38 (1): 34–50.

- 32. Patel VL, Kaufman DR. Cognitive science and biomedical informatics. In: Shortliffe EH, Cimino JJ, eds. Biomedical Informatics. London, United Kingdom: Springer-Verlag; 2014: 109–48.

- 33. Russ AL, Zillich AJ, Melton BL, et al. Applying human factors principles to alert design increases efficiency and reduces prescribing errors in a scenario-based simulation. J Am Med Inform Assoc 2014; 21 (e2): e287–96.

- 34. Green LA, Nease D, Klinkman MS. Clinical reminders designed and implemented using cognitive and organizational science principles decrease reminder fatigue. J Am Board Fam Med 2015; 28 (3): 351–9.

- 35. Bell GC, Crews KR, Wilkinson MR, et al. Development and use of active clinical decision support for preemptive pharmacogenomics. J Am Med Inform Assoc 2014; 21 (e1): e93–9.

- 36. Horsky J, Phansalkar S, Desai A, et al. Design of decision support interventions for medication prescribing. Int J Med Inform 2013; 82 (6): 492–503.

- 37. Phansalkar S, Edworthy J, Hellier E, et al. A review of human factors principles for the design and implementation of medication safety alerts in clinical information systems. J Am Med Inform Assoc 2010; 17 (5): 493–501.

- 38. Cleveland W, McGill R. Graphical perception and graphical methods for analyzing scientific data. Science 1985; 229 (4716): 828–33.

- 39. Hayward J, Thomson F, Milne H, et al. ‘Too much, too late’: mixed methods multi-channel video recording study of computerized decision support systems and GP prescribing. J Am Med Inform Assoc 2013; 20 (e1): e76–84.

- 40. U.S. National Library of Medicine. Unified Medical Language System. RxNorm. 2019. https://www.nlm.nih.gov/research/umls/rxnorm/. Accessed April 12, 2019.

- 41. Heins J, Heins A, Grammas M, et al. Disparities in analgesia and opioid prescribing practices for patients with musculoskeletal pain in the emergency department. J Emerg Nurs 2006; 32 (3): 219–24.

- 42. Miller MM, Allison A, Trost Z, et al. Differential effect of patient weight on pain-related judgements about male and female chronic low back pain patients. J Pain 2018; 19 (1): 57–66.

- 43. McCoy AB, Waitman LR, Lewis JB, et al. A framework for evaluating the appropriateness of clinical decision support alerts and responses. J Am Med Inform Assoc 2012; 19 (3): 346–52.

- 44. Landis JR, Koch GG. An application of hierarchical kappa-type statistics in the assessment of majority agreement among multiple observers. Biometrics 1977; 33 (2): 363–74.

- 45. Hussain MI, Reynolds T, Zheng K. Medication safety alert fatigue may be reduced via interaction design and clinical role-tailoring: a systematic review. J Am Med Inform Assoc 2019; 26 (10): 1141–9.

- 46. Pine KH, Mazmanian M. Institutional logics of the EMR and the problem of “perfect” but inaccurate accounts. In: Proceedings of the 17th ACM Conference on Computer Supported Cooperative Work & Social Computing - CSCW ’14. Baltimore, MD: ACM Press; 2014: 283–94.

- 47. Hoffman S. Healing the healers: legal remedies for physician burnout. Case Studies Legal Research Paper No. 2018-10. Yale J Health Policy Law Ethics 2019; 18: 59.

- 48. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10 (6): 523–30.

- 49. Mirel B. Usefulness: focusing on inquiry patterns, task landscapes, and core activities. In: Buehler M, Cerra D, Siebert B, Breyer B, eds. Interaction Design for Complex Problem Solving: Developing Useful and Usable Software. San Francisco, CA, USA: Morgan Kaufmann; 2004: 32–64.
