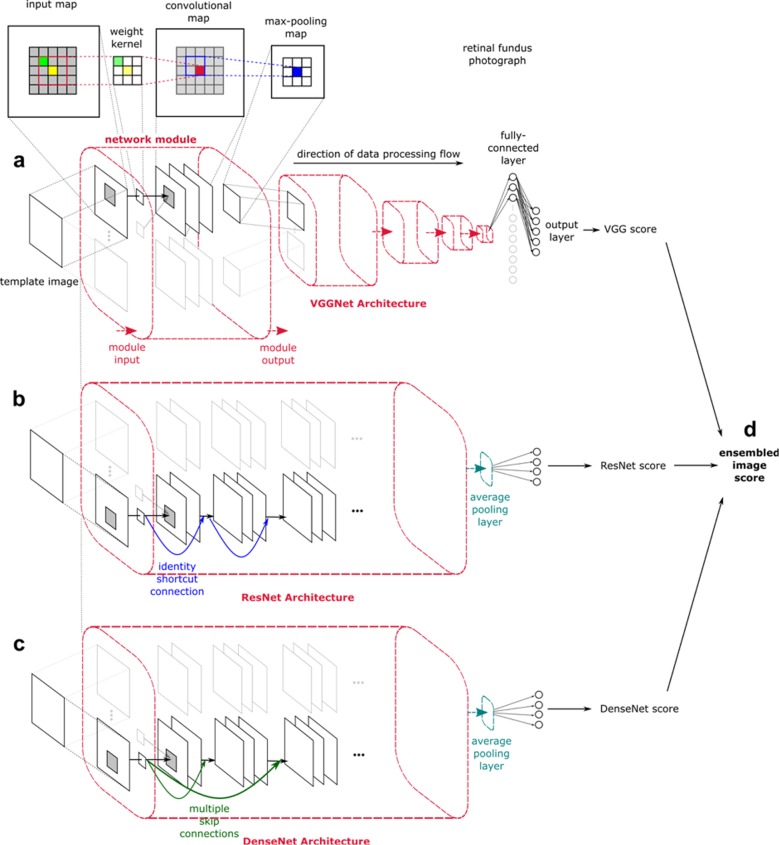

Fig. 4. Convolutional neural networks investigated.

The architecture of convolutional neural networks (CNNs) is based on a few general principles. The network is composed of mathematically weighted neurons arranged in sequential layers, and the signal passes sequentially from the input layer through to the output layer. For this study, each input image was pre-processed by scaling it to a fixed template of 512 × 512 pixels. These images were then represented in the input layer as a matrix of red, green, blue (RGB) values. Sequential convolutions were performed by superimposing a weighted kernel over these input maps; our study used a 3 × 3 weighted kernel followed by max-pooling. The output layer uses a softmax classifier to generate probability values for the pre-defined output classes [15,32,52]. a VGGNet, released in 2014, is the oldest CNN used in this comparison. Despite its standard, uniform architecture of 16 layers, it has had great success at feature extraction [53]. b ResNet has been highly favored since its introduction in 2015. Its atypical architecture uses residual (skip) connections (visualized as blue arrows) that let signals bypass layers. This allows more layers to be added without compromising the ease of training, resulting in supra-human performance with a 3.6% top-5 error rate [54]. c DenseNet is a newer CNN, released in 2017, that has been shown to perform better than ResNet. Its architecture builds on a principle similar to that of ResNet, but uses a dense connectivity pattern in which each layer receives information from all preceding layers, as shown by the green arrows. This allows concatenation of sequential layers and compacts the network into a ‘denser’ configuration [40]. d The ensemble combines the three networks’ output probability scores for each eye by taking their mean value.
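The softmax output and the ensemble averaging described in panel d can be illustrated with a minimal sketch. It is not the study's implementation: the raw scores, the two-class setup, and the names `softmax`, `ensemble_mean`, and `logits_by_model` are hypothetical and chosen only for illustration.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_mean(per_model_probs):
    """Average the class probabilities of several models for one eye."""
    n_models = len(per_model_probs)
    n_classes = len(per_model_probs[0])
    return [
        sum(probs[c] for probs in per_model_probs) / n_models
        for c in range(n_classes)
    ]


# Hypothetical raw output scores for one eye (two classes); not study data.
logits_by_model = {
    "vggnet": [2.0, 0.5],
    "resnet": [1.2, 1.0],
    "densenet": [0.3, 1.1],
}

# Each network produces its own softmax probabilities ...
probs_by_model = {name: softmax(z) for name, z in logits_by_model.items()}

# ... and the ensemble score is their per-class mean.
ensemble_probs = ensemble_mean(list(probs_by_model.values()))

# The predicted class is the one with the highest averaged probability.
predicted_class = max(range(len(ensemble_probs)), key=ensemble_probs.__getitem__)
```

Because each network's softmax output sums to 1, the averaged scores also sum to 1 and can be read as class probabilities for that eye.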