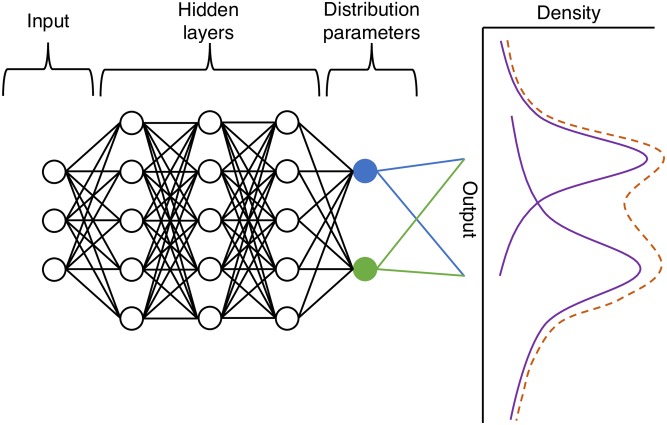

Fig 1. An MDN that emulates a model with three inputs and a one-dimensional output, using two mixture components.

The inputs are passed through two hidden layers, whose outputs feed the normalised neurons. These neurons represent the parameters of each distribution and its weight in the mixture, e.g. the mean (shown in blue) and variance (shown in green) of a normal distribution. The parameters are then used to construct a mixture of distributions (represented as a dashed line).
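As a rough illustration of the architecture in Fig 1, the forward pass can be sketched in NumPy. This is a minimal sketch rather than a reference implementation: the hidden width of 8, the `tanh` activation, the softmax used to normalise the mixture weights, the exponential used to keep the variances positive, and the names `init_params`, `mdn_forward` and `mixture_pdf` are all assumptions made for the example. The weights are random, so the network is untrained.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_params(rng, n_in=3, n_hidden=8, n_mix=2):
    """Random weights for two hidden layers and three output heads (assumed sizes)."""
    def layer(n_from, n_to):
        return rng.normal(0.0, 0.5, size=(n_from, n_to)), np.zeros(n_to)
    return {
        "h1": layer(n_in, n_hidden),
        "h2": layer(n_hidden, n_hidden),
        "pi": layer(n_hidden, n_mix),       # mixture weights
        "mu": layer(n_hidden, n_mix),       # component means
        "log_var": layer(n_hidden, n_mix),  # log of component variances
    }

def dense(params, name, h):
    W, b = params[name]
    return h @ W + b

def mdn_forward(params, x):
    """Map inputs of shape (batch, 3) to mixture weights, means and variances."""
    h = np.tanh(dense(params, "h1", x))
    h = np.tanh(dense(params, "h2", h))
    pi = softmax(dense(params, "pi", h))        # non-negative, sums to 1 per row
    mu = dense(params, "mu", h)                 # unconstrained
    var = np.exp(dense(params, "log_var", h))   # strictly positive
    return pi, mu, var

def mixture_pdf(y, pi, mu, var):
    """Density of the Gaussian mixture at y, where y has shape (batch,)."""
    diff = y[:, None] - mu
    comp = np.exp(-0.5 * diff**2 / var) / np.sqrt(2.0 * np.pi * var)
    return (pi * comp).sum(axis=-1)

rng = np.random.default_rng(0)
params = init_params(rng)
x = rng.normal(size=(4, 3))   # four samples, three inputs each
pi, mu, var = mdn_forward(params, x)
density = mixture_pdf(np.zeros(4), pi, mu, var)
```

Each row of `pi`, `mu` and `var` holds the two mixture weights, means and variances for one input sample, and `mixture_pdf` combines them into the single density that the dashed line in the figure represents.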