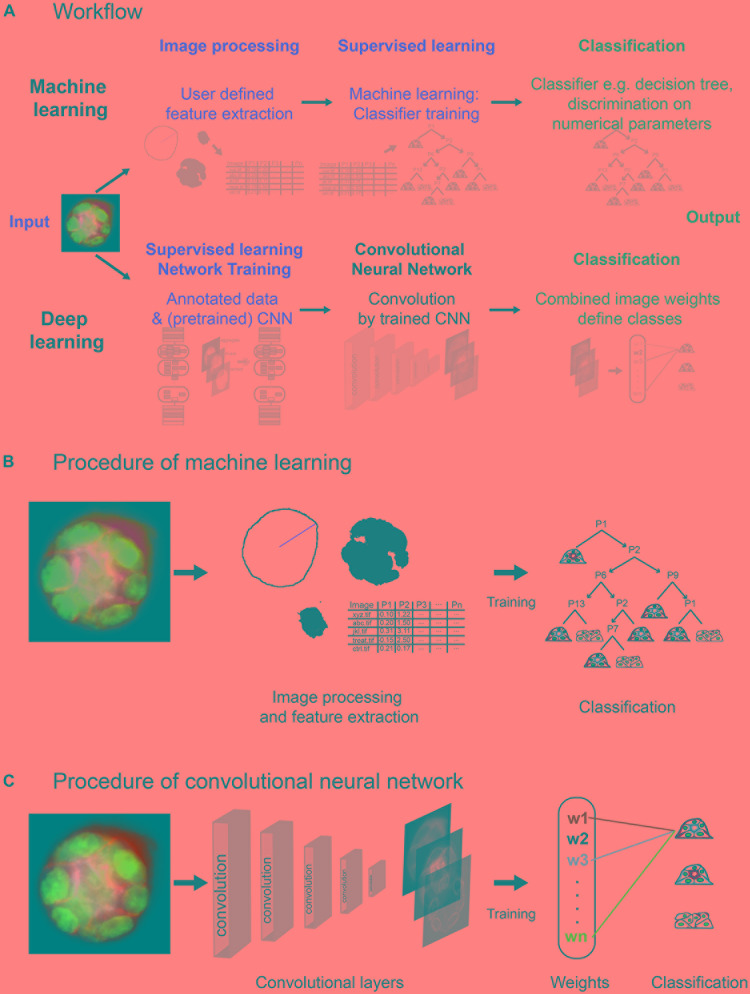

FIGURE 2.

Layout of machine learning and deep learning strategies. (A) Workflow of both approaches, showing the computational steps required for machine learning (upper panel) and deep learning (lower panel). Machine learning requires human input (red) in the image-processing and supervised-learning steps to develop a classifier for spheroid classification. Deep learning requires human input in the form of annotated data and a (pretrained) network for network training; image convolution and classification both run within the network (CNN). (B) Machine learning comprises initial image-processing and feature-extraction steps. Equatorial plane images are processed to generate numerical parameters that describe the shape and/or structure of the spheroids and the radial distribution of fluorescence intensities. After a classifier (e.g., a classification tree) has been trained on a subset of spheroid images, these numerical parameters are used to determine the polarity of additional spheroids. (C) Convolutional neural networks reduce dimensionality by applying different filters (kernels) over a defined number of layers. This allows the extraction of local features, such as edges, in the early, higher-resolution layers, up to features with a broader spatial distribution in deeper layers. These features are then weighted and combined in the densely connected layers of the network to assign the classification label.
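The machine-learning route in panel B (numerical parameters extracted from equatorial plane images, a classifier trained on a labelled subset, then applied to further spheroids) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: the images are synthetic, the spheroid is assumed to be centred in the frame, the single feature `rim_to_core_ratio` is a hypothetical summary of the radial fluorescence distribution standing in for the paper's shape, structure, and intensity parameters, the labels `"rim"` and `"core"` are hypothetical placeholders for the polarity classes, and the classification tree is reduced to a depth-1 tree (decision stump) that splits on misclassification count rather than the Gini impurity typical of CART implementations.

```python
import numpy as np


def radial_profile(image, n_bins=8):
    """Mean intensity in n_bins concentric rings around the image centre.

    Assumption: the spheroid's equatorial section is centred and fills the
    inscribed disc of the frame; pixels outside that disc are ignored.
    """
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    ys, xs = np.indices(img.shape)
    # Radius of each pixel, normalised so the inscribed disc has radius 1.
    r = np.hypot(ys - (h - 1) / 2, xs - (w - 1) / 2) / (min(h, w) / 2)
    inside = r < 1.0
    ring = (r[inside] * n_bins).astype(int)  # ring index 0 .. n_bins-1
    sums = np.bincount(ring, weights=img[inside], minlength=n_bins)
    counts = np.bincount(ring, minlength=n_bins)
    return sums / np.maximum(counts, 1)


def rim_to_core_ratio(image, n_bins=8):
    """Hypothetical feature: mean outer-ring intensity / mean inner-ring intensity."""
    prof = radial_profile(image, n_bins)
    half = n_bins // 2
    return prof[half:].mean() / max(prof[:half].mean(), 1e-12)


def fit_stump(features, labels):
    """Train a depth-1 classification tree on one numerical feature.

    Tries every midpoint between sorted feature values and keeps the threshold
    (and the class assigned on each side) with the fewest training errors.
    """
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    values = np.unique(x)  # sorted unique feature values
    if values.size < 2:
        raise ValueError("need at least two distinct feature values")
    best = None
    for t in (values[:-1] + values[1:]) / 2:
        for left in np.unique(y):
            for right in np.unique(y):
                errors = np.sum(np.where(x <= t, left, right) != y)
                if best is None or errors < best[0]:
                    best = (errors, t, left, right)
    _, t, left, right = best
    return lambda v: np.where(np.asarray(v, dtype=float) <= t, left, right)


def synthetic_section(size=64, rim_bright=True, seed=0):
    """Toy equatorial-plane image: fluorescence rising toward the rim or the core."""
    rng = np.random.default_rng(seed)
    ys, xs = np.indices((size, size))
    r = np.hypot(ys - (size - 1) / 2, xs - (size - 1) / 2) / (size / 2)
    signal = r if rim_bright else 1.0 - r
    img = np.where(r < 1.0, signal, 0.0) + rng.normal(0.0, 0.05, (size, size))
    return np.clip(img, 0.0, None)


# Train on a labelled subset, then classify further spheroids.
train = [(synthetic_section(rim_bright=b, seed=s), "rim" if b else "core")
         for s in range(4) for b in (True, False)]
classify = fit_stump([rim_to_core_ratio(img) for img, _ in train],
                     [label for _, label in train])
new_images = [synthetic_section(rim_bright=True, seed=100),
              synthetic_section(rim_bright=False, seed=101)]
print(classify([rim_to_core_ratio(img) for img in new_images]))
```

In practice several parameters would be extracted per spheroid and a deeper tree fitted, for example with scikit-learn's `DecisionTreeClassifier`; the hand-written stump keeps the sketch dependent only on NumPy while still showing the train-on-a-subset, predict-on-new-spheroids pattern.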