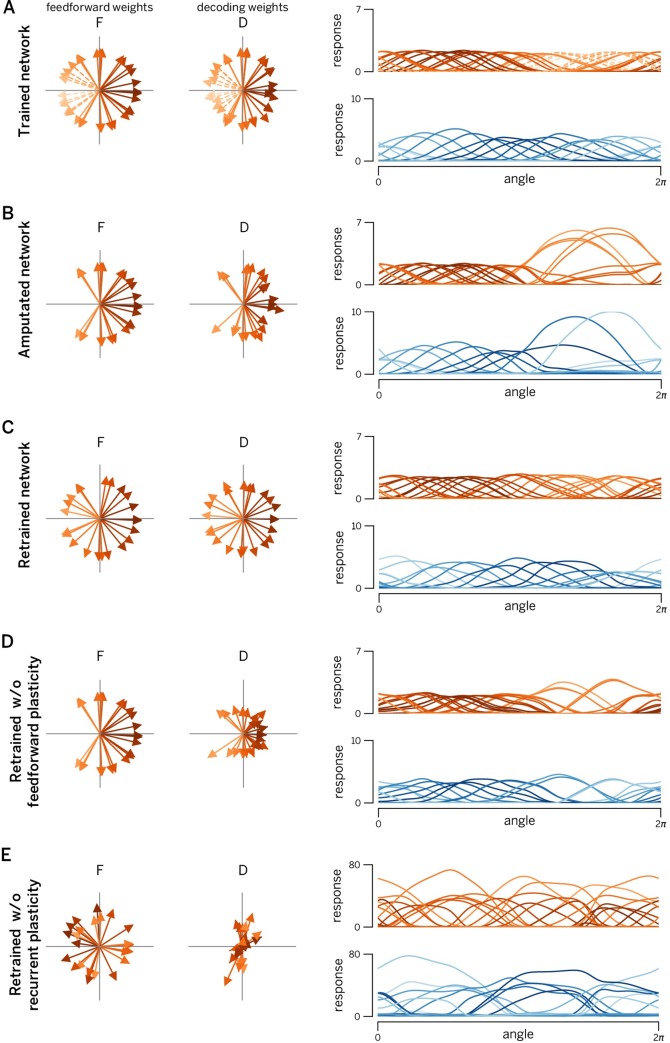

Fig 8. Manipulating recurrent and feedforward plasticity.

A. EI network with 80 excitatory neurons and 40 inhibitory neurons, trained with two uncorrelated time-varying inputs. Left panels: learnt feedforward weights of the excitatory population. Central panels: optimal decoding weights of the excitatory population. Right panels: tuning curves of excitatory neurons (red) and inhibitory neurons (blue). Neurons' encoding/decoding weights and tuning curves are shaded according to their preferred direction (the direction of their decoding weight vector) in the 2D input space. The color code is maintained in all subsequent figures (even if a neuron's preferred direction changed after lesion and/or retraining). B. Same network after deletion of the leftward-coding excitatory neurons (see dashed lines in panel A). Note that no new training of the weights has yet taken place; changes in tuning curves and decoding weights are due to internal network dynamics. We observe a large increase in firing rates and a widening and shifting of tuning curves towards the lesion, a signature that the network can still encode leftward-moving stimuli, but does so inefficiently. C. Retrained network. The lesioned network in (B) was subjected to 1000 s of retraining of the connections. Consequently, the “hole” induced by the lesion has been filled by the new feedforward/decoding weights, and the tuning curves once again cover the input space uniformly. D. Network with retrained recurrent connections only (feedforward weights are the same as in panel B). Even without feedforward plasticity, the lesioned network is able to recover its efficiency to a large extent. E. Network with retrained feedforward weights only (recurrent connections are the same as in panel B). While the feedforward weights once again cover the input space, the absence of recurrent plasticity results in a massive increase in firing rates (and a concomitant decrease in coding precision). Consequently, training only the feedforward weights after a lesion actually worsens the representation.
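
The preferred direction used for shading, and the selection of leftward-coding neurons for the lesion, can be illustrated with a minimal sketch. It assumes that each neuron's decoding weight is a 2D vector and takes its polar angle as the preferred direction. The function names, the toy weights, and the lesion half-width of π/4 are illustrative assumptions, not values taken from the figure.

```python
import numpy as np

def preferred_directions(decoding_weights):
    """Polar angle (radians, in [-pi, pi]) of each neuron's 2D decoding weight vector."""
    w = np.asarray(decoding_weights, dtype=float)
    return np.arctan2(w[:, 1], w[:, 0])

def leftward_mask(angles, half_width=np.pi / 4):
    """True for neurons whose preferred direction lies within half_width of pi (leftward).

    half_width is a hypothetical choice; the caption does not state the lesion extent.
    """
    # Wrap the angular distance to pi into [-pi, pi] before comparing.
    diff = np.angle(np.exp(1j * (np.asarray(angles) - np.pi)))
    return np.abs(diff) <= half_width

# Toy decoding weights (assumption): 80 excitatory neurons evenly tiling the circle.
theta = np.linspace(-np.pi, np.pi, 80, endpoint=False)
D = 0.1 * np.column_stack([np.cos(theta), np.sin(theta)])

angles = preferred_directions(D)   # would drive the color code
lesioned = leftward_mask(angles)   # neurons to delete
D_lesioned = D[~lesioned]          # decoding weights of the surviving neurons
```

In this sketch the lesion only removes rows of the weight matrix; no weights are retrained, which corresponds to the situation in panel B.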