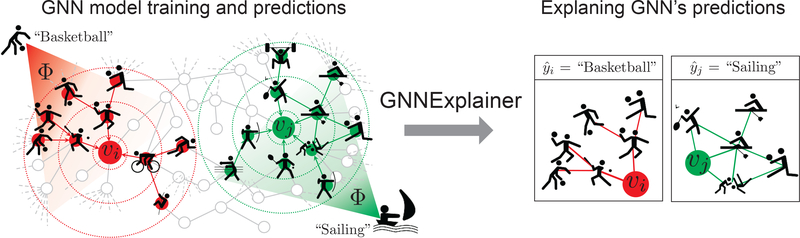

Figure 1: GnnExplainer provides interpretable explanations for predictions made by any GNN model on any graph-based machine learning task. Shown is a hypothetical node classification task where a GNN model Φ is trained on a social interaction graph to predict future sport activities. Given a trained GNN Φ and a prediction for person vi, GnnExplainer generates an explanation by identifying a small subgraph of the input graph together with a small subset of node features (shown on the right) that are most influential for that prediction. Examining the explanation for vi’s prediction, we see that many friends in one part of vi’s social circle enjoy ball games, and so the GNN predicts that vi will like basketball. Similarly, examining the explanation for vj’s prediction, we see that vj’s friends and friends of his friends enjoy water and beach sports, and so the GNN predicts