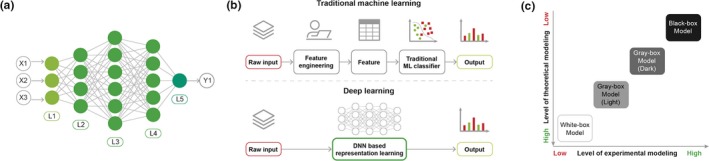

Figure 1.

(a) A deep neural network (DNN) is a collection of neurons organized in a sequence of layers. There are three types of layers. The input layer (L1) contains the features extracted from the input data. The hidden layers (L2, L3, and L4) are each a set of nodes acting as computational units; their neurons implement a nonlinear mapping from the input to the output, which is learned from the data by adapting the weights of each neuron. The output layer (L5) is similar to a hidden layer but produces the final output, and its number of nodes depends on the type of task to be solved. (b) Traditional machine learning (ML) relies on feature engineering, which transforms raw data into features that better represent the predictive task. DNNs learn both the most informative features and the mapping from that representation to the output directly from data. This ability to automatically extract high‐dimensional abstract information from data without hand‐designed features, together with the flexibility and adaptability of the model architecture, gives DNNs two advantages in the context of molecular design. (c) Depending on the balance between the levels of experimental and theoretical modeling, the outputs of ML methods can be difficult for humans to interpret (Table 1). For standard ML, the features are interpretable and the role of the algorithm is to map the representation to the output, so an interpretation of a decision can be retrieved by scrutinizing the inference process. For deep learning methods, although the input domain of the DNN is also interpretable, the learned internal representations and the flow of information through the network are harder to analyze, and additional modules must be implemented to interpret the output.
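The layer structure in panel (a) can be illustrated with a minimal forward-pass sketch in plain Python. The layer sizes, the ReLU activation, the random weights, and all function names here are illustrative assumptions, not taken from the figure; in practice the weights would be learned from data rather than drawn at random.

```python
import random

def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer (hypothetical helper)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def relu(z):
    """Nonlinear activation assumed for the hidden layers."""
    return max(0.0, z)

def identity(z):
    """Linear activation assumed for a regression-style output layer."""
    return z

def dense(x, layer, activation):
    """Each neuron computes a weighted sum of its inputs, then applies the activation."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Propagate the input features through the hidden layers, then the output layer."""
    for layer in layers[:-1]:
        x = dense(x, layer, relu)          # hidden layers (L2, L3, L4 in the figure)
    return dense(x, layers[-1], identity)  # output layer (L5 in the figure)

rng = random.Random(0)
# Assumed sizes: 8 input features, three hidden layers of 16 nodes, 1 output node.
sizes = [8, 16, 16, 16, 1]
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

features = [rng.random() for _ in range(sizes[0])]  # stand-in for the input layer (L1)
prediction = forward(features, layers)
```

The single output node corresponds to a task with one predicted value, such as a scalar property; a classification task would instead use one output node per class.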