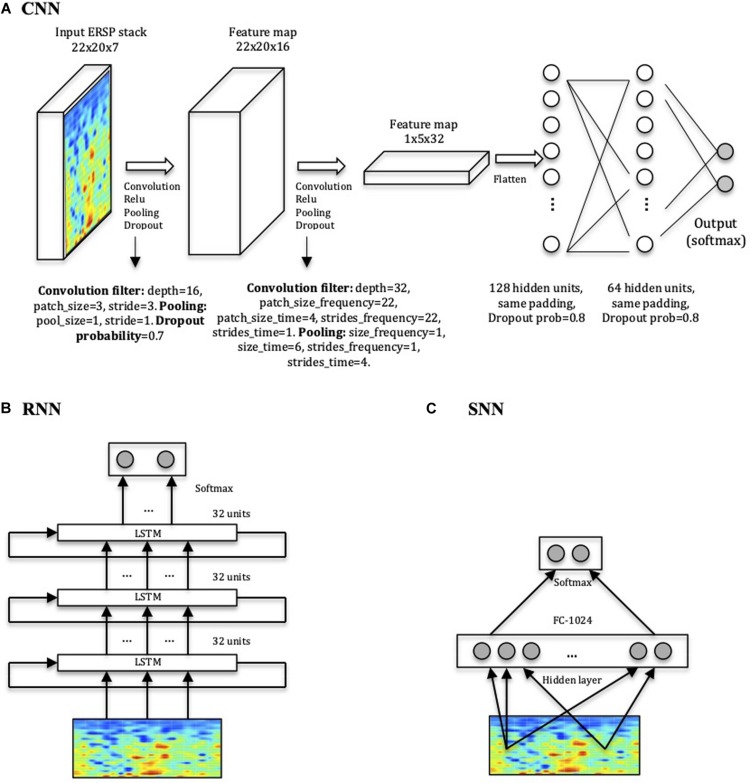

FIGURE 3.

Network architectures. (A) CNN model showing the input, the convolution and pooling layers, and the hidden-unit layers. The first two layers perform the convolution, Rectified Linear Unit (ReLU) activation, and pooling steps for feature extraction. The last two layers, with 128 and 64 hidden nodes, perform the classification into HC or ADHD. (B) RNN consisting of three stacked layers of LSTM cells, where each cell takes as input the outputs of the previous one. Each cell had 32 hidden units, and dropout was applied for regularization. (C) SNN architecture used for comparison, with one layer of 1024 units.
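To give a rough sense of the relative size of the three architectures, the following sketch counts trainable parameters from the layer widths given in the caption (128 and 64 dense nodes, three LSTM layers of 32 units, one layer of 1024 units). It is an illustration under stated assumptions, not the authors' implementation: the input sizes `N_FEATURES` and `N_INPUT_RNN`, and the two-unit output layer appended to each network, are hypothetical values chosen for the example, and the convolutional layers are omitted because the caption does not specify their filters.

```python
# Sketch: parameter counts implied by the layer widths in the caption.
# N_FEATURES, N_INPUT_RNN and the 2-unit output layer are ASSUMPTIONS;
# the caption does not state input sizes or the output-layer shape.

N_FEATURES = 100   # hypothetical flattened feature size fed to dense layers
N_INPUT_RNN = 6    # hypothetical number of input values per time step
N_CLASSES = 2      # HC vs. ADHD; one output unit per class is assumed


def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def lstm_params(n_in, n_hidden):
    """Standard LSTM layer: four gates, each with input weights,
    recurrent weights, and a single bias vector."""
    return 4 * (n_hidden * (n_in + n_hidden) + n_hidden)


def cnn_head_params(n_features=N_FEATURES):
    """Dense part of panel (A): 128 -> 64 -> assumed output layer."""
    return (dense_params(n_features, 128)
            + dense_params(128, 64)
            + dense_params(64, N_CLASSES))


def rnn_params(n_in=N_INPUT_RNN, n_hidden=32, n_layers=3):
    """Panel (B): stacked LSTM layers, each fed by the previous one."""
    total = 0
    for layer in range(n_layers):
        layer_in = n_in if layer == 0 else n_hidden
        total += lstm_params(layer_in, n_hidden)
    return total + dense_params(n_hidden, N_CLASSES)


def snn_params(n_features=N_FEATURES):
    """Panel (C): one hidden layer of 1024 units plus assumed output layer."""
    return dense_params(n_features, 1024) + dense_params(1024, N_CLASSES)


if __name__ == "__main__":
    print("CNN dense head:", cnn_head_params())
    print("Stacked LSTM:  ", rnn_params())
    print("SNN:           ", snn_params())
```

Under these assumed input sizes, the single 1024-unit layer carries several times more parameters than either the dense head of the CNN or the stacked LSTM; the actual ratio depends on the true input dimensions.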