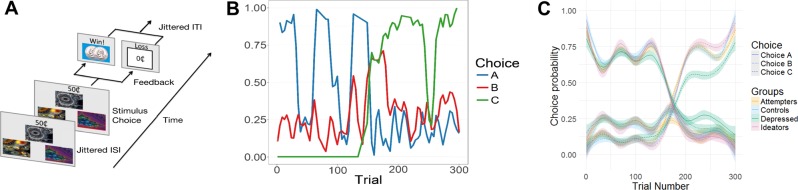

Fig. 1. Volatile reinforcement learning task and participants’ performance.

a Task schematic. On each trial, participants were presented with three stimuli and the possible winnings (10, 25, or 50¢) if the chosen stimulus was rewarded. Reward magnitude was manipulated independently of the chosen stimulus and shown at trial onset. Stimuli and possible winnings remained on screen until participants responded using MRI-compatible response gloves. The selected option was then highlighted, and the presence or absence of a reward was displayed after a jittered interstimulus interval (ISI; sampled from an exponential distribution with mean = 4000 ms). Reward feedback was displayed for 750 ms. The intertrial interval was sampled from an exponential distribution with a mean of 2920 ms.

b Reward probabilities by trial and stimulus. The probability of reward after choosing each stimulus varied dynamically over time, requiring participants to continually update their expected values. Colored lines indicate the probability of reward (y axis; 0–1) for each stimulus at each trial (x axis; 1–300).

c Task performance by group. All participants were able to track the changing probabilities and choose stimuli that reflected the updated reward probabilities. Line types represent choices (A–C, corresponding to the lines in b) and line colors represent participant groups.
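The trial structure described in panel a can be illustrated with a minimal Python sketch. It is not the authors' task code. It uses only the values stated in the caption (300 trials, winnings of 10, 25, or 50¢, exponential ISI with mean 4000 ms, 750 ms feedback, exponential intertrial interval with mean 2920 ms). Everything else is an assumption made for illustration: the function names `sample_trial_schedule` and `deliver_reward`, the uniform sampling of reward magnitudes, the absence of any truncation on the exponential draws, and the fixed random seed. The reward probability passed to `deliver_reward` would come from the trajectories in panel b, whose generating process is not given here.

```python
import random

# Values stated in the caption
N_TRIALS = 300
MAGNITUDES_CENTS = (10, 25, 50)
MEAN_ISI_MS = 4000      # jittered delay between choice and feedback
FEEDBACK_MS = 750       # fixed feedback duration
MEAN_ITI_MS = 2920      # intertrial interval


def sample_trial_schedule(n_trials=N_TRIALS, seed=0):
    """Draw per-trial reward magnitudes and timing (hypothetical helper).

    Assumptions not in the source: magnitudes are sampled uniformly,
    and the exponential intervals are not truncated.
    """
    rng = random.Random(seed)
    schedule = []
    for trial in range(1, n_trials + 1):
        schedule.append({
            "trial": trial,
            # Magnitude is drawn independently of any stimulus choice
            "magnitude_cents": rng.choice(MAGNITUDES_CENTS),
            # expovariate takes a rate, i.e. 1 / mean
            "isi_ms": rng.expovariate(1.0 / MEAN_ISI_MS),
            "feedback_ms": FEEDBACK_MS,
            "iti_ms": rng.expovariate(1.0 / MEAN_ITI_MS),
        })
    return schedule


def deliver_reward(p_reward, rng):
    """Return True with probability p_reward (hypothetical helper)."""
    return rng.random() < p_reward


if __name__ == "__main__":
    schedule = sample_trial_schedule()
    mean_isi = sum(t["isi_ms"] for t in schedule) / len(schedule)
    print(f"{len(schedule)} trials, mean ISI = {mean_isi:.0f} ms")
```

Because the intervals are drawn from exponential distributions, individual trials can have very short or very long delays; only the mean is fixed by the values above.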