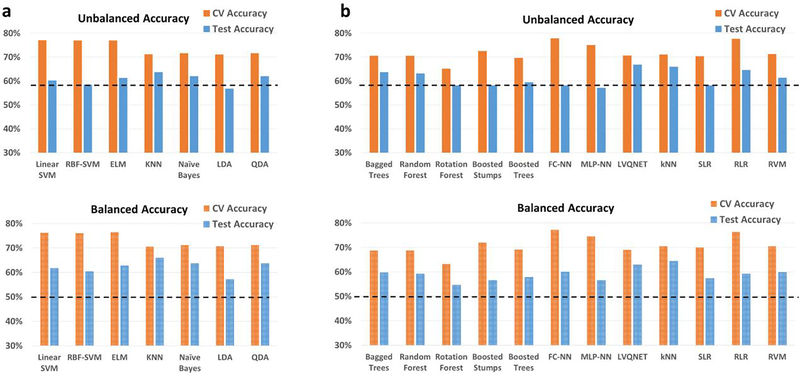

Fig. 5.

Unbalanced and balanced accuracy estimates for various classifiers (a) within the recursive cluster elimination (RCE) framework and (b) outside the RCE framework for Autism Brain Imaging Data Exchange (ABIDE) data, when the training/validation data and the hold-out test data come from different age groups, for binary classification between healthy controls and subjects with autism spectrum disorder. The training/validation data cover an age range of 7–21 years, while data from the age range of 22–58 years were used as hold-out test data. The balanced accuracy was obtained by averaging the individual class accuracies. The orange bars indicate the cross-validation (CV) accuracy, while the blue bars indicate the accuracy for the hold-out test data obtained by the voting procedure. The dotted line indicates the accuracy obtained when the classifier assigns the majority class to all subjects in the test data. For unbalanced accuracy, this is 58.5%, since healthy controls formed 58.5% of the hold-out test data. For balanced accuracy, this is exactly 50%. We chose the majority classifier as the benchmark because a classifier must exceed its accuracy if it has learned anything from the training data. The discrepancy between the biased estimates of the CV accuracy and the unbiased estimates of the hold-out accuracy is noteworthy. The best hold-out test accuracy was 66.8%, obtained with the learning vector quantization neural network (LVQNET), while the best balanced hold-out test accuracy was 64.4%, obtained with k-nearest neighbors (KNN) implemented outside the RCE framework. ELM, extreme learning machine; LDA, linear discriminant analysis; QDA, quadratic discriminant analysis; SVM, support vector machine; FC-NN, fully connected neural network; MLP-NN, multilayer perceptron neural network; SLR, sparse logistic regression; RLR, regularized logistic regression; RVM, relevance vector machine
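
The two benchmark values quoted in the caption (58.5% unbalanced, 50% balanced) follow directly from the definitions. The short Python sketch below illustrates this; it is not the authors' code. The function names and the test-set size of 200 subjects (117 controls, 83 subjects with autism) are hypothetical, chosen only so that controls make up 58.5% of the labels. The caption does not state the actual group sizes.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Unbalanced accuracy: fraction of all subjects labeled correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Balanced accuracy: mean of the individual class accuracies."""
    per_class = []
    for c in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == c]
        per_class.append(sum(y_pred[i] == c for i in idx) / len(idx))
    return sum(per_class) / len(per_class)


def majority_baseline(y_true):
    """Predict the most frequent class for every subject."""
    majority = Counter(y_true).most_common(1)[0][0]
    return [majority] * len(y_true)


# Hypothetical hold-out set: 58.5% controls (sizes assumed for illustration).
y_true = ["control"] * 117 + ["asd"] * 83
y_base = majority_baseline(y_true)

print(accuracy(y_true, y_base))           # 0.585
print(balanced_accuracy(y_true, y_base))  # 0.5
```

The majority classifier gets every control right and every subject with autism wrong, so its class accuracies are 100% and 0%, which average to 50% regardless of the class proportions.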