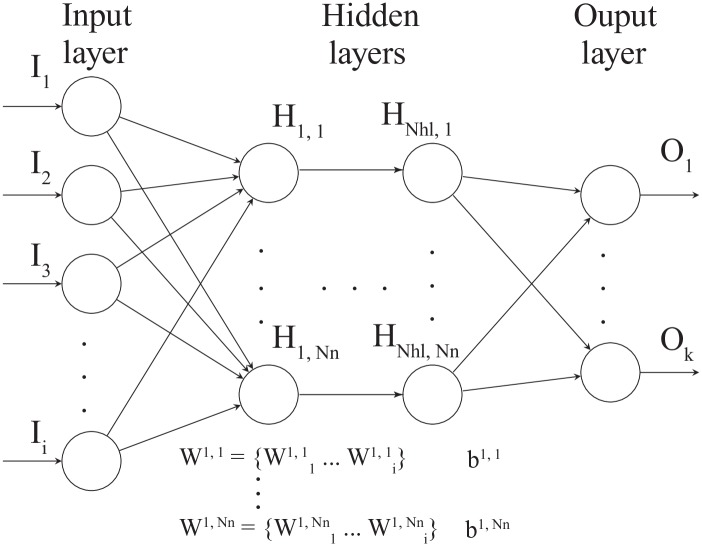

Fig 1. General neural network architecture used in this study [33, 43, 44].

The input layer contains input nodes 1, …, i, and the output layer is composed of output nodes 1, …, k. $N_{hl}$ refers to the number of hidden layers, and each hidden layer is composed of $N_n$ neurons. Each neuron (e.g., $H_{1,1}$, …) is connected to the nodes of the previous layer through adjustable weights and also has its own adjustable bias.
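The layered structure described above can be sketched as a plain-Python forward pass. Several details are assumptions, not taken from this study: the activation functions are not stated here, so tanh for the hidden neurons and a linear output layer are used for illustration, and the weights and biases are initialized uniformly at random. The names `build_network` and `forward` are hypothetical.

```python
import math
import random
from typing import List, Tuple

# A layer is (weights, biases): one weight row per neuron, one bias per neuron.
Layer = Tuple[List[List[float]], List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    # Assumption: uniform random initialization in [-1, 1].
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_out)]
    return weights, biases


def build_network(i: int, k: int, n_hl: int, n_n: int, seed: int = 0) -> List[Layer]:
    # i inputs -> n_hl hidden layers of n_n neurons each -> k outputs.
    rng = random.Random(seed)
    sizes = [i] + [n_n] * n_hl + [k]
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def forward(network: List[Layer], x: List[float]) -> List[float]:
    a = list(x)
    last = len(network) - 1
    for idx, (weights, biases) in enumerate(network):
        # Each neuron: weighted sum of the previous layer's outputs plus its bias.
        z = [sum(w * v for w, v in zip(row, a)) + b for row, b in zip(weights, biases)]
        # Assumption: tanh in hidden layers, identity (linear) at the output layer.
        a = z if idx == last else [math.tanh(v) for v in z]
    return a


net = build_network(i=4, k=2, n_hl=3, n_n=8)
print(forward(net, [0.1, 0.2, 0.3, 0.4]))  # two output values
```

With $N_{hl} \ge 1$, such a fully connected network has $(i+1)N_n + (N_{hl}-1)(N_n+1)N_n + (N_n+1)k$ adjustable parameters (weights plus biases). For the example above ($i=4$, $k=2$, $N_{hl}=3$, $N_n=8$) this gives $40 + 144 + 18 = 202$.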