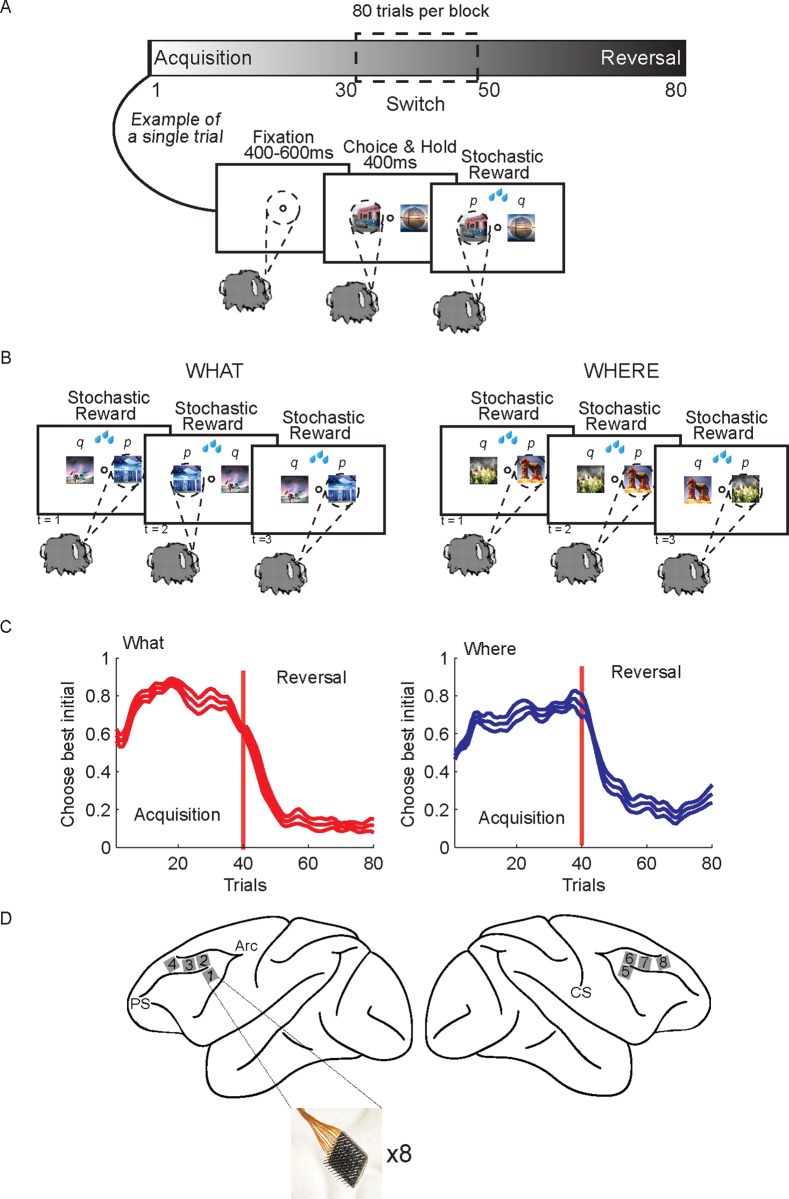

Fig 1. Two-armed bandit reinforcement learning task, behavior and recording locations.

A. The task was carried out in blocks of 80 trials. At the beginning of each block, two new images that the animal had not seen before were introduced. On each trial the animal fixated, and then the two images were presented, randomly assigned to the left and right of fixation. The monkey made a saccade to one image to indicate its choice and was then stochastically rewarded. B. There were two conditions. In the What condition, one image was more frequently rewarded (p = 0.7) and the other less frequently rewarded (p = 0.3), independent of the side on which it appeared. In the Where condition, one saccade direction was more frequently rewarded (p = 0.7) and the other less frequently rewarded (p = 0.3), independent of which image was at the chosen location. The condition remained fixed for the entire block. However, on a randomly chosen trial between trials 30 and 50, the reward mapping was reversed: the previously less frequently rewarded image or location became the more frequently rewarded one, and vice versa. C. Choice behavior across sessions. Animals quickly learned to choose the more frequently rewarded image (left panel) or direction (right panel), and reversed their preferences when the choice-outcome mapping reversed. Because the number of trials in the acquisition and reversal phases differed across blocks, the trials in each block were interpolated so that each phase had the same length in every block before averaging. The choice data were also smoothed using Gaussian kernel regression (kernel width sd = 1 trial). Thin lines indicate s.e.m. across sessions (n = 6 per condition). D. Schematic showing the locations of the recording arrays, 4 in each hemisphere. Array locations were highly similar across animals.
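The block structure in panel B and the alignment and smoothing described in panel C can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' analysis code. The function names, the 0/1 encoding of images and sides (option 0 is taken to be the better option before the reversal), treating the drawn reversal trial as the first trial with the reversed mapping, the Nadaraya-Watson form of the Gaussian kernel regression, applying the smoothing before the interpolation, and the 40-point phase lengths are all hypothetical choices.

```python
import numpy as np

P_HIGH, P_LOW = 0.7, 0.3   # reward probabilities from panel B
N_TRIALS = 80              # trials per block

def draw_reversal_trial(rng):
    # Assumption: reversal trial drawn uniformly from 30..50 inclusive (1-based).
    return int(rng.integers(30, 51))

def reward_probability(condition, trial, reversal_trial, chosen_image, chosen_side):
    """Reward probability of one choice (hypothetical 0/1 encoding).

    Option 0 is assumed better before reversal_trial; the mapping flips from
    reversal_trial onward. "what" scores the chosen image, "where" the side.
    """
    better = 0 if trial < reversal_trial else 1
    chosen = chosen_image if condition == "what" else chosen_side
    return P_HIGH if chosen == better else P_LOW

def gaussian_kernel_regression(x, y, x_eval, sd=1.0):
    # Nadaraya-Watson estimate: Gaussian-weighted average of y at each x_eval.
    w = np.exp(-0.5 * ((x_eval[:, None] - x[None, :]) / sd) ** 2)
    return (w @ y) / w.sum(axis=1)

def align_block(choices, reversal_trial, n_acq=40, n_rev=40, sd=1.0):
    """Smooth one block's choices, then stretch each phase to a fixed length.

    choices: length-80 array, 1 if the initially better option was chosen.
    n_acq, n_rev: hypothetical common phase lengths after interpolation.
    """
    trials = np.arange(1, len(choices) + 1, dtype=float)
    smooth = gaussian_kernel_regression(trials, choices.astype(float), trials, sd)
    split = reversal_trial - 1  # index of the first reversed trial
    phases = []
    for seg, n in ((smooth[:split], n_acq), (smooth[split:], n_rev)):
        xp = np.arange(len(seg))
        # Linear interpolation onto n evenly spaced points spanning the phase.
        phases.append(np.interp(np.linspace(0, len(seg) - 1, n), xp, seg))
    return np.concatenate(phases)

# Usage with a toy chooser (not a model of the animals' behavior): it picks
# the currently better option on 80% of trials.
rng = np.random.default_rng(0)
blocks = []
for _ in range(6):
    rev = draw_reversal_trial(rng)
    t = np.arange(1, N_TRIALS + 1)
    p_choose_initial_best = np.where(t < rev, 0.8, 0.2)
    choices = (rng.random(N_TRIALS) < p_choose_initial_best).astype(int)
    blocks.append(align_block(choices, rev))
mean_curve = np.mean(blocks, axis=0)  # phases now have equal length in every block
```

Because every block is stretched to the same number of points per phase, the reversal falls at the same index in each aligned curve, which is what makes averaging across blocks with different reversal trials meaningful.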