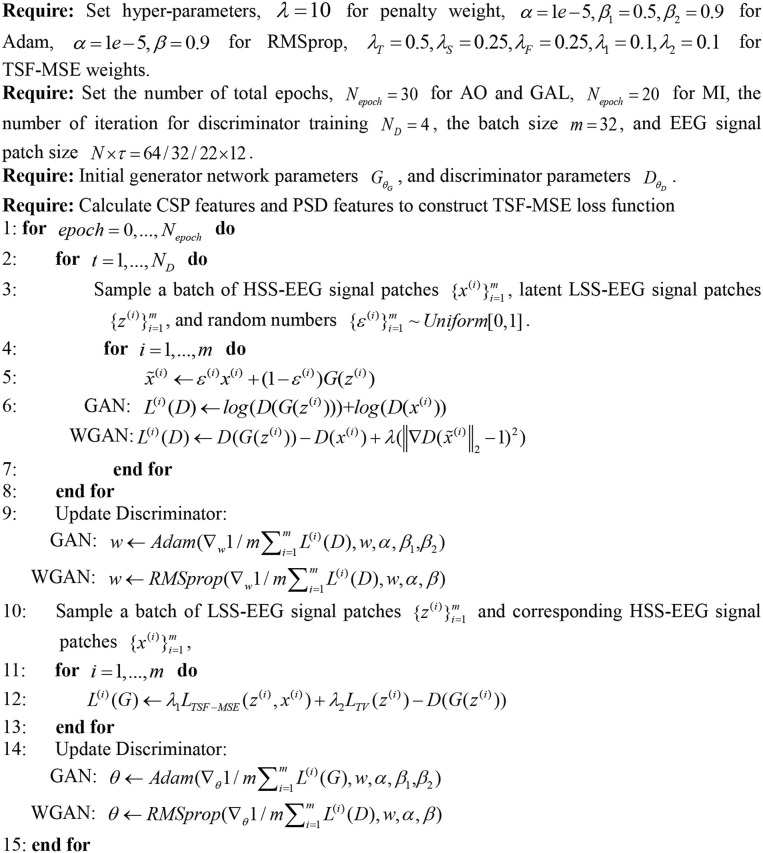

Figure 3.

Optimization procedure for the GAN/WGAN. Following the GAN/WGAN frameworks, the Adam optimizer's hyperparameters are set to α = 1e−5, β1 = 0.5, β2 = 0.9, and the RMSprop optimizer's hyperparameters are set to α = 1e−5, β = 0.9. The gradient-penalty coefficient is set to λ = 10, as suggested in the reference. The hyperparameters of the TSF-MSE loss function and the joint reconstruction are set to λT = 0.5, λS = 0.25, λF = 0.25, λ1 = 0.1, λ2 = 0.1, chosen empirically from our experiments. The optimization processes for the GAN and the WGAN are similar; they differ only in the optimizer and the loss functions used.
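The hyperparameters listed in the caption can be gathered into a single configuration object. The following is a minimal sketch, not the authors' implementation: the class and field names (`TrainConfig`, `adam_lr`, and so on) and both helper functions are hypothetical. It assumes that the TSF-MSE loss combines its three terms as a weighted sum and that the gradient penalty takes the usual WGAN-GP form λ(‖∇‖ − 1)²; neither form is stated in the caption itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values are copied from the caption; the field names are illustrative.
    adam_lr: float = 1e-5       # alpha for Adam
    adam_beta1: float = 0.5     # beta1 for Adam
    adam_beta2: float = 0.9     # beta2 for Adam
    rmsprop_lr: float = 1e-5    # alpha for RMSprop
    rmsprop_beta: float = 0.9   # beta for RMSprop
    gp_lambda: float = 10.0     # gradient-penalty coefficient
    lambda_t: float = 0.5       # TSF-MSE weight lambda_T
    lambda_s: float = 0.25      # TSF-MSE weight lambda_S
    lambda_f: float = 0.25      # TSF-MSE weight lambda_F
    lambda_1: float = 0.1       # joint-reconstruction weight lambda_1
    lambda_2: float = 0.1       # joint-reconstruction weight lambda_2


def tsf_weighted_sum(cfg, loss_t, loss_s, loss_f):
    """Assumed form: weighted sum of three precomputed loss terms."""
    return cfg.lambda_t * loss_t + cfg.lambda_s * loss_s + cfg.lambda_f * loss_f


def gradient_penalty(cfg, grad_norm):
    """Assumed WGAN-GP form for one sample: lambda * (||grad|| - 1)^2."""
    return cfg.gp_lambda * (grad_norm - 1.0) ** 2


cfg = TrainConfig()
```

Under these assumptions, the three TSF-MSE weights sum to one, so the combined term stays on the same scale as its individual components, and the penalty vanishes when the critic's gradient norm equals 1.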