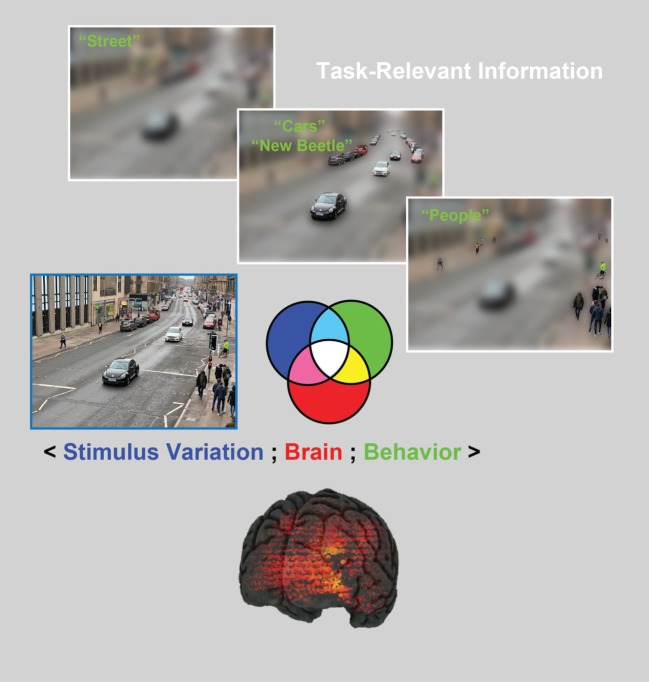

Figure 1.

The human brain typically performs multiple categorization tasks on a single image, each using different task-relevant stimulus information. For example, the brain uses coarse, global scene information to categorize this image as ‘street’. By contrast, local details (i.e. other task-relevant information), represented at finer image resolution, support other categorization tasks, such as ‘cars and their make/model’ and ‘people’. As we will illustrate, in the stimulus information representation (SIR) framework, samples of the stimulus are randomly generated and shown to participants to categorize (blue set). This random sampling produces variations in the participant's categorization behaviour (green set, e.g. ‘people’). Concurrently, participants' brain activity is recorded with neuroimaging techniques such as EEG and MEG (red set) while they perform the task. The three-way interaction among the SIR components (〈stimulus variation; brain; behaviour〉), represented by the colour-coded set intersection, enables us to better understand where, when and how task-relevant information is processed in the brain.