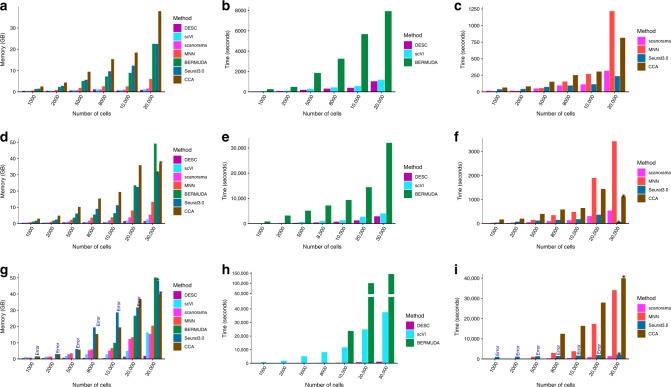

Fig. 9. Comparison of memory usage (first column) and running time (second and third columns).

a–c The number of batches for the analyzed samples is 2; the analyzed data were from Kang et al.18. d–f The number of batches for the analyzed samples is 4. g–i The number of batches for the analyzed samples is 30. For d–i, the analyzed data were from Peng et al.10, in which there are four batches when macaque id is taken as the batch definition and 30 batches when sample is taken as the batch definition. Because DESC, scVI, and BERMUDA are deep-learning-based methods, we put them together for ease of comparison. Remark: the running time of DESC is smaller for batch = 30 than for batch = 4 because, when the data were standardized by sample id (i.e., when batch = 30), the algorithm converged quickly, before reaching the maximum number of epochs (300). The “Error” label in the bar plots in g and i indicates that Seurat 3.0 produced an error, because the numbers of cells in some batches are very small. The asterisk above the bars in d, f, g, and i indicates that the corresponding method failed due to a memory issue (i.e., cannot allocate memory); therefore, the recorded time is the computing time until the method failed. When the number of batches is 30, BERMUDA always throws an error when the number of cells is less than 8000, so we only report BERMUDA when the number of cells is ≥ 10,000. In addition, the reported running time and memory usage include only the clustering procedure and not the computation of t-SNE or UMAP. All time and memory usage reported in this figure were measured on our workstation running Ubuntu 18.04.1 LTS with an Intel® Core(TM) i7-8700K CPU @ 3.70 GHz and 64 GB of memory.