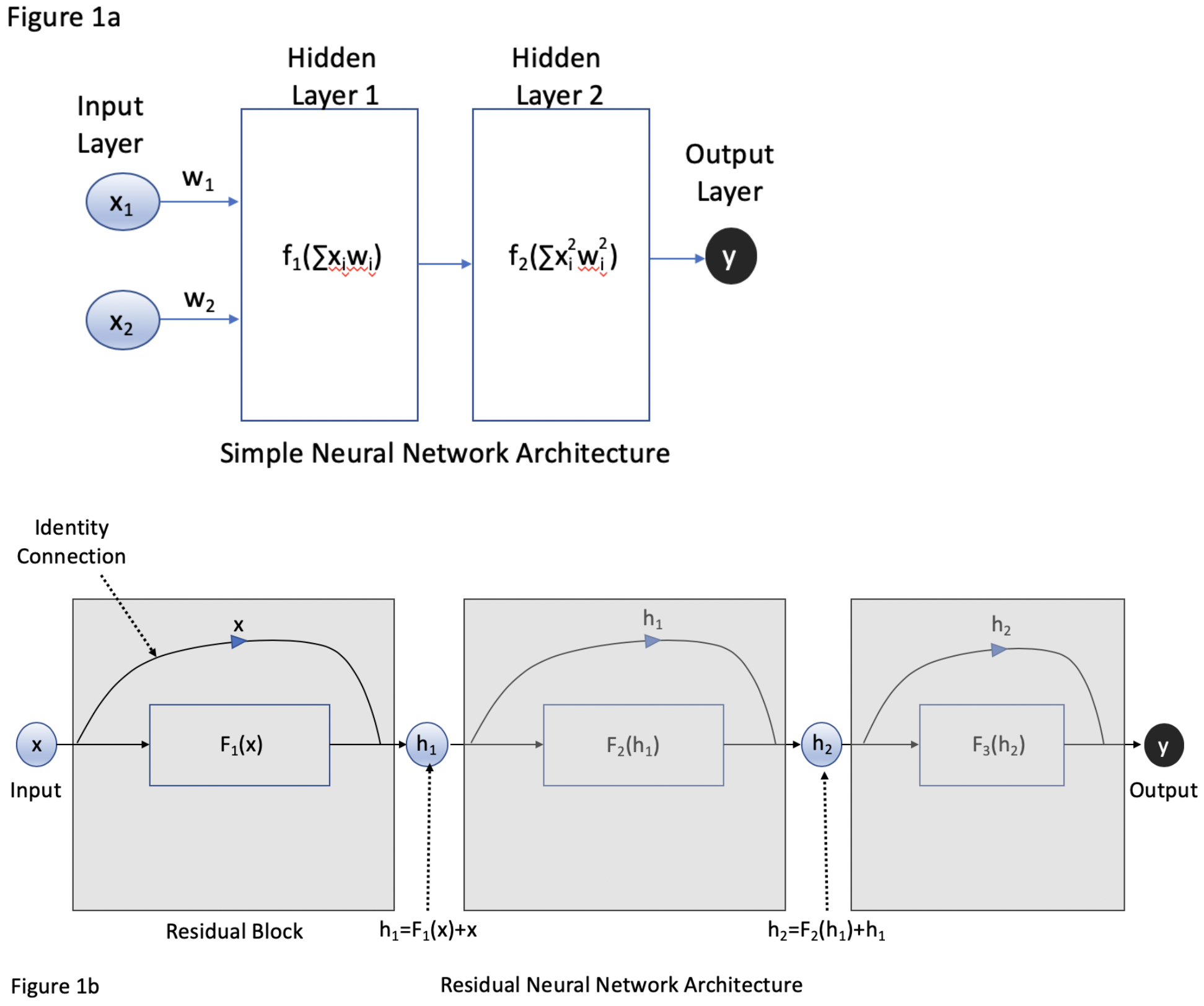

Figure 1.

A) Traditional neural network architecture, where x represents an input and w represents the weight assigned to that input within the network. Each layer contains neurons whose activation function takes the sum of the products x_i·w_i and determines whether or not the neuron will “fire” and pass information onward. Each layer passes its output sequentially to the next layer until a final output is produced. With each successive layer, information can be lost as it is processed and re-weighted by the network. B) A residual neural network is composed of residual blocks (grey boxes), in which an input x is processed by a layer with a function F to generate a hidden output h. An identity connection allows the input of that block to bypass F and be carried forward unchanged. As a result, weak connections through F have less impact on the information passed down to subsequent blocks, with the goal of generating an output y.
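The difference between the two panels can be illustrated with a minimal sketch in plain Python. It is not taken from any particular implementation: the function names `dense_layer` and `residual_block`, the choice of `tanh` as the activation, and the assumption that the identity connection is combined with F(x) by simple addition are all illustrative. The example uses all-zero weights as an extreme case of "weak connections" through F.

```python
import math

def dense_layer(x, weights):
    """One traditional layer: each neuron sums x_i * w_i and applies an activation.

    tanh is an assumed activation, chosen only for illustration.
    """
    return [
        math.tanh(sum(xi * wi for xi, wi in zip(x, w_row)))
        for w_row in weights
    ]

def residual_block(x, weights):
    """Residual block: compute the hidden output h = F(x), then add x back.

    Adding x is the identity connection that lets the input bypass F
    (assumed here to be element-wise addition).
    """
    h = dense_layer(x, weights)
    return [hi + xi for hi, xi in zip(h, x)]

x = [0.5, -1.0, 2.0]
weak_w = [[0.0] * 3 for _ in range(3)]  # extreme case: F contributes nothing

plain_out = dense_layer(x, weak_w)      # the input information is lost
resid_out = residual_block(x, weak_w)   # the input is carried forward intact

print(plain_out)  # [0.0, 0.0, 0.0]
print(resid_out)  # [0.5, -1.0, 2.0]
```

With zero weights the traditional layer discards the input entirely, whereas the residual block still passes x on to the next block, which is the behavior panel B is meant to convey.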