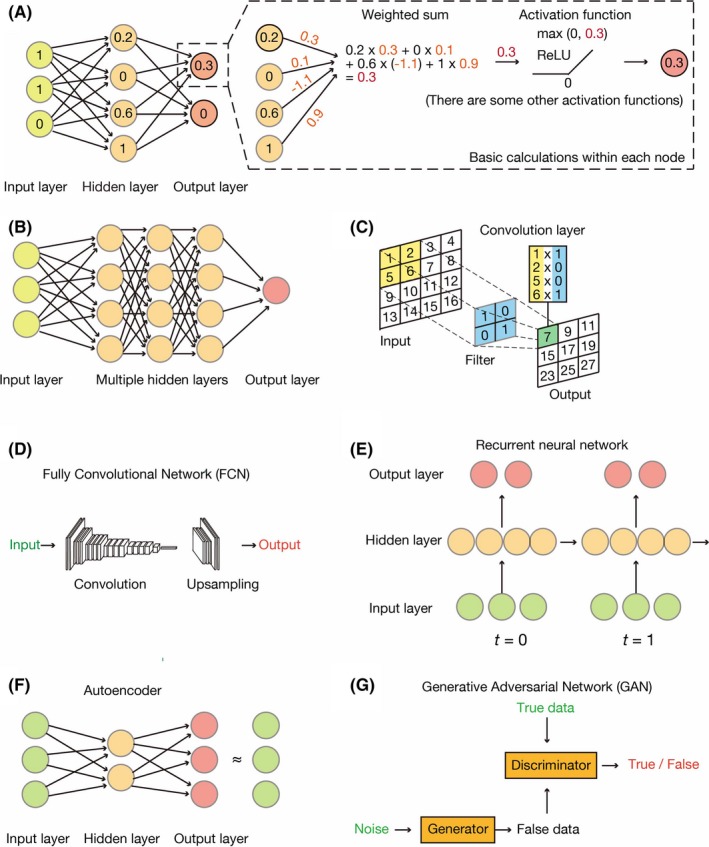

Figure 2.

Common architectures of neural networks. A, The simplest neural network comprises three layers: an input layer, a hidden layer and an output layer. Each node holds a value and transmits its signal to the next layer: a node first sums all of its weighted inputs and then passes the result to an activation function (here, a rectified linear unit, or ReLU). B, A typical deep neural network, here a dense (fully connected) neural network, has multiple hidden layers, and the nodes in each layer compute their values in the same manner as in (A). C, A convolutional neural network (CNN) applies multiple convolution layers before feeding the data into a dense neural network. The convolution layers apply filters (or kernels) to grid-based data. D, A fully convolutional network (FCN) is a variant of a CNN that lacks densely connected layers. E, A recurrent neural network (RNN) is a network designed for time-series and other sequential data. At each time step, the hidden layer holds a set of variables (the hidden state) and passes them on to the next time step. F, An autoencoder resembles a dense neural network but is trained to reproduce its inputs at its outputs. Such networks offer a means to encode and decode data, with the encoded features stored in the hidden layer. G, A generative adversarial network (GAN) consists of two independent neural networks: a generator and a discriminator. The generator attempts to create new (fake) data that resemble the real data, whereas the discriminator attempts to distinguish real data from the artificial data created by the generator. Training these two networks alternately helps them capture the complex rules underlying the data.
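To make the computations in panels A, B, C and E concrete, the following is a minimal, dependency-free Python sketch. It is an illustration under stated assumptions, not code from the studies discussed in this review. The function names (`dense_layer`, `dense_network`, `conv2d_valid`, `rnn_forward`) and the toy weights are hypothetical. Each node also adds a bias term to its weighted sum, which is conventional but is not shown in the figure. The RNN uses a single scalar hidden state with ReLU for simplicity; tanh is also a common choice.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(x: float) -> float:
    """Rectified linear unit: passes positive values, zeroes out negative ones."""
    return max(0.0, x)


def dense_layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer (panel A). Each output node sums its
    weighted inputs, adds a bias (an assumption, not shown in the figure),
    and applies ReLU."""
    return [
        relu(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def dense_network(inputs: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Stack of dense layers (panel B): each layer's output feeds the next."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide a kernel over grid data (panel C) with stride 1 and no padding.
    As in most deep-learning libraries, this is cross-correlation: the
    kernel is not flipped."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def rnn_forward(sequence: Vector, w_in: float, w_rec: float) -> Vector:
    """Scalar RNN (panel E): the hidden state h carries information from one
    time step to the next, h_t = ReLU(w_in * x_t + w_rec * h_{t-1})."""
    h = 0.0
    states = []
    for x in sequence:
        h = relu(w_in * x + w_rec * h)
        states.append(h)
    return states


if __name__ == "__main__":
    # Panel A/B: 3 inputs -> hidden layer of 2 nodes -> 1 output node.
    toy_layers = [
        ([[0.5, -1.0, 0.25], [1.0, 1.0, 1.0]], [0.0, -1.0]),
        ([[1.0, 2.0]], [0.0]),
    ]
    print(dense_network([1.0, 2.0, 4.0], toy_layers))  # [12.0]
    # Panel C: a 2x2 summing kernel over a 3x3 grid.
    print(conv2d_valid([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 1], [1, 1]]))
    # Panel E: a single input pulse decays through the recurrent connection.
    print(rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5))  # [1.0, 0.5, 0.25]
```

Plain nested lists are used so that each weighted sum, kernel position and time step is visible; practical implementations would use array or tensor libraries such as NumPy or PyTorch, which vectorize these loops.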