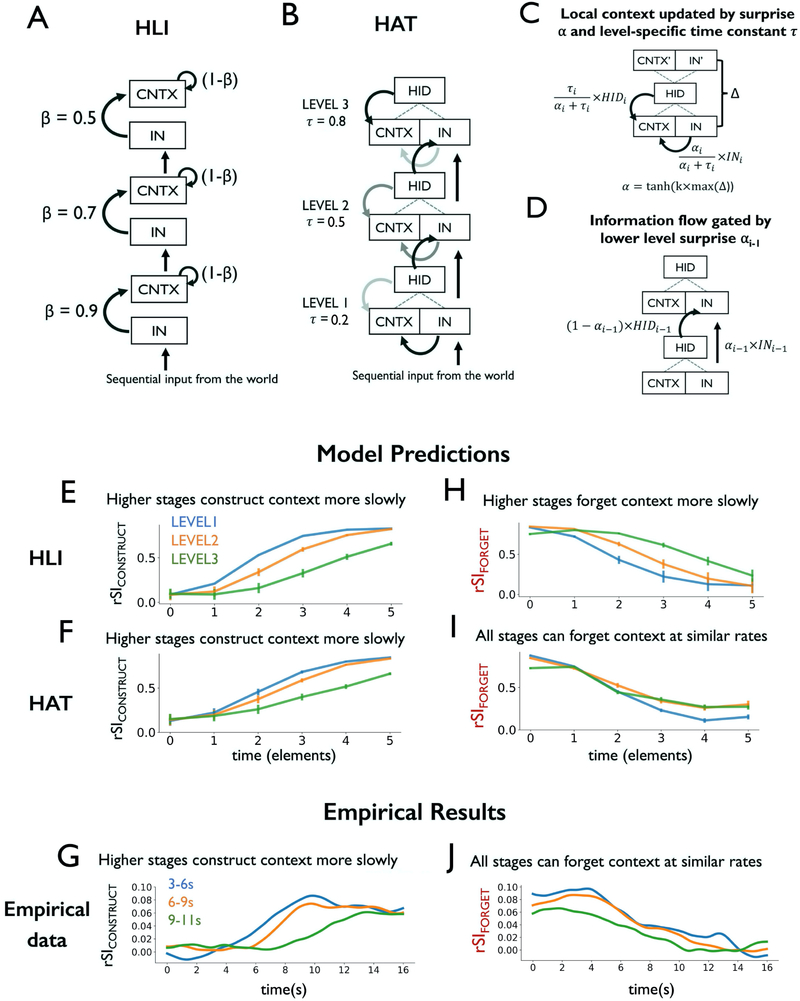

Figure 5. Modeling context construction and context forgetting.

(A) HLI model schematic: the new state of each unit is a linear weighted sum of its old state and its new input. (B) HAT model schematic: each region maintains a representation of temporal context, which is combined with new input to form a simplified joint representation. (C) An AT unit, in which local context CNTX is updated via hidden representation HID and current input IN, modulated by time constant τ and “surprise” α. α is computed via auto-associative error Δ and a scaling parameter k. (D) In HAT, the input to level i is gated by surprise α from level (i-1). (E) HLI simulation of rSIDE:CE predicts longer alignment time at higher stages of processing. (F) HAT simulation of rSIDE:CE predicts longer alignment time at higher stages of processing. (G) Empirical rSIDE:CE results grouped by alignment time, consistent with predictions of both HLI and HAT. (H) HLI simulation of rSICD:CE predicts that regions that construct context slowly will also forget context slowly. (I) HAT simulations predict that the timescale of context separation (rSICD:CE) need not be slower in levels of the model with longer alignment times (rSIDE:CE). (J) Empirical rSICD:CE results grouped by alignment time. HLI = hierarchical linear integrator, HAT = hierarchical autoencoders in time, AT = autoencoder in time, rSI = intact-scramble ISPC.
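The linear-integrator update described in (A), and the prediction in (E) that higher stages take longer to align, can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the source: the weights `rho` and `1 - rho`, the feed-forward wiring in which each level receives the state of the level below, the function names, and the use of Euclidean distance with a fixed tolerance in place of the rSI correlation measure.

```python
import numpy as np

def hli_step(states, x, rhos):
    """One time step of a stacked linear integrator (hypothetical parameterization)."""
    new_states = []
    inp = x
    for s, rho in zip(states, rhos):
        # New state = weighted sum of old state and new input (assumed weights).
        s_new = rho * s + (1.0 - rho) * inp
        new_states.append(s_new)
        inp = s_new  # assumption: each level feeds the next level up
    return new_states

def run_hli(inputs, rhos):
    """Run the stack over a (T, dim) input array; returns (T, levels, dim)."""
    states = [np.zeros(inputs.shape[1]) for _ in rhos]
    history = []
    for x in inputs:
        states = hli_step(states, x, rhos)
        history.append(np.stack(states))
    return np.array(history)

def alignment_times(rhos, prefix_len=50, shared_len=200, dim=20, tol=1e-2, seed=0):
    """Steps needed, per level, for two runs with different pasts to converge."""
    rng = np.random.default_rng(seed)
    shared = rng.standard_normal((shared_len, dim))
    # Two sequences: different preceding context, identical current input.
    seq_a = np.vstack([rng.standard_normal((prefix_len, dim)), shared])
    seq_b = np.vstack([rng.standard_normal((prefix_len, dim)), shared])
    h_a = run_hli(seq_a, rhos)
    h_b = run_hli(seq_b, rhos)
    # Distance between the two runs during the shared segment: (shared_len, levels).
    dist = np.linalg.norm(h_a[prefix_len:] - h_b[prefix_len:], axis=2)
    # First step at which each level's distance drops below the tolerance.
    return [int(np.argmax(dist[:, lvl] < tol)) for lvl in range(len(rhos))]

if __name__ == "__main__":
    # Larger rho = slower integration, standing in for higher processing stages.
    print(alignment_times([0.2, 0.6, 0.9]))
```

With `rho` increasing across levels, the levels with slower integration need more shared input before the two runs converge, which is the qualitative pattern the caption attributes to the HLI simulation.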