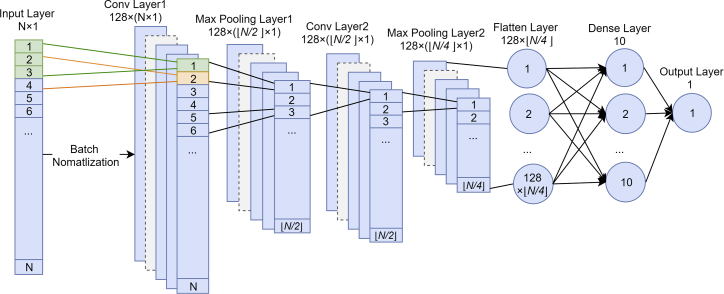

Figure 4. The architecture of our CNN-based classifier for short AMP prediction.

The model takes a feature vector of N elements as input. First, the input values are normalized over mini-batches of 64 samples (batch normalization); the normalized input is then transformed into convolutional features by two convolutional layers and two max-pooling layers. Each convolutional layer applies 128 kernels of size 3 × 1 with stride 1, and each max-pooling layer uses a 2 × 1 pooling window with stride 2. Dropout with a rate of 20% is applied at the max-pooling stage to reduce overfitting. Finally, the convolutional features are flattened and fed into a fully connected network with 10 hidden nodes and 1 output node. The rectified linear unit (ReLU) serves as the activation function in the convolutional layers and the hidden nodes, while the output node uses the sigmoid function.
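To make the layer dimensions concrete, the following minimal pure-Python sketch traces the tensor shape and trainable-parameter count through each stage for a given input length N. It is an illustration, not the authors' implementation, and it rests on assumptions the caption does not state: the layers alternate conv → pool → conv → pool, the input has a single channel, convolutions use no padding by default ("valid"), and dropout adds no parameters. The function names and the example value N = 100 are hypothetical.

```python
def conv_out_len(length, kernel=3, stride=1, padding=0):
    """Output length of a 1-D convolution (padding=0 is an assumption)."""
    return (length + 2 * padding - kernel) // stride + 1


def pool_out_len(length, kernel=2, stride=2):
    """Output length of 1-D max pooling with a 2 x 1 window, stride 2, no padding."""
    return (length - kernel) // stride + 1


def describe_architecture(n_features, filters=128, hidden=10, padding=0):
    """Return (layer_name, output_shape, trainable_params) for each stage.

    Assumes conv -> pool -> conv -> pool ordering and one input channel;
    neither is stated explicitly in the figure caption.
    """
    layers = []
    length, channels = n_features, 1
    # Batch normalization on one channel: trainable gamma and beta only.
    layers.append(("batch_norm", (length, channels), 2 * channels))
    for i in range(2):
        # 128 kernels of size 3 x 1 over all input channels, plus one bias each.
        params = filters * (3 * channels) + filters
        length, channels = conv_out_len(length, padding=padding), filters
        layers.append((f"conv{i + 1}", (length, channels), params))
        length = pool_out_len(length)
        # Dropout (rate 0.2) changes neither the shape nor the parameter count.
        layers.append((f"maxpool{i + 1}+dropout", (length, channels), 0))
    flat = length * channels
    layers.append(("flatten", (flat,), 0))
    # Fully connected ReLU layer with 10 hidden nodes (weights + biases).
    layers.append(("dense_relu", (hidden,), flat * hidden + hidden))
    # Single sigmoid output node.
    layers.append(("output_sigmoid", (1,), hidden + 1))
    return layers


if __name__ == "__main__":
    for name, shape, params in describe_architecture(100):
        print(f"{name:20s} shape={shape} params={params}")
```

Under these assumptions, with N = 100 the flattened vector has 23 × 128 = 2,944 elements, and the second convolutional layer (49,280 parameters) together with the hidden layer (29,450) accounts for almost all of the 79,255 trainable parameters. Passing `padding=1` models "same" padding instead, which keeps each convolution's output length equal to its input length.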