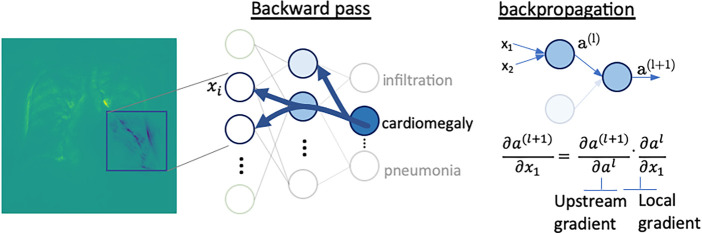

Figure 2c:

Gradient-based saliency maps for image classification. (a) Basic concepts of neuron activation. A neuron is activated by applying an activation function, g, to a weighted combination of its inputs. (b) Gradient-based methods rely on a forward and a backward pass. Given an input image x, the forward pass through all layers of the network yields the class k with the maximal activation. All positive forward activations are recorded for later use during the backward pass. To visualize the contribution of each pixel in the image to class k, all output activations are set to zero except for that of the studied class k, and then (c) backpropagation uses the chain rule to compute gradients from the output back to the input of the network. ReLU = rectified linear unit, tanh = hyperbolic tangent.
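The forward and backward passes described in this caption can be sketched in a few lines of NumPy. This is a minimal illustration, not the network shown in the figure: it assumes a hypothetical two-layer fully connected classifier with a ReLU hidden layer, random weights, and a flattened 4x4 "image". The names `forward`, `saliency`, `W1`, `b1`, `W2`, and `b2` are introduced here for illustration only.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def forward(x, W1, b1, W2, b2):
    """Forward pass: return class scores and the recorded hidden pre-activation."""
    z1 = W1 @ x + b1        # weighted combination of inputs
    a1 = relu(z1)           # activation function g (ReLU assumed here)
    scores = W2 @ a1 + b2   # one score per class
    return scores, z1

def saliency(x, k, W1, b1, W2, b2):
    """Gradient of the class-k score with respect to the input x."""
    scores, z1 = forward(x, W1, b1, W2, b2)
    # Zero every output except the studied class k (one-hot signal).
    grad_scores = np.zeros_like(scores)
    grad_scores[k] = 1.0
    # Chain rule, applied from the output back to the input.
    grad_a1 = W2.T @ grad_scores
    # ReLU passes gradient only where the forward activation was positive.
    grad_z1 = grad_a1 * (z1 > 0)
    return W1.T @ grad_z1

# Toy setup: random weights stand in for a trained model (assumption).
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(8, 16)), rng.normal(size=8)
W2, b2 = rng.normal(size=(3, 8)), rng.normal(size=3)
x = rng.normal(size=16)  # flattened 4x4 "image"

# Saliency map: absolute gradient per pixel, reshaped to the image grid.
sal = np.abs(saliency(x, 1, W1, b1, W2, b2)).reshape(4, 4)
```

Taking the absolute value of the gradient is one common way to turn it into a map; the caption itself does not specify how the gradients are rendered.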