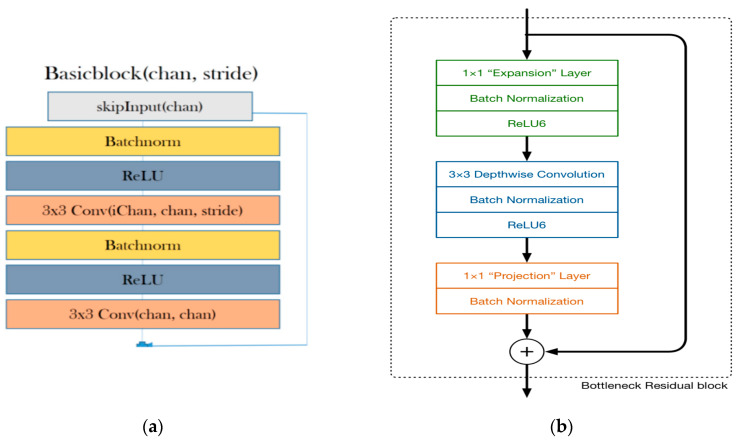

Figure 4.

(a) ResNet architecture [21]. ReLU (rectified linear unit) is an activation function for the neural network that outputs its input when the input is positive and zero otherwise. Batchnorm, or batch normalization, is a technique that standardizes the inputs to a layer, whether they come from a preceding activation function or from a prior node. Stride is the number of pixels by which the filter shifts over the input matrix at each step. For instance, with a stride of 1 the filter moves 1 pixel at a time, and with a stride of 2 it moves 2 pixels at a time. (b) MobileNetV2 architecture [26].
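
The three terms defined in the caption can be illustrated with a minimal pure-Python sketch. It is not taken from the ResNet or MobileNetV2 implementations: the function names are hypothetical, the batch normalization omits the learnable scale and shift used in practice, and the stride example assumes a one-dimensional input with no padding.

```python
def relu(x):
    # Rectified linear unit: pass positive values through, clamp the rest to 0.
    return max(0.0, x)


def batch_norm(values, eps=1e-5):
    # Standardize a batch to zero mean and (approximately) unit variance.
    # Assumption: no learnable scale/shift parameters, unlike real layers.
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / (var + eps) ** 0.5 for v in values]


def window_starts(input_size, kernel_size, stride):
    # Positions at which a filter of width kernel_size is applied
    # along one axis of the input, assuming no padding.
    return list(range(0, input_size - kernel_size + 1, stride))


print(relu(-2.0), relu(3.0))
print(batch_norm([1.0, 2.0, 3.0]))
print(window_starts(7, 3, 1))  # filter moves 1 pixel at a time
print(window_starts(7, 3, 2))  # filter moves 2 pixels at a time
```

For a 7-pixel input and a 3-pixel filter, a stride of 1 gives five filter positions and a stride of 2 gives three, so a larger stride yields a smaller output.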