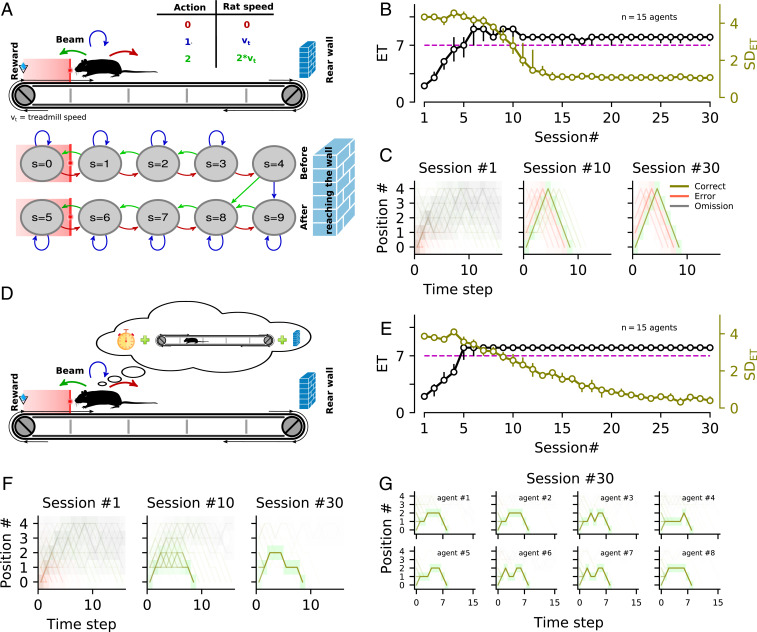

Fig. 6.

Performance comparison between artificial agents with or without time knowledge. (A) Schematic representation of the action (Top)–state (Bottom) space and transitions used to model the treadmill task for agents that did not use time information (see SI Appendix, Methods for details). (B) Average learning profile for several agents with different learning parameters. Median for the first 30 sessions. The dashed magenta line shows the GT. (C) Trajectories of three sessions at different stages of learning. Each session contained 100 trials. Across training sessions, the artificial agents (simulated rats) waited progressively longer and reduced their ET variability by performing the front–back–front trajectory. (D) Same as in A, but agents now have access to the time elapsed since trial onset. (E and F) Same as B and C, respectively, but for agents following the model sketched in D. Agents with temporal knowledge also progressively learned to enter the reward area at the right time and reduced their variability. (G) Trajectories after training for eight different agents that had access to time information.