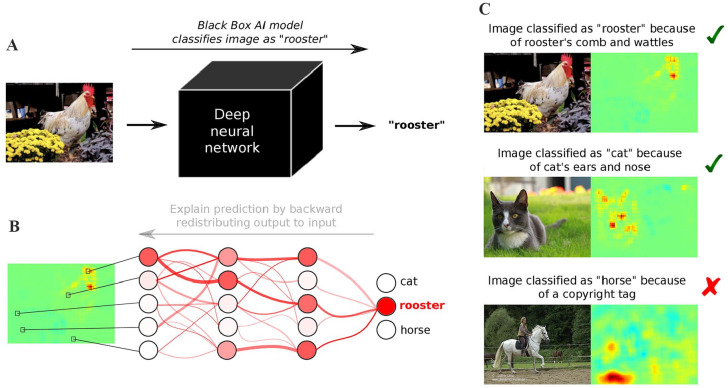

Figure 3.

More transparency through explainable AI (XAI). (A) Today’s AI models are often considered black boxes, because they take an input (e.g., an image) and provide a prediction (e.g., “rooster”) without indicating how or why they arrived at it. (B) Recent XAI methods (Samek et al. 2019) redistribute the output back to input space and explain the prediction in terms of a “heatmap” that visualizes which input variables (e.g., pixels) were decisive for the prediction. (C) This makes it possible to distinguish meaningful and safe prediction strategies (for example, classifying rooster images by detecting the rooster’s comb and wattles, or classifying cat images by focusing on the cat’s ears and nose) from so-called Clever Hans predictors (Lapuschkin et al. 2019), which, for example, classify horse images based on the presence of a copyright tag.
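The idea of redistributing an output back to the input can be illustrated with a minimal sketch. It is not the method of Samek et al. (2019); it assumes the simplest possible case, a linear scorer over a 3×3 toy "image", where the contribution of each pixel is its weight times its value and the contributions sum exactly to the output. All names (`score`, `heatmap`, `object_weights`, `tag_weights`) and all numbers are hypothetical and chosen only for illustration.

```python
# Toy illustration only: a linear scorer f(x) = sum_i w_i * x_i over a 3x3 "image".
# For a linear model, pixel i contributes w_i * x_i to the score, so the
# per-pixel contributions (the "heatmap") sum exactly to the output.

def score(weights, image):
    """Output of the linear scorer."""
    return sum(w * x
               for w_row, x_row in zip(weights, image)
               for w, x in zip(w_row, x_row))

def heatmap(weights, image):
    """Per-pixel relevance: the output redistributed onto the input pixels."""
    return [[w * x for w, x in zip(w_row, x_row)]
            for w_row, x_row in zip(weights, image)]

def most_relevant_pixel(relevance):
    """(row, column) of the pixel with the largest relevance."""
    positions = [(r, c)
                 for r in range(len(relevance))
                 for c in range(len(relevance[0]))]
    return max(positions, key=lambda rc: relevance[rc[0]][rc[1]])

# Hypothetical input: the object occupies the upper cross of pixels;
# the bottom-right pixel plays the role of a copyright tag.
image = [
    [0.0, 1.0, 0.0],
    [1.0, 1.0, 1.0],
    [0.0, 0.0, 1.0],
]

# Hypothetical model 1: relies on the object itself.
object_weights = [
    [0.0, 0.5, 0.0],
    [0.5, 1.0, 0.5],
    [0.0, 0.0, 0.0],
]

# Hypothetical model 2: relies mostly on the tag pixel (a Clever Hans strategy).
tag_weights = [
    [0.0, 0.0, 0.0],
    [0.0, 0.5, 0.0],
    [0.0, 0.0, 2.0],
]

for name, weights in [("object-based", object_weights), ("tag-based", tag_weights)]:
    relevance = heatmap(weights, image)
    total = sum(sum(row) for row in relevance)
    print(name, "score:", score(weights, image),
          "heatmap sum:", total,
          "most relevant pixel:", most_relevant_pixel(relevance))
```

Both toy models assign the same score to this image, so the output alone cannot tell them apart. Only the heatmaps reveal that one relies on the object and the other on the tag pixel. For deep networks the redistribution is not this simple and requires dedicated propagation rules.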