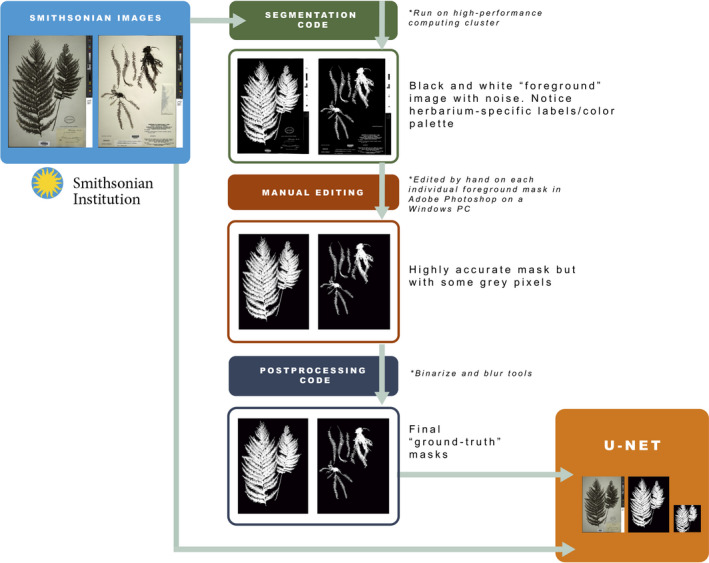

FIGURE 2.

Workflow outlining the automatic and manual steps in generating the image masks and training the U‐Net. High‐resolution JPEG (.jpg) files were exported from the Smithsonian Digital Asset Management System to the High‐Performance Computing Cluster, where we ran the segmentation Python code. Outputs from this step were edited in Adobe Photoshop to remove the label and color palette. The postprocessing code (binarize and blur tools) was then run on the edited outputs to produce the final ground‐truth masks. These ground‐truth masks were then used as training data for the U‐Net model.
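The caption does not state the threshold, the blur kernel, or the order in which the binarize and blur tools are applied. The following is a minimal, hypothetical sketch of that postprocessing step under stated assumptions: the Photoshop‐edited output is a grayscale array with values 0 to 255, the mask is binarized, smoothed with a simple box (mean) blur, and re‐thresholded so the ground‐truth mask stays binary. The function names `binarize`, `box_blur`, and `postprocess_mask`, the 0.5 threshold, and the 3×3 window are illustrative choices, not the authors' code.

```python
import numpy as np


def binarize(image: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Map values >= threshold to 1 and everything else to 0 (hypothetical helper)."""
    return (image >= threshold).astype(np.uint8)


def box_blur(mask: np.ndarray, radius: int = 1) -> np.ndarray:
    """Mean filter over a (2*radius+1) x (2*radius+1) window.

    Edges are handled by replicating border pixels. A box blur is an assumed
    stand-in for whatever blur tool the original workflow used.
    """
    k = 2 * radius + 1
    h, w = mask.shape
    padded = np.pad(mask.astype(float), radius, mode="edge")
    out = np.zeros((h, w), dtype=float)
    # Sum the k*k shifted copies of the image, then divide to get the mean.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def postprocess_mask(gray: np.ndarray, threshold: float = 0.5, radius: int = 1) -> np.ndarray:
    """Assumed order: binarize, blur to smooth jagged edges and speckle, re-binarize."""
    binary = binarize(gray.astype(float) / 255.0, threshold)
    smoothed = box_blur(binary, radius)
    return binarize(smoothed, threshold)


if __name__ == "__main__":
    # Toy 7x7 "segmentation output": a 3x3 specimen blob plus one stray pixel.
    gray = np.zeros((7, 7), dtype=np.uint8)
    gray[2:5, 2:5] = 255
    gray[0, 6] = 255
    print(postprocess_mask(gray))
```

With a 3×3 mean filter and a 0.5 threshold, isolated noise pixels are removed and sharp corners are rounded off, which is the kind of cleanup a blur step typically provides before a mask is used as U‐Net training data.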