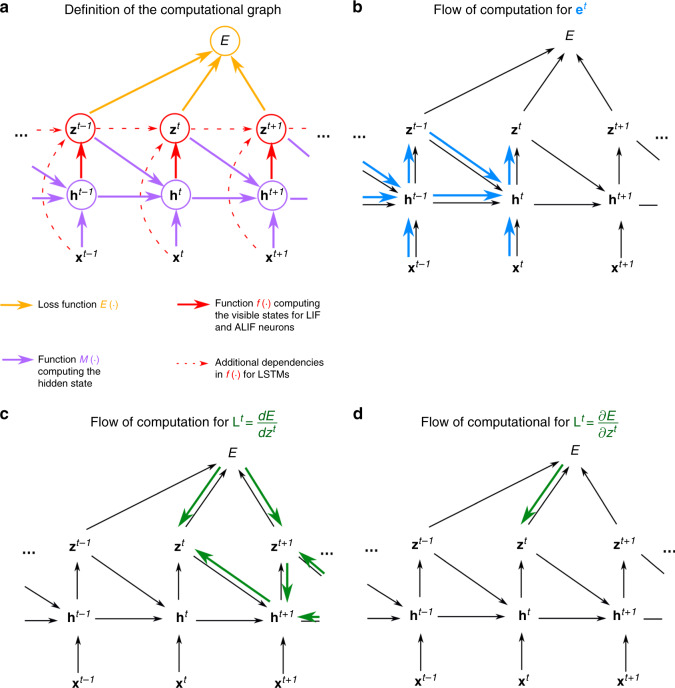

Fig. 6. Computational graph and gradient propagations.

a Colored arrows represent the assumed mathematical dependencies between hidden neuron states $h^t$, neuron outputs $z^t$, network inputs $x^t$, and the loss function $E$ through the functions $E(\cdot)$, $M(\cdot)$, and $f(\cdot)$. b–d The flow of computation for the two components $e^t$ and $L^t$ that merge into the loss gradients of Eq. (3) can be represented in similar graphs. b Following Eq. (14), the eligibility traces $e^t$ are computed forward in time. c In contrast, computing the ideal learning signals $L^t$ requires propagating gradients backward in time. d Hence, while $e^t$ is computed exactly, $L^t$ is approximated in e-prop applications to yield an online learning algorithm.
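To make panels b–d concrete, here is a minimal sketch built on a hypothetical single-neuron model that does not come from the source. It uses $h^t = \alpha h^{t-1} + v z^{t-1} + w x^t$ in the role of $M$, $z^t = \tanh(h^t)$ in the role of $f$, and a squared-error loss $E$. The function names, parameters (`w`, `alpha`, `v`), and data are illustrative assumptions. The sketch computes the eligibility traces $e^t$ forward in time and the exact learning signals $L^t = dE/dz^t$ backward in time, then checks that $\sum_t L^t e^t$ matches a finite-difference gradient. It also forms an online approximation that keeps only the immediate term $\partial E/\partial z^t$, which is assumed here as one simple choice of approximation.

```python
import math

# Hypothetical toy model (an assumption, not the paper's network):
#   h^t = alpha * h^{t-1} + v * z^{t-1} + w * x^t   (role of M)
#   z^t = tanh(h^t)                                 (role of f)
#   E   = sum_t 0.5 * (z^t - y^t)^2                 (loss)


def forward(w, alpha, v, xs):
    """Run the neuron forward in time; return hidden states and outputs."""
    h_prev, z_prev = 0.0, 0.0
    hs, zs = [], []
    for x in xs:
        h = alpha * h_prev + v * z_prev + w * x
        z = math.tanh(h)
        hs.append(h)
        zs.append(z)
        h_prev, z_prev = h, z
    return hs, zs


def loss(w, alpha, v, xs, ys):
    _, zs = forward(w, alpha, v, xs)
    return sum(0.5 * (z - y) ** 2 for z, y in zip(zs, ys))


def eligibility_traces(hs, xs, alpha):
    """Forward in time (panel b): eps^t = alpha*eps^{t-1} + x^t, e^t = f'(h^t)*eps^t."""
    eps, es = 0.0, []
    for h, x in zip(hs, xs):
        # partial dh^t/dh^{t-1} = alpha (z^{t-1} held fixed); partial dh^t/dw = x^t
        eps = alpha * eps + x
        es.append((1.0 - math.tanh(h) ** 2) * eps)
    return es


def exact_learning_signals(hs, zs, ys, alpha, v):
    """Backward in time (panel c): L^t = dE/dz^t, including effects on future steps."""
    T = len(zs)
    Ls = [0.0] * T
    dE_dh_next = 0.0  # dE/dh^{t+1}; zero after the last time step
    for t in reversed(range(T)):
        # direct loss term plus the effect of z^t on h^{t+1} through weight v
        Ls[t] = (zs[t] - ys[t]) + v * dE_dh_next
        # dE/dh^t = f'(h^t) * dE/dz^t + alpha * dE/dh^{t+1}
        dE_dh_next = (1.0 - math.tanh(hs[t]) ** 2) * Ls[t] + alpha * dE_dh_next
    return Ls


xs = [0.5, -1.0, 0.3, 0.8, -0.2]
ys = [0.1, -0.4, 0.2, 0.5, 0.0]
w, alpha, v = 0.7, 0.9, 0.5

hs, zs = forward(w, alpha, v, xs)
es = eligibility_traces(hs, xs, alpha)
Ls_exact = exact_learning_signals(hs, zs, ys, alpha, v)
Ls_online = [z - y for z, y in zip(zs, ys)]  # partial dE/dz^t only (panel d)

grad_exact = sum(L * e for L, e in zip(Ls_exact, es))   # exact factorization
grad_eprop = sum(L * e for L, e in zip(Ls_online, es))  # online approximation

delta = 1e-6
grad_fd = (loss(w + delta, alpha, v, xs, ys) - loss(w - delta, alpha, v, xs, ys)) / (2 * delta)
print(grad_exact, grad_fd, grad_eprop)
```

In this toy model, setting `v = 0` removes the feedback from $z^{t-1}$ into $h^t$, so the online signal coincides with the exact one. The gap between `grad_eprop` and `grad_exact` therefore comes entirely from the dependencies that run backward in time.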