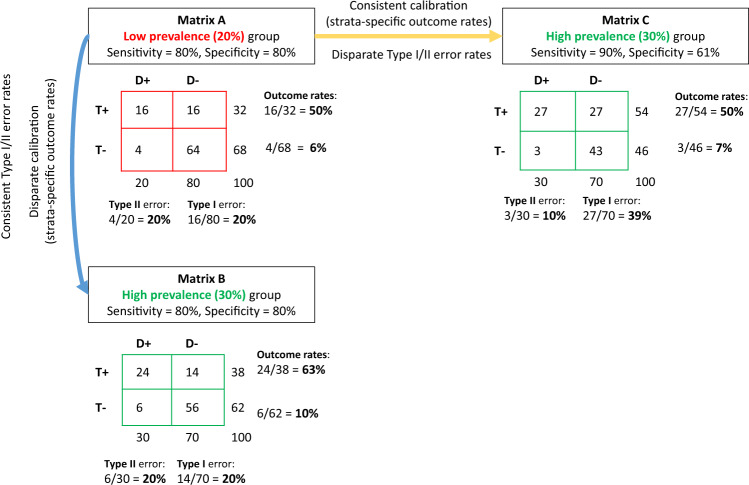

Fig. 1. Mutual incompatibility of fairness criteria.

For two groups with different outcome rates, a predictive test can have consistent error rates or consistent calibration, but not both. We present outcomes using coarsened prediction scores, thresholded to divide each population (N = 100) into low-risk and high-risk strata. Confusion matrices are shown for a low-prevalence group with a 20% outcome rate (Matrix A, red) and a high-prevalence group with a 30% outcome rate (Matrices B and C, green). For the low-prevalence group, a predictive test with 80% sensitivity and 80% specificity identifies a high-risk (test+) stratum with an outcome rate of 50% (i.e., the positive predictive value) and a low-risk (test−) stratum with an outcome rate of ~6% (i.e., the false omission rate). However, as shown in Matrix B, the same sensitivity and specificity in the high-prevalence group give rise to outcome rates of ~63% and ~10% in the high-risk and low-risk strata, respectively. This violates the criterion of test fairness (consistent calibration), since the meaning of a positive or negative test differs across the two groups. Holding stratum-specific outcome rates constant in the high-prevalence group would instead require a higher sensitivity and a lower specificity (Matrix C). This violates the fairness criterion of equalized error rates. For example, the Type I error rate (i.e., the false positive rate) would almost double, from 20% in the low-prevalence group to ~39% in the high-prevalence group. The diagnostic odds ratio was fixed at ~16 throughout this example; whole numbers are used to ease interpretation.
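The arithmetic in this caption can be reproduced with a minimal sketch such as the one below. The function names (`matrix_from_error_rates`, `matrix_from_calibration`, `rates`) are hypothetical and introduced only for illustration. The cell counts computed for Matrix C are the unrounded solution implied by holding the positive predictive value and false omission rate of Matrix A constant; they are not the whole numbers printed in the figure, which may differ slightly after rounding.

```python
def matrix_from_error_rates(n, prevalence, sensitivity, specificity):
    """Return (TP, FP, FN, TN) for fixed sensitivity and specificity."""
    positives = n * prevalence
    negatives = n - positives
    tp = sensitivity * positives
    tn = specificity * negatives
    return tp, negatives - tn, positives - tp, tn


def matrix_from_calibration(n, prevalence, ppv, false_omission_rate):
    """Return (TP, FP, FN, TN) for fixed stratum-specific outcome rates.

    The test-positive fraction p solves
    ppv * p + false_omission_rate * (1 - p) = prevalence.
    """
    p = (prevalence - false_omission_rate) / (ppv - false_omission_rate)
    test_pos = n * p
    test_neg = n - test_pos
    tp = test_pos * ppv
    fn = test_neg * false_omission_rate
    return tp, test_pos - tp, fn, test_neg - fn


def rates(tp, fp, fn, tn):
    """Summary measures discussed in the caption."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "fpr": fp / (fp + tn),                    # Type I error rate
        "ppv": tp / (tp + fp),                    # outcome rate in test+ stratum
        "false_omission_rate": fn / (fn + tn),    # outcome rate in test- stratum
        "dor": (tp * tn) / (fp * fn),             # diagnostic odds ratio
    }


# Matrix A: low-prevalence group, 80% sensitivity and specificity.
matrix_a = matrix_from_error_rates(100, 0.20, 0.80, 0.80)
rates_a = rates(*matrix_a)

# Matrix B: high-prevalence group, same error rates as Matrix A.
matrix_b = matrix_from_error_rates(100, 0.30, 0.80, 0.80)
rates_b = rates(*matrix_b)

# Matrix C: high-prevalence group, same stratum outcome rates as Matrix A
# (unrounded; the figure uses whole numbers).
matrix_c = matrix_from_calibration(
    100, 0.30, rates_a["ppv"], rates_a["false_omission_rate"]
)
rates_c = rates(*matrix_c)

for name, r in (("A", rates_a), ("B", rates_b), ("C", rates_c)):
    print(name, {k: round(v, 3) for k, v in r.items()})
```

Under these assumptions, Matrices A and B share error rates but differ in stratum outcome rates, while Matrices A and C share stratum outcome rates but differ in error rates. The diagnostic odds ratio stays at 16 in all three cases because it can be written either in terms of sensitivity and specificity or in terms of the positive predictive value and false omission rate.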