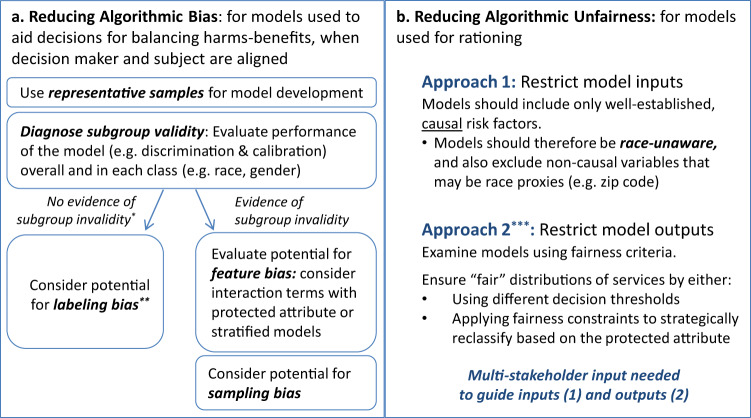

Fig. 3. Mitigating algorithmic bias and unfairness in clinical decision-making.

Bias arises from differential model performance across protected classes, such as racial groups. (a) Bias is a concern in both polar and non-polar decision contexts. It can be addressed by “debiasing” predictions, typically by explicitly encoding the protected attribute to ameliorate subgroup validity issues or, in the case of labeling bias, by selecting labels more carefully. Fairness, by contrast, is a concern only in polar decision contexts, and unfairness may persist even when predictions are unbiased. (b) There are two broad and fundamentally different approaches to mitigating unfairness: (1) an input-focused approach and (2) an output-focused approach. The input-focused approach aims to promote class-blind allocation by carefully excluding race and race proxies from the model's inputs. The output-focused approach evaluates fairness using criteria such as those described in Table 1 and Fig. 1. Fairness violations can be partially addressed through “fairness constraints”, which systematically reclassify participants or patients to equalize allocation between groups, or by applying different decision thresholds across groups.
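The last output-focused option, group-specific decision thresholds, can be illustrated with a short sketch. It assumes that the fairness criterion of interest is equal selection rates across groups (demographic parity), which is only one of several possible criteria. The function names `selection_rates` and `group_thresholds_for_rate`, the target rate, and the toy scores are hypothetical and are not taken from the source. The sketch first shows that a single shared threshold can allocate unequally, then picks a per-group threshold so that roughly the same fraction of each group is selected.

```python
import math
from collections import defaultdict


def selection_rates(scores, groups, thresholds):
    """Fraction of each group allocated (score >= that group's threshold)."""
    counts = defaultdict(int)
    selected = defaultdict(int)
    for score, group in zip(scores, groups):
        counts[group] += 1
        if score >= thresholds[group]:
            selected[group] += 1
    return {g: selected[g] / counts[g] for g in counts}


def group_thresholds_for_rate(scores, groups, target_rate):
    """Hypothetical helper: choose one threshold per group so that about
    target_rate of that group is selected (demographic-parity assumption).

    Ties at the threshold score can select slightly more than the target.
    """
    by_group = defaultdict(list)
    for score, group in zip(scores, groups):
        by_group[group].append(score)
    thresholds = {}
    for group, vals in by_group.items():
        vals = sorted(vals, reverse=True)
        k = round(target_rate * len(vals))  # number of people to select
        # Select nobody if k == 0; otherwise the k-th highest score is the cut-off.
        thresholds[group] = math.inf if k == 0 else vals[k - 1]
    return thresholds


if __name__ == "__main__":
    # Toy risk scores for two hypothetical groups, A and B.
    scores = [0.9, 0.8, 0.4, 0.3, 0.7, 0.5, 0.45, 0.2]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

    shared = {"A": 0.6, "B": 0.6}
    print(selection_rates(scores, groups, shared))  # {'A': 0.5, 'B': 0.25}

    per_group = group_thresholds_for_rate(scores, groups, target_rate=0.5)
    print(per_group)  # {'A': 0.8, 'B': 0.5}
    print(selection_rates(scores, groups, per_group))  # {'A': 0.5, 'B': 0.5}
```

Equalizing selection rates this way deliberately treats groups differently at the decision step. Other criteria, such as equal true-positive rates, would call for choosing thresholds against outcome labels instead of raw selection rates.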