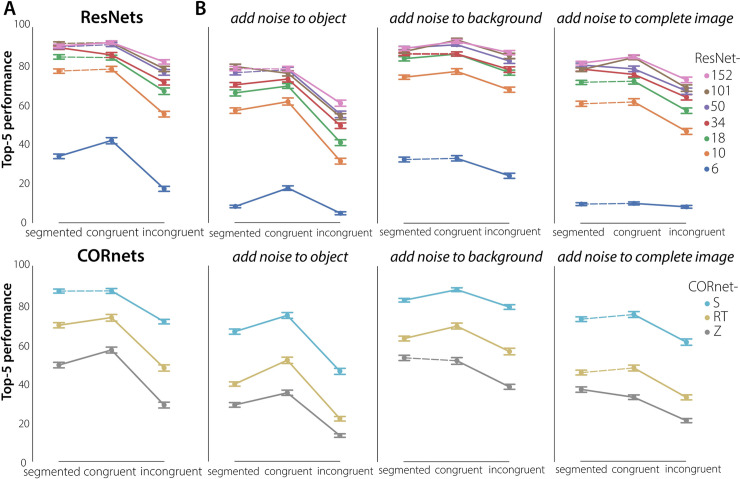

Fig 2. DCNN performance on the object recognition task.

A) DCNN performance on the object recognition task. 38 different subsets of 243 stimuli were presented, each subset containing the same number of images per target category and condition (segmented, congruent, incongruent) as was shown to human observers (81 per condition, 3 per category). For all models, performance was better in the congruent than in the incongruent condition. Among the ResNets, this drop was largest for ResNet-6 and shrank as network depth increased. For ‘ultra-deep’ networks, it mattered little whether the background was congruent, incongruent, or absent (segmented). Among the CORnets, the drop was largest for the feedforward architecture (CORnet-Z), whereas CORnet-S (recurrent + skip connection) performed similarly to an ‘ultra-deep’ network. Differences between conditions were evaluated for all networks with post-hoc Wilcoxon signed-rank tests and Benjamini–Hochberg FDR correction. Significant differences are indicated by a solid line and non-significant differences by a dashed line (all segmented–incongruent comparisons were significant). Error bars represent bootstrapped 95% confidence intervals. B) DCNN performance on the object recognition task after adding noise to the object, the background, or both.
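The statistics in panel A can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code. It assumes the raw p-values come from paired Wilcoxon signed-rank tests over per-subset accuracies (for example `scipy.stats.wilcoxon(acc_congruent, acc_incongruent)` across the 38 subsets). It also assumes the error bars use a percentile bootstrap of the mean, since the caption does not name the bootstrap variant. The function names `benjamini_hochberg` and `bootstrap_ci` are hypothetical helpers written with the standard library only.

```python
import random


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns (adjusted_p, reject), where adjusted_p[k] is the BH-adjusted
    p-value of test k and reject[k] is True if it survives FDR control at alpha.
    """
    m = len(p_values)
    # Indices of the tests sorted by ascending raw p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted p-values at 1
    # Walk from the largest p-value down so adjusted values stay monotone:
    # adj_(k) = min over j >= k of m * p_(j) / j.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    reject = [q <= alpha for q in adjusted]
    return adjusted, reject


def bootstrap_ci(values, n_boot=10_000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`
    (an assumed variant; the caption only says 'bootstrap 95% CI')."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    boot_means = sorted(sum(rng.choices(values, k=n)) / n for _ in range(n_boot))
    tail = (1 - level) / 2
    lo_idx = round(tail * n_boot)
    hi_idx = round((1 - tail) * n_boot) - 1
    return boot_means[lo_idx], boot_means[hi_idx]
```

For instance, with hypothetical raw p-values for three condition comparisons, `benjamini_hochberg([0.04, 0.001, 0.03])` yields adjusted values of about `[0.04, 0.003, 0.04]`. All three remain significant at an FDR of 0.05. The step-up form is used because it controls the false discovery rate rather than the family-wise error rate, which is less conservative when several comparisons are run per network.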