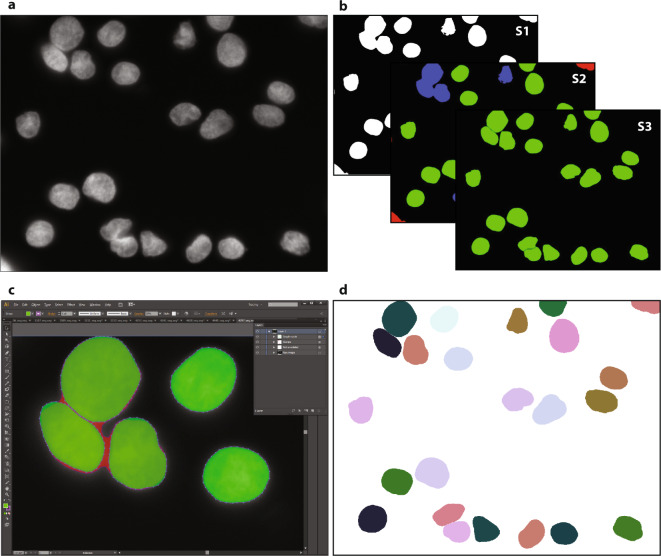

Fig. 1.

Workflow for ground truth image annotation. (a) Raw image showing cytospun HaCaT nuclei. (b) A machine learning framework was used to annotate the raw image, learning from user interaction in three consecutive steps: (S1) foreground extraction, (S2) connected component classification (red = non-usable objects, blue = nuclear aggregates, green = single nuclei) and (S3) splitting of aggregated objects into single nuclei, resulting in an annotation mask. (c) Zoom-in of the SVG file showing the nuclear image overlaid with polygons, one per annotated nucleus. Expert biologists adjusted the polygons to fit the actual nuclear borders. Difficult annotation decisions, which arose mainly from aggregated or overlapping nuclei, were presented to an expert pathologist and corrected to obtain the final ground truth. (d) The curated SVG file was converted into a labeled nuclear mask.
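Step (d), the conversion of curated polygons into a labeled mask, can be illustrated with a minimal sketch. This is not the tool used for the figure. It assumes the polygon vertices have already been read from the SVG file into lists of (x, y) pixel coordinates, and the function name `polygons_to_label_mask` is hypothetical. Each polygon is rasterized with an even-odd ray-casting test at pixel centres and receives its own integer label. Where polygons overlap, the sketch keeps the first label, which is an arbitrary choice.

```python
import numpy as np


def polygons_to_label_mask(polygons, shape):
    """Rasterize polygons into an integer label mask (0 = background).

    polygons: list of polygons, each a list of (x, y) vertices (assumed
              to be already extracted from the SVG file).
    shape:    (height, width) of the output mask.
    """
    height, width = shape
    mask = np.zeros(shape, dtype=np.int32)

    # Coordinates of every pixel centre.
    ys, xs = np.mgrid[0:height, 0:width]
    px = xs + 0.5
    py = ys + 0.5

    for label, poly in enumerate(polygons, start=1):
        pts = np.asarray(poly, dtype=float)
        inside = np.zeros(shape, dtype=bool)
        n = len(pts)
        for i in range(n):
            x1, y1 = pts[i]
            x2, y2 = pts[(i + 1) % n]
            if y1 == y2:
                # A horizontal edge never crosses a horizontal ray.
                continue
            # Does the edge straddle the horizontal line through the pixel?
            crosses = (y1 > py) != (y2 > py)
            # x position where the edge meets that horizontal line.
            x_int = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            # Even-odd rule: toggle for each crossing to the right of the pixel.
            inside ^= crosses & (px < x_int)
        # Assumption: on overlap, the earlier polygon keeps its label.
        mask[inside & (mask == 0)] = label

    return mask


if __name__ == "__main__":
    # Two hypothetical square "nuclei" on a 10 x 10 image.
    squares = [
        [(1, 1), (4, 1), (4, 4), (1, 4)],
        [(5, 5), (8, 5), (8, 8), (5, 8)],
    ]
    print(polygons_to_label_mask(squares, (10, 10)))
```

Giving each nucleus its own label, rather than using a binary mask, keeps touching nuclei distinguishable in the mask.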