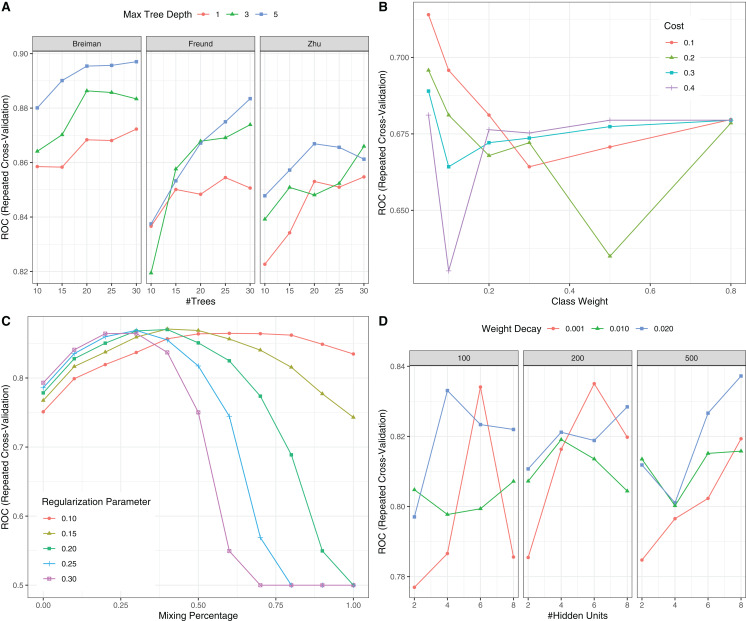

Figure 6. Hyperparameter tuning for machine learning algorithms by grid search.

The area under the receiver operating characteristic curve (AU-ROC), estimated by 5-fold cross-validation, was used as the performance metric. (A) Hyperparameter tuning for Adaboost, which included three hyperparameters: the number of boosting iterations (equivalently, the number of trees to use), the learning coefficient, and the maximum depth of the trees. With the Breiman method, $\alpha = \frac{1}{2}\ln\left(\frac{1 - \mathrm{err}}{\mathrm{err}}\right)$ is used; the Freund method uses $\alpha = \ln\left(\frac{1 - \mathrm{err}}{\mathrm{err}}\right)$. In both cases the AdaBoost.M1 algorithm is used and $\alpha$ is the weight updating coefficient. If the Zhu method is used instead, the SAMME algorithm is implemented with $\alpha = \ln\left(\frac{1 - \mathrm{err}}{\mathrm{err}}\right) + \ln(\mathrm{nclasses} - 1)$. Here $\mathrm{err}$ is the weighted classification error of the current tree and $\mathrm{nclasses}$ is the number of classes. (B) Hyperparameter tuning for SVM. The cost and class weight were tuned in a grid. (C) Hyperparameter tuning for the generalized linear model with regularization. Hyperparameters included alpha (mixing percentage) and lambda (regularization parameter). (D) Hyperparameter tuning for neural networks. Abbreviations: Adaboost: adaptive boosting with classification trees; SVM: support vector machines.
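The three learning-coefficient options in panel (A) differ only in how the weight updating coefficient α is computed from a tree's weighted error. The sketch below illustrates this, assuming the caption refers to the `coeflearn` options documented for the R `adabag` package (Breiman: half the log-odds of a correct prediction; Freund: the full log-odds; Zhu: the log-odds plus ln(nclasses − 1)). The function name `weight_update_coefficient` and its arguments are hypothetical, chosen for illustration only; this is not the authors' code.

```python
import math


def weight_update_coefficient(err, method="Breiman", n_classes=2):
    """Return the boosting weight updating coefficient alpha.

    err       -- weighted classification error of the current tree (0 < err < 1)
    method    -- "Breiman", "Freund" (both AdaBoost.M1) or "Zhu" (SAMME)
    n_classes -- number of classes; only used by the "Zhu" option

    Hypothetical helper: formulas follow the adabag `coeflearn` description.
    """
    if not 0.0 < err < 1.0:
        raise ValueError("err must lie strictly between 0 and 1")
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")

    log_odds = math.log((1.0 - err) / err)

    if method == "Breiman":
        return 0.5 * log_odds
    if method == "Freund":
        return log_odds
    if method == "Zhu":
        # SAMME adds a multi-class correction term; it vanishes for two classes.
        return log_odds + math.log(n_classes - 1)
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    # Compare the three options for the same tree error in a 3-class problem.
    for name in ("Breiman", "Freund", "Zhu"):
        print(name, round(weight_update_coefficient(0.2, name, n_classes=3), 4))
```

For a two-class problem the Zhu and Freund options coincide, because the correction term ln(nclasses − 1) is zero, so in a binary setting the grid effectively compares two distinct update rules.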