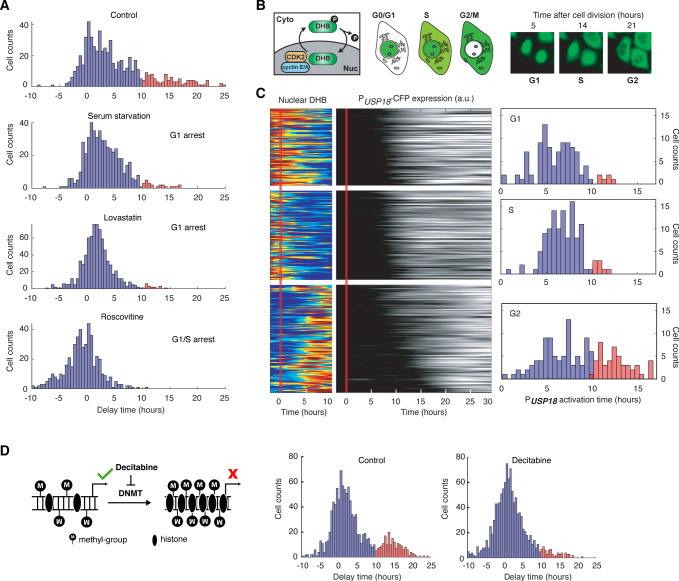

Figure 5. USP18 expression was differentially regulated by cell cycle phases.

(A) Distributions of delay times in cells treated with different cell cycle perturbations. Cells were serum-starved or treated with lovastatin (5 μM) or roscovitine (5 μM) for 36 hrs prior to IFN-α treatment. Proportions of Group 2 cells (with a delay longer than 10 hrs) are 16.28% (control), 4.55% (serum starvation), 2.31% (lovastatin), and 0.21% (roscovitine), respectively. (B) Schematic of the CDK2 activity reporter. Amino acids 994–1087 of human DNA helicase B (DHB) were fused with mCherry. The construct was stably integrated into the PUSP18 cell line using lentivirus. The dynamics of nuclear translocation of DHB-mCherry can be used to infer the cell cycle phase. Representative time-lapse images of DHB-mCherry illustrate the inference of cell cycle phases. (C) Color maps showing nuclear DHB and PUSP18-driven gene expression in the same single cells. Each row represents the time trace of a single cell. Cells were grouped into G1 (n = 104), S (n = 124), and G2 (n = 144) based on the nuclear DHB signals (left) at the time of IFN-α addition. For each group, cells were sorted based on PUSP18-CFP activation time (middle). Right: Distributions of PUSP18-CFP activation times for each group. (D) Distributions of delay times in cells treated with decitabine, a DNA methyltransferase (DNMT) inhibitor. Left: Schematic of the effect of decitabine on DNA methylation and nucleosome occupancy. Right: Distribution of delay times upon decitabine treatment. Cells were cultured in medium in the absence (control) or presence of 100 μM decitabine for 48 hrs prior to 100 ng/ml IFN-α treatment. Cells with delay times longer than 10 hrs are shown in red. Proportions of Group 2 cells (with a delay longer than 10 hrs) are 17.89% (control) and 5.92% (decitabine treated).
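
The Group 2 proportions reported in (A) and (D) follow from thresholding single-cell delay times at 10 hrs. The following is a minimal sketch of that calculation, not the authors' analysis code. The function name `group2_percentage` and the toy delay times are assumptions introduced for illustration only and are not data from the study.

```python
from typing import Sequence

# Threshold taken from the legend: Group 2 cells have a delay longer than 10 hrs.
GROUP2_THRESHOLD_HRS = 10.0


def group2_percentage(
    delay_times_hrs: Sequence[float],
    threshold_hrs: float = GROUP2_THRESHOLD_HRS,
) -> float:
    """Return the percentage of cells whose delay time exceeds the threshold.

    Hypothetical helper for illustration; the strict '>' mirrors the
    legend's wording "longer than 10 hrs".
    """
    if len(delay_times_hrs) == 0:
        raise ValueError("at least one delay time is required")
    n_group2 = sum(1 for d in delay_times_hrs if d > threshold_hrs)
    return 100.0 * n_group2 / len(delay_times_hrs)


# Made-up delay times (hrs) for eight cells; NOT measurements from the paper.
toy_delays = [2.5, 3.0, 4.1, 12.0, 3.3, 15.5, 2.8, 3.9]
print(f"Group 2: {group2_percentage(toy_delays):.2f}%")
```

Applying the same function to each condition's delay times (control, serum starvation, lovastatin, roscovitine, decitabine) would give percentages comparable to those quoted in the legend.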