Abstract

In this paper, a rigorous formalism of information transfer within a multi-dimensional deterministic dynamical system is established for both continuous flows and discrete mappings. The underlying mechanism is derived from the entropy change and transfer during the evolution of multiple components. While this work mainly focuses on three-dimensional systems, the analysis of information transfer among state variables can be generalized to high-dimensional systems. Explicit formulas are given and verified on the classical Lorenz and Chua’s systems. The uncertainty of information transfer is quantified for all variables, so that, as an additional benefit, a dynamic sensitivity analysis can be performed statistically. The generalized formalisms can be applied to study the dynamical behavior as well as the asymptotic dynamics of a system. The simulation results help to reveal underlying information about the system, which can be used for prediction and control in many diverse fields.

Keywords: Information transfer, continuous flow, discrete mapping, Lorenz system, Chua’s system

1. Introduction

Uncertainty quantification in complex dynamical systems is an important topic in predictive modeling. By integrating information-theoretic methods to investigate the underlying physics and to define measurement indices, the uncertainty in practical ensemble predictions of complex dynamical systems can be quantified better. One important motivation is that the couplings among the variables of a dynamical system generate information at a nonzero rate [1], which produces information exchange [2]. Entropy can be used to describe quantitatively the production, gathering, exchange and transfer of information [3]. Information transfer analysis can be used to detect asymmetry in the interactions of subsystems [1,4]. Emergent phenomena cannot be simply derived or solely predicted from knowledge of the structure or of the interactions among individual elements in complex systems [5]. The dynamics of information transport plays a critical role in complex systems, with consequences for system prediction [6,7], system control [8,9] and causal analysis [10,11]. This motivates a deeper investigation of information transport in complex dynamical systems. Information transfer has been applied to quantify nonlinear interactions in complex dynamical systems using several efficient estimation strategies [12,13,14], and simple examples have been used to illustrate various complex phenomena. Most formalisms of information transfer are based on two time series [1,15,16,17].

Recently, a new approach to information flow between the components of two-dimensional (2D) systems was proposed by Liang and Kleeman [6]; it deals with the change in the uncertainty of one component caused by the other component. This idea is based on the specific interactions between two components in complex dynamical systems. For a system whose dynamics are given, a measure of information transfer can be rigorously formulated (henceforth referred to as the LK2005 formalism [6]). For continuous flows and discrete mappings, the information flow has been analyzed using the Liouville equation [18] and the Frobenius–Perron operator [18], respectively; these govern the evolution of the joint probability density in the two cases. The LK2005 formalism is consistent with the transfer entropy of Schreiber [1] in both transfer asymmetry and quantification. A variety of generalizations and applications of the work in Reference [4] have been developed in [19,20,21,22,23,24,25]. Majda and Harlim [26] applied the strategy to study subspaces of complex dynamical systems. For 2D systems, Liang and Kleeman discovered a concise law on the entropy evolution of deterministic autonomous systems and obtained the time rate of information flow from one component to the other [6]. So far, the 2D formalism has been extended to dynamical systems of different forms and scales, with successful applications between two variables [23,25]. In these applications, by thoroughly describing the statistical behavior of a system, the rigorous LK2005 formalism has yielded remarkable results [3].

However, in many real-world systems the uncertainty needs to be quantified among several variables in order to reveal nonlinear relationships, understand the intrinsic mechanism better and predict the forthcoming states of the system [27]. Besides, many physical systems in diverse fields are affected by the interactions among multiple components [28]. For example, sensitivity analysis of an aircraft system with respect to design variables, parameters and uncertainty factors can be used to estimate their effects on the objective function or constraint function. The uncertainty analysis and sensitivity analysis (UASA) process is one of the key steps for determining the optimal search direction and guiding design and decision-making; it aims at predicting complex computer models by quantifying the sensitivity information of the coupled variables. It can offer quick guidance for determining design parameters that lead to high-performance aircraft designs. Some earlier tools [29,30] for sensitivity analysis are applicable to low-dimensional static problems, and the problem of high dimensionality becomes urgent when the output variables of numerical models vary spatially and temporally [31]. The rigorous formalism of information flow has the potential to greatly improve the ability to analyze and measure uncertainty and sensitivity information in dynamical systems.

Hence, with realistic applications in mind, we generalize the LK2005 formalism to several variables of multi-dimensional dynamical systems in this paper. More precisely, we extend the results in [6,25] to the information flow between groups of components, rather than individual components. We aim to demonstrate that the formalism is feasible among several variables in arbitrary multi-dimensional dynamical systems when the dynamics are fully known. In addition, the generalized formalisms reduce to the two-dimensional formalisms as a special case. We also highlight the relationship between the LK2005 formalism and our generalized formalisms. Two applications, to the classical Lorenz system and Chua’s system, are presented as validations of our formalisms. Compared with the LK2005 formalism and the transfer mutual information method [32], the generalized formalisms reveal more information among variables. They can better explore the complexity of evolution and the intrinsic regularity of multi-dimensional dynamical systems, and they provide a simple and versatile method for analyzing sensitivity in dynamical models. These generalized formulas enable one to understand the relationship between information transfer and the behavior of a system, and they can serve as a measure for sensitivity analysis in multi-dimensional complex dynamical systems. Therefore, the generalized formalisms have much wider applications and are significant for investigating real-world problems.

The structure of this paper is as follows. Section 2 reviews the theory and formalisms of information flow in 2D systems. In Section 3, based on the LK2005 formalism, the formalisms are generalized to the components of multi-dimensional complex dynamical systems; the derivations and the related properties are given in detail. Section 4 applies the formalisms to multi-dimensional examples. Section 5 summarizes the paper.

2. Two-Dimensional Formalism of Information Transfer (the LK2005 Formalism [6])

2.1. Continuous Flows

For 2D continuous and deterministic autonomous systems with fully known dynamics,

$$\frac{d\mathbf{x}}{dt} = \mathbf{F}(\mathbf{x}), \tag{1}$$

where $\mathbf{x} = (x_1, x_2)$ with $x_i \in \mathbb{R}$ for any $i = 1, 2$, $\mathbf{F} = (F_1, F_2)$ is known as the flow vector and $\Omega$ denotes the sample space. A stochastic process $\mathbf{X} = (X_1, X_2)$ with joint probability density $\rho(x_1, x_2, t)$ at time t gives the random variables corresponding to the sample values $(x_1, x_2)$. For convenience, we will use the notation $\rho(x_1, x_2)$ or $\rho$ instead of the notation $\rho(x_1, x_2, t)$ throughout Section 2, and the same convention for the multi-dimensional cases in Section 3. In addition, the integral domain is the whole sample space $\Omega$, except where noted. The probability density $\rho$ associated with Equation (1) satisfies the Liouville equation [18]:

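$$\frac{\partial \rho}{\partial t} + \frac{\partial (F_1 \rho)}{\partial x_1} + \frac{\partial (F_2 \rho)}{\partial x_2} = 0. \tag{2}$$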

The rate of change of the joint entropy $H$ of $x_1$ and $x_2$ satisfies the relation [6]

$$\frac{dH}{dt} = E(\nabla \cdot \mathbf{F}), \tag{3}$$

where E denotes the mathematical expectation with respect to $\rho$ and $\nabla \cdot \mathbf{F} = \partial F_1 / \partial x_1 + \partial F_2 / \partial x_2$. That is to say, when a system evolves with time, the change of its joint entropy is totally controlled by the contraction or expansion of the phase space [6]. Later on, Liang and Kleeman showed that this property holds for deterministic systems of arbitrary dimensionality [20].
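As a quick illustration of this entropy law in three dimensions, consider the Lorenz system used later in Section 4.1. The worked example below assumes the standard textbook form of the Lorenz equations with parameters $\sigma$, $r$ and $b$ (an assumption, since the paper's own display of the system is not reproduced in this text).

```latex
\begin{aligned}
\dot{x} &= \sigma (y - x), \qquad \dot{y} = x (r - z) - y, \qquad \dot{z} = x y - b z, \\
\nabla \cdot \mathbf{F} &= \frac{\partial}{\partial x}\bigl[\sigma (y - x)\bigr]
  + \frac{\partial}{\partial y}\bigl[x (r - z) - y\bigr]
  + \frac{\partial}{\partial z}\bigl[x y - b z\bigr]
  = -(\sigma + 1 + b), \\
\frac{dH}{dt} &= E(\nabla \cdot \mathbf{F}) = -(\sigma + 1 + b).
\end{aligned}
```

Because the divergence is constant here, the expectation does not depend on the density: under these assumptions the joint entropy would decrease at a constant rate, reflecting the uniform contraction of phase space.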

Liang and Kleeman [6] provided a heuristic argument that decomposes the evolutionary mechanisms of information transfer in terms of the individual and joint time rates of entropy change of $x_1$ and $x_2$. Firstly, they computed $dH_1/dt$ and $dH_2/dt$, where $H_i$ is the entropy of $x_i$ defined according to the marginal density $\rho_i$. Secondly, they employed the novel idea of frozen variables to analyze the individual time rates of entropy change. When $x_2$ is fixed and $x_1$ evolves on its own in a 2D system, they found that the temporal rate of change of its entropy, denoted by $dH_1^*/dt$, depends only on $\partial F_1 / \partial x_1$, namely $dH_1^*/dt = E(\partial F_1 / \partial x_1)$. In the presence of interactions between $x_1$ and $x_2$, they observed that $dH_1/dt$ generally differs from $dH_1^*/dt$. Therefore, Liang and Kleeman [6] concluded that the difference between $dH_1/dt$ and $dH_1^*/dt$ should equal the rate of entropy transfer from $x_2$ to $x_1$. They denoted the rate of flow from $x_j$ to $x_i$ by $T_{j \to i}$ (T stands for "transfer") and defined the information flow/transfer as

$$T_{j \to i} = \frac{dH_i}{dt} - \frac{dH_i^*}{dt} = -E\left[\frac{1}{\rho_i} \frac{\partial (F_i \rho_i)}{\partial x_i}\right], \tag{4}$$

where $i = 1, 2$ and $j = 1, 2$ with $i \neq j$.

2.2. Discrete Mappings

Similarly, Liang and Kleeman [6] also gave the formalism about a system in the discrete mapping form. Considering a 2D transformation

where and The evolution of the density of is driven by the Frobenius–Perron operator ( operator) [18]. The entropy increases as

where is the Jacobian matrix of . When is invertible in 2D transformations,

| (5) |

The entropy of increases as

where is the marginal density of When is noninvertible in 2D transformations,

| (6) |

where is the operator when is frozen as a parameter in . The entropy transferring from to is

| (7) |

where with different at the same time.
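The joint-entropy relation at the start of this subsection, in which the entropy changes by the expectation of the logarithm of the absolute Jacobian determinant under an invertible map, can be checked numerically in the simplest setting. The sketch below is not taken from the paper: it assumes a linear map with a hypothetical matrix `A` acting on a Gaussian density (the matrices are illustrative values), for which the differential entropy has a closed form.

```python
import numpy as np

def gaussian_entropy(cov):
    """Differential entropy (in nats) of a Gaussian with covariance `cov`."""
    n = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (n * np.log(2.0 * np.pi * np.e) + logdet)

# Hypothetical invertible 2D linear map x -> A x (illustrative values only).
A = np.array([[1.5, 0.4],
              [-0.3, 0.8]])
# Assumed covariance of the density before the map is applied.
cov0 = np.array([[1.0, 0.2],
                 [0.2, 0.5]])

# A Gaussian stays Gaussian under a linear map; its covariance becomes A cov0 A^T.
cov1 = A @ cov0 @ A.T
delta_H = gaussian_entropy(cov1) - gaussian_entropy(cov0)

# The Jacobian of a linear map is A itself, so E log|det J| = log|det A|.
log_abs_det_J = np.log(abs(np.linalg.det(A)))

print(f"entropy change : {delta_H:.6f}")
print(f"E log|det J|   : {log_abs_det_J:.6f}")
```

The two printed numbers should agree to rounding error. For a nonlinear map the Jacobian varies over the sample space, so the expectation would have to be taken with respect to the density.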

3. n-Dimensional Formalism of Information Transfer

3.1. Continuous Flows

Firstly, we consider a three-dimensional (3D) continuous autonomous system,

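$$\frac{d\mathbf{x}}{dt} = \mathbf{F}(\mathbf{x}), \qquad \mathbf{x} = (x_1, x_2, x_3), \tag{8}$$

where $\mathbf{F} = (F_1, F_2, F_3)$ is a known flow vector. Similarly, the probability density $\rho$ associated with Equation (8) satisfies the Liouville equation [18]:

$$\frac{\partial \rho}{\partial t} + \frac{\partial (F_1 \rho)}{\partial x_1} + \frac{\partial (F_2 \rho)}{\partial x_2} + \frac{\partial (F_3 \rho)}{\partial x_3} = 0. \tag{9}$$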

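Analogous to the derivation in [6], we first multiply Equation (9) by $-(1 + \log \rho)$; after some algebraic manipulations,

$$-\frac{\partial (\rho \log \rho)}{\partial t} - \nabla \cdot (\rho \log \rho \, \mathbf{F}) - \rho \, \nabla \cdot \mathbf{F} = 0. \tag{10}$$

Then, integrating Equation (10) over the sample space,

$$-\frac{d}{dt} \int \rho \log \rho \, d\mathbf{x} - \int \nabla \cdot (\rho \log \rho \, \mathbf{F}) \, d\mathbf{x} - \int \rho \, \nabla \cdot \mathbf{F} \, d\mathbf{x} = 0.$$

Assuming that $\rho$ vanishes at the boundaries (the compact support assumption for $\rho$; the assumption is reasonable in real-world problems [6]), the second integral vanishes, and it is found that the time rate of change of the joint entropy of $x_1$, $x_2$ and $x_3$,

$$H = -\int \rho \log \rho \, d\mathbf{x},$$

satisfies

$$\frac{dH}{dt} = \int \rho \, \nabla \cdot \mathbf{F} \, d\mathbf{x},$$

or

$$\frac{dH}{dt} = E(\nabla \cdot \mathbf{F}),$$

where $\nabla \cdot \mathbf{F} = \partial F_1 / \partial x_1 + \partial F_2 / \partial x_2 + \partial F_3 / \partial x_3$.

As mentioned above, the time rate of change of H equals the mathematical expectation of the divergence of the flow vector $\mathbf{F}$. When we are interested in the entropy evolution of a single component, say $x_1$, in 3D systems, the marginal density is

$$\rho_1(x_1) = \iint \rho \, dx_2 \, dx_3.$$

The evolution equation of $\rho_1$ is derived by integrating Equation (9) with respect to $x_2$ and $x_3$ over the subspace:

$$\frac{\partial \rho_1}{\partial t} + \iint \frac{\partial (F_1 \rho)}{\partial x_1} \, dx_2 \, dx_3 = 0.$$

The third and fourth terms in Equation (9) have been integrated out with the compact support assumption for $\rho$. So the entropy for the component $x_1$,

$$H_1 = -\int \rho_1 \log \rho_1 \, dx_1,$$

evolves as

$$\frac{dH_1}{dt} = \iiint \log \rho_1 \, \frac{\partial (F_1 \rho)}{\partial x_1} \, d\mathbf{x},$$

i.e.,

$$\frac{dH_1}{dt} = -E\left[\frac{F_1}{\rho_1} \frac{\partial \rho_1}{\partial x_1}\right]. \tag{11}$$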

Equation (11) states how $H_1$ evolves with time. The evolutionary mechanism of $H_1$ derives from two parts: one is the evolution of $x_1$ itself, the other is the transfers from $x_2$ and $x_3$ according to the coupling in the joint density distribution $\rho$. From Section 2, we know that when $x_1$ evolves on its own, then

$$\frac{dH_1^*}{dt} = E\left(\frac{\partial F_1}{\partial x_1}\right).$$

Therefore, the rate of information flow/transfer from to is

| (12) |

where and with different at the same time.
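To make the construction concrete, the subtraction described above can be written out explicitly. The following is a sketch under assumed notation: $T_{jk \to i}$ (a label chosen here for illustration) denotes the transfer from the pair $(x_j, x_k)$ to $x_i$, $\rho_i$ is the marginal density of $x_i$, and $dH_i^*/dt$ is the entropy rate when $x_i$ evolves on its own, following the LK2005 construction recalled in Section 2.

```latex
\begin{aligned}
\frac{dH_i}{dt} &= -E\left[\frac{F_i}{\rho_i} \frac{\partial \rho_i}{\partial x_i}\right],
\qquad
\frac{dH_i^*}{dt} = E\left[\frac{\partial F_i}{\partial x_i}\right], \\
T_{jk \to i} &= \frac{dH_i}{dt} - \frac{dH_i^*}{dt}
 = -E\left[\frac{F_i}{\rho_i} \frac{\partial \rho_i}{\partial x_i} + \frac{\partial F_i}{\partial x_i}\right]
 = -E\left[\frac{1}{\rho_i} \frac{\partial (F_i \rho_i)}{\partial x_i}\right].
\end{aligned}
```

The last equality is just the product rule applied to $F_i \rho_i$. The expectation is taken with respect to the full joint density, which is how the other two components enter the result.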

In particular, if has no dependence on then . There is no information transfer from random variable component to This holds true with the transfers defined in LK2005 formalism. Obviously, in system (8), when has no dependence on there should be no information transfer from to but there is possibility that the transfers in other directions may be nonzero when depends on or depends on This is consistent with the information transfer defined in Equation (12). As a matter of fact, an important property of the transfer is given below.

Theorem 1.

If is independent of in system (8) with different , then

Proof of Theorem 1

According to the formalism of information transfer for system (8), with the notation of

□
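Reading Theorem 1 as saying that the transfer from $x_j$ to $x_i$ vanishes whenever $F_i$ has no dependence on $x_j$, the relevant dependences can be checked directly on a given flow vector. The sketch below is an illustration, not part of the paper: it assumes the standard form of the Lorenz equations with the classical parameter values, and the helper `dependence_matrix` is a hypothetical name. It perturbs one variable at a time and records which components of the flow change.

```python
import numpy as np

def lorenz(state, sigma=10.0, r=28.0, b=8.0 / 3.0):
    """Standard Lorenz flow vector (assumed form and parameter values)."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (r - z) - y, x * y - b * z])

def dependence_matrix(flow, dim=3, n_points=50, eps=1e-4, seed=0):
    """dep[i, j] is True if F_i changes when x_j alone is perturbed."""
    rng = np.random.default_rng(seed)
    dep = np.zeros((dim, dim), dtype=bool)
    for _ in range(n_points):
        p = rng.uniform(-10.0, 10.0, size=dim)
        base = flow(p)
        for j in range(dim):
            q = p.copy()
            q[j] += eps
            dep[:, j] |= np.abs(flow(q) - base) > 1e-12
    return dep

dep = dependence_matrix(lorenz)
names = "xyz"
for i in range(3):
    for j in range(3):
        if i != j and not dep[i, j]:
            print(f"F_{names[i]} has no dependence on {names[j]}: "
                  f"the transfer from {names[j]} to {names[i]} should vanish")
```

Under these assumptions the only missing dependence is that of the first component on z, which matches the remark in Section 4.1 that the evolution of the first Lorenz variable is independent of the third.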

It is worth noting that, while gains information from or , or and or might have no dependence on in 3D systems. An important property about information transfer is its asymmetry among the components [1]. In addition, it is interesting to note that the formalism of 3D systems can be reduced to 2D cases under the condition that one variable does not depend on another variable. For example, If the evolution of is independent of , then

| (13) |

In particular, when is independent of and ,

According to Theorem 1, the results are apparent. Furthermore, when depends on and ,

or

| (14) |

The above derivations highlight the inherent relation between our formalisms and the formalisms of 2D systems. The information flows from two variables, including the high-order interactions between them, to another variable are quantified by formula (12). These are generalized forms of the LK2005 formalism. In Section 4, we will validate these conclusions by applying all the formulas to the Lorenz and Chua’s systems. Moreover, when several variables are involved, the formalisms are capable of handling the information transfers of a multi-dimensional system.

Combining the Liouville equation

$$\frac{\partial \rho}{\partial t} + \sum_{i=1}^{n} \frac{\partial (F_i \rho)}{\partial x_i} = 0 \tag{15}$$

with Equation (3), in n-dimensional situations, we can generalize the formalism to n-dimensional continuous and deterministic autonomous systems in the same way. For example, the transfer of information from components to is

Hence, Theorem 1 can be generalized to multi-dimensional cases.

3.2. Discrete Mappings

For a 3D transformation the evolution of its density is driven by the Frobenius–Perron operator ( operator) [18]. Similar to the 2D case, after some efficient computations, the entropy transfer from to in three-dimensional mappings has the following form:

| (16) |

We also give a theorem for the discrete mappings and highlight the relationship between two-dimensional formalisms and generalized formalisms. The formalisms can be extended to high-dimensional situations as well. The detailed processes are demonstrated in Appendix A.

4. The Application of Multi-Dimensional Formalism of Information Transfer

4.1. The Lorenz System

In this section, we apply the formalism to study the information flows of the Lorenz system [33]:

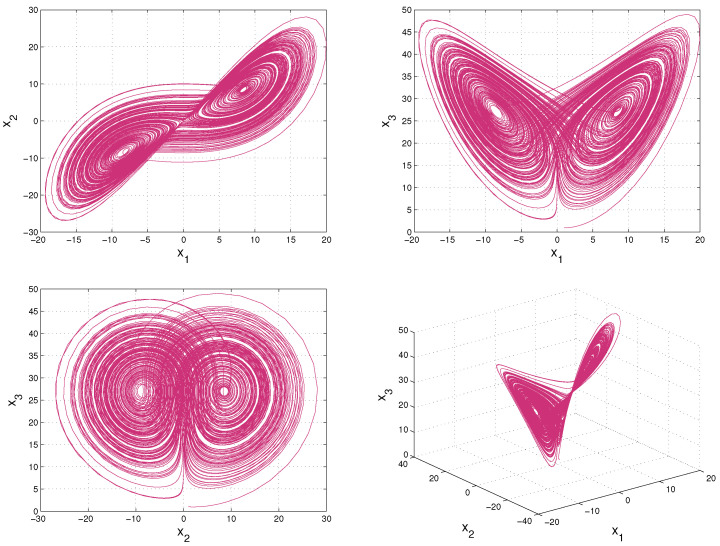

where and b are parameters, and are the system state variables, and t is time. A chaotic attractor of Lorenz system with is shown in Figure 1.

Figure 1.

The Lorenz attractor with initial value (1,1,1).

Firstly, we need to obtain the joint probability density function of the state variables in order to calculate the information flows among them. For a deterministic system with known dynamics, the evolution of the joint density can be obtained by solving the Liouville equation. Taking the computational load into account, we instead estimate the joint density via numerical simulations. The steps are summarized as follows:

Initialize the joint density with a preset distribution , then generate an ensemble through drawing samples randomly according to the initial distribution .

Partition the sample space into “bins”.

Obtain an ensemble prediction for the Lorenz system at every time step.

Estimate the three-variable joint probability density function via bin counting at every time step.
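The four steps above can be sketched in a few lines of Python. This is an illustrative sketch rather than the authors' implementation (their simulations were run in Matlab). The standard form of the Lorenz equations, the parameter values, the time step, the initial mean and covariance, the computation domain and the 30 x 30 x 24 bin layout (chosen so that the bin count matches the 21,600 draws mentioned in the text) are all assumptions made for the example.

```python
import numpy as np

SIGMA, R, B = 10.0, 28.0, 8.0 / 3.0   # assumed (classical) Lorenz parameters

def lorenz(states):
    """Lorenz flow vector for an ensemble of shape (n_members, 3)."""
    x, y, z = states[:, 0], states[:, 1], states[:, 2]
    return np.stack([SIGMA * (y - x), x * (R - z) - y, x * y - B * z], axis=1)

def rk4_step(states, dt):
    """Advance every ensemble member by one fourth-order Runge-Kutta step."""
    k1 = lorenz(states)
    k2 = lorenz(states + 0.5 * dt * k1)
    k3 = lorenz(states + 0.5 * dt * k2)
    k4 = lorenz(states + dt * k3)
    return states + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

def estimate_joint_density(states, edges):
    """Joint density via bin counting, normalised to integrate to 1 over the domain."""
    density, _ = np.histogramdd(states, bins=edges, density=True)
    return density

rng = np.random.default_rng(0)

# Step 1: draw the initial ensemble from an assumed Gaussian distribution.
mean = np.array([1.0, 1.0, 1.0])
cov = np.diag([4.0, 4.0, 4.0])
ensemble = rng.multivariate_normal(mean, cov, size=21600)

# Step 2: partition an assumed computation domain into 30 x 30 x 24 bins.
edges = [np.linspace(-30.0, 30.0, 31),
         np.linspace(-40.0, 40.0, 31),
         np.linspace(-10.0, 70.0, 25)]

# Steps 3 and 4: ensemble prediction and density estimate at every time step.
dt, n_steps = 0.01, 100
densities = []
for _ in range(n_steps):
    ensemble = rk4_step(ensemble, dt)
    densities.append(estimate_joint_density(ensemble, edges))

# Marginal density of the first variable at the final time (uniform bin widths).
rho = densities[-1]
dx, dy, dz = (np.diff(e)[0] for e in edges)
rho_x = rho.sum(axis=(1, 2)) * dy * dz
print(rho.shape, rho_x.sum() * dx)
```

Note that `np.histogramdd` ignores ensemble members that leave the domain, so the estimate is normalised over the members that remain inside it. With roughly one draw per bin the joint estimate is noisy, whereas the marginals, which pool many bins, are considerably smoother.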

The Lorenz system is solved by applying a fourth order Runge–Kutta method with a time step . According to Figure 1, the computation domain is restricted to which includes the attractor of the Lorenz system. We discretize the sample space into bins to ensure covering the whole attractor and one draw per bin on average via making 21,600 random draws. Initially, we assume is distributed as a Gaussian process , with a mean u and a covariance matrix :

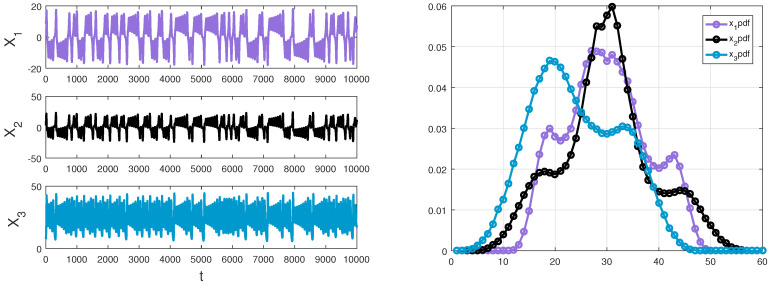

Although we have used different parameters u and to compute information flows for the Lorenz system, the final results are the same and the trends stay invariant. The parameters u and can be adjusted for different experiments. Here we only show the results of one experiment with and . The ensemble is developed by drawing sample randomly in the light of a pre-established distribution . We obtain an ensemble of and estimate the three-variable joint probability density function by the way of counting the bins, at every time step. As the equations are integrated forward in the Lorenz system, can be estimated as a function of time and describe the statistics of the system. A detailed discussion on probability estimation through bin counting are referred to [20,25]. The sample data with initial value (1,1,1) and an estimated marginal density of and are displayed in Figure 2.

Figure 2.

Left panel: a sample data ( and ) of the Lorenz system generated by a fourth order Runge–Kutta method with . Right panel: an estimated marginal density of and via counting the bins and initializing with a Gaussian distribution, respectively.

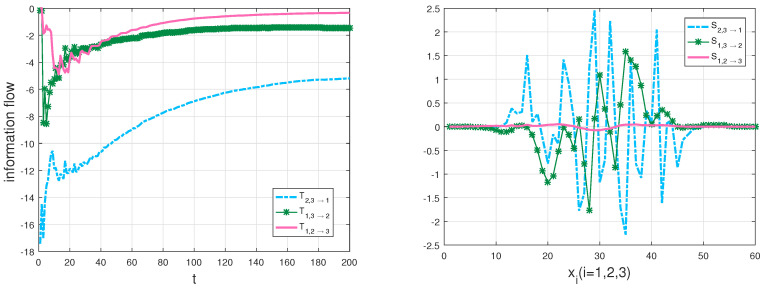

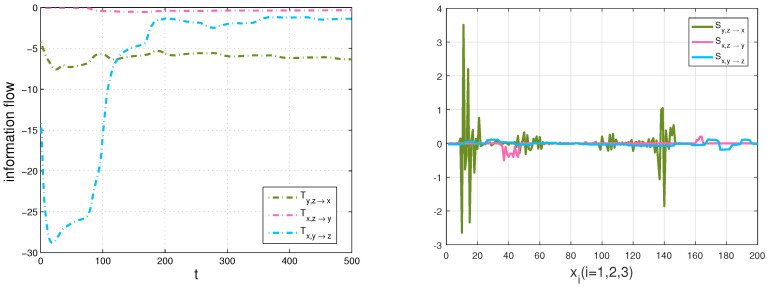

Through formula (12), the information transfer within three variables can be computed. There are nine transfer series in the Lorenz system, but here we mainly focus on the couple effect from two components to another component, that is, with different at the same time. A nonzero means that and are causal to , and the value means how much uncertainty that and bring to . Among all the transfers, it is clearly shown that any two variables drive the other variable in the dynamics except the evolution of which only depends on For the sake of revealing some underlying information in the chaotic dynamical system better, we also give information transfer among the components over space with a Gaussian distribution initialization and the averaged density over time via using the following formula: , which characterizes the strength of information transfer at different planes of . That is to say, it demonstrates the information transfer of and to plane, whose relative values represent the magnitudes of information transfer. The calculation results are plotted in the left panel and the right one of Figure 3, respectively. According to the magnitude of parameters in the Lorenz system and the definition of rigorous 3D formalisms, the information transfer from to is the smallest. The results are just as we expected, , as shown in the left panel of Figure 3. Meanwhile, we can get much information through numerical simulations. For example, the information transfer from and on is larger than that of and on in the Lorenz system, which is helpful for us to better analyze the system and the fields of interest. Only the absolute value of T measures the information transfer among the variables [23]. As the ensemble evolution is carried forth, any two variables aim to reduce the uncertainty of the other variable [24]; in other words, any two variables tend to stabilize the other variable. 
All information flows go to constants, which means that the system tends to be stable simultaneously. Comparing the left panel with the right one in Figure 3, we can find that not only the information flow from and to is the largest at different times, but also the total information transfer is the largest at plane, and the strength of information transfer obeys a distribution in each direction of x. Repeated experiments are found to be in line with the results no matter whatever the initialization is given.
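To illustrate how a transfer to the first Lorenz variable might be evaluated from an ensemble by bin counting, the sketch below uses a single Gaussian snapshot so that the estimate can be compared with a closed-form value. It is not the authors' code. It assumes the first Lorenz equation in its standard form, $F_x = \sigma (y - x)$, and reads the transfer to x as $-E[(1/\rho_x)\,\partial (F_x \rho_x)/\partial x]$, the LK2005-type expression. The function name `transfer_to_x`, the sample size, the bin count and the covariance are illustrative choices.

```python
import numpy as np

def transfer_to_x(x, y, sigma, n_bins=50):
    """Estimate -E[(1/rho_x) d(F_x rho_x)/dx] for F_x = sigma * (y - x)."""
    counts, edges = np.histogram(x, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    # A pseudo-count floor keeps the logarithm finite in empty bins.
    log_rho = np.log(np.maximum(counts, 0.5))
    # d(log rho_x)/dx at the bin centres; the normalisation constant drops out.
    score = np.gradient(log_rho, centers)
    score_at_samples = np.interp(x, centers, score)
    flow = sigma * (y - x)
    # -E[F_x d(log rho_x)/dx] - E[dF_x/dx], with dF_x/dx = -sigma.
    return -np.mean(flow * score_at_samples) + sigma

rng = np.random.default_rng(1)
sigma = 10.0
cov = np.array([[1.0, 0.5],
                [0.5, 2.0]])          # assumed covariance of (x, y)
samples = rng.multivariate_normal([0.0, 0.0], cov, size=200_000)

estimate = transfer_to_x(samples[:, 0], samples[:, 1], sigma)
# For a Gaussian density the expression reduces to sigma * cov_xy / cov_xx.
exact = sigma * cov[0, 1] / cov[0, 0]
print(f"bin-counting estimate: {estimate:.3f}, Gaussian closed form: {exact:.3f}")
```

Only the marginal density of x and the samples of the flow are needed here, because the expectation over the joint density is replaced by an ensemble average. In a full simulation the same estimate would be repeated at every time step on the evolving ensemble.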

Figure 3.

Left panel: the multivariate information flow of the Lorenz system: blue dot-dash line: ; green star line: ; red solid line: (in nats per unit time); Right panel: the information strength of transfer in the Lorenz system: blue dot-dash line: ; green star line: ; red solid line: (arbitrary unit).

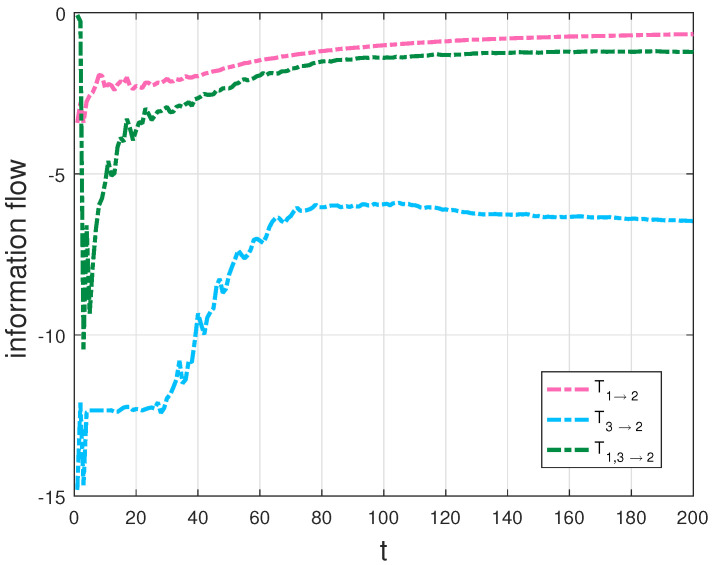

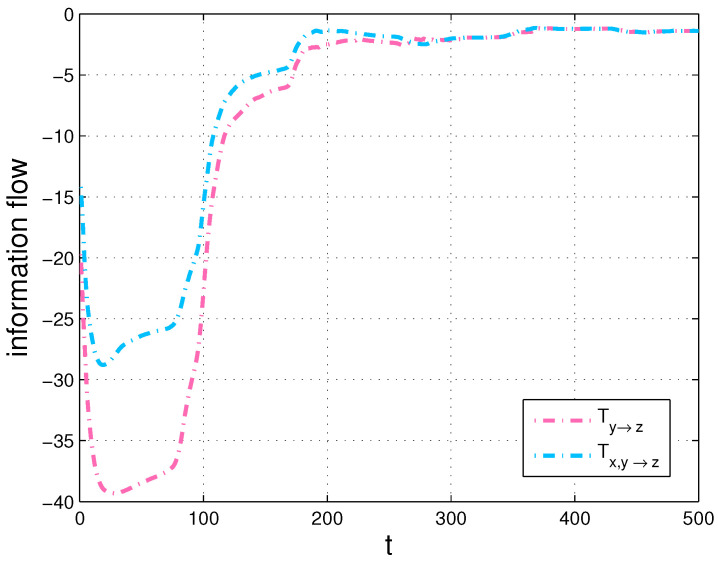

In particular, we compute the transfer, , then compare with the transfer, in Figure 4, as well as plot the transfers , and in Figure 5.

Figure 4.

and in the Lorenz system (in nats per unit time).

Figure 5.

, and in the Lorenz system (in nats per unit time).

Since the evolution of is independent of and the evolution of depends on and in the Lorenz system, the transfer should be equal to and neither the transfer nor should not be equal to according to the derivations in Section 3.1. As expected, there is almost no difference between the two flows in Figure 4. The interpretation of the results is that is not causal to in the Lorenz system. The result agrees well with theoretical analysis, which also validates our formalisms. But the graphs and are quite different from the graph in Figure 5, as that both and are causal to in the Lorenz system. From Figure 4 and Figure 5, we can find that the information flow is different from , as a property of asymmetry of the information transfer. There exists hidden sensitivity information in information transfer processes of high-dimensional dynamical systems: whether or not one variable brings more uncertainty to another variable. Comparing the magnitudes of three flows in Figure 5, we can say that is more sensitive to than to from the sensitivity analysis point of view. All the above differences are exactly the embodiment of the differences between the information flows in multi-dimensional dynamical systems and the LK2005 formalism. The proposed formalisms can be used to measure information transfers among the variables in dynamical systems and the numerical results can show how the measurement behavior with time, compared with the qualification of information transfer between two variables [4] and the transfer mutual information method [32]. For example, it can be quantified the influence that on the relationship between and using the transfer mutual information method in the Lorenz system. With our generalized formalisms, we can give quantitatively the influence from to the relationship between and as a dynamical process and other relationships (such as the asymmetrical influence between two variables) among the variables for analyzing the system better. 
To test the influence of error propagation on the measurement of information transfers, we use a different natural interval extension to compute information transfers according to the striking method [34]. In other words, we compute information transfers using formula (12) in the Lorenz system with the rewritten second equation, that is, is used to replace . For the Lorenz system, the results show that, the algorithm performs well (the relative error < 2%). All simulations are performed on a 64-bit Matlab R2016a environment. The physical consistency of the proposed approach in this paper can be explained as that a direction of the phase space is frozen in order to extract information transfers from the other two directions [3]. In addition, nonlinearity may lead a deterministic system to chaos, which causes the “spikes” in the right panel of Figure 3 and corresponds to intermittent switching in the chaotic dynamics. As the remarkable theory stated in [35], it indicates when the dynamics are about to switch lobes of the attractor in the Lorenz system.

Since the Liouville equation and Frobenius–Perron analysis describe an ensemble of trajectories, we can use the generalized formalisms of information flow as a sensitivity index to perform dynamic sensitivity analysis, instead of widely used earlier methods such as repeated calculation of principal component coefficients [36,37], construction of functional metamodels [31,38], calculation of the moving average of the sensitivity index [39] and direct perturbation analysis of a dynamical system [40]. Using information flow to identify sensitive variables rests directly on a statistical perspective, which can improve numerical accuracy and efficiency while reducing the computational load compared with conventional dynamic sensitivity analysis methods. We can not only quantify the uncertainty among the variables of a system, but also understand how they influence system behavior, so the information flow can be measured and used for prediction and control in realistic applications.

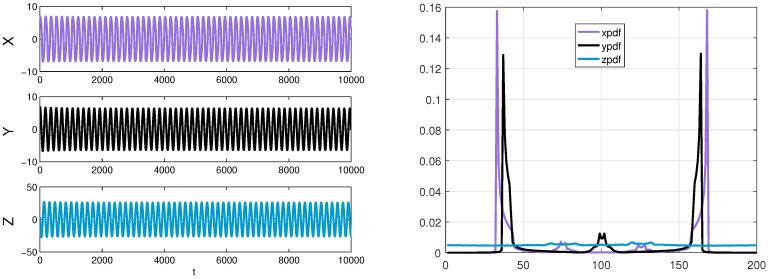

Furthermore, we use Equation (15) to compute information transfers, , , , and with the same strategy in the four-dimensional (4D) dynamical system:

whose results are shown in Figure 6.

Figure 6.

Left panel: an estimated marginal density of and w via counting the bins and initializing with a Gaussian distribution, respectively; Right panel: the multivariate information flow over time of a 4D dynamical system.

The generalized formalisms are useful for general problems and are not difficult to apply to higher-dimensional cases.

4.2. The Chua’s System

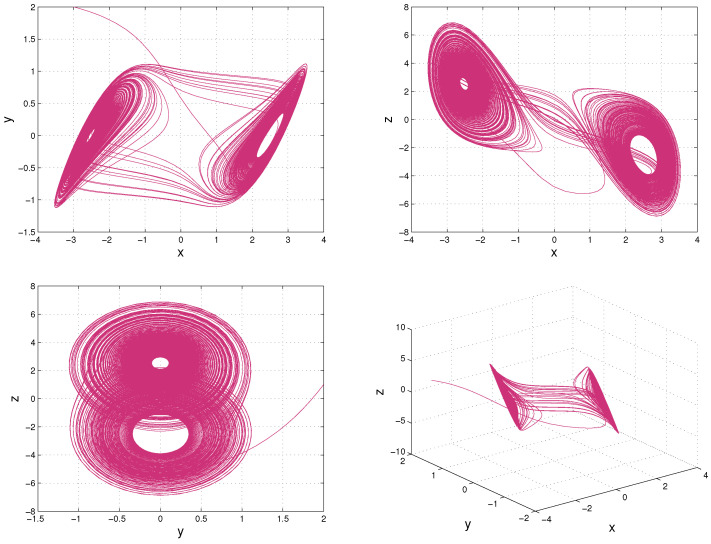

As the first analog circuit to realize chaos in experiments, the original Chua’s system is a well-known dynamical model [41]. Chua’s system is described in reference [42], and its dynamical behavior has been studied extensively [43,44]. Here we present an investigation of the information flows within the smooth Chua’s system [45]:

where are parameters, and z are state variables in and When and , a chaotic attractor of the Chua’s system is shown in Figure 7.

Figure 7.

The attractor of Chua’s system with The former three trajectories are -plane,-plane and -plane, respectively. The last trajectory is a 3D plot of and

As mentioned before, using the same estimation procedures, we can obtain the density of by counting the bins at each step. From Figure 7, the appropriate computation domain which includes an attractor of the Chua’s system can be selected to estimate the three-variable joint probability density function. The following computation is demonstrated by applying a fourth order Runge–Kutta method. Similarly, we only show the results of one experiment after computing information flows multiple times by using different parameters. Suppose that is distributed as a Gaussian process with a mean u and a covariance matrix in the initial state:

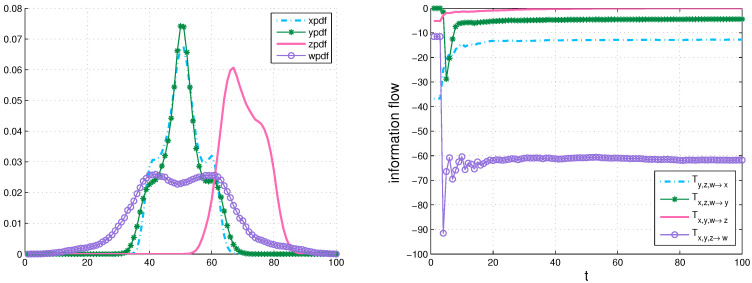

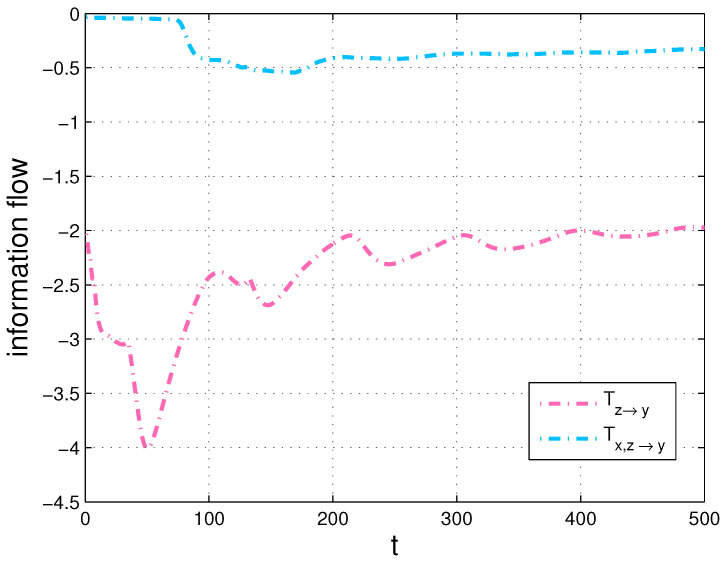

Because the smooth Chua’s circuit has highly non-coherent dynamics [46], we discretize the sample space into bins to adequately capture the information transfer and the behavior of the system over time. Sample data and the estimated marginal densities are shown in Figure 8; the estimates are consistent with the dynamical behavior of the system, such as its symmetry. We use formula (12) to compute the information transfers among the three variables of Chua’s system. Firstly, we discuss the coupling effect from two components to the other component; the results are shown in Figure 9.

Figure 8.

Left panel: a sample data ( and Z) of the Chua’s system generated by a fourth order Runge–Kutta method with ; Right panel: the purple line, black line, and blue line represent an estimated marginal density of by counting bins, respectively.

Figure 9.

Left panel: the multivariate information flow of the Chua’s system: green dot-dash line: ; red dot-dash line: ; blue dot-dash line: (in nats per unit time); Right panel: the information strength of transfer in the Chua’s system: green dot-dash line: ; red dot-dash line: ; blue dot-dash line: (arbitrary unit).

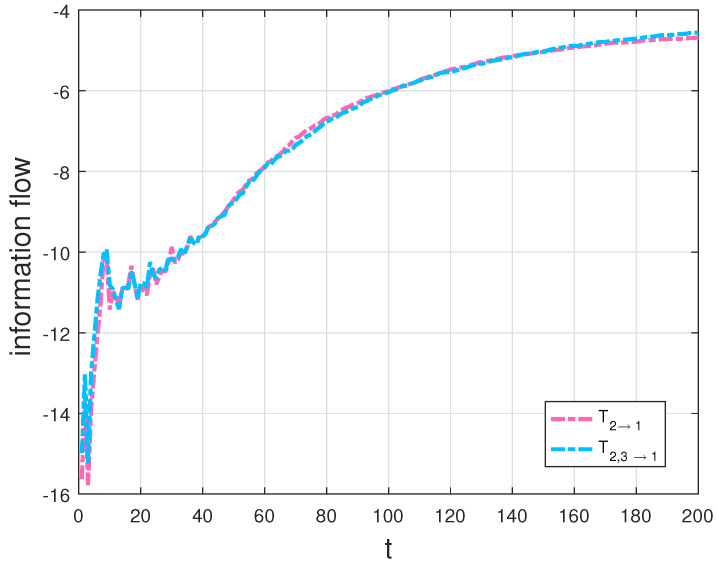

Secondly, we compute the transfers, and , then compare with the transfer, and with in Figure 10 and Figure 11, respectively. We also show the corresponding results of the strength of information transfer among the components with a Gauss distribution initialization and the averaged density over time in Figure 9.

Figure 10.

and in the Chua’s system (in nats per unit time).

Figure 11.

and in the Chua’s system (in nats per unit time).

Since X causes Y but does not cause Z in the Chua’s system, the numerical results of Figure 10 and Figure 11 conform with the derivations of Equations (13) and (14) in Section 3.1. More specifically, there is almost no difference between the two flows in Figure 10, however, there exists large disparity between the two flows in Figure 11. The results also verify our formalisms. In addition, as shown in Figure 10 and Figure 11, we can see that the information flow is different from due to the asymmetry of information transfer. All simulations are performed on a 64-bit Matlab R2016a environment. We are able to estimate that one variable makes another variable more uncertain or more predictable via the generalized formalisms. Besides, we can identify sensitive variables by computing information transfers among the variables in dynamical systems.

The discoveries of both the Lorenz system and Chua’s system were extraordinary and changed scientific thinking; compared with the Lorenz system, Chua’s system is also embodied in engineering systems [46]. It can be used as another means to research, experiment with and think about humanity, identity, art, etc. [47,48]. In studies that visualize the dynamics of Chua’s circuit through computational models, the quantitative transformations of behavior are taken into account [46]. The multi-dimensional formalisms of information flow improve our ability to estimate, predict and control complex systems in many diverse fields. Furthermore, most existing approaches to the control and synchronization of chaotic systems require adjusting the parameters of the model and estimating system parameters, which has become an active area of research [49]; an additional benefit of the multi-dimensional formalisms of information flow is parameter estimation. We can compute the information flows of the simulation model with different sets of parameters, repeat the procedure to obtain a group of results, and then, by comparing the rates of change, determine the parameters that best meet the actual needs and gain insight into the complex behavior of the model.

5. Conclusions

Based on the LK2005 formalism, we propose a rigorous and general formalism of the information transfer among the components of multi-dimensional complex dynamical systems, for both continuous flows and discrete mappings. Information transfers are quantified through entropy transfers from some components to another component, enabling us to better understand the physical mechanism underlying the superficial behavior and to explore hidden information in the evolution of multi-dimensional dynamical systems. When the generalized formalisms are reduced to 2D cases, the results are consistent with the LK2005 formalism. We mainly focus on 3D systems and apply the formalisms to investigate information transfers in the Lorenz system and Chua’s system. In these two cases, we show the information flows over the whole evolution and the strength of information transfer at different planes, which indicate how uncertainty propagates and how essential dynamical information is transported in the system. The experimental results for the generalized formalisms conform with observations and empirical analysis in the literature, and their application may benefit many diverse fields. Compared with the quantification of information transfer between two variables [4] and the transfer mutual information method [32], the generalized formalisms are helpful for analyzing the relationships among the variables of dynamical systems and for research on complex systems. Moreover, since the formalism is built on the statistical nature of information, it has the potential to support sensitivity analysis in multi-dimensional complex dynamical systems and to advance our ability to estimate, predict and control these systems. In practice, for complex high-dimensional dynamical systems, it is not easy to give the dynamics analytically. Considering that many critical data-driven problems are primed to take advantage of progress in the data-driven discovery of dynamics [35], we are developing a dynamics-free formulation to analyze the information flows of multi-dimensional dynamical systems.

In the future, the formalism will be further generalized to high-dimensional stochastic dynamical systems and time-delay systems. Meanwhile, future research should investigate how the information flow can be deployed as a new indicator in the framework of dynamic sensitivity analysis.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (Grant No. 11771450).

Appendix A. Discrete Mappings

Now consider a 3D transformation

| (A1) |

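and the Frobenius–Perron operator (F-P operator) [18] $\mathcal{P}$, which steers the evolution of its density. Loosely, given a density $\rho$, $\mathcal{P}\rho$ is defined such that

$$\int_{w} \mathcal{P}\rho(\mathbf{x})\,d\mathbf{x} = \int_{\Phi^{-1}(w)} \rho(\mathbf{x})\,d\mathbf{x},$$

where $w$ represents any subset of the domain $\Omega$ of the transformation $\Phi$ in (A1). When $\Phi$ is invertible, $\mathcal{P}\rho$ can be expressed explicitly as $\mathcal{P}\rho(\mathbf{x}) = \rho[\Phi^{-1}(\mathbf{x})]\,|J^{-1}|$, where $J^{-1}$ is the determinant of the Jacobian matrix of $\Phi^{-1}$. Similar to the two-dimensional case, the entropy increases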

concisely rewritten as

| (A2) |
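As a numerical sanity check on the invertible case, the sketch below assumes that the entropy change takes the standard change-of-variables form, namely the expected logarithm of the absolute Jacobian determinant of the map. It uses a hypothetical linear 3D map and a Gaussian density, both chosen only for illustration and not taken from the paper, because in that setting the entropy before and after the map is available in closed form and the Jacobian is constant.

```python
import numpy as np

def gaussian_entropy(cov):
    """Differential entropy (in nats) of a Gaussian with covariance matrix `cov`."""
    n = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (n * np.log(2.0 * np.pi * np.e) + logdet)

# Hypothetical invertible linear 3D map x -> A x (illustrative values only).
A = np.array([[1.0, 0.5, 0.0],
              [0.0, 2.0, 0.3],
              [0.2, 0.0, 1.5]])

# Hypothetical covariance of the initial Gaussian density (positive definite).
cov0 = np.array([[1.0, 0.2, 0.0],
                 [0.2, 0.5, 0.1],
                 [0.0, 0.1, 2.0]])

# A Gaussian pushed through a linear map stays Gaussian with covariance A cov0 A^T.
cov1 = A @ cov0 @ A.T

# Entropy change over one step of the map.
delta_h = gaussian_entropy(cov1) - gaussian_entropy(cov0)

# The Jacobian of a linear map is constant, so the expectation of log|J| is log|det A|.
expected_log_jac = np.log(abs(np.linalg.det(A)))

print("entropy change:", delta_h)
print("E log|J|      :", expected_log_jac)
```

The two printed values should agree to floating-point precision. For a nonlinear invertible map the Jacobian varies with the state, so the expectation would have to be estimated, for example by averaging the log absolute Jacobian determinant over samples drawn from the density.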

Meantime, in the case when is invertible of 3D transformations,

| (A3) |

The entropy of increases as

| (A4) |

where is the marginal density of

When is noninvertible,

| (A5) |

where is the operator when is frozen as parameters in . It is easy to find that Equation (A5) reduces to Equation (A3) when is invertible. Therefore, the entropy transfers from to can be unified into a form

| (A6) |

where with different at the same time.

Just as the former case with continuous variables, the information flow obtained by Equation (16) has the following property:

Theorem A1.

If is independent of in system (A1) with different , then

The detailed proof of Theorem A1 is presented in Appendix A.1. Moreover, the formalism of 3D system can be reduced to the formalism in 2D cases with the previously mentioned conditions being satisfied. For example, when has no dependence on ,

In particular, when has no dependence on and ,

Furthermore, when has dependence on and ,

The above formalisms can also be generalized to n-dimensional systems by efficient processing of the relationship between the operator

and the entropy evolution at different time steps. For example, the transfer of entropy from to is

Here is the simplified script of Similar to the continuous cases, the generalized version of the property of Theorem A1 is also suitable for multi-dimensional discrete mappings.

Appendix A.1.

Proof of Theorem A1

We only need to show that when is independent of in 3D system,

According to Equation (A4) and Equation (A5), we only need to prove

According to the definition of the operator and the condition that is independent of at the same time,

because

where So

□

Author Contributions

Y.Y. proposed the original idea, implemented the experiments, analyzed the data and wrote the paper. X.D. contributed to the theoretical analysis and simulation designs and revised the manuscript. All authors read and approved the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Schreiber T. Measuring Information Transfer. Phys. Rev. Lett. 2000;85:461. doi: 10.1103/PhysRevLett.85.461.

- 2. Horowitz J.M., Esposito M. Thermodynamics with Continuous Information Flow. Phys. Rev. X. 2014;4:031015. doi: 10.1103/PhysRevX.4.031015.

- 3. Cafaro C., Ali S.A., Giffin A. Thermodynamic aspects of information transfer in complex dynamical systems. Phys. Rev. E. 2016;93:022114. doi: 10.1103/PhysRevE.93.022114.

- 4. Gencaga D., Knuth K.H., Rossow W.B. A Recipe for the Estimation of Information Flow in a Dynamical System. Entropy. 2015;17:438–470. doi: 10.3390/e17010438.

- 5. Kwapien J., Drozdz S. Physical approach to complex systems. Phys. Rep. 2012;515:115–226. doi: 10.1016/j.physrep.2012.01.007.

- 6. Liang X.S., Kleeman R. Information Transfer between Dynamical Systems Components. Phys. Rev. Lett. 2005;95:244101. doi: 10.1103/PhysRevLett.95.244101.

- 7. Kleeman R. Information flow in ensemble weather predictions. J. Atmos. Sci. 2007;64:1005–1016. doi: 10.1175/JAS3857.1.

- 8. Touchette H., Lloyd S. Information-Theoretic Limits of Control. Phys. Rev. Lett. 2000;84:1156. doi: 10.1103/PhysRevLett.84.1156.

- 9. Touchette H., Lloyd S. Information-theoretic approach to the study of control systems. Phys. A. 2004;331:140. doi: 10.1016/j.physa.2003.09.007.

- 10. Sun J., Cafaro C., Bollt E.M. Identifying coupling structure in complex systems through the optimal causation entropy principle. Entropy. 2014;16:3416–3433. doi: 10.3390/e16063416.

- 11. Cafaro C., Lord W.M., Sun J., Bollt E.M. Causation entropy from symbolic representations of dynamical systems. CHAOS. 2015;25:043106. doi: 10.1063/1.4916902.

- 12. Majda A., Kleeman R., Cai D. A Framework for Predictability through Relative Entropy. Methods Appl. Anal. 2002;9:425–444.

- 13. Haven K., Majda A., Abramov R. Quantifying predictability through information theory: Small-sample estimation in a non-Gaussian framework. J. Comp. Phys. 2005;206:334–362. doi: 10.1016/j.jcp.2004.12.008.

- 14. Abramov R.V., Majda A.J. Quantifying Uncertainty for Non-Gaussian Ensembles in Complex Systems. SIAM J. Sci. Stat. Comp. 2004;26:411–447. doi: 10.1137/S1064827503426310.

- 15. Kaiser A., Schreiber T. Information transfer in continuous processes. Phys. D. 2002;166:43–62. doi: 10.1016/S0167-2789(02)00432-3.

- 16. Abarbanel H.D.I., Masuda N., Rabinovich M.I., Tumer E. Distribution of Mutual Information. Phys. Lett. A. 2001;281:368–373. doi: 10.1016/S0375-9601(01)00128-1.

- 17. Wyner A.D., Mackey M.C. A definition of conditional mutual information for arbitrary ensembles. Inf. Control. 1978;38:51–59. doi: 10.1016/S0019-9958(78)90026-8.

- 18. Lasota A., Mackey M.C. Chaos, Fractals, and Noise: Stochastic Aspects of Dynamics. Springer; New York, NY, USA: 1994.

- 19. Liang X.S., Kleeman R. A rigorous formalism of information transfer between dynamical system components. I. Discrete mapping. Physica D. 2007;231:1–9. doi: 10.1016/j.physd.2007.04.002.

- 20. Liang X.S., Kleeman R. A rigorous formalism of information transfer between dynamical system components. II. Continuous flow. Physica D. 2007;227:173–182. doi: 10.1016/j.physd.2006.12.012.

- 21. Liang X.S. Information flow within stochastic dynamical systems. Phys. Rev. E. 2008;78:031113. doi: 10.1103/PhysRevE.78.031113.

- 22. Liang X.S. Uncertainty generation in deterministic fluid flows: Theory and applications with an atmospheric stability model. Dyn. Atmos. Oceans. 2011;52:51–79. doi: 10.1016/j.dynatmoce.2011.03.003.

- 23. Liang X.S. The Liang-Kleeman information flow: Theory and application. Entropy. 2013;15:327–360. doi: 10.3390/e15010327.

- 24. Liang X.S. Unraveling the cause-effect relation between time series. Phys. Rev. E. 2014;90:052150. doi: 10.1103/PhysRevE.90.052150.

- 25. Liang X.S. Information flow and causality as rigorous notions ab initio. Phys. Rev. E. 2016;94:052201. doi: 10.1103/PhysRevE.94.052201.

- 26. Majda A.J., Harlim J. Information flow between subspaces of complex dynamical systems. Proc. Natl. Acad. Sci. USA. 2007;104:9558–9563. doi: 10.1073/pnas.0703499104.

- 27. Zhao X.J., Shang P.J. Measuring the uncertainty of coupling. Europhys. Lett. 2015;110:60007. doi: 10.1209/0295-5075/110/60007.

- 28. Zhao X.J., Shang P.J., Huang J.J. Permutation complexity and dependence measures of time series. Europhys. Lett. 2013;102:40005. doi: 10.1209/0295-5075/102/40005.

- 29. Iooss B., Lemaitre P. A review on global sensitivity analysis methods. Oper. Res. Comput. Sci. Interfaces. 2014;59:101–122.

- 30. Borgonovo E., Plischke E. Sensitivity analysis: A review of recent advances. Eur. J. Oper. Res. 2016;248:869–887. doi: 10.1016/j.ejor.2015.06.032.

- 31. Auder B., Crecy A.D., Iooss B., Marques M. Screening and metamodeling of computer experiments with functional outputs. Application to thermal–hydraulic computations. Reliab. Eng. Syst. Safety. 2012;107:122–131. doi: 10.1016/j.ress.2011.10.017.

- 32. Zhao X.J., Shang P.J., Lin A.J. Transfer mutual information: A new method for measuring information transfer to the interactions of time series. Physica A. 2017;467:517–526. doi: 10.1016/j.physa.2016.10.027.

- 33. Lorenz E.N. Deterministic nonperiodic flow. J. Atmos. Sci. 1963;20:130–141. doi: 10.1175/1520-0469(1963)020<0130:DNF>2.0.CO;2.

- 34. Nepomuceno E.G., Mendes E.M.A.M. On the analysis of pseudo-orbits of continuous chaotic nonlinear systems simulated using discretization schemes in a digital computer. Chaos Soliton Fract. 2017;95:21–32. doi: 10.1016/j.chaos.2016.12.002.

- 35. Brunton S.L., Brunton B.W., Proctor J.L., Kaiser E., Kutz J.N. Chaos as an intermittently forced linear system. Nat. Commun. 2017. doi: 10.1038/s41467-017-00030-8.

- 36. Campbell K., McKay M.D., Williams B.J. Sensitivity analysis when model outputs are functions. Reliab. Eng. Syst. Saf. 2006;91:1468–1472. doi: 10.1016/j.ress.2005.11.049.

- 37. Lamboni M., Monod H., Makowski D. Multivariate sensitivity analysis to measure global contribution of input factors in dynamic models. Reliab. Eng. Syst. Saf. 2011;96:450–459. doi: 10.1016/j.ress.2010.12.002.

- 38. Marrel A., Iooss B., Jullien M., Laurent B., Volkova E. Global sensitivity analysis for models with spatially dependent outputs. Environmetrics. 2011;22:383–397. doi: 10.1002/env.1071.

- 39. Loonen R.C.G.M., Hensen J.L.M. Dynamic sensitivity analysis for performance-based building design and operation; Proceedings of the BS2013: 13th Conference of International Building Performance Simulation Association; Chambéry, France. 26–28 August 2013; pp. 299–305.

- 40. Richard R., Casas J., McCauley E. Sensitivity analysis of continuous-time models for ecological and evolutionary theories. Theor. Ecol. 2015. doi: 10.1007/s12080-015-0265-9.

- 41. Chua L.O., Komuro M., Matsumoto T. The double scroll family: Parts I and II. IEEE Trans. Circuits Syst. 1986;CAS-33(11):1072–1118. doi: 10.1109/TCS.1986.1085869.

- 42. Chua L.O. The genesis of Chua’s circuit. Archiv fur Elektronik und Ubertragungstechnik. Volume 46. University of California; Berkeley, CA, USA: 1992. pp. 250–257.

- 43. Chua L.O. A zoo of strange attractor from the canonical Chua’s circuits; Proceedings of the 35th Midwest Symposium on Circuits and Systems; Washington, DC, USA. 1992. pp. 916–926.

- 44. Liao X.X., Yu P., Xie S.L., Fu Y.L. Study on the global property of the smooth Chua’s system. Int. J. Bifurcat. Chaos Appl. Sci. Eng. 2006;16:2815–2841. doi: 10.1142/S0218127406016483.

- 45. Zhou G.P., Huang J.H., Liao X.X., Cheng S.J. Stability Analysis and Control of a New Smooth Chua’s System. Abstract Appl. Anal. 2013;2013. doi: 10.1155/2013/620286. 10 pages.

- 46. Bertacchini F., Bilotta E., Gabriele L., Pantano P., Tavernise A. Toward the Use of Chua’s Circuit in Education, Art and Interdisciplinary Research: Some Implementation and Opportunities. LEONARDO. 2013;46:456–463. doi: 10.1162/LEON_a_00641.

- 47. Bilotta E., Blasi G.D., Stranges F., Pantano P. A Gallery of Chua Attractors. Part V. Int. J. Bifurcat. Chaos. 2007;17:1383–1511. doi: 10.1142/S0218127407018099.

- 48. Adamo A., Tavernise A. Generation of Ego dynamics; Proceedings of the VIII International Conference on Generative Art; Milan, Italy. 15–17 December 2007.

- 49. Kingni S.T., Jafari S., Simo H., Woafo P. Three-dimensional chaotic autonomous system with only one stable equilibrium: Analysis, circuit design, parameter estimation, control, synchronization and its fractional-order form. Eur. Phys. J. Plus. 2014;129. doi: 10.1140/epjp/i2014-14076-4.