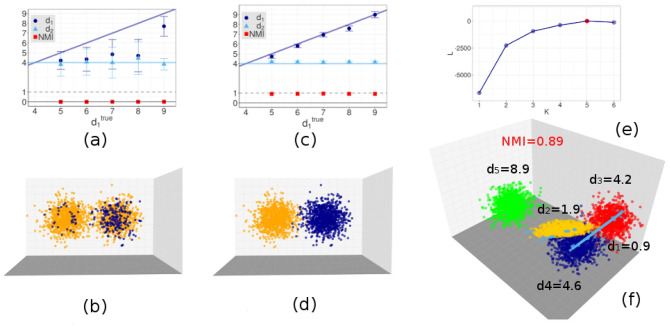

Figure 1.

Results on simple artificial data sets. We consider sets of points drawn from mixtures of multivariate Gaussians in different dimensions. In all cases, we perform iterations of Gibbs sampling and repeat the sampling times, starting from different random configurations of the parameters. We then retain the run with the highest maximum average log-posterior value. Panels (a,b): points are drawn from two Gaussians in different dimensions. The higher dimension varies from to , while the lower dimension is fixed at . points are sampled from each manifold. We fix . We show results obtained with , namely without enforcing neighborhood uniformity (here , but since the value of q is irrelevant). In panel (a) we plot the estimated intrinsic dimensions (IDs) of the manifolds (dots: posterior means; error bars: posterior standard deviations) and the mutual information (MI) between our classification and the ground truth. In panel (b) we show the assignment of points to the low-dimensional (blue) and high-dimensional (orange) manifolds for the case (points are projected onto the first 3 coordinates). Similar figures are obtained for other values of . Panels (c,d): same setting as in panels (a,b), but now we enforce neighborhood uniformity, using and . Points are now correctly assigned to the manifolds, whose IDs are properly estimated. Panels (e,f): points are drawn from five Gaussians in dimensions , , , , . points are sampled from each manifold. Some pairs of manifolds intersect, since their centers are one standard deviation apart. The analysis is performed assuming , , and with different values of K. In panel (e) we show the average log-posterior value as a function of K. The maximum is attained at the ground-truth value . In panel (f) we show the assignment of points to the five manifolds in different colors (points are projected onto the first 3 coordinates).
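The evaluation protocol in this caption (generating Gaussians of different dimension, keeping the best of several Gibbs restarts by average log-posterior, choosing K by the same criterion, and scoring a classification against the ground truth with MI) can be sketched as below. This is a minimal Python/NumPy sketch under stated assumptions, not the authors' code: the Gibbs sampler itself is not shown, per-iteration log-posterior traces are assumed to be available as arrays, "highest maximum average" is read as the highest mean log-posterior over the retained iterations, MI is computed unnormalized in nats, embedding a d-dimensional Gaussian by zero-padding the remaining coordinates is one possible construction, and every function name (`gaussian_on_subspace`, `mutual_information`, `best_run`, `select_k`) is hypothetical.

```python
import numpy as np


def gaussian_on_subspace(n, d, D, center, rng):
    """n points from an isotropic Gaussian spanning the first d of D coordinates.

    Hypothetical construction: the remaining D - d coordinates are zero before
    shifting by `center` (a length-D array), so the points have intrinsic dimension d.
    """
    x = np.zeros((n, D))
    x[:, :d] = rng.standard_normal((n, d))
    return x + center


def mutual_information(labels_a, labels_b):
    """Mutual information (in nats) between two hard labelings of the same points."""
    a = np.asarray(labels_a)
    b = np.asarray(labels_b)
    # Map arbitrary label values to 0..k-1 so they can index a table
    _, a_idx = np.unique(a, return_inverse=True)
    _, b_idx = np.unique(b, return_inverse=True)
    joint = np.zeros((a_idx.max() + 1, b_idx.max() + 1))
    np.add.at(joint, (a_idx, b_idx), 1.0)    # contingency table of counts
    p_ab = joint / a.size                    # joint distribution
    p_a = p_ab.sum(axis=1, keepdims=True)    # marginal of labeling a, shape (ka, 1)
    p_b = p_ab.sum(axis=0, keepdims=True)    # marginal of labeling b, shape (1, kb)
    nz = p_ab > 0                            # terms with p_ab = 0 contribute 0
    return float(np.sum(p_ab[nz] * np.log(p_ab[nz] / (p_a @ p_b)[nz])))


def average_log_posterior(trace, burn_in=0):
    """Mean log-posterior over the Gibbs iterations kept after burn-in."""
    return float(np.mean(np.asarray(trace, dtype=float)[burn_in:]))


def best_run(traces, burn_in=0):
    """Index and score of the restart with the highest average log-posterior."""
    scores = [average_log_posterior(t, burn_in) for t in traces]
    return int(np.argmax(scores)), max(scores)


def select_k(traces_by_k, burn_in=0):
    """K whose best restart attains the highest average log-posterior."""
    best = {k: best_run(traces, burn_in)[1] for k, traces in traces_by_k.items()}
    return max(best, key=best.get)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Two manifolds of different intrinsic dimension in the same ambient space
    low = gaussian_on_subspace(100, 2, 6, np.zeros(6), rng)
    high = gaussian_on_subspace(100, 6, 6, np.zeros(6), rng)
    print(low.shape, high.shape)

    truth = np.array([0, 0, 0, 1, 1, 1])
    estimate = np.array([1, 1, 1, 0, 0, 0])  # same partition, different label names
    print(mutual_information(truth, estimate))  # equals log(2)
```

MI is invariant to how clusters are named, which is why it suits comparing a sampler's labels with the ground truth; a normalized variant (dividing by a function of the two label entropies) would bound the score in [0, 1] if that is the intended scale.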