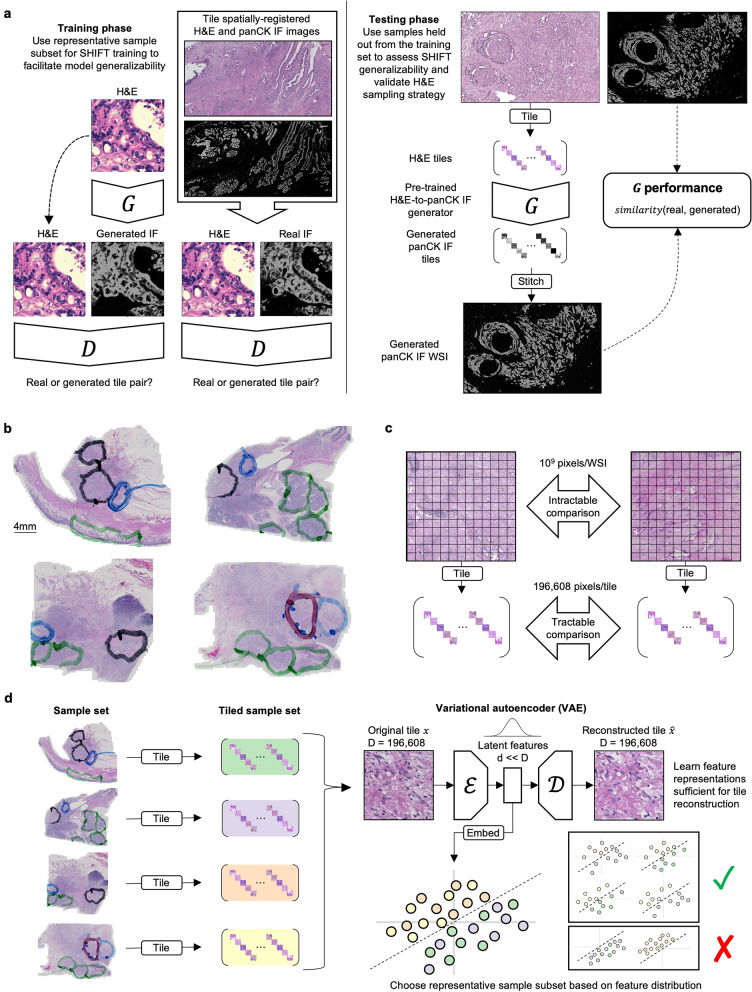

Figure 1.

Overview of virtual IF staining with SHIFT and feature-guided H&E sample selection. (a) Schematic of SHIFT modeling for training and testing phases. The generator network generates virtual IF tiles conditioned on H&E tiles. The discriminator network learns to discriminate between real and generated image pairs. See also Supplementary Fig. S2. (b) Four heterogeneous samples of H&E-stained PDAC biopsy tissue used in the current study. Pathologist annotations indicate regions that are benign (green), grade 1 PDAC (black), grade 2/3 PDAC (blue), and grade 2/3 adenosquamous (red). (c) Direct comparison of H&E whole slide images (WSIs) is intractable because each WSI can contain billions of pixels. Decomposing WSIs into sets of non-overlapping 256 × 256 pixel tiles makes it tractable to compare the feature-wise distributions of the tile sets. (d) Schematic of feature-guided H&E sample selection. First, H&E samples are decomposed into 256 × 256 pixel tiles. Second, all H&E tiles are used to train a variational autoencoder (VAE) to learn feature representations for all tiles; for each H&E tile in the dataset (256 × 256 × 3 = 196,608 values), the encoder learns a compact but expressive feature representation that maximizes the ability of the decoder to reconstruct the original tile from its feature representation (see “Methods”). Third, the tile feature representations are used to determine which samples are most representative of the whole dataset.
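
As a rough illustration of the tiling step described in panels (c) and (d), the following Python sketch splits an image array into non-overlapping 256 × 256 tiles. It is not the authors' code: `tile_image` is a hypothetical helper, a small NumPy array stands in for a WSI, three color channels are assumed, and dropping partial tiles at the image edges is an assumption made for simplicity.

```python
import numpy as np


def tile_image(image, tile_size=256):
    """Split an H x W x C array into non-overlapping square tiles.

    Hypothetical helper for illustration. Edge regions that do not fill
    a complete tile are dropped (an assumption, not the paper's stated rule).
    Returns an array of shape (n_tiles, tile_size, tile_size, C),
    ordered row by row.
    """
    h, w, c = image.shape
    rows, cols = h // tile_size, w // tile_size
    # Crop to the largest region that is an exact multiple of tile_size.
    cropped = image[: rows * tile_size, : cols * tile_size]
    # Split both spatial axes into (tile index, within-tile offset).
    grid = cropped.reshape(rows, tile_size, cols, tile_size, c)
    # Bring the two tile-index axes to the front, then flatten them.
    grid = grid.swapaxes(1, 2)
    return grid.reshape(rows * cols, tile_size, tile_size, c)


# Toy stand-in for an RGB whole slide image (real WSIs are far larger).
wsi = np.zeros((600, 1000, 3), dtype=np.uint8)
tiles = tile_image(wsi)

print(tiles.shape)    # (6, 256, 256, 3): 2 rows x 3 columns of tiles
print(tiles[0].size)  # 196608 values per tile (256 * 256 * 3)
```

Each tile in the returned array could then be passed to an encoder to obtain its feature representation; that stage is outside the scope of this sketch.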