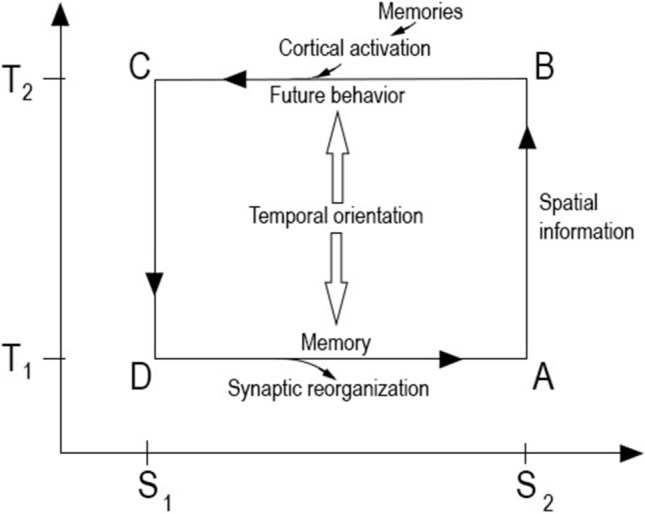

Fig. 3.

The reversed Carnot cycle illustrated on a temperature-entropy plane. Through self-regulation, the cycle operates between the high-frequency evoked state (T2) and the resting state (T1). The horizontal axis represents entropy. During the compression phase (AB), a stimulus raises the oscillation frequencies. The energy state of the synaptic potentials then allows the electrical flow to spread through the cortex (BC). The resulting potential reverses the direction of the electrical flow (CD), and these slower oscillations expand to form a response. During DA, synaptic reorganization accumulates complexity as memory, which prepares the neural system to respond better to future stimuli. Electrical activity also restores the high-entropy resting state. In deep learning, computation likewise depends on the depth and the number of connections of the network, and its phases of information processing closely resemble this cycle of neural computation.