Abstract

Breast cancer is the most common malignancy diagnosed in women. Early detection and diagnosis greatly improve the chance of recovery and thereby reduce the mortality rate. Preliminary tests such as non-invasive radiological imaging using ultrasound, mammography, and MRI are widely used for the diagnosis of breast cancer. However, histopathological analysis of a breast biopsy specimen remains indispensable and is considered the gold standard for confirming cancer. With advances in digital computing capability, memory capacity, and imaging modalities, the development of powerful computer-aided analytical techniques for histopathological data has increased dramatically. These automated techniques help to alleviate the laborious work of the pathologist and to improve the reproducibility and reliability of interpretation. This paper reviews and summarizes digital image analysis algorithms applied to histopathological breast cancer images for nuclear atypia scoring and explores future possibilities. Algorithms for nuclear pleomorphism scoring of breast cancer can be broadly grouped into two categories: handcrafted feature-based and learned feature-based. Handcrafted feature-based algorithms mainly involve computational steps such as image pre-processing, nuclei segmentation, feature extraction, feature selection, and machine learning–based classification. However, most recent algorithms are based on learned features, extracting high-level abstractions directly from the histopathological images using deep learning techniques. In this paper, we discuss the various algorithms applied to nuclear pleomorphism scoring of breast cancer, discuss the challenges to be addressed, and outline the importance of benchmark datasets.
A comparative analysis of some prominent works on breast cancer nuclear atypia scoring is carried out on a benchmark dataset, which enables quantitative measurement and comparison of the different features and algorithms used for breast cancer grading. The results show that further improvements are still required before an automated cancer grading system is suitable for clinical application.

Keywords: Nuclear pleomorphism, Nuclear atypia scoring, Breast cancer, Histopathological image analysis

Introduction

Cancer is a group of diseases in which cells divide abnormally and tend to proliferate uncontrollably. If this proliferation is not controlled, it may result in the death of the patient. Although the exact cause of this abnormality is still not fully understood, it is generally attributed to mutations in the DNA within the cells. Major causes of these mutations include smoking, carcinogenic chemicals, exposure to radiation, and hormonal imbalances. Cancer is of great concern today because of the rapid increase in the number of cancer patients. According to the WHO, cancer is now the second leading cause of death globally and was responsible for around 8.8 million deaths in 2015; nearly 1 in 6 deaths worldwide is due to cancer [20]. Mouth and prostate cancers are the most common malignancies among men, whereas breast cancer is the most prevalent among women, accounting for almost 25% of all cancers in women worldwide. According to [45], breast cancer ranks highest among Indian women, with an incidence of 25.8 per 100,000 women and a mortality of 12.7 per 100,000 women.

Histopathologic Slide Preparation

The death rate from breast carcinoma can be considerably reduced by early and timely diagnosis and treatment. Advances in medical imaging have made it possible to detect breast cancer at an early stage through proper screening, before symptoms appear. The most common screening technique is mammography. However, mammography is not perfect: its rates of false positives and false negatives are high. Women at high risk of breast cancer are advised to have an annual MRI. If either mammography or MRI shows any sign of disease, the only way to confirm it is a breast biopsy. Biopsies are performed either with a needle or through a surgical procedure. In a fine needle aspiration (FNA) biopsy, a very fine needle connected to a syringe is used to collect the sample for testing. In a core needle biopsy, a larger needle is used to collect a sample of the lesion. In certain cases, surgery is performed to remove the lump for biopsy. The collected samples are then processed, sectioned, placed on glass slides, and stained for examination by an expert pathologist under a high-magnification microscope. This manual microscopic examination of processed tissue for signs of disease is termed histopathology (or histology).

Immediately after the specimen is collected from the patient, it undergoes a process known as fixation, which protects the tissue from enzymatic activity and prevents its decay [57]. The most common fixative is formaldehyde, usually used as an aqueous solution called “formalin.” After fixation, the specimen is grossed, processed, and placed and oriented in an embedding mold, ready to be sectioned. Thin sections are cut from the specimen with fine steel blades using a special instrument called a “microtome.” The cells and other tissue elements are usually colorless, so to make the tissue components visible under the microscope they are stained with one or more suitable stains that highlight the tissue structures. The most widely used stain is H&E, which provides distinct structural information. In H&E staining, the tissue specimen is treated with two stains: hematoxylin and eosin. Hematoxylin is a purple-blue dye that binds to nuclear chromatin, giving the nuclei a dark blue color, whereas eosin is an acidic pink dye that binds to the cytoplasm. A typical H&E stained breast biopsy tissue is shown in Fig. 1. The stained sections are covered with a glass coverslip and analyzed under the microscope by an expert pathologist.

Fig. 1.

H&E stained breast biopsy tissue

Sometimes, H&E staining alone may not give a complete picture of the disease. In such cases, additional specialized staining techniques such as immunohistochemical (IHC) staining may be required to gather more histological information. IHC is mostly used for diagnosing the malignancy of a tumor and for determining the stage of tumor growth. IHC helps to identify the cells of origin of a tumor by detecting the presence or absence of specific proteins in the observed tissue sections. Depending on the proteins detected by IHC, specific therapeutic treatments are adopted for the detected cancer type. IHC is often used to identify estrogen receptors (ER), progesterone receptors (PR), and human epidermal growth factor receptor 2 (HER2), which greatly affect cancer proliferation [28, 74].

Visual analysis and grading of these specimens under a microscope by a pathologist is the widely accepted clinical standard for the detection and accurate diagnosis of breast tumors. However, the diagnosis is subjective and strongly influenced by the pathologist's experience, which directly affects critical diagnosis and treatment decisions. With the tremendous increase in the number of cancer patients, manually analyzing large numbers of slides is a highly laborious and time-consuming process for pathologists. This manual diagnosis also often results in inter- and intra-observer variation, inconsistency, and lack of traceability. According to [64], there is a variability of 20% between experienced and novice pathologists in tumor diagnosis. A recent study of diagnostic disagreement among pathologists interpreting breast biopsies reported an overall agreement of only 75.3% [16] between individual and expert diagnoses. Thus, there is a great need for an accurate computer-aided cancer diagnosis and grading system that can overcome intra- and inter-observer inconsistency and thereby improve accuracy and consistency in cancer detection and treatment planning.

Digital Histopathological Image Analysis

Automating cancer diagnosis and grading requires digitization of the histological slides. This digitization of tissue slides is referred to as digital pathology, which can improve the visualization and analysis of tissue slides, the efficiency of pathological diagnosis, and treatment planning. Though the field of digital pathology originated in the 1980s, factors such as slow scanners, high cost, poor displays, and limited memory and network capacity prevented its use in clinical diagnosis [36]. In the early days, specimen slides were digitized using digital cameras mounted on microscopes. The development of the whole-slide imaging scanner in the late 1990s by Wetzel and Gilbertson [71] marked a turning point in digital breast histopathological image analysis. Scanning conventional glass slides to produce digital slides, referred to as whole-slide imaging (WSI), is currently practiced by pathologists worldwide. Some of the most commonly used WSI scanners include 3DHISTECH,1 Hamamatsu,2 GE Omnyx,3 Roche (previously Ventana),4 Philips,5 and Leica (previously Aperio).6 These scanners can scan slides at ×20 or ×40 magnification with a spatial resolution of 0.46 μm/pixel or 0.23 μm/pixel, respectively. The RGB images produced are usually compressed using the JPEG or JPEG2000 standard and stored as multiple resolution layers, which enables fast panning and zooming.
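The link between magnification, spatial resolution, and image size can be made concrete with a little arithmetic. The sketch below is illustrative only: `field_size_pixels` and `best_level` are hypothetical helpers, the 0.23 and 0.46 μm/pixel values are the nominal figures quoted above, and the downsample factors are an assumed example pyramid. Libraries such as OpenSlide report the real per-level factors of a slide (for example through `OpenSlide.level_downsamples`).

```python
from __future__ import annotations


# Hypothetical helpers (not part of any WSI library) relating spatial resolution,
# expressed in microns per pixel ("mpp"), to image size and pyramid levels.

def field_size_pixels(width_um: float, height_um: float, mpp: float) -> tuple[int, int]:
    """Pixels needed to cover a physical field of width_um x height_um microns."""
    return round(width_um / mpp), round(height_um / mpp)


def best_level(base_mpp: float, downsamples: list[float], target_mpp: float) -> int:
    """Index of the coarsest pyramid level whose resolution is still at least as
    fine as target_mpp. Assumes `downsamples` is sorted ascending, starting at 1."""
    best = 0
    for level, factor in enumerate(downsamples):
        # Small tolerance so that e.g. 0.23 * 2 still counts as 0.46.
        if base_mpp * factor <= target_mpp + 1e-9:
            best = level
    return best


if __name__ == "__main__":
    # A 500 x 500 um field at the x40 (0.23 um/pixel) and x20 (0.46 um/pixel) resolutions.
    print(field_size_pixels(500, 500, 0.23))  # (2174, 2174)
    print(field_size_pixels(500, 500, 0.46))  # (1087, 1087)
    # Assumed pyramid with downsample factors 1, 2, 4, 16 over a 0.23 um/pixel base.
    print(best_level(0.23, [1, 2, 4, 16], 1.0))  # 2, i.e. about 0.92 um/pixel
```

Reading from the coarsest level that still meets the required resolution is what makes panning and zooming over a multi-gigapixel slide practical.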

The advent of whole-slide imaging, supported by high image quality, fast image acquisition, increased storage capacity, and fast networking, has driven rapid advances in the field of pathology. With these WSI scanning techniques, it is now possible to automate breast cancer diagnosis using digitized histopathological images and computer-aided diagnosis (CAD) methodologies [27]. CAD techniques began to be used for medical image analysis in the early 1960s, but only during the last decade have they been widely used for histopathological image analysis. The interpretation of pathological images using CAD techniques has now become a powerful tool for a wide range of breast histological image analysis tasks, such as (1) cancer detection, (2) grading of the malignancy level of cancer, or nuclear atypia scoring, (3) nuclei segmentation, and (4) classification of cancer into subtypes.

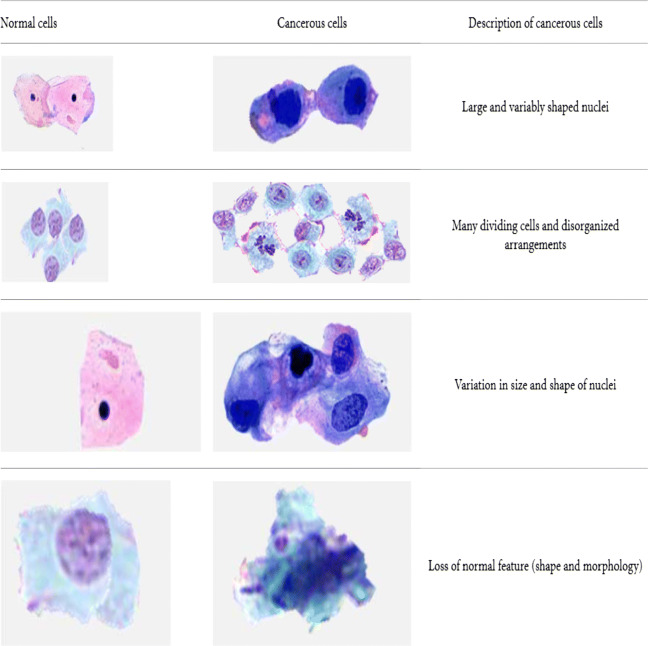

In histopathology, cancer detection normally consists of classifying a biopsy image as cancerous or non-cancerous. Pathologists observe characteristics such as the shape, color, proportion of cytoplasm, and size of the cell nuclei to categorize the specimen. Figure 2 shows the difference between normal and cancerous cells [41]. Histological grading of cancer tissue, often called nuclear atypia scoring, gives an estimate of patient prognosis and is helpful in developing patient-specific treatment plans. The specimen is graded as low-, intermediate-, or high-grade breast cancer according to the degree of tubule formation, nuclear pleomorphism, and mitotic activity. Automated nuclei segmentation and classification, which is often required for cancer detection and grading, is a recurring task that is especially difficult in pathological images, since most nuclei have complex and irregular shapes and sizes. Studying the molecular subtypes of breast cancer is often helpful in planning specific treatments and developing new therapeutic techniques. The cancer subtype profile can be determined from the genetic and molecular information obtained from tumor cells. Breast cancer mainly comprises four significant molecular subtypes: Luminal A, Luminal B, HER2-enriched, and triple-negative/basal-like.

Fig. 2.

Difference between normal and cancerous cells [41]

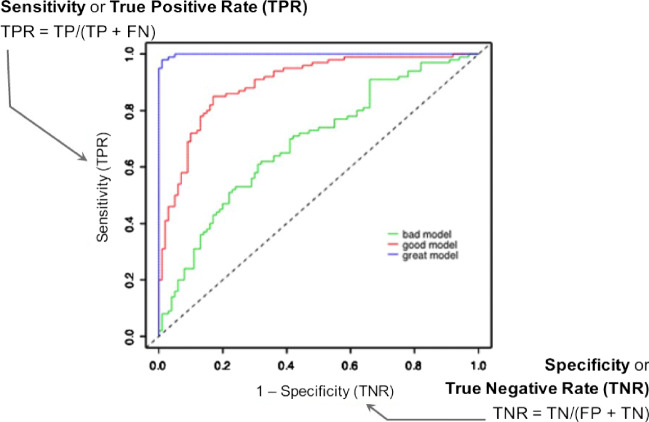

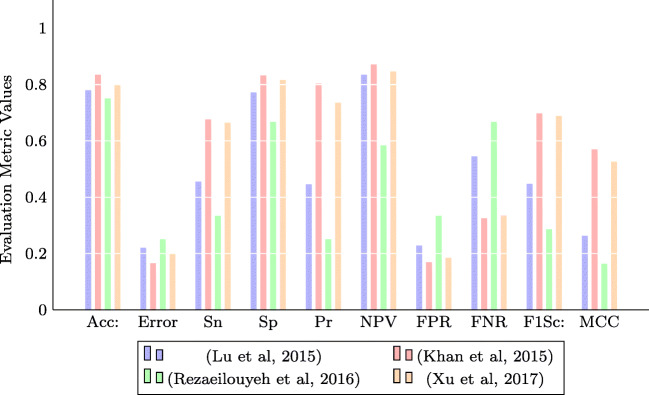

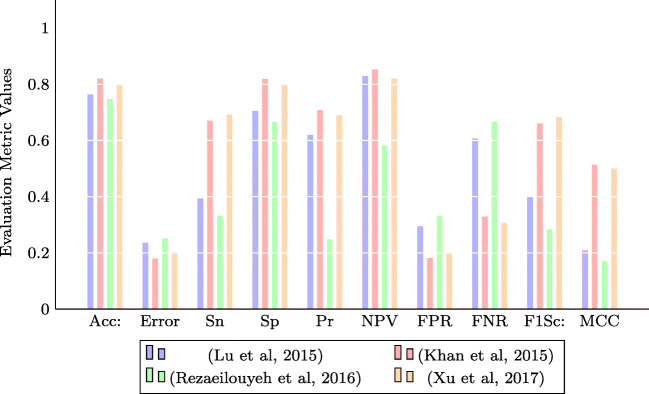

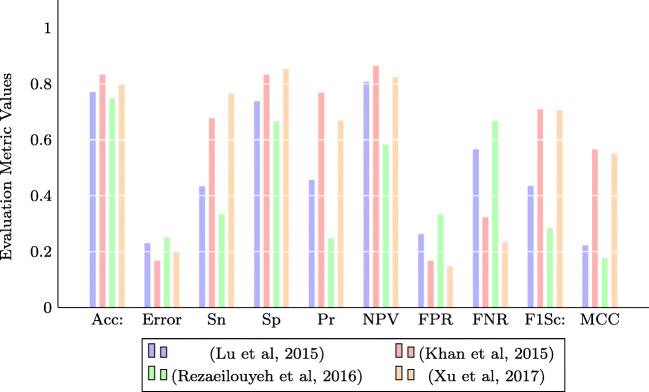

This review mainly focuses on the nuclear atypia scoring aspect of breast carcinoma. The paper explores the different techniques and methodologies used for grading breast cancer, addresses the challenges involved, and discusses the strategies image analysis techniques use to overcome these challenges. The study attempts to summarize recent developments in breast cancer grading and to shed light on how knowledge has evolved within the field, highlighting what has already been done, what is conventionally accepted, what is emerging, and the present status of research in this field. This helps to identify research gaps, i.e., under-researched or unexplored areas where further work is needed. The paper also gives an overview of the evaluation metrics used for the quantitative analysis of nuclear pleomorphism scoring. The paper is organized as follows: the “Histological Grading of Breast Cancer Tissues” section gives an overview of nuclear atypia scoring and its challenges, the “Histological Grading of Breast Cancer or Nuclear Atypia Scoring: Current Status” section deals with the algorithms used for breast histological image grading, the “Evaluation Metrics” section summarizes the main evaluation metrics used for the evaluative analysis of cancer grading, and the “??” section presents the comparative analysis of major nuclear atypia scoring algorithms. A look into the future prospects of breast histopathological image analysis is given in the “Nuclear Atypia Scoring: Future Perspective” section, and conclusions are drawn in the “Conclusion” section.

Histological Grading of Breast Cancer Tissues

Histological grading of cancer characterizes a tumor by how far its tissue differs from normal tissue. Within the last decade, grading of hematoxylin and eosin (H&E) stained histopathology images has become widely accepted as standard practice for breast cancer prediction and prognosis. It provides inexpensive prognostic information about the biological characteristics and clinical behavior of breast cancer. Normal breast tissue contains well-differentiated cells that take specific shapes and structures based on their function within the organ, whereas cancerous cells lose this differentiation. In cancerous breast tissue, the cells become disorganized and less uniform, and cell division occurs uncontrollably. Because cells progressively lose the characteristics observed in normal breast cells, pathologists categorize the cancer as low-, intermediate-, or high-risk depending on whether the cells are well, moderately, or poorly differentiated, respectively. Undifferentiated or poorly differentiated tumors tend to grow and spread faster and are associated with lower survival rates.

Invasive breast cancers spread from their original site (either the lobules or the milk ducts) into the neighboring breast tissue; they constitute almost 70% of all breast cancer cases [30] and usually have a poorer prognosis than the in situ subtypes. Once an invasive breast cancer has been identified, its degree of differentiation can be analyzed further, and the grading depends on factors such as the cancer type. The internationally recognized Nottingham grading system (NGS), a modification of the Scarff-Bloom-Richardson grading system, is the most widely used system for nuclear atypia scoring [23]. NGS is a semi-quantitative evaluation method in which the grade is derived from three morphological factors: the extent of normal tubule formation, nuclear atypia (nuclear pleomorphism), and the count of mitotic cells [17].

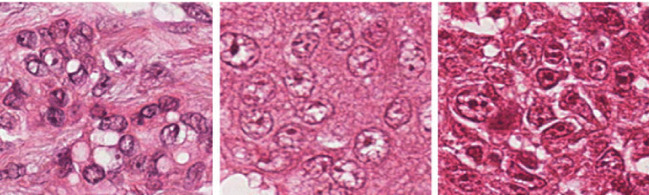

When evaluating tubules, the percentage of the tumor that displays tubular structure is assessed. A score of 1 is given if definite tubules make up more than 75% of the tumor area, a score of 2 if tubule formation covers between 10 and 75% of the area, and a score of 3 if it covers less than 10%. For nuclear pleomorphism, a score of 1 is assigned when the nuclei are small, with a regular outline and a uniform distribution of nuclear chromatin. A score of 2 is given for cells with open, vesicular nuclei showing visible nucleoli and moderate variability in both size and shape. Cells with vesicular nuclei, prominent (often multiple) nucleoli, and marked variation in size and shape are assigned a score of 3. The mitotic count is the number of mitotic cells found in 10 high-power fields (HPFs); a higher count implies a higher-grade cancer. A score of 1 is given for up to 9 mitoses per 10 HPFs, a score of 2 for 10 to 19 mitoses, and a score of 3 for 20 or more mitoses. Table 1 gives a summary of the Nottingham grading system (NGS) used for breast cancer scoring. The scores of all three features are added to obtain the overall breast cancer grade. A total of 3 to 5 indicates a well-differentiated tumor, considered a grade I tumor. Moderately differentiated cells give a total score of 6 or 7, corresponding to a grade II cancer. Grade III cancers are poorly differentiated, with a total score of 8 or 9. For the nuclear pleomorphism component alone, scores of 1, 2, and 3 correspond to low, moderate, and strong pleomorphism, respectively. Sample images with nuclear atypia scores of 1, 2, and 3 [76] are given in Fig. 3.
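The scoring rules above are simple enough to write down directly. The following minimal sketch encodes them as stated in this section; the function names are hypothetical, and in practice the mitotic-count cut-offs are calibrated to the field area of the microscope used, so the 9/19 thresholds here should be read as the nominal values quoted in the text.

```python
from __future__ import annotations


def tubule_score(tubule_percent: float) -> int:
    """Tubule formation: more than 75% -> 1, 10-75% -> 2, less than 10% -> 3."""
    if tubule_percent > 75:
        return 1
    if tubule_percent >= 10:
        return 2
    return 3


def mitosis_score(mitoses_per_10_hpf: int) -> int:
    """Mitotic count per 10 HPFs: 0-9 -> 1, 10-19 -> 2, 20 or more -> 3."""
    if mitoses_per_10_hpf <= 9:
        return 1
    if mitoses_per_10_hpf <= 19:
        return 2
    return 3


def nottingham_grade(tubule_percent: float, pleomorphism_score: int,
                     mitoses_per_10_hpf: int) -> tuple[int, int]:
    """Return (total score, grade): grade I for 3-5, II for 6-7, III for 8-9."""
    if pleomorphism_score not in (1, 2, 3):
        raise ValueError("nuclear pleomorphism score must be 1, 2, or 3")
    total = (tubule_score(tubule_percent) + pleomorphism_score
             + mitosis_score(mitoses_per_10_hpf))
    grade = 1 if total <= 5 else (2 if total <= 7 else 3)
    return total, grade


if __name__ == "__main__":
    print(nottingham_grade(80, 1, 3))   # (3, 1): well differentiated
    print(nottingham_grade(50, 2, 12))  # (6, 2): moderately differentiated
    print(nottingham_grade(5, 3, 25))   # (9, 3): poorly differentiated
```

The nuclear pleomorphism score is taken as an input here because it is the subjective, pathologist-assigned component that automated nuclear atypia scoring aims to predict.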

Table 1.

Summary of Nottingham grading system (NGS) for breast cancer

| Feature | Score | Description |
|---|---|---|
| Tubule formation | 1 | More than 75% of the tumor forms tubules |
| | 2 | 10–75% of the tumor forms tubules |
| | 3 | Less than 10% of the tumor forms tubules |
| Nuclear atypia | 1 | Small, uniform, and regular nuclei |
| | 2 | Moderate variation in size and shape |
| | 3 | Multiple nucleoli with prominent variation |
| Mitotic count | 1 | 0–9 mitotic cells in 10 HPFs |
| | 2 | 10–19 mitotic cells in 10 HPFs |
| | 3 | 20 or more mitotic cells in 10 HPFs |

Fig. 3.

Sample images with NAS of 1, 2, and 3, respectively [76]

The current manual visual analysis of histopathological slides is highly subject to the individual opinions of the pathologist and suffers from intra- and inter-observer variation, which greatly affects the diagnosis and the treatment prescribed. Hence, it is essential to develop an automated cancer grading system based on quantitative digital image analysis that can circumvent observer variability and thus provide consistent breast cancer diagnosis. Moreover, quantitative analysis of histopathological digital images is essential not only for clinical applications but also for research, where it may help us understand the biological mechanisms and genetic abnormalities associated with the disease.

Challenges in Histopathological Image Analysis

Over the past two decades, extensive research has been carried out on computer-aided cancer diagnosis. Automated CAD analysis of digital histological images can ensure objectivity and reproducibility. Image analysis techniques can extract quantitative information such as the size, shape, and deformities of the cells. The major steps in the digital analysis of histopathological images are pre-processing, segmentation, feature extraction, feature selection, and classification. Many efficient computer-aided image processing algorithms are available for automated image analysis.

Automated cancer diagnosis holds great promise for advanced cancer treatment, but it is not a straightforward task, as a number of challenges must be overcome. Breast histopathological image analysis in particular is challenging because of the numerous variabilities and artifacts introduced during slide preparation and the complex architecture of cancer tissue. Image analysis algorithms depend greatly on the image quality of the digital slides. Artifacts may be introduced in the stained slides for many reasons, such as improper fixation, the type of fixative used, errors in autofocusing, poor dehydration, or uneven microtome sectioning. Slide quality must be ensured by avoiding chatter artifacts and tissue folds caused by faulty microtome sectioning. Proper coverslipping is needed to avoid air bubbles, and a uniform section thickness has to be ensured [24].
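Some of these artifacts can be screened for automatically. As one illustration (a common focus-quality heuristic, not a method described in this review), the variance of a discrete Laplacian drops when a tile is out of focus. The sketch below uses plain NumPy; `laplacian_variance` and `box_blur` are hypothetical helper names, the box blur merely simulates defocus, and any threshold for flagging blurred tiles would have to be tuned per scanner and stain.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of a 4-neighbour discrete Laplacian; lower values suggest blur."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def box_blur(gray: np.ndarray, k: int = 5) -> np.ndarray:
    """Simple k x k mean filter, used here only to simulate defocus."""
    pad = k // 2
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    out = np.zeros(gray.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + gray.shape[0], dx:dx + gray.shape[1]]
    return out / (k * k)


if __name__ == "__main__":
    # Synthetic "in-focus" tile: a checkerboard of 4 x 4 pixel squares.
    sharp = np.kron(np.indices((16, 16)).sum(axis=0) % 2, np.ones((4, 4))) * 255.0
    blurred = box_blur(sharp, k=5)
    print(laplacian_variance(sharp) > laplacian_variance(blurred))  # True
```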

Histological image analysis is also challenged by improper staining and by variations in lighting and scanning conditions, which lead to blurring, noise, and unwanted artifacts in the captured images. Color variations in tissue appearance may arise from differences in the specimen preparation process, stain variations between manufacturers or batches, the use of WSI scanners from different vendors, and differences in the storage time of the stained specimens. The need to standardize the methods and reagents used in histological staining is discussed by Lyon et al. [43]. Uneven distribution of stain within the tissue, due to differences in concentration and timing, also creates problems in processing the stained material. These variations are of greater concern for automated image analysis, so the images need to be normalized beforehand to reduce the effect of staining variations. Stain normalization and color separation were applied to H&E stained images for the first time in [51]. Since then, many explicit stain or color normalization algorithms have been applied as a pre-processing step in histopathological image analysis.
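Color separation is easiest to see with classical color deconvolution (Ruifrok and Johnston), shown here as one representative technique rather than as the method of [51]. Transmitted RGB intensities are converted to optical density (OD) with the Beer-Lambert law, and each OD pixel is modelled as a linear mix of fixed stain vectors. The stain vectors below are the widely quoted generic defaults, assumed rather than calibrated to any scanner, and the function names are hypothetical; scikit-image provides a comparable routine in `skimage.color.rgb2hed`.

```python
import numpy as np

# Generic H&E reference stain vectors in optical-density space (Ruifrok and Johnston);
# assumed defaults, not calibrated to any particular scanner or staining protocol.
STAIN_VECTORS = np.array([
    [0.65, 0.70, 0.29],  # hematoxylin
    [0.07, 0.99, 0.11],  # eosin
    [0.27, 0.57, 0.78],  # third (residual) channel; DAB in the original paper
])
STAIN_VECTORS = STAIN_VECTORS / np.linalg.norm(STAIN_VECTORS, axis=1, keepdims=True)


def rgb_to_od(rgb: np.ndarray, background: float = 255.0) -> np.ndarray:
    """Beer-Lambert conversion of transmitted RGB intensity to optical density."""
    rgb = np.clip(np.asarray(rgb, dtype=np.float64), 1.0, background)
    return -np.log10(rgb / background)


def separate_stains(rgb: np.ndarray) -> np.ndarray:
    """Per-pixel stain concentrations, channel order (H, E, residual)."""
    od = rgb_to_od(rgb).reshape(-1, 3)
    # Each OD row is modelled as concentrations @ STAIN_VECTORS, so invert the matrix.
    conc = od @ np.linalg.inv(STAIN_VECTORS)
    return np.clip(conc, 0.0, None).reshape(np.shape(rgb))


if __name__ == "__main__":
    # Synthesize a pixel stained only with hematoxylin at concentration 0.8.
    od = 0.8 * STAIN_VECTORS[0]
    pixel = 255.0 * 10.0 ** (-od)
    print(separate_stains(pixel))  # expected: close to (0.8, 0.0, 0.0)
```

The hematoxylin channel obtained this way highlights nuclei, which is why it is often used as the input to nuclei segmentation; stain normalization methods typically estimate image-specific stain vectors instead of using fixed defaults.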

During digital scanning of the slides, a uniform light spectrum is used for illumination. However, variations in tissue autofluorescence (AF) and in the microscope setup, together with differences in staining and sample thickness, may cause uneven illumination across the tissue samples. In addition, scanner sensitivity differs across the wavelengths of the illuminating light: cameras usually respond weakly to short-wavelength signals such as blue and strongly to long-wavelength signals such as red. These illumination differences need to be addressed before applying image analysis techniques.
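A standard way to compensate for both effects is flat-field (blank-field) correction. The minimal sketch below assumes that an image of a tissue-free area has been acquired with the same illumination and camera settings (and, optionally, a dark frame); these reference images and the function name `flat_field_correct` are assumptions for illustration. Dividing by the blank field converts each pixel to a transmittance, which removes spatial unevenness and the channel-dependent sensitivity at the same time.

```python
from typing import Optional

import numpy as np


def flat_field_correct(raw: np.ndarray, flat: np.ndarray,
                       dark: Optional[np.ndarray] = None) -> np.ndarray:
    """Per-pixel, per-channel transmittance (raw - dark) / (flat - dark).

    raw:  tissue image, shape (H, W) or (H, W, C)
    flat: image of a blank, tissue-free area acquired with the same settings
    dark: optional image acquired with the light path blocked (camera offset)
    """
    raw = np.asarray(raw, dtype=np.float64)
    flat = np.asarray(flat, dtype=np.float64)
    dark = np.zeros_like(raw) if dark is None else np.asarray(dark, dtype=np.float64)
    gain = np.maximum(flat - dark, 1e-6)  # avoid division by zero
    return np.clip((raw - dark) / gain, 0.0, 1.0)


if __name__ == "__main__":
    h, w = 32, 48
    # Illumination falling off towards the left edge of the field.
    vignette = np.linspace(0.6, 1.0, w)[None, :, None] * np.ones((h, w, 1))
    sensitivity = np.array([1.0, 0.9, 0.7])  # R, G, B: weaker response to blue
    flat = 200.0 * vignette * sensitivity
    raw = 100.0 * vignette * sensitivity     # uniformly stained tissue
    corrected = flat_field_correct(raw, flat)
    print(bool(np.allclose(corrected, 0.5)))  # True: uniform in space and color
```

Multiplying the result by 255 gives a display-ready image; the transmittance form is also the natural input to the optical-density conversion used in color deconvolution.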

For feature extraction, the nuclear structures of the tissue usually need to be segmented before suitable features can be extracted and selected. This nuclei or cell segmentation step is another major challenge because of the complex structure and nature of the tissue specimen. It is of particular concern in high-grade tumors, where cells are poorly differentiated and nuclei are often hollow with broken membranes [33]. Segmentation is especially difficult in specimens with occlusion or with touching, overlapping, or clustered cells, which significantly influences the accuracy of nuclear pleomorphism scoring and cancer diagnosis.

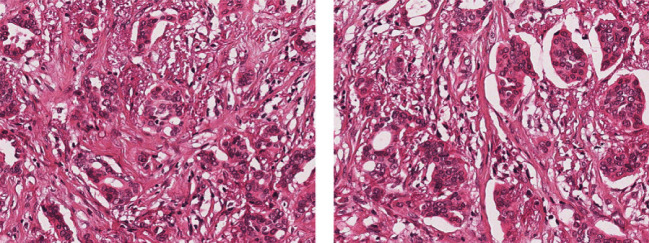

Feature extraction and selection refer to extracting and selecting relevant, important features from the histological images, and they have become an important area of research with the advancement of histopathological image analysis. The features must represent properties of the tumor cells or tissues in a quantifiable manner, and they should be distinctive enough to automatically distinguish cancerous from non-cancerous tissue and to grade it accordingly. This is a challenging task, because images of different grades often look so similar that it is difficult to quantify their differences using extracted features. Figure 4 shows two sample images that are quite similar in appearance and texture but were assigned nuclear atypia scores of 1 and 2, respectively.

Fig. 4.

Sample images with quite similar attributes and appearances, but scored with NAS of 1 and 2, respectively [40]
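To make "quantifiable properties" concrete, the sketch below computes two simple handcrafted nuclear features from binary masks: area and eccentricity (from second-order moments), and then summarizes a set of nuclei by the coefficient of variation of area and the mean eccentricity. This summary is a hypothetical, crude proxy for pleomorphism chosen for illustration; it is not a feature set taken from any of the reviewed works, and the synthetic elliptical "nuclei" stand in for real segmentation output.

```python
import numpy as np


def ellipse_mask(shape, cy, cx, ry, rx):
    """Binary mask of an axis-aligned ellipse (used to make synthetic nuclei)."""
    yy, xx = np.mgrid[:shape[0], :shape[1]]
    return ((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0


def shape_features(mask):
    """Area in pixels and eccentricity (0 = circle) from second-order moments."""
    ys, xs = np.nonzero(mask)
    cov = np.cov(np.vstack([xs, ys]).astype(np.float64))
    major, minor = sorted(np.linalg.eigvalsh(cov), reverse=True)
    ecc = np.sqrt(max(0.0, 1.0 - minor / major)) if major > 0 else 0.0
    return {"area": float(xs.size), "eccentricity": float(ecc)}


def pleomorphism_summary(masks):
    """Hypothetical pleomorphism proxy: spread of nuclear size and mean elongation."""
    feats = [shape_features(m) for m in masks]
    areas = np.array([f["area"] for f in feats])
    eccs = np.array([f["eccentricity"] for f in feats])
    return {"area_cv": float(areas.std() / areas.mean()),
            "mean_eccentricity": float(eccs.mean())}


if __name__ == "__main__":
    shape = (40, 40)
    uniform = [ellipse_mask(shape, 20, 20, 8, 8) for _ in range(3)]
    pleomorphic = [ellipse_mask(shape, 20, 20, 6, 6),
                   ellipse_mask(shape, 20, 20, 6, 14),
                   ellipse_mask(shape, 20, 20, 10, 10)]
    print(pleomorphism_summary(uniform))      # area_cv 0, eccentricity near 0
    print(pleomorphism_summary(pleomorphic))  # larger spread and elongation
```

As Fig. 4 illustrates, such low-level descriptors alone may not separate neighboring grades, which is one motivation for richer texture features and for learned features.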

The last important challenge for histopathological image analysis is system evaluation. Because of limited data availability, there may be a substantial amount of bias if the system is not evaluated properly. Some algorithms may report good results on a limited dataset, but these techniques can be properly evaluated only when they are tested on large, standardized datasets. The lack of unified benchmark datasets is another major challenge, as most automated cancer diagnosis methods are developed on private datasets, using different evaluation methods and diverse performance metrics. Benchmark datasets are essential for the numerical comparison of these methods. This problem has been addressed to some extent by a few open scientific grand challenges conducted in the field of pathology images. The standardized, annotated datasets available in these challenges make it possible to test and evaluate different histological image analysis methods on the same data, enabling an objective comparison of their strengths and limitations. Some of the grand challenges conducted in breast histopathological image analysis are discussed in the “Grand Challenges in Breast Histopathological Image Analysis” section.

Grand Challenges in Breast Histopathological Image Analysis

Some of the challenges conducted in the histological image analysis of breast tumors include AMIDA13, MITOS12, MITOS-ATYPIA14,7 TUPAC16,8 and BACH 2018 (BreAst Cancer Histology).9

The MITOS contest [58] for the detection of mitosis in H&E stained slide images of breast cancer was held in 2012 in conjunction with the ICPR 2012 conference. The mitotic count is considered a significant parameter for accurately predicting breast cancer outcome, but mitosis detection is difficult because mitotic figures are often small and vary greatly in shape. The MITOS benchmark consists of 50 HPFs from 5 different slides scanned at ×40 magnification. Seventeen teams participated in the contest, and the best team achieved an F1-score of 0.78. However, the dataset provided was found to be too small for a good assessment of the robustness and reliability of the proposed algorithms.
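Detection challenges such as MITOS score a method by matching its predicted mitosis locations to the annotated ones and then computing the F1-score, the harmonic mean of precision and recall. The sketch below shows the general idea with a greedy one-to-one matching; the matching radius and the function names are assumptions for illustration, and each challenge defines its own tolerance and matching rules in its evaluation protocol.

```python
import math


def match_detections(pred, truth, radius):
    """Greedy one-to-one matching of predicted to ground-truth centroids.

    A prediction counts as a true positive if an unmatched ground-truth point
    lies within `radius` (same units as the coordinates). Returns (tp, fp, fn).
    """
    unmatched = list(truth)
    tp = 0
    for p in pred:
        best, best_d = None, radius
        for i, t in enumerate(unmatched):
            d = math.dist(p, t)
            if d <= best_d:
                best, best_d = i, d
        if best is not None:
            unmatched.pop(best)  # each annotation can be matched only once
            tp += 1
    return tp, len(pred) - tp, len(truth) - tp


def f1_score(tp, fp, fn):
    """F1 = 2PR / (P + R), with P = tp / (tp + fp) and R = tp / (tp + fn)."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    truth = [(10, 10), (50, 50), (90, 90)]
    pred = [(12, 11), (49, 52), (200, 200)]
    tp, fp, fn = match_detections(pred, truth, radius=8.0)
    print(tp, fp, fn, round(f1_score(tp, fp, fn), 3))  # 2 1 1 0.667
```

Greedy matching depends on the order of predictions; an optimal assignment (for example with `scipy.optimize.linear_sum_assignment`) avoids this, at the cost of an extra dependency.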

The Assessment of Mitosis Detection Algorithms 2013 (AMIDA13) challenge was held as part of the MICCAI 2013 conference. The AMIDA13 benchmark [68] re-edited the MITOS12 dataset, with 12 training and 11 testing samples and more than one thousand mitotic figures annotated by multiple observers. A total of 14 teams submitted methods, with a highest F1-score of 0.611, indicating that further progress is needed to reach clinically acceptable results.

The MITOS-ATYPIA14 challenge at the ICPR 2014 conference extended the MITOS12 dataset and consisted of two tasks: mitosis detection and evaluation of the nuclear atypia score. The benchmark dataset includes H&E stained slides scanned by two WSI scanners: the Aperio ScanScope XT and the Hamamatsu NanoZoomer 2.0-HT. Several ×20 frames within the tumor on each slide were selected by experienced pathologists. These ×20 frames are used for nuclear atypia scoring, and each is further magnified at ×40 to obtain four subdivided frames. The ×40 frames are annotated for mitosis and scored on six criteria related to nuclear atypia. The training dataset consists of 284 frames at ×20 magnification and around 1136 frames at ×40 magnification. About 17 teams participated in the contest; the best mitosis detection algorithm achieved an F1-score of 0.356, and in the nuclear atypia scoring contest the highest score achieved was 71 points.

As part of the MICCAI grand challenges, the Tumor Proliferation Assessment Challenge 2016 (TUPAC16) was organized with three main tasks: (1) prediction of the proliferation score based on mitosis counting, (2) prediction of the proliferation score based on molecular data, and (3) mitosis detection. The training dataset consists of 500 breast cancer cases, each represented by one whole-slide image and annotated with a proliferation score based on mitosis counting by pathologists and with a molecular proliferation score. The best result for the first task was a quadratic weighted Cohen's kappa of 0.567, whereas for the second task the highest Spearman correlation coefficient was 0.617. For the mitosis detection task, the highest F1-score was 0.652.
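The quadratic weighted Cohen's kappa used for the first TUPAC16 task measures agreement between predicted and reference ordinal scores, penalizing disagreements by the square of their distance and correcting for chance agreement. A minimal NumPy sketch follows; the function name is hypothetical, and it assumes scores are encoded as integers 0 to num_classes − 1 (for example, proliferation scores 1 to 3 shifted down by one). scikit-learn's `cohen_kappa_score(y1, y2, weights="quadratic")` can serve as a cross-check.

```python
import numpy as np


def quadratic_weighted_kappa(rater_a, rater_b, num_classes):
    """Cohen's kappa with quadratic disagreement weights.

    Ratings must be integers in 0 .. num_classes - 1.
    """
    observed = np.zeros((num_classes, num_classes))
    for i, j in zip(rater_a, rater_b):
        observed[i, j] += 1
    # Expected counts if the two raters were independent with the same marginals.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()
    idx = np.arange(num_classes)
    # Weight 0 on the diagonal, 1 for the largest possible disagreement.
    weights = (idx[:, None] - idx[None, :]) ** 2 / (num_classes - 1) ** 2
    return float(1.0 - (weights * observed).sum() / (weights * expected).sum())


if __name__ == "__main__":
    reference = [0, 0, 1, 1, 2, 2]
    predicted = [0, 1, 1, 2, 2, 2]
    print(quadratic_weighted_kappa(reference, predicted, 3))  # 0.75
```

A kappa of 1 means perfect agreement, 0 means chance-level agreement, and negative values mean systematic disagreement, which is why it suits ordinal grades better than plain accuracy.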

Following the availability of these benchmark datasets, researchers proposed many histological image analysis techniques for breast mitosis detection, nuclear atypia scoring, and cancer prognosis. However, these breast cancer diagnosis and prognosis methods were evaluated on small datasets, as there was a shortage of public datasets containing large numbers of images. This problem was mitigated by the release of the publicly available BreakHis dataset10 compiled by Spanhol et al. [61]. The Breast Cancer Histopathological Image Classification (BreakHis) dataset consists of 9109 microscopic images of breast tumor tissue obtained from 82 patients at ×40, ×100, ×200, and ×400 magnifications. This dataset gives the scientific community scope for benchmarking and standardized evaluation in this clinical area.

The recent BACH 2018 challenge, held as part of the International Conference on Image Analysis and Recognition (ICIAR 2018), involved classifying breast histology images as normal, benign, in situ carcinoma, or invasive carcinoma. The dataset consists of high-resolution (2048 × 1536 pixels) images annotated by two experts; images on which the experts disagreed were discarded. The highest overall prediction accuracy achieved in the contest was 0.87.

The most recently published BreCaHAD 2019 (breast cancer histopathological annotation and diagnosis) dataset [1] consists of 162 H&E stained breast cancer histopathology images annotated with six classes, namely mitosis, apoptosis, tubule, non-tubule, tumor nuclei, and non-tumor nuclei.

Although several datasets related to breast cancer histopathological image analysis, such as the TUPAC16, BreakHis, BACH 2018, and BreCaHAD 2019 datasets, have been released, none of them contains labeled samples for nuclear atypia scoring of breast cancer. The MITOS-ATYPIA14 challenge dataset is therefore the most recent one relevant to our problem, and we have adopted it for our comparative analysis.

In this paper, we present a systematic review of the computational steps involved in nuclear atypia scoring, or cancer grading, of breast histopathological whole-slide images. The following section explains the different techniques and methodologies used for grading breast cancer, addresses the challenges involved, and discusses the strategies these techniques use to overcome them.

Histological Grading of Breast Cancer or Nuclear Atypia Scoring: Current Status

Grading of histopathological images of breast biopsy specimens is currently considered the gold clinical standard for the diagnosis and prognosis of breast tumor malignancy. Nuclear atypia scoring (NAS) is used as a quantitative diagnostic measure to assess the grade of different cancers, especially breast cancer. It measures the degree of variation in the shape and size of cancer nuclei compared with normal breast nuclei. This histological grading, together with other factors, is used for prognosis [9] and for predicting disease progression, which helps in choosing the best treatment.

In breast cancer, visual examination of histological slides of biopsy specimens stained with hematoxylin and eosin (H&E) remains the standard procedure for cancer detection and determination of the malignancy grade. The Nottingham grading system (NGS), recommended by the World Health Organization (WHO), assesses tubule formation, mitotic count, and nuclear atypia for breast cancer grading. Such manual diagnosis requires extensive training and experience before a pathologist becomes proficient, and even then there is considerable disagreement between pathologists regarding the grade. This is particularly true for nuclear pleomorphism, which reflects the size, shape, and chromatin distribution of the nuclei. This subjectivity and poor reproducibility have created a strong demand for automated grading systems. Advances in the digitization of whole-slide histopathological images (WSI), together with increasing computing power and storage capacity, have made fully digital histological breast cancer grading practically possible.

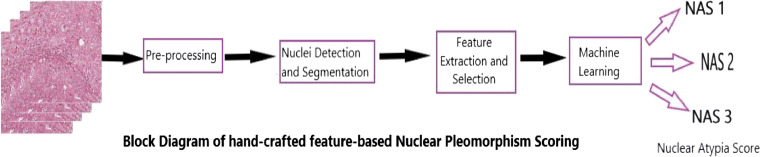

Histopathological image analysis algorithms for nuclear pleomorphism scoring can be broadly classified into two categories: handcrafted feature-based and learned feature-based algorithms. Handcrafted feature-based algorithms require image features to be explicitly extracted and mainly consist of image pre-processing, nuclei segmentation, feature extraction, feature selection, and classification steps. Pre-processing of the digital slides removes noise and enhances the images so that relevant features stand out. It may include noise smoothing, thresholding, intensity normalization, stain normalization, color separation, etc. After pre-processing, some algorithms perform nuclei segmentation. A common prerequisite for breast cancer grading in histopathological images is the extraction of anatomical structures such as lymphocytes, cancer nuclei, stroma, and background. The shape, size, and other morphological features of these structures are often used as measures for grading and assessing the severity of the disease. Segmentation may also be needed for nuclei counting, which can be significant for certain types of cancer, and for assessing nuclear pleomorphism, which has diagnostic importance in cancer grading [17, 63]. Segmentation techniques such as mean shift, watershed, Gaussian models, and active contour models [7, 11, 42, 67] are often used for nuclei segmentation.

After accurate segmentation, features capable of distinguishing cancerous from non-cancerous cells are extracted in the feature extraction phase. These may include morphological, textural, fractal, or intensity-based features, such as the size, shape, and number of nuclei, and textural features such as histograms, Local Binary Patterns (LBP), and the Gray Level Co-occurrence Matrix (GLCM). The extracted features are then passed to the classification phase, which performs a statistical analysis of them, typically using machine learning algorithms to assign them to different classes. Commonly used methods include SVM, Bayesian classifiers, k-means clustering, and Artificial Neural Networks (ANN). The block diagram of the steps involved in handcrafted feature-based nuclear pleomorphism scoring is shown in Fig. 5.

Fig. 5.

Block diagram of handcrafted feature-based nuclear pleomorphism scoring

The second category of histopathological cancer grading algorithms comprises learned feature-based algorithms, in which high-level feature abstractions are extracted directly from the histopathological images without explicit feature extraction steps. These algorithms have attracted much attention following the success of deep neural networks in various computer vision tasks. Because they are data-driven, they can be applied directly to cancer grading, and they often outperform conventional handcrafted approaches. Most recently developed algorithms for breast cancer grading are deep learning–based techniques using Convolutional Neural Networks (CNN), Deep Neural Networks (DNN), Residual Networks (RN), etc.

Compared with CAD applications for radiological images, only a few works have been reported on the quantitative analysis of breast histopathological grading of stained WSI tissue images. The era of computer-aided automated breast cancer grading began in 1995, when Wolberg et al. [73] extracted morphological features from manually segmented nuclei and applied inductive machine learning techniques for atypia scoring. Most work on breast cancer grading has focused on nuclei segmentation for feature extraction, since the score is closely related to the morphology of the nuclei in the specimen. This section gives a brief account of the various algorithms used for histopathological breast cancer grading.

Handcrafted Feature-Based Algorithms

Image Pre-processing

Pre-processing suppresses unwanted distortions in the images and enhances the features that are important for further analysis, such as feature extraction. Artifacts observed in the images may be corrected at this step, before feature extraction and image analysis. Most of these artifacts arise from inconsistencies in the preparation of histology slides. Various methods are applied to correct known inconsistencies in the staining process and bring the slides into a common, normalized space, enabling improved grading of breast cancer.

In [10], an adaptive approach for removing color variation has been proposed. The pink pixels, which represent the eosin stain, are averaged into a vector C, and the rest of the image is averaged into a vector M. The H and E components are then obtained by the orthogonal projections of M and C, respectively, as given by the following equations:

| 1 |

| 2 |

| 3 |

| 4 |

where Pi denotes the CMY color space vector for the pixel i.

Veta et al. [67] and Basavanhally et al. [5] use color unmixing, or color deconvolution, a special case of spectral unmixing, to separate the stains. In this method, the proportion of each applied stain is calculated from its stain-dependent RGB absorption. To do so, an orthonormal transformation of the RGB values is performed to obtain independent information about the contribution of each stain [59]. The major disadvantage of this technique is that areas where multiple stains overlap are treated as a single color, resulting in a loss of information. After color separation, irrelevant structures that may reduce segmentation accuracy are removed using a series of mathematical morphological operations.
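Color deconvolution as described above can be sketched in a few lines of NumPy. The reference stain vectors below are the commonly quoted hematoxylin, eosin, and DAB vectors of Ruifrok and Johnston and are an assumption; [5, 67] may estimate or tune their own. The helper names `rgb_to_od`, `od_to_rgb`, and `color_deconvolve` are hypothetical. Each pixel is converted to optical density (OD) with the Beer-Lambert law, and the stain amounts are recovered by multiplying with the inverse of the stain matrix.

```python
import numpy as np

# Assumed reference OD vectors (rows: hematoxylin, eosin, DAB), as commonly
# quoted from Ruifrok and Johnston; each row is normalized to unit length.
STAIN_MATRIX = np.array([[0.65, 0.70, 0.29],
                         [0.07, 0.99, 0.11],
                         [0.27, 0.57, 0.78]])
STAIN_MATRIX = STAIN_MATRIX / np.linalg.norm(STAIN_MATRIX, axis=1, keepdims=True)


def rgb_to_od(rgb, i0=255.0):
    """Beer-Lambert law: OD = -log10(I / I0); the +1 offsets avoid log(0)."""
    rgb = np.asarray(rgb, dtype=float)
    return -np.log10((rgb + 1.0) / (i0 + 1.0))


def od_to_rgb(od, i0=255.0):
    """Inverse of rgb_to_od."""
    return (i0 + 1.0) * 10.0 ** (-np.asarray(od, dtype=float)) - 1.0


def color_deconvolve(rgb):
    """Per-pixel stain amounts (H, E, DAB) for an (..., 3) RGB array.

    Each pixel's OD is modeled as amounts @ STAIN_MATRIX, so the amounts are
    recovered by multiplying the OD with the inverse stain matrix.
    """
    return rgb_to_od(rgb) @ np.linalg.inv(STAIN_MATRIX)
```

In this sketch, `color_deconvolve(image)[..., 0]` is the hematoxylin channel, which is the one usually passed on to nuclei segmentation.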

In [42], stain normalization and color separation are performed as pre-processing steps before image analysis. For stain normalization [51], prior information about the stain vectors is used for estimation, and unequal stain distribution is handled by a clustering process, a variant of Otsu thresholding. Trust-region optimization is applied to solve the underlying optimization problem. After the RGB image is normalized, the hematoxylin and eosin stain images are separated by color deconvolution using the estimated stain vectors. The hematoxylin image, which mainly highlights the nuclei, is then used for further analysis.

Wan et al. [69] use the nonlinear mapping-based stain normalization proposed in [39] to correct image intensity variations caused by variability in tissue preparation. In this method, Principal Color Histograms (PCH) are obtained from a set of quantized image histograms to evaluate the color descriptors of the stain. This descriptor, together with the RGB intensity, is then used in a supervised classification that generates stain-related probability maps. These probability maps are used to apply a nonlinear normalization to each channel.

In [47], the hematoxylin image is separated using the color deconvolution method proposed in [44]. The RGB color images are mapped to optical density space and decomposed by singular value decomposition (SVD), and the plane spanned by the singular vectors corresponding to the two largest singular values is calculated. All data are projected onto this plane and normalized to obtain the optimal stain vectors used for the deconvolution.
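The SVD-based estimate of the optimal stain vectors can be sketched as follows. This is a Macenko-style sketch: the OD threshold `beta`, the robust angle percentile `alpha`, and the rule that labels the vector with the larger red OD component as hematoxylin are assumptions taken from the usual formulation of that method, not details reported in [47]. The function name `estimate_stain_vectors` is hypothetical.

```python
import numpy as np


def estimate_stain_vectors(od, beta=0.15, alpha=1.0):
    """Estimate two unit stain vectors (rows: H, E) from (N, 3) optical densities."""
    od = np.asarray(od, dtype=float)
    od = od[np.all(od > beta, axis=1)]          # drop near-transparent background pixels
    # Right singular vectors of the OD cloud; the top two span the stain plane.
    _, _, vt = np.linalg.svd(od, full_matrices=False)
    plane = vt[:2].T.copy()                      # (3, 2) basis of the plane
    if plane[:, 0].sum() < 0:                    # SVD signs are arbitrary: orient the
        plane[:, 0] *= -1                        # first axis so positive OD projects positively
    proj = od @ plane                            # 2-D coordinates of every pixel in the plane
    phi = np.arctan2(proj[:, 1], proj[:, 0])     # angle of each pixel in the plane
    lo, hi = np.percentile(phi, [alpha, 100 - alpha])
    v_lo = plane @ np.array([np.cos(lo), np.sin(lo)])
    v_hi = plane @ np.array([np.cos(hi), np.sin(hi)])
    # Heuristic: hematoxylin has the larger red-channel OD component.
    he = np.array([v_lo, v_hi]) if v_lo[0] > v_hi[0] else np.array([v_hi, v_lo])
    return he / np.linalg.norm(he, axis=1, keepdims=True)
```

The two extreme angles bound the cone of observed colors, so each bounding direction is taken as one pure stain; the percentiles make the estimate less sensitive to outlier pixels than the raw minimum and maximum.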

Salahuddin et al. [60] used Pattern-based Hyper Conceptual Sampling, based on hyper-context feature extraction and reduction, to select the most important data from the whole training set. In [18], unmixing is performed to separate the H&E color channels, followed by anisotropic diffusion filtering to enhance the contrast of the histopathological images.

Nuclei Detection and Segmentation

The detection and segmentation of nuclei are significant steps in cancer detection, prognosis, and diagnosis. Nuclear properties such as size, morphological structure, and mitotic count are critical for diagnosing the presence of the disease and for interpreting its severity and malignancy level. The nuclei segmentation algorithms used in breast cancer grading can be mainly classified as (1) threshold-based techniques, (2) boundary-based techniques, and (3) region growing-based techniques.

Threshold-Based Methods

Image thresholding is considered a simple, effective, and widely used method for separating an image into foreground objects and background. By selecting a suitable threshold, the histopathological image can be converted into a binary image that retains the essential information about the shape and size of the nuclear regions. This reduces the complexity of the image and simplifies feature extraction and classification. Many breast cancer grading algorithms apply this thresholding approach for nuclei segmentation.

Weyn et al. [72] combined background correction with basic thresholding based on the median of the intensity histogram to extract the contours of the nuclei, resulting in a binary mask that is embedded into the original histopathological image for further processing.

A hybrid method of optimal adaptive thresholding combined with local morphological operations is used in [53]. Histogram partitioning is used to determine the optimal threshold, i.e., the one that maximizes the between-class variance and minimizes the within-class variance. Segmentation of grade 3 nuclei is further improved by a sequence of morphological opening and closing operations combined with prior knowledge of the microstructures. A similar segmentation technique, which uses adaptive thresholding to obtain the optimal threshold value together with standard edge smoothing and morphological filling algorithms, is used in [54].
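Choosing the threshold that maximizes between-class variance is Otsu's criterion, so a minimal NumPy sketch of it is shown below. The bin count and the function name `otsu_threshold` are assumptions, and the morphological post-processing and prior knowledge used in [53] are not included.

```python
import numpy as np


def otsu_threshold(gray, nbins=256):
    """Threshold maximizing Otsu's between-class variance.

    Returns the upper edge of the best split bin, so `gray >= t` selects the
    brighter class and `gray < t` the darker one.
    """
    hist, edges = np.histogram(np.ravel(gray), bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    p = hist / hist.sum()                  # bin probabilities
    w0 = np.cumsum(p)                      # weight of the dark class for each split
    w1 = 1.0 - w0                          # weight of the bright class
    mu = np.cumsum(p * centers)            # cumulative first moment
    mu_t = mu[-1]                          # global mean
    denom = w0 * w1
    sigma_b = np.zeros_like(denom)
    valid = denom > 1e-12                  # skip splits that leave a class empty
    sigma_b[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / denom[valid]
    return edges[np.argmax(sigma_b) + 1]
```

Because nuclei are dark in a grayscale H&E image, a nuclear mask would be `gray < otsu_threshold(gray)` in this sketch.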

Naik et al. [49] combined low-level, high-level, and domain-specific information for nuclei segmentation. A Bayesian classifier is applied to generate a pixel-wise likelihood image from low-level intensity and textural information in the RGB image. The intensity values in the likelihood image represent the probability of a pixel belonging to a particular class. The likelihood image is then thresholded to obtain a binary image, and structural constraints are imposed using domain knowledge about the arrangement of histopathological structures. A level set algorithm and a template-matching scheme are then used for nuclei segmentation.

In [47], the nuclear regions are segmented using Maximally Stable Extremal Regions (MSER) computed on the hematoxylin contribution map. The image is thresholded at multiple levels, and regions that change very little across thresholds are identified as maximally stable extremal regions. Two morphological operations are then applied to these regions: opening, which removes small structures, and closing, which fills small holes and breaks.
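The opening and closing step can be sketched with NumPy alone. The 3×3 square structuring element is an assumption, since the element used in [47] is not stated here, and the MSER detection itself is not reproduced. All function names are hypothetical.

```python
import numpy as np


def _shifts(mask):
    """Yield the 9 shifted copies of a zero-padded mask over a 3x3 neighborhood."""
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    for dy in range(3):
        for dx in range(3):
            yield padded[dy:dy + h, dx:dx + w]


def dilate(mask):
    """A pixel is set if any pixel in its 3x3 neighborhood is set."""
    return np.logical_or.reduce(list(_shifts(mask)))


def erode(mask):
    """A pixel stays set only if its whole 3x3 neighborhood is set."""
    return np.logical_and.reduce(list(_shifts(mask)))


def opening(mask):
    """Erosion then dilation: removes specks smaller than the structuring element."""
    return dilate(erode(mask))


def closing(mask):
    """Dilation then erosion: fills small holes and breaks."""
    return erode(dilate(mask))
```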

Boundary-Based Methods

Edges, i.e., discontinuities in image intensity, are important image characteristics that carry information about object boundaries. Detection methods based on these discontinuities are often used for image segmentation and object identification. Variants of edge-based or boundary-based segmentation methods have been used for nuclear segmentation of breast histopathological images.

In [10], a Difference of Gaussians (DoG) filter, whose size matches that of the nuclei, is applied to the H image. A Hough transform is then applied to extract the edge map for various diameters and angles, and an active contour model is used to outline the nuclear boundary. A collection of features representing the texture, shape, and fitness of the outline is extracted and used to train an SVM classifier.
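A DoG filter tuned to the nuclear size can be sketched with separable Gaussian blurs in NumPy. The rule σ = r/√2 for a blob of radius r and the 1.6 ratio between the two Gaussians are common choices assumed here, not values reported in [10]; the Hough transform and active contour steps are omitted. Function names are hypothetical.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def blur(img, sigma):
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    out = np.pad(np.asarray(img, dtype=float), r, mode="edge")
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, out)
    return out


def dog(img, nucleus_radius):
    """Difference of Gaussians; bright blobs of about the given radius give peaks."""
    sigma = nucleus_radius / np.sqrt(2)
    return blur(img, sigma) - blur(img, 1.6 * sigma)
```

Applied to a hematoxylin channel, in which nuclei appear bright, local maxima of `dog(h_image, r)` in this sketch mark candidate nuclear centers.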

In [12], morphological operations and the distance transform are used to select candidate cell nuclei. The gamma-corrected R image is thresholded into a binary image, which is then subjected to morphological dilation and erosion. The distance transform computed on the resulting image is used to estimate the size of the nuclei. Larger nuclei are discarded because they form part of clustered tissue, and the remaining candidate nuclei are selected for segmentation. The nuclear boundary is extracted by polynomial curve fitting on the gradient image of the image patch in polar space. The extracted size and shape features are then used to fit a Gaussian model.

In [32], the nuclear edges are extracted using a snake-based algorithm applied on an image pre-processed with a sequence of thresholding and morphological filtering procedures. ROIs of histological images that include nuclear structures are extracted and then a polar space transformation is performed. An iterative snake algorithm outlines the nuclei boundary on this polar space.

Basavanhally et al. [5] proposed a Color Gradient–based Geodesic Active Contour (CGAC) approach for nuclei segmentation. The optimal segmentation is obtained by minimizing an energy function that contains a third term in addition to the two terms of the traditional geodesic active contour. This term removes the need for the reinitialization phase that traditional methods use to obtain a stable curve. Edge detection is performed on the gradient of the grayscale image. The gradients computed from each image channel are locally summed to obtain the maximum rate of directional change of the edges. Nuclear regions are then extracted from the boundaries obtained by the CGAC model.

Wan et al. [69] used a hybrid active contour method, which combines boundary and region information, for automated segmentation of the nuclear regions. The hybrid active contour method performs segmentation by minimizing the energy function defined as

| 5 |

where the energy is expressed in terms of the image to be segmented, its gradient, and the Heaviside function over the image domain ω, and α and β are pre-defined weights that balance the two terms. Overlapping nuclei are further separated using local image information, so the hybrid active contour model uses both local and global image data to better segment nuclear areas in digital histopathological slides.

Faridi et al. [18] detected the nuclear centers using morphological operations and DoG filtering and applied the Distance Regularized Level Set Evolution (DRLSE) algorithm to extract the nuclear boundaries.

Region Growing Methods

Region growing is another image segmentation method: it examines neighboring pixels and adds them to a region if they satisfy a homogeneity or similarity criterion. The algorithm starts by selecting a set of seed points based on some criterion, and the regions are then grown from these seeds according to a region-membership criterion, which may be based on gray-level texture, raw pixel intensity, histogram properties, color, etc. This process is repeated for the pixels surrounding each region. The edges of regions extracted by region growing are usually fully connected and thin. Region growing techniques generally perform better on noisy images in which boundaries or edges are difficult to detect. Dalle et al. [11], Lu et al. [42], Maqlin et al. [46], and Veta et al. [67] have used variants of the region growing approach for nuclei segmentation in cancer grading.
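The basic region growing loop can be sketched as a breadth-first search from a seed. Here the membership criterion is an absolute intensity difference from the seed value with 4-connectivity; both are assumptions chosen for illustration, and the function name `region_grow` is hypothetical.

```python
from collections import deque

import numpy as np


def region_grow(img, seed, tol):
    """Grow a 4-connected region from `seed` (row, col), accepting pixels whose
    intensity differs from the seed intensity by at most `tol`."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    ref = img[seed]
    region = np.zeros((h, w), dtype=bool)
    region[seed] = True
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = 0 <= ny < h and 0 <= nx < w
            if inside and not region[ny, nx] and abs(img[ny, nx] - ref) <= tol:
                region[ny, nx] = True      # pixel satisfies the criterion: add it
                queue.append((ny, nx))     # and examine its neighbors later
    return region
```

Only pixels connected to the seed through accepted pixels are added, so a separate dark structure elsewhere in the image is not merged into the region.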

A multi-resolution approach is used for segmentation in [11]. First, neoplasm localization is performed on the low-resolution global image. Cell segmentation is then performed on the high-resolution images containing the neoplastic structures by applying Gaussian color models. The differences between the Gaussian color distributions are used to detect the cell types.

A marker-controlled watershed segmentation method is used in [67]. In the pre-processed images, candidate nuclei markers are detected as points with high radial symmetry and as regional minima. Watershed segmentation is performed starting from these markers, and the nuclear contours are approximated by ellipses.

In [42], seed detection, local thresholding, and morphological operations are used for nuclei segmentation. The normalized hematoxylin stain image is first transformed into a blue-ratio (BR) image, in which nuclear regions are easier to detect. A multiscale Laplacian of Gaussian (LoG) filter applied to this BR image detects the seed points. The scale-normalized LoG-filtered image is fed to a mean-shift algorithm to segment the nuclear regions, followed by morphological operations to smooth the boundaries.
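The blue-ratio transform is not defined in this section. The formula below is the one commonly used in nuclei and mitosis detection work and is assumed here, so [42] may use a variant; the function name `blue_ratio` is hypothetical. It rewards a high blue value relative to red and green, and the second factor suppresses bright pixels.

```python
import numpy as np


def blue_ratio(rgb):
    """Assumed blue-ratio formula: 100*B/(1+R+G) * 256/(1+B+R+G).

    Accepts an (..., 3) RGB array and returns an array of shape (...).
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (100.0 * b / (1.0 + r + g)) * (256.0 / (1.0 + b + r + g))
```

Blue-purple hematoxylin-rich nuclear pixels therefore score higher than pink eosin-rich stroma, which is what makes seed detection on the BR image easier.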

In [46], the peripheral borders of the nuclei are segmented using a convex grouping algorithm, which is mainly suited to the open vesicular and patchy nuclei commonly observed in high-risk breast cancers. A k-means clustering algorithm first segments the nuclear regions, which may include these irregular nuclear structures, and convex boundary grouping is then used to recover the missing edge boundaries.

Many algorithms have been studied for automated nuclei segmentation. Detecting, segmenting, and classifying nuclear regions in histopathological images are considered challenging CAD problems because of image acquisition artifacts and the heterogeneous nature of the nuclei. Variations in nuclear shape and size and incomplete nuclear structures also make the task challenging. The success of nuclear atypia scoring depends heavily on the segmentation technique used; hence, developing a robust algorithm that overcomes these issues and achieves high segmentation accuracy still requires considerable research.

Feature Extraction and Selection

Disorders in the cell life cycle result in excessive cell proliferation in cancer tissues, leading to poor cellular differentiation. It is important to obtain clinically significant and biologically interpretable features from histopathological images that represent the cellular differences between cancer grades. These features should also provide discriminative quantitative measures for automatic diagnosis and grading. Most of them are morphological, textural, or graph-based topological features. Often, large sets of features are extracted in the hope that a subset captures the aspects that human experts use to grade tumors. As a result, many of the extracted features may be irrelevant or redundant. In such cases, a feature selection phase is performed before classification to select the important and relevant features from this large collection. This section covers the different features and the feature extraction and selection methods used in histological breast cancer grading.

Morphological Features

The extraction of morphological features depends mainly on the accuracy and efficiency of the underlying segmentation method. Cancerous cells or nuclei often differ in size and shape from normal ones, and pathologists use this difference for cancer grading. Morphological features describe the shape and size of a cell. The size of a segmented nucleus is generally expressed by its radius, perimeter, and area, whereas its shape is represented by quantities such as smoothness, compactness, symmetry, major and minor axis lengths, roundness, and concavity. Many breast cancer scoring algorithms have extracted these morphological features of nuclear structures and used them for cancer identification and grading.
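Several of the size and shape descriptors listed above can be computed from a single binary nucleus mask, as in the sketch below. The perimeter is approximated by counting exposed pixel edges, which overestimates the length of curved outlines, so digitized circularity values are biased low; axis lengths use the equal-second-moment ellipse convention. These choices and the name `shape_features` are assumptions, not the definitions of any cited work.

```python
import numpy as np


def shape_features(mask):
    """Size and shape descriptors of a single binary nucleus mask."""
    mask = np.asarray(mask, dtype=bool)
    area = int(mask.sum())
    # Perimeter: number of pixel edges between object and background.
    padded = np.pad(mask, 1).astype(int)
    perimeter = int(np.abs(np.diff(padded, axis=0)).sum()
                    + np.abs(np.diff(padded, axis=1)).sum())
    # Circularity (compactness): 1 for an ideal continuous disc, lower otherwise.
    circularity = 4 * np.pi * area / perimeter ** 2 if perimeter else 0.0
    # Axis lengths of the ellipse with the same second moments as the region.
    ys, xs = np.nonzero(mask)
    cov = np.cov(np.vstack([ys, xs]), bias=True)
    minor_var, major_var = np.linalg.eigvalsh(cov)   # eigenvalues in ascending order
    return {
        "area": area,
        "perimeter": perimeter,
        "circularity": circularity,
        "equiv_diameter": np.sqrt(4 * area / np.pi),
        "major_axis": 4 * np.sqrt(major_var),
        "minor_axis": 4 * np.sqrt(max(minor_var, 0.0)),
    }
```

Per-slide features such as the mean and standard deviation of nuclear area can then be taken over the values returned for all segmented nuclei.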

Veta et al. [67] used the mean and standard deviation of the nuclear area, extracted from segmented nuclei, for cancer grading and prognosis. A multivariate survival analysis based on Cox’s proportional hazards model showed that the mean nuclear area had prominent prognostic value, whereas the standard deviation of the nuclear area was not an important predictor of disease outcome.

Petushi et al. [53] extracted the minimum intensity value, area, intensity mean, major and minor axis, standard deviation, and minimum intensity values for each segmented nucleus. The resulting 7-feature vector is used for clustering with a pretrained binary decision tree. In [18], features such as the area, mean intensity, and circularity of the nuclei and the count of unfolded nucleoli are extracted from the boundary-segmented nuclear regions.

Unlike other scoring algorithms, which mainly concentrate on nuclear pleomorphism, [11] combines all three criteria of the Nottingham grading system with a multi-resolution approach for tumor grading. Tubule formation is scored using the ratio of the total area occupied by tubules to the total tissue area in the histopathological image. For nuclear pleomorphism scoring, the probability distributions of the color values for each of the three cancer grades are modeled using Gaussian functions, and each cell is assigned the grade whose probability distribution is closest to the cell's color distribution. For mitotic cell detection, the solidity, area, eccentricity, and the mean and standard deviation of the intensity values are used to construct the feature vector. Gaussian models are built for mitotic and non-mitotic cells, and a cell is classified as mitotic if its probability of being mitotic is C0 (a weighting factor) times greater than its probability of being non-mitotic. The mitotic count score is calculated from the mean mitotic count over all image frames, multiplied by a factor of 10. The overall cancer grade is determined from the scores for tubule formation, nuclear pleomorphism, and mitotic count.
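The weighted likelihood test for mitotic cells can be sketched with two multivariate Gaussians fitted to labeled feature vectors. The comparison is done in log space for numerical stability; the value of `c0`, the feature dimensionality, and the helper names are hypothetical, and feature extraction is assumed to have been done already.

```python
import numpy as np


def fit_gaussian(x):
    """Mean and covariance of an (n, d) feature matrix."""
    x = np.asarray(x, dtype=float)
    return x.mean(axis=0), np.cov(x, rowvar=False)


def log_pdf(x, mean, cov):
    """Log density of a multivariate normal distribution at x."""
    d = len(mean)
    diff = np.asarray(x, dtype=float) - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)   # squared Mahalanobis distance
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)


def is_mitotic(x, mitotic_model, normal_model, c0=2.0):
    """Mitotic if p(x | mitotic) > c0 * p(x | non-mitotic); c0 is hypothetical."""
    return log_pdf(x, *mitotic_model) > np.log(c0) + log_pdf(x, *normal_model)
```

Raising `c0` above 1 makes the decision more conservative, trading missed mitoses for fewer false detections.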

Textural Features

Textural features provide important information regarding the variation in intensity of pixel values over a surface in terms of quantities like smoothness, coarseness, and regularity. Textural features are usually extracted using statistical, spectral, and structural methods. The following are the different textural features extracted for histopathologic image analysis and cancer grading.

In [54], micro-texture parameters indicating the density of cell nuclei with dispersed chromatin and the density of cross sections with tubular structure are identified as potential predictors of the histologic grade of the tumor. These two discriminant features are used with supervised classifiers such as linear classifiers, decision trees, quadratic classifiers, and neural networks, among which the quadratic classifier showed the lowest classification error.

Khan et al. [40] proposed a texture-based feature, the geodesic mean of region covariance descriptors, for nuclear grading of breast tumors. They computed region covariance (RC) descriptors for different regions of an image and obtained a single descriptor for the entire image as the geodesic geometric mean of these RCs, known as the gmRC, using a Riemannian trust-region solver. The resulting gmRC matrix is used for classification according to the NGS with a Geodesic k-Nearest Neighbor (GkNN) classifier.

Ojansivu et al. [52] proposed an algorithm based on textural features for automated classification of breast cancer. They adopted Local Phase Quantization (LPQ) and Local Binary Patterns (LBP) as descriptors, whose histograms represent the statistical textural attributes of the image. The slides are classified into the three cancer grades using an SVM classifier with a Radial Basis Function (RBF) kernel combined with the chi-square distance metric.
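An LBP histogram and a chi-square-based RBF kernel can be sketched as follows. This is the basic 8-neighbor, radius-1 LBP without rotation invariance or uniform patterns, and the kernel parameter `gamma` is hypothetical; the exact LBP/LPQ variants and kernel settings of [52] may differ. LPQ is not shown.

```python
import numpy as np

# The 8 neighbors, clockwise from top-left; bit i is set if neighbor i >= center.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(img):
    """Normalized 256-bin histogram of basic 8-neighbor LBP codes."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(OFFSETS):
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbor >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()


def chi_square(p, q, eps=1e-12):
    """Chi-square distance between two histograms."""
    return 0.5 * np.sum((p - q) ** 2 / (p + q + eps))


def chi2_rbf_kernel(p, q, gamma=1.0):
    """RBF-style kernel on the chi-square distance; gamma is hypothetical."""
    return np.exp(-gamma * chi_square(p, q))
```

A Gram matrix of `chi2_rbf_kernel` values between training histograms is the kind of precomputed kernel an SVM can be trained on.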

In [47], a Bag of Features (BoF) built from multiscale descriptors is used to represent the detected nuclei. The extracted descriptors are partitioned with the k-means clustering algorithm, and the cluster centers are used as the atoms of the dictionary. A feature vector obtained from the histogram of these descriptors is used to train an SVM classifier for nuclear pleomorphism grading.

Weyn et al. [72] and Rezaeilouyeh et al. [56] performed multi-resolution analysis for cancer tissue classification using transform-based textural features, wavelets and shearlets, respectively.

In [72], wavelet-based textural features, obtained by repeated low-/high-pass filtering, are used to represent chromatin texture for scoring malignant breast cancer. The wavelet-texture features are defined as the energies of the multiscale wavelet coefficients. A comparison of classification performance showed that the wavelet-texture features perform comparably to densitometric and co-occurrence-based features when used in an automated k-Nearest Neighbor (kNN) classifier.
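Wavelet energy features of this kind can be sketched with a 2-D orthonormal Haar transform, the simplest choice of low-/high-pass filter pair. The Haar wavelet, the number of levels, and the use of mean squared coefficients as "energy" are assumptions; [72] may use other wavelets or normalizations. Image sides must be divisible by 2 at every level.

```python
import numpy as np


def haar_level(img):
    """One level of the 2-D orthonormal Haar transform.

    Returns the approximation band and the three detail bands.
    """
    a = img[0::2, 0::2]
    b = img[0::2, 1::2]
    c = img[1::2, 0::2]
    d = img[1::2, 1::2]
    ll = (a + b + c + d) / 2        # low-pass in both directions
    d_cols = (a - b + c - d) / 2    # differences between neighboring columns
    d_rows = (a + b - c - d) / 2    # differences between neighboring rows
    d_diag = (a - b - c + d) / 2    # diagonal differences
    return ll, (d_cols, d_rows, d_diag)


def wavelet_energies(img, levels=3):
    """Mean energy of each detail band at each level (3 values per level)."""
    current = np.asarray(img, dtype=float)
    feats = []
    for _ in range(levels):
        current, details = haar_level(current)
        feats.extend(float(np.mean(band ** 2)) for band in details)
    return np.array(feats)
```

Because the transform is orthonormal, total energy is preserved across the four bands, so the detail energies directly measure how much of the texture lives at each scale.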

Rezaeilouyeh et al. [56] computed the shearlet transform of histopathological images and extracted the magnitude and phase of the shearlet coefficients. These shearlet features, together with the RGB histopathological image, are used to train a CNN with several convolutional, max-pooling, and fully connected layers.

Often the morphological and textural features are combined to form a hybrid technique for cancer grading, such as the works of [10, 12, 22, 32, 42, 46].

Cosatto et al. [10] segmented the nuclear outlines and extracted a variety of features describing the structure, texture, and fitness of the boundary. Structural features consist of the area, symmetry, smoothness, and compactness of the edges. Textural features comprise the count of vacuoles, the variance of the H and E channels, and the counts of nucleoli and DNA strands. They outlined the marked nuclei and discarded malformed ones. The median nuclear area in a particular region and the count of large well-differentiated nuclei in that region are also calculated. An SVM classifier is trained on these extracted features.

Dalle et al. [12] performed nuclear grading based on the shape, size, and texture of the segmented cell nuclei. The size criterion consists of the mean and standard deviation of the sizes of the segmented nuclei. The shape feature is the roundness of the nuclei, and the texture feature is the mean intensity of each segmented nucleus. These features are used as parameters for building a Gaussian model.

Huang et al. [32] combine an application-driven image analysis algorithm for high-resolution images with generic algorithms for low-resolution images, in a multiscale framework supported by sparse coding and dynamic sampling, for grading breast biopsy slides. As a preliminary phase of breast cancer scoring, the most important invasive areas are identified by low-magnification analysis to speed up grading. The extracted first- and second-order visual features are used to train a GPU-based SVM that differentiates invasive areas from normal tissue. For nuclear pleomorphism assessment, nuclei are segmented with an iterative snake algorithm from the ROIs identified in the low-resolution phase. A Gaussian distribution is learned from geometric and radiometric features, such as size, roundness, and texture, of these segmented nuclei, and scoring is performed with a Bayesian classifier. Multiscale dynamic sampling identifies the nuclei with higher pleomorphism, avoiding an exhaustive analysis and thereby reducing computation time.

Lu et al. [42] extracted a group of around 142 morphological and textural features for nuclear grading. These include nuclear size; the mean and standard deviation of stain; the sum, entropy, and mean of the gradient magnitude image; 3 Tamura texture features; 44 gray-level run-length matrix-based textural features; and 88 co-occurrence matrix-based Haralick texture features. Each slide is represented by the histogram of each of these features and given to an SVM classifier for grading.
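Co-occurrence-based Haralick features start from a gray-level co-occurrence matrix (GLCM). The sketch below builds a symmetric, normalized GLCM for one non-negative pixel offset and computes only four of the Haralick statistics. The quantization to 8 gray levels, the 8-bit input range, the single offset, and the helper names are assumptions; [42] computes a much larger set.

```python
import numpy as np


def glcm(img, levels=8, offset=(0, 1)):
    """Symmetric, normalized GLCM for one non-negative (dy, dx) offset.

    Assumes 8-bit input, quantized to `levels` gray levels.
    """
    dy, dx = offset
    q = np.clip((np.asarray(img, dtype=float) * levels / 256).astype(int), 0, levels - 1)
    h, w = q.shape
    ref = q[:h - dy, :w - dx]          # reference pixels
    nbr = q[dy:, dx:]                  # neighbors at the given offset
    m = np.zeros((levels, levels))
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1)
    m = m + m.T                        # count each pair in both directions
    return m / m.sum()


def haralick_subset(p):
    """Contrast, energy (angular second moment), homogeneity, and entropy."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": np.sum(p * (i - j) ** 2),
        "energy": np.sum(p ** 2),
        "homogeneity": np.sum(p / (1 + (i - j) ** 2)),
        "entropy": -np.sum(nz * np.log2(nz)),
    }
```

A checkerboard gives maximal contrast for a one-pixel horizontal offset, while a constant patch concentrates all mass in one cell, giving zero contrast and unit energy.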

Features that represent the extent of shape and size variations of mitotic nuclei from normal nuclei are used in [46]. The mean and standard deviation of 10 parameters extracted from the segmented nuclei are used as the feature set. These parameters are area, solidity, eccentricity, equivalent diameter, average gray value, average contrast, smoothness of the region, skewness, uniformity measure, and entropy.

Gandomkar et al. [22] used a hybrid segmentation-based and texture-based method to extract features from the histopathological slides that can discriminate between cancer grades. These cytological features are then combined by an ensemble of regression trees, trained against the pathologists’ assessments, to determine the atypia score of the breast tissue.

Graph-Based Topological Features

Topological or architectural features provide information about the structure and spatial arrangement of nuclei in tumor tissue. The spatial interdependency of the cells is represented using various types of graphs, from which relevant features are extracted for classification. Graph-based features are often used in combination with morphological or textural features for cancer grading.

In [49], various morphological and graph-based nuclear features, based on the factors used by pathologists, are extracted for automated grading of breast cancer. Sixteen morphological features representing the shape and size of the segmented nuclei, including 8 boundary features obtained from the nuclear and lumen structures, are calculated. In addition, 51 graph-based attributes are extracted from graphical representations such as the minimum spanning tree, Voronoi diagram, and Delaunay triangulation to represent the spatial relationships of the nuclei. Principal Component Analysis (PCA) is applied for feature reduction, and high-grade vs. low-grade classification is performed with an SVM classifier.
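As an example of a graph-based architectural feature, the sketch below builds the Euclidean minimum spanning tree over nuclear centroids with Prim's algorithm and summarizes its edge lengths. The chosen statistics (mean, standard deviation, and min-to-max ratio of edge lengths) and the function names are illustrative assumptions; Voronoi and Delaunay features would need a computational geometry library and are not shown.

```python
import numpy as np


def mst_edge_lengths(points):
    """Edge lengths of the Euclidean minimum spanning tree (Prim's algorithm, O(n^2))."""
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    in_tree = np.zeros(n, dtype=bool)
    in_tree[0] = True
    best = dist[0].copy()                  # cheapest link from each node to the tree
    lengths = []
    for _ in range(n - 1):
        candidates = np.where(in_tree, np.inf, best)
        k = int(np.argmin(candidates))     # closest node not yet in the tree
        lengths.append(best[k])
        in_tree[k] = True
        best = np.minimum(best, dist[k])   # the new node may offer cheaper links
    return np.array(lengths)


def mst_features(points):
    """Summary statistics of MST edge lengths for a set of nuclear centroids."""
    e = mst_edge_lengths(points)
    return {"mean": e.mean(), "std": e.std(), "min_max_ratio": e.min() / e.max()}
```

Densely packed, disorganized nuclei tend to shorten and vary the MST edges, which is the kind of architectural change such statistics are meant to capture.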

Doyle et al. [14] use a set of textural and graph-based features for cancer grading. The textural features include the average, minimum-to-maximum ratio, standard deviation, and mode of the second-order co-occurrence Haralick texture features, gray-level features, and Gabor filter attributes. The shape and organization of nuclei within the digital histological slide are represented using Delaunay triangulation, Voronoi diagrams, nuclear density, and minimum spanning trees. The dimensionality of the feature set is reduced by Spectral Clustering (SC), and the result is fed to an SVM classifier for nuclear scoring.

Basavanhally et al. [5] use a hybrid set of quantitative features, in which nuclear architecture is represented by graph-based features and nuclear texture by Haralick co-occurrence features. Nuclear architecture is captured by three graphs (minimum spanning tree, Delaunay triangulation, and Voronoi diagram), and features describing variations in these graphs are extracted. The nuclear texture vector consists of the mean, standard deviation, and disorder statistics of the Haralick co-occurrence features. Feature selection is then performed via Minimum Redundancy Maximum Relevance (mRMR) for dimensionality reduction, and the selected features are fed to a random forest classifier.
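
The idea behind mRMR can be sketched as a greedy loop: pick the feature most informative about the class, then repeatedly add the feature whose relevance minus its average redundancy with the already-selected features is largest. The sketch below is a simplified variant, assuming mutual information is estimated after equal-frequency discretization into 3 bins and using the difference form of the criterion; it is not the exact configuration of [5], and the random forest stage is not shown.

```python
import numpy as np


def discretize(col, bins=3):
    """Equal-frequency binning of one continuous feature into integer codes 0..bins-1."""
    edges = np.quantile(col, np.linspace(0, 1, bins + 1)[1:-1])
    return np.digitize(col, edges)


def mutual_info(x, y):
    """Mutual information (in nats) between two non-negative integer-coded arrays."""
    joint = np.zeros((x.max() + 1, y.max() + 1))
    np.add.at(joint, (x, y), 1)
    joint /= joint.sum()
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (px * py)[nz])))


def mrmr(X, y, k, bins=3):
    """Greedy mRMR selection (difference form): relevance minus mean redundancy."""
    Xd = np.column_stack([discretize(X[:, f], bins) for f in range(X.shape[1])])
    relevance = np.array([mutual_info(Xd[:, f], y) for f in range(X.shape[1])])
    selected = [int(np.argmax(relevance))]  # start with the most relevant feature
    while len(selected) < k:
        best, best_score = None, -np.inf
        for f in range(X.shape[1]):
            if f in selected:
                continue
            redundancy = np.mean([mutual_info(Xd[:, f], Xd[:, s]) for s in selected])
            score = relevance[f] - redundancy
            if score > best_score:
                best, best_score = f, score
        selected.append(best)
    return selected
```

Here `y` is assumed to hold integer class codes (for example 0, 1, 2 for the three grades).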

Wan et al. [69] extract multi-level features capturing pixel-, object-, and semantic-level information from breast tumor histopathological images. The pixel-level features include textural features (Kirsch filters, first-order features, Gabor filters, and Haralick features), histograms of oriented gradients (HoG), and local binary patterns (LBP). The object-level features encapsulate the spatial interdependency of nuclei and are derived from the Voronoi Diagram (VD), Minimum Spanning Tree (MST), and Delaunay Triangulation (DT). Semantic-level features capture the heterogeneity of cancer biology using descriptors derived from Convolutional Neural Networks (CNNs). Dimensionality is reduced using graph embedding, and the resulting features are fed to a cascaded ensemble of SVM-based classifiers.
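
Of the pixel-level descriptors listed above, LBP is the simplest to illustrate. The sketch below computes the basic 8-neighbour LBP code for every interior pixel and returns a normalized 256-bin histogram; the rotation-invariant and "uniform" LBP variants that are common in practice are not shown, and whether [69] used this exact form is not stated here.

```python
import numpy as np


def lbp_histogram(img):
    """Basic 8-neighbour local binary pattern (LBP) histogram: 256 bins, sums to 1."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Neighbour offsets in clockwise order starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dr, dc) in enumerate(offsets):
        neigh = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        # Set this bit wherever the neighbour is at least as bright as the centre pixel.
        codes |= (neigh >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```

In a multi-level scheme, a histogram like this would simply be concatenated with the other pixel-, object-, and semantic-level vectors before dimensionality reduction.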

Image Classification

Extracting features from the tumor tissue is a prerequisite for the classification or grading of cancer. The classifiers use attributes that represent the nuclear structure and the spatial interdependencies of nuclei. Classifiers usually work in two phases: a learning phase and a testing phase. In the learning phase, features extracted from annotated digital slides are used to train the classifier; the trained classifier is then tested on unseen data. For nuclear atypia scoring, mainly machine learning algorithms are used to differentiate between the grades of breast tumors, including the k-Nearest Neighbor (kNN) algorithm, decision trees, Support Vector Machines (SVM), Bayes classifiers, Gaussian Mixture Models, random forests, and other supervised learning techniques. The classifiers used for grading histopathological breast images are summarized in Table 2. Multi-classifier systems, or learning ensembles, aggregate the predictions of several similar classifiers to improve classification accuracy. For example, [69] uses a cascaded ensemble of three stages of SVM classifiers to classify histopathological images into three breast cancer grades. A summary of the handcrafted feature-based algorithms used in the literature for breast cancer grading is given in Table 3.
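
The two phases can be made concrete with the simplest classifier in the list, kNN, whose learning phase amounts to storing the annotated feature vectors. The sketch below is a minimal NumPy version, assuming Euclidean distance on already extracted feature vectors and integer grade labels; the function name is illustrative.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """Label each test sample by majority vote among its k nearest training samples."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    # Pairwise squared Euclidean distances, shape (n_test, n_train).
    d2 = ((X_test[:, None, :] - X_train[None, :, :]) ** 2).sum(axis=-1)
    nearest = np.argsort(d2, axis=1)[:, :k]
    preds = []
    for idx in nearest:
        labels, counts = np.unique(y_train[idx], return_counts=True)
        preds.append(labels[np.argmax(counts)])  # ties go to the smallest label
    return np.array(preds)
```

With one-dimensional toy features clustered around 0, 5, and 10 and labeled as grades 1, 2, and 3, test points near each cluster receive the corresponding grade.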

Table 2.

Summary of machine learning algorithms for breast cancer grading

Table 3.

Summary of handcrafted feature-based algorithms used for breast cancer grading

| Paper reference | Pre-processing | Segmentation | Feature extraction | Feature selection | Classification |
|---|---|---|---|---|---|
| Weyn et al. [72] | — | Background correction and basic thresholding | Wavelet-texture features | — | k-nearest neighbor |
| Petushi et al. [53] | — | Adaptive thresholding followed by morphological operations | Morphological and textural features | — | Decision tree |
| Petushi et al. [54] | — | — | Micro-texture parameters | — | Supervised learning |
| Cosatto et al. [10] | Adaptive approach for color variation removal | Hough transform and active contour | Shape, texture, and fitness of the outline | — | SVM |
| Doyle et al. [14] | — | — | Texture- and graph-based features | Spectral clustering | SVM |
| Naik et al. [49] | — | A composition of low-level, high-level, and domain-specific information | Morphological and graph-based features | PCA | SVM |
| Dalle et al. [11] | — | A multi-resolution approach based on Gaussian color models | Morphological features for the 3 attributes of cancer grading | — | Scoring and grading |
| Dalle et al. [12] | — | Intensity thresholding and line fitting | Size, shape, and textural features | — | Gaussian Mixture Model |
| Huang et al. [32] | — | Iterative snake algorithm | Size, roundness, and textural features | — | Bayesian classifier |
| Veta et al. [67] | Spectral unmixing and morphological operations | Marker-controlled watershed segmentation | Mean and standard deviation of nuclear area | — | Cox's proportional hazards model |
| Basavanhally et al. [5] | Stain-specific RGB absorption and morphological operations | Geodesic active contour | Graph-based and textural features | Minimum redundancy maximum relevance (mRMR) | Random forest |
| Ojansivu et al. [52] | — | — | Local Phase Quantization (LPQ) and Local Binary Patterns (LBP) | — | SVM |
| Lu et al. [42] | Stain normalization and color deconvolution | Mean-shift algorithm and morphological operations | Morphological and statistical texture features | — | SVM |
| Khan et al. [40] | — | — | Geodesic mean of region covariance descriptors | — | k-nearest neighbor |
| Maqlin et al. [46] | — | k-means clustering and convex grouping technique | Mean and standard deviation of morphological and textural features | — | Artificial Neural Network |
| Moncayo et al. [47] | Color deconvolution by a linear generative model | Maximally Stable Extremal Regions (MSER) | Multiscale texture descriptor used as a Bag of Features (BoF) | — | SVM |
| Faridi et al. [18] | Unmixing of color channels and anisotropic diffusion filtering | DoG filtering and Distance Regularized Level Set Evolution (DRLSE) algorithm | Morphological features and unfolded nucleoli count | — | SVM |
| Wan et al. [69] | Nonlinear mapping-based stain normalization | Hybrid active contour | Textural, graph-based, and CNN-derived features | Graph embedding | SVM-based cascaded ensemble of classifiers |
| Salahuddin et al. [60] | Pattern-based Hyper Conceptual Sampling | — | Hyper context feature extraction | — | SVM, Naive Bayes, Feed Forward Net (FFN), Cascade Forward Net (CFN), and Pattern Net (PN) |
| Gandomkar et al. [22] | Stain normalization and color deconvolution | Morphological operations followed by thresholding | Textural features such as first-order statistics, Haralick, LBP, gray-level run-length matrix, maximum response filter, and Gabor-based features | — | Multiple regression trees |

Automated breast cancer grading or prognosis systems based on handcrafted features rely on disease-related features extracted from histopathological images. This may require accurate detection and segmentation of nuclear structures, which is itself a challenging task because of the complexity and high density of histologic data. Consequently, there is great demand for intelligent computational systems for nuclear pleomorphism analysis. Recently developed deep learning (DL) techniques can learn discriminative representations directly from the data, thereby avoiding the need for handcrafted features. Deep learning models such as Convolutional Neural Networks (CNNs) have proven successful in various tasks, including object recognition, signal processing, speech recognition, and natural language processing.

Learned Feature-Based Algorithms

Several works [3, 4, 29, 50, 55] have applied Convolutional Neural Networks to nuclear atypia scoring and were found to perform better than systems that use handcrafted feature descriptors. Han et al. [29] propose an exhaustive recognition technique with a class structure-based deep convolutional neural network (CSDCNN) for multi-class classification of histopathological breast cancer images. The CSDCNN learns discriminative and semantic hierarchical features, and the feature similarities of different classes are specified by feature-space distance constraints integrated into the network model. Rakhlin et al. [55] apply a gradient boosting algorithm on top of CNNs pretrained on ImageNet to classify H&E-stained breast cancer images. Bardou et al. [4] extract handcrafted features (bag of words and locality-constrained linear coding) and also classify the cancer subtypes using a CNN with a fully connected classifier layer, while [50] uses a single-layer CNN for the binary classification of microscopic breast cancer images. Araujo et al. [3] used a CNN architecture designed to extract information at different scales, from the nucleus level to the tissue level.

Several works [6, 8, 26, 48, 56, 61, 62, 69, 75, 76] used deep learning-based CNN architectures for the classification of breast histopathological images. Wollmann et al. [75] perform slide-level classification by applying deep neural networks to patches cropped via color thresholding and aggregating the patch-level results to grade the patient. Spanhol et al. [61] modified an existing CNN architecture, AlexNet, for the grading problem in breast cancer. Image patches extracted from the high-resolution BreaKHis dataset are used to train the deep networks, and the patch-level results are combined for the final classification. The patches are extracted using two strategies: a sliding window with 50% overlap, and random selection of non-overlapping patches. Different networks are trained with different numbers of patches of various sizes, and the results are improved by combining these classifiers. The final layer of AlexNet is a fully connected layer with softmax activation, whose outputs estimate the posterior class probabilities. The posterior probability estimates from the different classifiers are combined using the Sum, Product, and Max fusion rules, and fusion with the Max rule was found to outperform the Sum and Product rules.
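
Both the 50%-overlap patch extraction and the three fusion rules are easy to state in code. The sketch below is a minimal illustration, assuming square patches whose stride is half the patch size and a matrix of softmax outputs with one row per classifier; the function names are hypothetical and the CNN training itself is not shown.

```python
import numpy as np


def sliding_patches(img, size):
    """Square patches taken with a sliding window that overlaps by 50% (stride = size // 2)."""
    stride = size // 2
    h, w = img.shape[:2]
    return [img[r:r + size, c:c + size]
            for r in range(0, h - size + 1, stride)
            for c in range(0, w - size + 1, stride)]


def fuse(posteriors, rule="max"):
    """Combine softmax outputs of several classifiers (rows) and return the winning class index."""
    P = np.asarray(posteriors, dtype=float)
    if rule == "sum":
        scores = P.sum(axis=0)
    elif rule == "product":
        scores = P.prod(axis=0)
    elif rule == "max":
        scores = P.max(axis=0)
    else:
        raise ValueError(f"unknown fusion rule: {rule}")
    return int(np.argmax(scores))
```

The rules can disagree: for the posteriors `[[0.7, 0.3], [0.35, 0.65], [0.35, 0.65]]`, the Sum and Product rules pick class 1 (1.6 vs. 1.4, and 0.127 vs. 0.086), whereas the Max rule picks class 0 because one classifier is highly confident (0.7 vs. 0.65).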

Wan et al. [69] used CNN-derived semantic-level descriptors together with pixel-level and object-level features for atypia scoring. They proposed a 3-layer CNN model comprising two successive convolutional and max-pooling layers followed by a fully connected classification layer. Both convolutional layers use the same fixed 9×9 kernel, and both max-pooling layers use a 2×2 kernel. The last hidden layer has 38 neurons, which are connected to three output neurons corresponding to the three classes: low-grade, intermediate-grade, and high-grade cancer. The semantic-level features are generated by this CNN, trained on labeled and segmented nuclei of the various grades.
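
How the 9×9 convolutions and 2×2 pooling shrink the input can be checked with simple arithmetic. The sketch below assumes "valid" convolutions with stride 1 and non-overlapping pooling; the input patch size is not given in the text, so the 64×64 example is hypothetical.

```python
def feature_map_size(n, conv_k=9, pool_k=2, n_blocks=2):
    """Side length after n_blocks of (valid conv_k x conv_k convolution, stride 1)
    followed by (pool_k x pool_k max pooling, stride pool_k)."""
    for _ in range(n_blocks):
        n = n - conv_k + 1   # a 'valid' convolution trims conv_k - 1 pixels
        n = n // pool_k      # non-overlapping pooling divides by pool_k (rounding down)
        if n < 1:
            raise ValueError("input patch too small for this architecture")
    return n
```

Under these assumptions a 64×64 patch shrinks to 56, 28, 20, and finally 10 pixels per side, and 28×28 is the smallest input that still leaves a 1×1 map for the fully connected layer.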

Rezaeilouyeh et al. [56] used the magnitude and phase of shearlet coefficients as supplementary inputs to CNNs, alongside the original image, for cancer classification. The shearlet transform is a multiscale directional representation with affine properties that can extract anisotropic features at various scales and orientations. Each shearlet coefficient has a magnitude and a phase part. The phase carries most of the information and is invariant to noise and to variations in image contrast, while the magnitude mainly represents singularities, or edges, in the image. Because breast histopathological images are stained with H&E, color also carries information, so the RGB data together with the magnitude and phase of the shearlet coefficients are used to train the CNNs. Since they have different properties, the magnitude and phase from different decomposition levels and the RGB images are fed to separate CNNs. Each CNN consists of three convolution and pooling layers; the convolutional layers use 64 filters of size 5×5, initialized from a Gaussian distribution with a standard deviation of 0.0001 and zero bias, and max pooling is applied over 3×3 regions with a stride of 2 pixels. The Rectified Linear Unit (ReLU) is used as the activation function, and a fully connected layer combines the outputs of the CNNs fed with the different inputs.
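
Three of the ingredients above can be sketched directly in NumPy: splitting complex coefficients into magnitude and phase channels, overlapping 3×3 max pooling with stride 2, and zero-mean Gaussian weight initialization with standard deviation 0.0001. The shearlet transform itself requires a dedicated library and is not implemented here; the helper names, the absence of padding in the pooling, and the 3 input channels (RGB) are assumptions for illustration.

```python
import numpy as np


def split_complex(coeffs):
    """Magnitude and phase channels of complex-valued transform coefficients."""
    coeffs = np.asarray(coeffs)
    return np.abs(coeffs), np.angle(coeffs)


def max_pool(x, k=3, stride=2):
    """Overlapping max pooling of a 2-D map: k x k windows moved by `stride` (no padding)."""
    h, w = x.shape
    out_h, out_w = (h - k) // stride + 1, (w - k) // stride + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = x[i * stride:i * stride + k, j * stride:j * stride + k].max()
    return out


def init_conv_layer(n_filters=64, in_channels=3, k=5, std=1e-4, seed=0):
    """Zero-mean Gaussian weights (default std 1e-4) and zero biases for one convolutional layer."""
    rng = np.random.default_rng(seed)
    return rng.normal(0.0, std, size=(n_filters, in_channels, k, k)), np.zeros(n_filters)
```

Because the 3×3 windows move by only 2 pixels, neighbouring windows share a row or column, so each output summarizes a slightly overlapping region.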

Xu et al. [76] proposed a Multi-Resolution Convolutional Network (MR-CN) with Plurality Voting (MR-CN-PV) model for automated nuclear atypia scoring. The model combines three AlexNet-based Single-Resolution Convolutional Networks with Majority Voting (SR-CN-MV), each of which independently scores images at one of three resolutions using majority voting. The scores from these three SR-CN-MV networks are then integrated with a plurality voting strategy to produce the final atypia score.
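
The two voting stages can be sketched with the standard library. This is a minimal illustration, assuming each single-resolution network outputs one atypia score per patch; "majority" is simplified to "most frequent", and the tie-break when all three networks disagree (deferring to a designated network) is a hypothetical rule, since the text does not specify one.

```python
from collections import Counter


def majority_vote(patch_scores):
    """Most frequent atypia score among one network's patch-level predictions.

    Ties are broken in favour of the score seen first (Counter ordering)."""
    return Counter(patch_scores).most_common(1)[0][0]


def plurality_vote(network_scores, fallback=0):
    """Score with the most votes across networks; if the top count is tied,
    defer to the network at index `fallback` (a hypothetical tie-break rule)."""
    ranked = Counter(network_scores).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return network_scores[fallback]
    return ranked[0][0]


def mr_cn_pv(patch_scores_per_resolution):
    """One majority-voted score per resolution, then plurality voting across resolutions."""
    return plurality_vote([majority_vote(p) for p in patch_scores_per_resolution])
```

For example, patch scores `[1, 2, 2, 3]`, `[2, 2, 1]`, and `[3, 3, 1]` at the three resolutions give network scores 2, 2, and 3, so the final atypia score is 2.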

Bayramoglu et al. [6] proposed a multi-task CNN architecture that simultaneously predicts the magnification level of an image and its malignancy. This allows the model to combine image data from many more resolution levels than the four discrete magnification levels. The CNN consists of three convolutional layers, each followed by a ReLU operation and a max-pooling layer, after which two fully connected layers are added. For multi-tasking, the network is modified by splitting the last fully connected layer into two branches. The first branch, which classifies tissue by malignancy, feeds a 2-way softmax layer, while the second branch, which learns the magnification factor, feeds a 4-way softmax layer; the softmax losses of both branches are minimized during training.
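
The two-branch objective can be written out explicitly. The sketch below assumes a shared feature vector, two hypothetical linear heads (`W_mal` with 2 rows, `W_mag` with 4 rows), and an equally weighted sum of the two softmax cross-entropy losses; the weighting and the mapping of magnification labels to the BreaKHis levels 40×, 100×, 200×, and 400× are assumptions, not details confirmed by the text.

```python
import numpy as np

# Assumed magnification levels; mag_label is an index into this tuple.
MAGNIFICATIONS = (40, 100, 200, 400)


def softmax_cross_entropy(logits, label):
    """Negative log-probability of `label` under softmax(logits)."""
    z = np.asarray(logits, dtype=float)
    z = z - z.max()                      # shift for numerical stability
    log_probs = z - np.log(np.exp(z).sum())
    return float(-log_probs[label])


def two_branch_head(features, W_mal, W_mag):
    """Shared feature vector -> 2 malignancy logits and 4 magnification logits."""
    return W_mal @ features, W_mag @ features


def multitask_loss(features, W_mal, W_mag, mal_label, mag_label, mag_weight=1.0):
    """Sum of the 2-way malignancy loss and the (weighted) 4-way magnification loss."""
    mal_logits, mag_logits = two_branch_head(features, W_mal, W_mag)
    return (softmax_cross_entropy(mal_logits, mal_label)
            + mag_weight * softmax_cross_entropy(mag_logits, mag_label))
```

With all-zero head weights both branches output uniform distributions, so the loss is log 2 + log 4 = log 8 regardless of the labels, a convenient sanity check for an untrained network.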

Nahid et al. [48] classify breast cancer images using three deep neural network models: a CNN, a Long Short-Term Memory (LSTM) network, and a combined CNN+LSTM model, guided by unsupervised clustering that extracts structural and statistical information from the histopathological images. The extracted global and local features are then classified by Softmax and SVM layers. Bejnordi et al. [8] use a stack of context-aware CNNs to extract information about the interdependence of cellular structures in whole-slide images (WSIs) of benign tissue, DCIS, and IDC. Guo et al. [26] propose a hybrid CNN architecture with two modules, a patch-level voting module and a merging module, and use a hierarchical voting strategy and bagging to reduce generalization error. Data augmentation and transfer learning are used to compensate for the limited amount of training data.
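
Two generic building blocks mentioned above, data augmentation and bagging, can be sketched briefly. The augmentation below produces the 8 rotations and reflections of a patch, a common choice for histology because tissue has no canonical orientation, and the bagging helper draws bootstrap index sets. Neither is claimed to be the exact scheme of [26], and the hierarchical voting step is not shown.

```python
import numpy as np


def dihedral_augment(patch):
    """Return the 8 rotations/reflections of a square patch (H x W or H x W x C)."""
    out = []
    for k in range(4):
        r = np.rot90(patch, k, axes=(0, 1))  # rotate by k * 90 degrees
        out.extend([r, np.flip(r, axis=1)])  # keep it and its mirror image
    return out


def bootstrap_indices(n_samples, n_bags, seed=0):
    """Index sets for bagging: each bag samples n_samples indices with replacement."""
    rng = np.random.default_rng(seed)
    return [rng.integers(0, n_samples, size=n_samples) for _ in range(n_bags)]
```

Each bag would train one model on its resampled (and augmented) patches, and the models' predictions would then be combined by voting.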