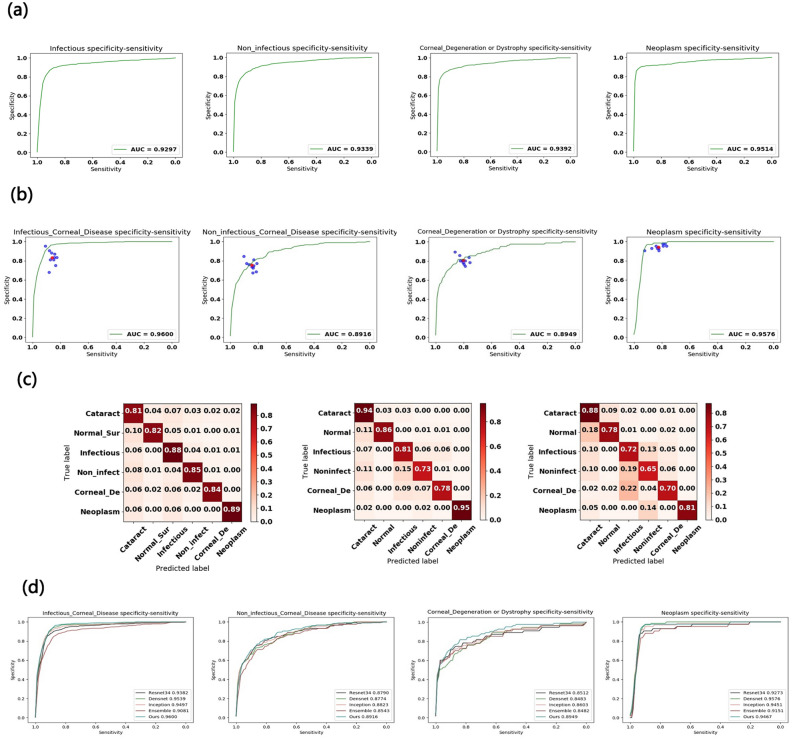

Figure 5.

Corneal disease identification performance of the proposed deep learning algorithm and of ophthalmologists. (a) The algorithm achieves acceptable AUC values in identifying corneal diseases on the testing dataset of 772 images. (b) The algorithm was tested against 10 ophthalmologists on the 510-subject dataset. Using ocular surface photographs, it outperforms the average of the 10 ophthalmologists on corneal inflammatory diseases (infectious and non-infectious keratitis) and performs on par with them on corneal dystrophy, corneal degeneration, and neoplasm. (c) Confusion matrices of the algorithm and of two ophthalmologists with different levels of clinical experience for the diagnosis of normal eyes, cataract, and four common corneal diseases. They reveal similar misclassification patterns in the human experts and the algorithm. The spread across column 1 (cataract) is pronounced in all plots, showing that many lesions are easily confused with cataract. Both the algorithm and the ophthalmologists noticeably confuse infectious and non-infectious keratitis (diseases 2 and 3) with each other, with the ophthalmologists erring toward predicting infectious keratitis. The spread across row 5 in all plots shows that corneal dystrophy or degeneration is difficult to classify and tends to be diagnosed as infectious keratitis. (d) Performance comparison with four existing methods: Resnet34, Densenet, Inception-v3, and Ensemble. Our algorithm achieves a higher AUC than Resnet34, Densenet, Inception-v3, and Ensemble for most corneal diseases; only Densenet has a higher AUC than ours in diagnosing corneal and limbal neoplasm.
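Reading panel (c) relies on the usual confusion-matrix convention: each row is a true diagnosis and each column a predicted one, so a spread along a row means that disease is misdiagnosed, and a spread down a column means many diseases get predicted as that class. The per-disease AUCs in panels (a), (b) and (d) are typically computed one class against the rest. The sketch below illustrates both ideas; it is not the authors' evaluation code. The class indices, toy labels and scores are hypothetical, and the pairwise (Mann-Whitney) formulation of AUC is an assumption about how the per-disease values were obtained.

```python
from typing import Sequence


def confusion_matrix(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> list[list[int]]:
    """Count predictions: rows index the true class, columns the predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def one_vs_rest_auc(scores: Sequence[float], y_true: Sequence[int], positive: int) -> float:
    """AUC of one class against all others.

    Computed as the probability that a randomly chosen positive example
    scores higher than a randomly chosen negative one (ties count 0.5),
    i.e. the normalised Mann-Whitney U statistic.
    """
    pos = [s for s, t in zip(scores, y_true) if t == positive]
    neg = [s for s, t in zip(scores, y_true) if t != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data with 4 classes (indices are illustrative only).
y_true = [0, 1, 2, 2, 3, 3]
y_pred = [0, 1, 2, 3, 2, 2]
cm = confusion_matrix(y_true, y_pred, n_classes=4)
for row in cm:
    print(row)  # row 3 spills into column 2: class 3 is often predicted as class 2

# Hypothetical model scores for class 2 on the same six examples.
scores_class2 = [0.1, 0.2, 0.9, 0.4, 0.6, 0.3]
auc = one_vs_rest_auc(scores_class2, y_true, positive=2)
print(auc)  # 0.875
```

In this toy run, row 3 of the matrix is `[0, 0, 2, 0]`, the same kind of off-diagonal spread the caption describes for keratitis subtypes and for corneal dystrophy or degeneration. The quadratic pairwise loop is chosen for clarity; a rank-based computation gives the same AUC more efficiently on large test sets.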