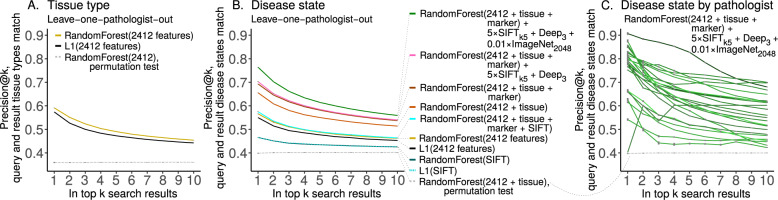

Fig. 10. Case similarity search performance.

We report search performance as precision@k under leave-one-pathologist-out cross-validation for (A) tissue and (B) disease state. Search based on SIFT features performs better than chance but worse than every alternative we tried. Marker mention information improves search slightly; we suspect that cases mentioning markers may be more relevant search results when the query case also mentions markers. SIFTk5 and histopathology-trained Deep3 features improve performance even less, and only natural-image-trained ImageNet2048 deep features increase performance substantially (Table S1). (C) We show per-pathologist variability in search performance, with outliers for both strong and weak performance. Random chance is shown as a dashed gray line. In our testing, performance for every pathologist is above chance, which may suggest that performance will also be above chance for patient cases from other pathologists. We suspect that variability in staining protocol, in photography, and in the diagnostic difficulty of the cases each pathologist shares may underlie this variability in search performance. The pathologist with the lowest precision@k=1 shared five images in total for the disease prediction task, and these images are of a rare tissue type. Table S2 shows per-pathologist performance statistics.